RabbitMQ Client Use Cases

Last updated: 2026-01-05 11:00:21

Connections and Channels

Avoiding Creating a Connection/Channel for Every Message Sent

In RabbitMQ, creating a connection is a "costly" operation. Each connection consumes at least 100 KB of memory, so a large number of connections increases memory pressure on the broker. Establishing a connection also requires a complex handshake involving at least 7 TCP packets. Channels, by contrast, are a much lighter way to communicate. Reuse connections as much as possible and multiplex work over channels: create a connection when the program starts and reuse this long-lived connection for every message sent, which improves sending performance and reduces server memory usage. Ideally, each process uses one connection, and each thread uses one channel.
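A minimal sketch of the reuse pattern. To keep it runnable without a broker, `FakeConnection` and `FakeChannel` are hypothetical stand-ins for a real client connection and channel (such as pika's `BlockingConnection` and `BlockingChannel`), and `Publisher` and the `orders` queue name are illustrative. The connection is opened once and reused for every message instead of being opened per send.

```python
import itertools


class FakeChannel:
    """Stand-in for a client channel; records publishes instead of sending them."""

    def __init__(self, channel_id):
        self.channel_id = channel_id
        self.sent = []

    def basic_publish(self, exchange, routing_key, body):
        # Same keyword names as pika's BlockingChannel.basic_publish.
        self.sent.append((exchange, routing_key, body))


class FakeConnection:
    """Hypothetical stand-in for a real connection such as pika.BlockingConnection."""

    opened = 0  # number of connections opened by this process

    def __init__(self):
        FakeConnection.opened += 1
        self._ids = itertools.count(1)

    def channel(self):
        # A real client opens a lightweight AMQP channel on the existing TCP connection.
        return FakeChannel(next(self._ids))


class Publisher:
    """Opens one connection and one channel at startup, then reuses them for every send."""

    def __init__(self, connection_factory=FakeConnection):
        self.connection = connection_factory()
        self.channel = self.connection.channel()

    def send(self, body, queue="orders"):
        # Default exchange: the routing key is the target queue name.
        self.channel.basic_publish(exchange="", routing_key=queue, body=body)


publisher = Publisher()  # created once, e.g. when the application starts
for i in range(1000):
    publisher.send(f"message-{i}".encode())

print(FakeConnection.opened)  # 1
```

Sending 1,000 messages opens a single connection; the anti-pattern would open 1,000.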

Avoiding Sharing a Channel Among Threads

Ensure that channels are not shared among threads. Most RabbitMQ client channel implementations are not thread-safe; sharing them may cause message processing errors, degrade performance, or result in unexpected connection issues.
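One way to give each thread its own channel is `threading.local`, sketched below. This pattern suits clients whose connection object may be shared across threads while channels may not, as with the Java client. pika's `BlockingConnection` is itself not thread-safe, so with pika each thread would typically open its own connection instead. `open_channel` is a hypothetical factory standing in for the client's channel-opening call.

```python
import itertools
import threading

_next_id = itertools.count(1)
_id_lock = threading.Lock()
_local = threading.local()  # each thread sees its own attributes on this object


def open_channel():
    """Hypothetical factory; a real client would open a channel on the shared connection here."""
    with _id_lock:
        return {"channel_id": next(_next_id)}


def get_channel():
    """Return the calling thread's channel, creating it the first time the thread asks."""
    channel = getattr(_local, "channel", None)
    if channel is None:
        channel = open_channel()
        _local.channel = channel
    return channel


results = {}
results_lock = threading.Lock()


def worker(name):
    first = get_channel()
    second = get_channel()  # the same thread always gets its own channel back
    with results_lock:
        results[name] = (first["channel_id"], first is second)


threads = [threading.Thread(target=worker, args=(f"worker-{i}",)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(results)
```

Each of the four workers ends up with a distinct channel, and repeated calls within a thread return the same one.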

Using Independent Connections for Producers and Consumers

Because of RabbitMQ's flow control mechanism, if producers and consumers share the same connection and heavy traffic triggers flow control on that connection, everything on it is throttled, so the producer may send slowly or time out. Therefore, initialize producers and consumers with separate physical connections so that they do not interfere with each other.
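A sketch of keeping the two roles apart: build separate connection settings for the producer and the consumer so that each role opens its own TCP connection. The keys mirror keyword arguments of pika's `ConnectionParameters` (`host`, `port`, `heartbeat`, `client_properties`), and the `connection_name` client property makes each connection identifiable in the management UI. The host name and the `orders-` connection names are placeholders.

```python
def connection_settings(role, host="rabbitmq.example.internal", port=5672):
    """Keyword arguments for one role; with pika, pass them as pika.ConnectionParameters(**settings)."""
    return {
        "host": host,  # placeholder host name
        "port": port,
        "heartbeat": 60,
        # Shown as the connection name in the management UI, so the two roles are easy to tell apart.
        "client_properties": {"connection_name": f"orders-{role}"},
    }


producer_settings = connection_settings("producer")
consumer_settings = connection_settings("consumer")

# With pika (not executed here), each role then opens its own connection:
#   producer_conn = pika.BlockingConnection(pika.ConnectionParameters(**producer_settings))
#   consumer_conn = pika.BlockingConnection(pika.ConnectionParameters(**consumer_settings))
print(producer_settings["client_properties"]["connection_name"])  # orders-producer
```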

Large Number of Connections and Channels Affecting RabbitMQ Management API Performance

The management interface needs to collect operational metrics for every connection and channel in real time, perform data analysis, and provide a visualized display. These operations consume significant system resources. As the number of connections and channels increases, the response speed of the management interface may decrease, impacting monitoring and management efficiency.

Production and Consumption

Avoiding Automatic Message Acknowledgment for Consumers

The RabbitMQ server provides at-least-once consumption semantics to ensure that messages are reliably delivered to downstream business systems. If automatic acknowledgment is enabled on the consumer, the server treats each message as acknowledged and deletes it as soon as it is pushed to the consumer, even if the consumer then fails while processing it. As a result, the business may never process that message.
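A minimal sketch of manual acknowledgment: the message is acknowledged only after processing succeeds and negatively acknowledged otherwise. The callback follows the `on_message_callback(channel, method, properties, body)` signature used by pika's `basic_consume(..., auto_ack=False)`. `process` and `RecordingChannel` are hypothetical; the latter only stands in for a real channel so the snippet runs without a broker. Requeueing is one possible failure policy; a message that always fails would loop, so routing it to a dead-letter queue with `requeue=False` is a common alternative.

```python
from types import SimpleNamespace


def process(body):
    """Hypothetical business logic; raises on bad input."""
    if body == b"bad":
        raise ValueError("cannot process message")


def on_message(channel, method, properties, body):
    try:
        process(body)
    except Exception:
        # Processing failed: hand the message back to the broker instead of losing it.
        channel.basic_nack(delivery_tag=method.delivery_tag, requeue=True)
    else:
        # Acknowledge only after processing has succeeded.
        channel.basic_ack(delivery_tag=method.delivery_tag)


class RecordingChannel:
    """Stand-in for a pika channel that records acks and nacks."""

    def __init__(self):
        self.acked, self.nacked = [], []

    def basic_ack(self, delivery_tag):
        self.acked.append(delivery_tag)

    def basic_nack(self, delivery_tag, requeue):
        self.nacked.append((delivery_tag, requeue))


ch = RecordingChannel()
on_message(ch, SimpleNamespace(delivery_tag=1), None, b"ok")
on_message(ch, SimpleNamespace(delivery_tag=2), None, b"bad")
print(ch.acked, ch.nacked)  # [1] [(2, True)]
```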

Idempotent Message Processing for Consumers

The RabbitMQ server provides at-least-once consumption semantics, which means messages may be delivered more than once in extreme scenarios. Therefore, critical business logic should process messages idempotently, so that receiving the same message repeatedly has no adverse impact on the business.

Idempotency can be achieved by including a unique business identifier in each message. During consumption, the consumer checks this identifier and the processing status of the corresponding message, and handles duplicates according to business requirements (for example, by skipping them), so that repeated deliveries do not affect the business.
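A minimal sketch of deduplication by business identifier. It assumes each message body is JSON with a hypothetical `order_id` field. The in-memory set stands in for durable storage: in production the check would typically live in a database (for example, a unique constraint) or a shared cache, so that it survives restarts and is shared by all consumer instances, and recording the ID and applying the effect would happen in one transaction.

```python
import json

processed_ids = set()  # stand-in for durable storage such as a table with a unique key
applied = []           # side effects actually performed


def handle(body):
    """Process a message at most once per business ID; return True if it was applied."""
    message = json.loads(body)
    order_id = message["order_id"]  # hypothetical unique business identifier
    if order_id in processed_ids:
        return False  # duplicate delivery: skip without repeating the side effect
    applied.append(order_id)     # the business operation
    processed_ids.add(order_id)  # record only after the operation succeeds
    return True


first = handle(b'{"order_id": "A-1001", "amount": 5}')
again = handle(b'{"order_id": "A-1001", "amount": 5}')  # redelivered copy
print(first, again, applied)  # True False ['A-1001']
```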

Using Consume to Consume Messages

Get (basic.get) is a pull-mode consumption method: the client must send a request to the broker for every message it consumes. Consume (basic.consume), in contrast, registers a subscription, after which the server pushes messages to the consumer as they become available, several at a time up to the prefetch limit. Using Get is not recommended because it is inefficient and resource-intensive. Continuous empty pulls (the consumer keeps polling a queue that has no messages) can drive the server's CPU load abnormally high.
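A sketch of subscribing with Consume rather than polling with Get, using pika's `BlockingChannel` method names (`basic_qos`, `basic_consume`, `start_consuming`, `basic_get`). `start_push_consumer` is a hypothetical helper that takes an already-open channel, so it can be exercised here with `StubChannel`, a stand-in that replays a few deliveries. The queue name and prefetch value are placeholders.

```python
from types import SimpleNamespace


def start_push_consumer(channel, queue, handler, prefetch_count=50):
    """Register a push-mode consumer (basic.consume) instead of polling with basic.get."""
    channel.basic_qos(prefetch_count=prefetch_count)  # cap unacknowledged deliveries

    def on_message(ch, method, properties, body):
        handler(body)
        ch.basic_ack(delivery_tag=method.delivery_tag)

    channel.basic_consume(queue=queue, on_message_callback=on_message, auto_ack=False)
    # The broker now pushes messages as they arrive; no empty polling requests are sent.
    channel.start_consuming()

# Anti-pattern for comparison: one basic.get round trip per message, plus empty pulls.
#   while True:
#       method, properties, body = channel.basic_get(queue="orders", auto_ack=False)
#       if method is None:
#           continue  # empty pull: nothing in the queue, but a request was still made


class StubChannel:
    """Stand-in that records calls and delivers queued bodies on start_consuming()."""

    def __init__(self, bodies):
        self.bodies = list(bodies)
        self.calls = []
        self.acked = []

    def basic_qos(self, prefetch_count):
        self.calls.append(("basic_qos", prefetch_count))

    def basic_consume(self, queue, on_message_callback, auto_ack):
        self.calls.append(("basic_consume", queue, auto_ack))
        self.callback = on_message_callback

    def start_consuming(self):
        # A real channel blocks here and dispatches deliveries pushed by the broker.
        for tag, body in enumerate(self.bodies, start=1):
            self.callback(self, SimpleNamespace(delivery_tag=tag), None, body)

    def basic_ack(self, delivery_tag):
        self.acked.append(delivery_tag)


received = []
stub = StubChannel([b"a", b"b", b"c"])
start_push_consumer(stub, "orders", received.append)
print(received, stub.acked)  # [b'a', b'b', b'c'] [1, 2, 3]
```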

Configuring a Reasonable Message Load

Handling message payloads (size and type) is a common challenge for RabbitMQ users. Note that the number of messages processed per second generally affects performance more than the size of individual messages. Although sending overly large messages is not good practice, frequently sending large numbers of small messages can put even more pressure on the system.

A recommended approach is to merge multiple small messages into a larger message for sending, which can be unpacked and processed after being received by the consumer. However, message merging may introduce the following issues:

If processing of one sub-message in a merged message fails, does the whole batch need to be resent?

Batching may increase the processing latency of each message, potentially affecting real-time performance.

Therefore, when deciding whether to batch messages, weigh network bandwidth, system architecture, and business fault-tolerance requirements together.
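A sketch of merging small messages into one envelope and unpacking it on the consumer side. The JSON envelope format and the `pack`, `unpack_and_process`, and `process` names are assumptions for illustration. The returned list shows one way to handle the partial-failure question above: the consumer records which sub-messages failed, so only those need to be resent rather than the whole batch.

```python
import json


def pack(messages):
    """Merge several small JSON-serializable messages into one payload."""
    return json.dumps({"items": messages}).encode()


def unpack_and_process(payload, process):
    """Process each sub-message; return the ones that failed so only they are retried."""
    failed = []
    for item in json.loads(payload)["items"]:
        try:
            process(item)
        except Exception:
            failed.append(item)
    return failed


def process(item):
    # Hypothetical handler that rejects negative quantities.
    if item["qty"] < 0:
        raise ValueError("invalid quantity")


payload = pack([{"sku": "A", "qty": 1}, {"sku": "B", "qty": -1}, {"sku": "C", "qty": 3}])
print(unpack_and_process(payload, process))  # [{'sku': 'B', 'qty': -1}]
```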

Setting a Reasonable Prefetch Count Value

The Prefetch Count value specifies the maximum number of unacknowledged messages that can be delivered to a consumer at a time. A value that is too low may leave consumers frequently waiting for new messages, wasting resources; a value that is too high may overload some consumers while others sit idle, unbalancing the load distribution.

If there are only one or a few consumers and they process messages quickly, increase the Prefetch Count value appropriately, for example, to 250. Prefetching many messages at once keeps the client continuously busy.

If message processing time is relatively stable and network conditions are controllable, the Prefetch Count value can be estimated using the following method: Prefetch Count value ≈ Total round-trip time (RTT)/Average processing time per message.

In scenarios with a large number of consumers and a short processing time for a single message, it is recommended to set a lower Prefetch Count value.

If there are many consumers and/or each message takes a long time to process, set the Prefetch Count value to 1 so that messages are distributed evenly among consumers.
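The RTT guideline can be worked through with illustrative numbers: with a round-trip time of 125 ms and an average processing time of 5 ms per message, a consumer needs about 125 / 5 = 25 messages in flight so that it does not sit idle waiting for the next delivery. `estimate_prefetch` is a hypothetical helper, and its upper cap of 250 is an assumption borrowed from the example value above. The result would be applied with pika's `channel.basic_qos(prefetch_count=...)`, leaving `global_qos` at its default of `False`, since global prefetch is deprecated.

```python
import math


def estimate_prefetch(rtt_ms, processing_ms, cap=250):
    """Prefetch Count ≈ RTT / average processing time per message, rounded up and bounded to [1, cap]."""
    if processing_ms <= 0:
        raise ValueError("processing time must be positive")
    return max(1, min(cap, math.ceil(rtt_ms / processing_ms)))


print(estimate_prefetch(125, 5))    # 25
print(estimate_prefetch(20, 400))   # 1: slow handlers get one message at a time
print(estimate_prefetch(500, 0.5))  # 250: capped so one consumer cannot hoard the queue
```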

Note:

If the client is set to automatic acknowledgment (auto-ack), the prefetch mechanism will not take effect.

A common mistake is to leave prefetch unlimited (a Prefetch Count of 0). A single client may then receive all messages, exhausting its memory and crashing, after which all of its unacknowledged messages are redelivered. This severely impacts system stability.

The global prefetch has been deprecated and should not be used.

Queue

Controlling the Number of Messages in the Queue

An excessive backlog of messages in a queue consumes a large amount of memory. To reduce memory usage, RabbitMQ flushes some messages to disk, which slows down message processing. With a large backlog, paging messages between memory and disk can reduce the processing speed of the overall system.

Additionally, when a RabbitMQ cluster needs to be restarted, a large message backlog may cause two issues:

1. Rebuilding the message index during a restart takes a long time.

2. Synchronizing messages between nodes after a restart requires extra time.

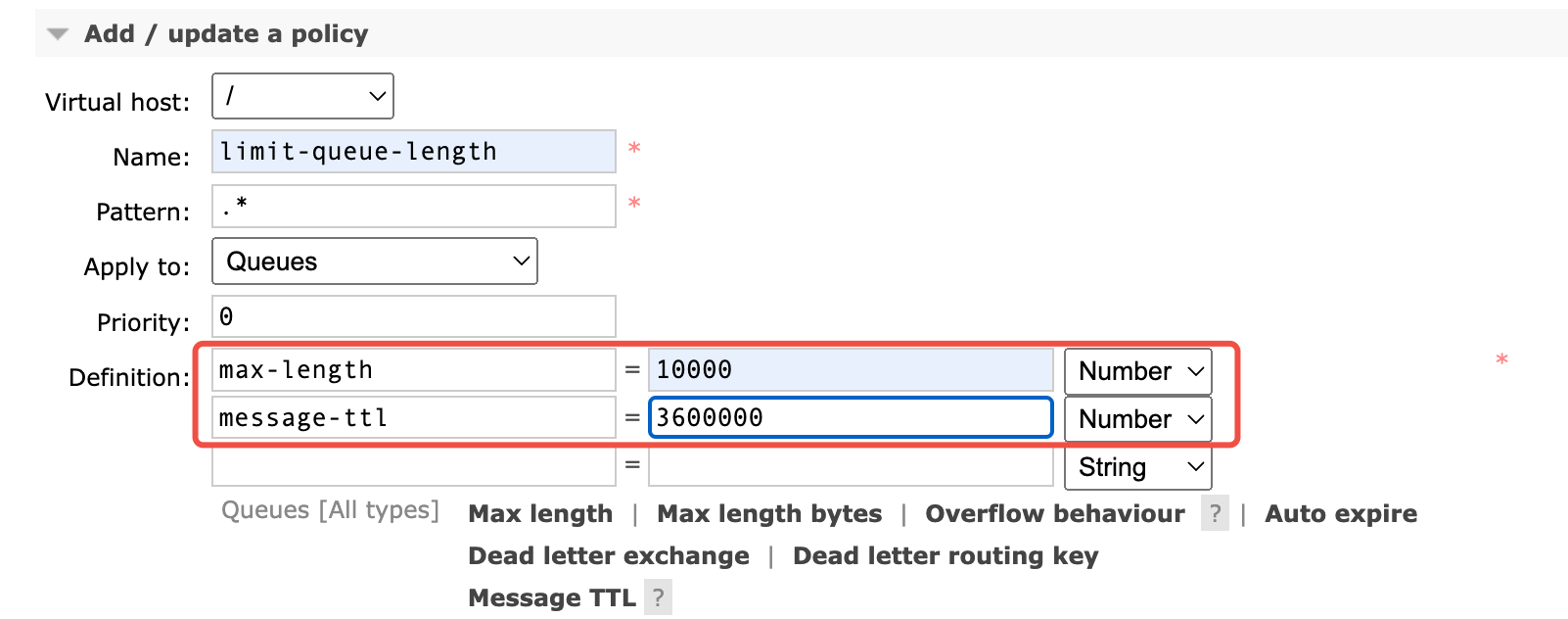

Keep the number of messages in each queue under control, either by increasing consumption capacity or by setting limits such as max-length or a message Time To Live (TTL), to prevent backlogs and maintain optimal system performance.

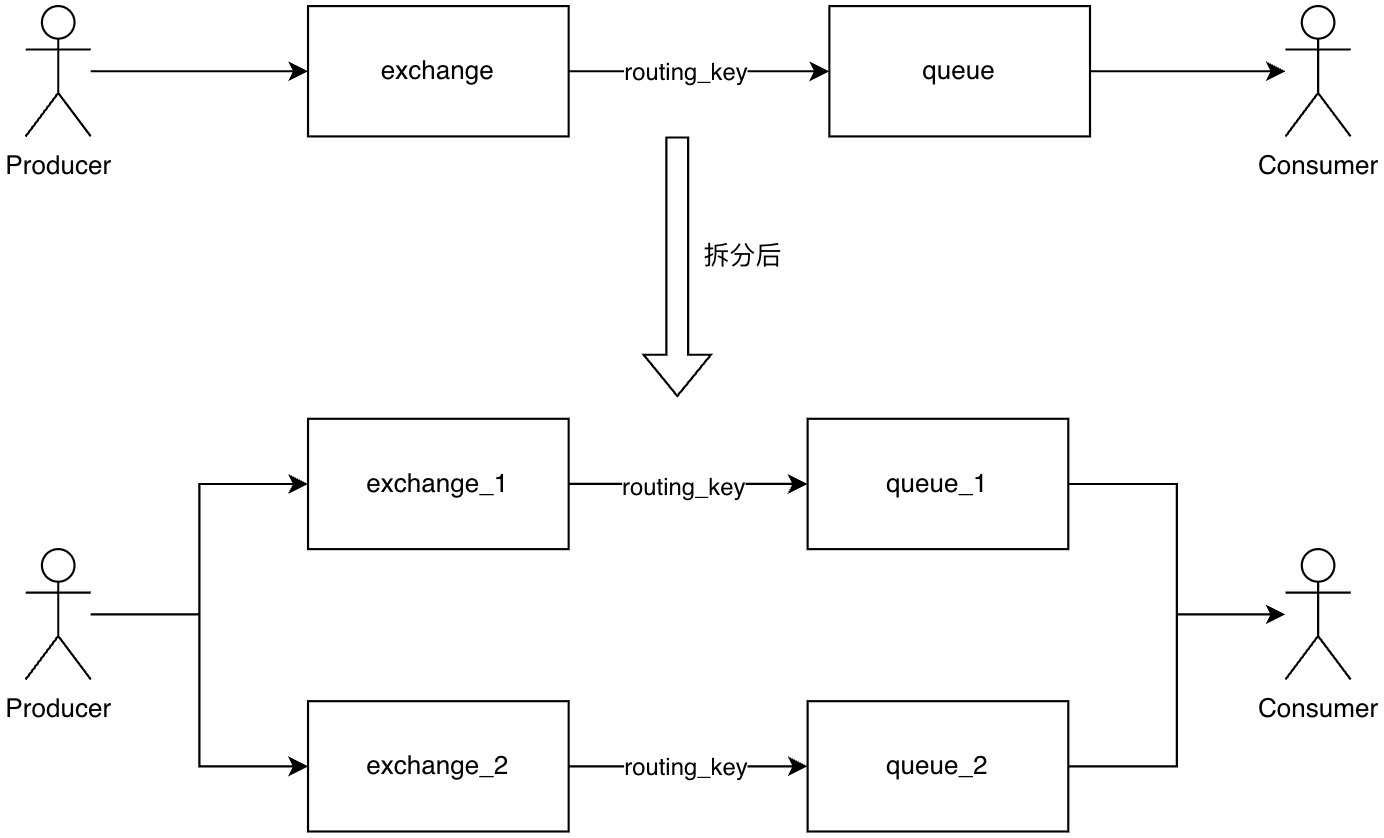
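A sketch of the limits mentioned above, expressed as optional queue arguments passed at declaration time, for example with pika's `channel.queue_declare(queue=..., durable=True, arguments=args)`. `x-max-length`, `x-overflow`, and `x-message-ttl` (in milliseconds) are standard RabbitMQ queue arguments; the helper name, the `orders` queue, and the concrete limits are placeholders to size against your own traffic. The same limits can also be applied through a policy, which can be changed later without redeclaring the queue.

```python
def backlog_limited_queue_args(max_messages, message_ttl_seconds, overflow="reject-publish"):
    """Build optional queue arguments that cap a queue's backlog."""
    if overflow not in ("drop-head", "reject-publish", "reject-publish-dlx"):
        raise ValueError(f"unsupported overflow behaviour: {overflow}")
    return {
        "x-max-length": max_messages,                      # maximum number of ready messages
        "x-overflow": overflow,                            # what happens once the limit is reached
        "x-message-ttl": int(message_ttl_seconds * 1000),  # per-message TTL in milliseconds
    }


args = backlog_limited_queue_args(max_messages=100_000, message_ttl_seconds=3600)
print(args)  # {'x-max-length': 100000, 'x-overflow': 'reject-publish', 'x-message-ttl': 3600000}
# With pika (not executed here):
#   channel.queue_declare(queue="orders", durable=True, arguments=args)
```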

Splitting Queue Traffic

Due to the limitations of the Erlang process model (each queue is handled by a single Erlang process), the performance of a RabbitMQ queue is bounded by the performance of a single CPU core and cannot scale horizontally. To increase cluster throughput, distribute traffic across multiple queues so that multiple CPU cores are used. Below is a splitting solution for reference.
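As one possible approach, not necessarily the splitting solution the paragraph refers to, a producer can spread traffic over N queues by hashing a business key into a routing key, so that messages with the same key always land in the same queue and keep their relative order. The shard count, the `orders` exchange, and the `orders.<n>` routing keys and `orders-<n>` queue names are hypothetical. RabbitMQ's consistent hash exchange plugin offers a broker-side alternative.

```python
import zlib
from collections import Counter

NUM_SHARDS = 4  # hypothetical number of queues that share the traffic


def routing_key_for(business_key, shards=NUM_SHARDS):
    """Map a business key to a stable routing key such as 'orders.2'."""
    # crc32 gives the same result in every process, unlike Python's salted hash() for str.
    shard = zlib.crc32(business_key.encode("utf-8")) % shards
    return f"orders.{shard}"


keys = [routing_key_for(f"customer-{i}") for i in range(1000)]
print(Counter(keys))  # how the 1000 sample keys spread over the shard queues
# Each routing key would be bound to its own queue, e.g. with pika (not executed here):
#   channel.queue_bind(queue=f"orders-{n}", exchange="orders", routing_key=f"orders.{n}")
```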

Controlling the Number of Queues

The RabbitMQ management plugin collects and computes metrics for every queue in the cluster, and each queue consumes some system resources, so an excessive number of queues can degrade cluster performance. Estimate the number of queues your business requires and select appropriate node specifications on the purchase page accordingly.

Setting a Reasonable Number of Priority Queues

RabbitMQ priority queues consume additional resources (since each priority level is processed separately at the underlying level).

Suggestions:

In most business scenarios, setting 3 to 5 priority levels is sufficient.

Avoid setting excessive priority levels (for example, over 10), as this wastes system resources.
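A sketch of a priority queue limited to 5 levels, in line with the suggestions above. `x-max-priority` is the standard queue argument that enables priorities, and each message carries its level in the `priority` property (with pika, `pika.BasicProperties(priority=...)`). The `tasks` queue name and the `message_priority` clamping helper are hypothetical. RabbitMQ itself treats a message whose priority exceeds the queue maximum as if it had the maximum priority; the helper only makes that explicit on the producer side.

```python
MAX_PRIORITY = 5  # within the suggested 3 to 5 levels

priority_queue_args = {"x-max-priority": MAX_PRIORITY}
# With pika (not executed here):
#   channel.queue_declare(queue="tasks", durable=True, arguments=priority_queue_args)


def message_priority(requested, max_priority=MAX_PRIORITY):
    """Clamp a requested priority into the queue's supported range 0..max_priority."""
    return max(0, min(max_priority, requested))


print([message_priority(p) for p in (-1, 0, 3, 9)])  # [0, 0, 3, 5]
# Publishing (not executed here):
#   channel.basic_publish(exchange="", routing_key="tasks", body=b"...",
#                         properties=pika.BasicProperties(priority=message_priority(9)))
```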

Automatic Deletion of Unused Queues

Client connections may fail due to unexpected interruptions, leaving unused queue resources that can affect system performance. The following three mechanisms support automatic deletion of queues:

1. Queue TTL: A TTL policy sets a time to live for the queue itself. For example, with a TTL of 28 days, a queue that has not been used for 28 days is deleted automatically.

2. Auto-delete queues: Such a queue is deleted automatically when its last consumer cancels its subscription or goes away, for example because the consumer's channel or connection is closed or the TCP connection to the server is lost.

3. Exclusive queues: An exclusive queue can only be used (consumed from, purged, or deleted) by the connection that declared it. When that connection is closed or lost abnormally (for example, the underlying TCP connection drops), the exclusive queue is deleted immediately and automatically.
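The three mechanisms map onto declaration options, sketched here as keyword arguments for pika's `channel.queue_declare()`, which accepts `durable`, `exclusive`, `auto_delete`, and `arguments`. The queue names are placeholders. The 28-day queue TTL from the first item is expressed with the `x-expires` argument in milliseconds; the same value can be set through an `expires` policy instead.

```python
from datetime import timedelta

QUEUE_TTL_MS = int(timedelta(days=28).total_seconds() * 1000)

declarations = {
    # 1. Queue TTL: deleted after 28 days without use.
    "report-jobs": {"durable": True, "arguments": {"x-expires": QUEUE_TTL_MS}},
    # 2. Auto-delete: deleted once its last consumer goes away.
    "live-updates": {"auto_delete": True},
    # 3. Exclusive: usable only by the declaring connection, deleted when it closes.
    "rpc-replies": {"exclusive": True},
}

print(QUEUE_TTL_MS)  # 2419200000
# With pika (not executed here):
#   for name, options in declarations.items():
#       channel.queue_declare(queue=name, **options)
```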

Using Quorum Queues

RabbitMQ 3.8 introduced a new queue type, the quorum queue. It is a replicated queue based on the Raft consensus algorithm, designed to provide stronger data safety and higher availability. In RabbitMQ 3.13, quorum queues are recommended over traditional mirrored queues.
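Declaring a quorum queue comes down to the `x-queue-type` argument, sketched below with pika's `queue_declare` keyword names. Quorum queues must be durable and cannot be exclusive, so the hypothetical helper fixes those options. The `payments` queue name is a placeholder.

```python
def quorum_queue_declaration(name):
    """Keyword arguments for a quorum queue declaration (pika: channel.queue_declare(**kwargs))."""
    return {
        "queue": name,
        "durable": True,     # quorum queues are always durable
        "exclusive": False,  # exclusive quorum queues are not supported
        "arguments": {"x-queue-type": "quorum"},
    }


decl = quorum_queue_declaration("payments")
print(decl["arguments"])  # {'x-queue-type': 'quorum'}
```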

Enabling Lazy Queues to Improve Performance Predictability (Suitable for RabbitMQ Versions Earlier than 3.12)

RabbitMQ 3.6 introduced lazy queues. A lazy queue moves messages to disk as early as possible and loads them into memory only when consumers need them, which significantly reduces memory usage but may increase message processing latency.

Operational experience shows that lazy queues make a cluster more stable and its performance more predictable: because messages are never flushed to disk suddenly and without warning, queue performance does not drop abruptly. Therefore, enable lazy queues when a large number of messages must be processed at once (such as batch jobs) or when consumers are expected to fall behind producers for a sustained period.

Note that starting from RabbitMQ 3.12, classic queues behave like lazy queues by default, and no separate configuration is required.
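Because the setting only matters before 3.12, a client that talks to brokers of different versions could add `x-queue-mode: lazy` conditionally, as in this sketch. The version tuple is assumed to come from your own deployment configuration, and `classic_queue_args` is a hypothetical helper. Applying `queue-mode` through a policy is an alternative to the declaration argument.

```python
def classic_queue_args(server_version):
    """Queue arguments for a classic queue; lazy mode is requested only on brokers older than 3.12."""
    args = {}
    if server_version < (3, 12):
        args["x-queue-mode"] = "lazy"  # keep messages on disk, load them into memory on demand
    # From 3.12 on, classic queues already behave lazily and the argument is ignored.
    return args


print(classic_queue_args((3, 8, 9)))   # {'x-queue-mode': 'lazy'}
print(classic_queue_args((3, 13, 0)))  # {}
```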

Client Mechanism

Reconnection Mechanism

Brokers restart automatically to self-heal in extreme scenarios such as out-of-memory (OOM) errors and container host failures, and routine operations such as cluster upgrades may also restart brokers. To keep client connections from failing persistently across broker restarts, make sure connections recover automatically. Common clients such as the Java and .NET clients provide built-in automatic recovery, whereas others, such as Python (pika), PHP (php-amqplib), and Go (amqp091-go), require the application to catch connection exceptions and reconnect itself. See the documentation of your client for details on implementing automatic connection recovery.
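For clients without built-in recovery, such as pika, a reconnect loop with exponential backoff is a common pattern. In the sketch below, `connect` is any zero-argument function that opens a connection (with pika, for example, `lambda: pika.BlockingConnection(params)`), and `retry_on` would be the client's connection exception, such as `pika.exceptions.AMQPConnectionError`. `FlakyConnect` only simulates a broker that is restarting, and the delays and attempt limit are illustrative.

```python
import time


def connect_with_retry(connect, retry_on=(ConnectionError,), max_attempts=8,
                       base_delay=0.5, max_delay=30.0, sleep=time.sleep):
    """Call connect() until it succeeds, doubling the wait after each failure up to max_delay."""
    delay = base_delay
    for attempt in range(1, max_attempts + 1):
        try:
            return connect()
        except retry_on:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            sleep(delay)
            delay = min(delay * 2, max_delay)


class FlakyConnect:
    """Simulates a broker that refuses the first `failures` connection attempts."""

    def __init__(self, failures):
        self.failures = failures
        self.calls = 0

    def __call__(self):
        self.calls += 1
        if self.calls <= self.failures:
            raise ConnectionError("broker restarting")
        return "connection"


waits = []
conn = connect_with_retry(FlakyConnect(failures=3), sleep=waits.append)
print(conn, waits)  # connection [0.5, 1.0, 2.0]
```

After reconnecting, the application must also reopen its channels and re-register its consumers, since they do not survive the lost connection.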

Avoiding Disabling Heartbeats

Heartbeat values are configured on both the server and the client (the server default is 60 seconds). The effective heartbeat value is negotiated between the server and the client, and the negotiation rules vary across client languages and versions. Setting the heartbeat to 0 disables heartbeat detection, which prevents the server from automatically closing dead connections that have had no traffic for a long time and may result in unexpected connection leaks.
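A sketch of a client-side rule that keeps heartbeats enabled whatever the broker proposes. The rule shown (use the smaller non-zero value, never 0) and the 60-second `CLIENT_HEARTBEAT` are assumptions for illustration, not the negotiation algorithm of any particular client. In recent pika versions, `ConnectionParameters(heartbeat=...)` accepts either an integer or a callable that receives the connection and the broker's proposed value during connection tuning; check your client's documentation for how it negotiates.

```python
CLIENT_HEARTBEAT = 60  # seconds; assumed client preference, same as the server default


def choose_heartbeat(connection, broker_value):
    """Pick a heartbeat timeout that is never 0 (0 would disable heartbeat detection)."""
    if not broker_value:  # broker proposed 0 or nothing: fall back to the client's value
        return CLIENT_HEARTBEAT
    return min(CLIENT_HEARTBEAT, broker_value)


for proposed in (60, 30, 0):
    print(proposed, "->", choose_heartbeat(None, proposed))  # 60 -> 60, 30 -> 30, 0 -> 60
# With pika (not executed here):
#   params = pika.ConnectionParameters(host="localhost", heartbeat=choose_heartbeat)
```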

Network Partitioning

Network partitions cannot be entirely avoided when running RabbitMQ. A partition leaves the cluster in an inconsistent state, and even after the network recovers, RabbitMQ has to restart brokers to resynchronize it. TDMQ for RabbitMQ currently uses the autoheal mode, which automatically elects a winning partition and restarts the brokers in the other partitions.

It is recommended that the client take the following measures to minimize the negative impact caused by network partitioning:

Message senders: Consider setting the mandatory flag when publishing and handling basic.return, so that message routing failures that may occur during a network partition are detected and handled.

Message consumers: Be aware that messages may be duplicated while a network partition occurs or is being resolved, so consumers need to process messages idempotently.
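A sketch of the sender side: publish with `mandatory=True` and register a return handler, so that messages the broker cannot route come back to the application instead of being silently dropped. The handler follows the `(channel, method, properties, body)` callback signature of pika's `add_on_return_callback`, and the returned method frame carries `reply_code`, `reply_text`, `exchange`, and `routing_key`. Collecting returns in a list for later retry is a hypothetical policy, and the exchange and routing key are placeholders. With pika's `BlockingChannel`, enabling publisher confirms (`confirm_delivery()`) instead makes an unroutable mandatory publish raise `UnroutableError`.

```python
from types import SimpleNamespace

returned = []  # unroutable messages kept for inspection or a later retry


def on_return(channel, method, properties, body):
    """Called for each message the broker hands back via basic.return."""
    returned.append({
        "exchange": method.exchange,
        "routing_key": method.routing_key,
        "reason": f"{method.reply_code} {method.reply_text}",
        "body": body,
    })

# With pika (not executed here):
#   channel.add_on_return_callback(on_return)
#   channel.basic_publish(exchange="orders", routing_key="orders.created",
#                         body=payload, mandatory=True)

# Simulated basic.return for a message that matched no queue binding:
on_return(None, SimpleNamespace(exchange="orders", routing_key="orders.created",
                                reply_code=312, reply_text="NO_ROUTE"), None, b"{}")
print(returned[0]["reason"])  # 312 NO_ROUTE
```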
