Mobile Terminal iOS SDK Integration

Last updated: 2025-11-05 10:05:00

Software and Hardware Requirements

| Item | Requirement |
|---|---|
| System | iOS 15.6 or later |
| Processor architecture | arm64 |
| Supported devices | Devices with an A12 or later chip, or an M1 or later chip. iPhone: iPhone XS, iPhone XR, iPhone SE (2nd generation), iPhone 11 and newer. iPad: iPad (8th generation) and newer, iPad mini (5th generation) and newer, iPad Air (3rd generation) and newer, iPad Pro (3rd generation) and newer, New iPad Pro (1st generation) and newer. Broadly, iPhones released from 2018 onward and iPads released from 2019 onward. |
| Development IDE | Xcode |
| Memory | More than 500 MB |

Model Package Description

There are two kinds of model package.

Base Model Package

The Base Model package is independent of the avatar and is required for 2D client-side rendering. Its directory name is `common_model/`. If you use multiple avatars, they can share one Base Model package.

Character Image Package

The avatar package contains the image data and inference models that drive a 2D Digital Human. Each avatar has its own package; load a different avatar package to change the avatar. The directory name is `human_xxxxx_540p_v3/`, where `xxxxx` is the name of the custom avatar. Avatar packages are available in 540p (960 x 540) and 720p (1280 x 720) resolutions.

The SDK package includes a public avatar, `human_yunxi_540p_v3`, which can be used directly.

Note:

The integrating app must place both model packages in the app sandbox and pass their absolute paths to the SDK when initializing it.

Model packages can also be downloaded dynamically; the download and update logic must be implemented by the integrating app.

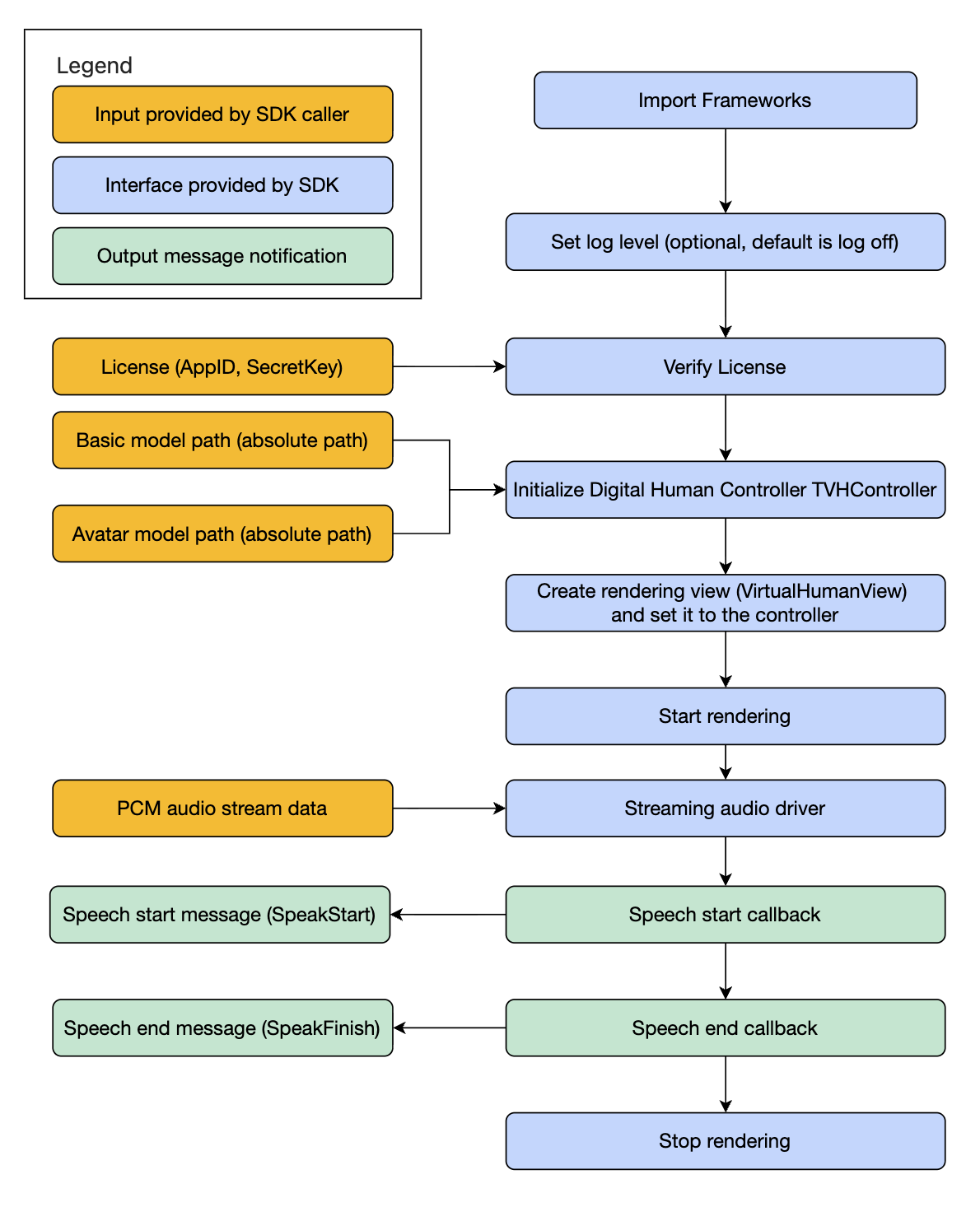

Digital Human Client-Side Rendering SDK Integration

The Digital Human SDK is called in the following sequence:

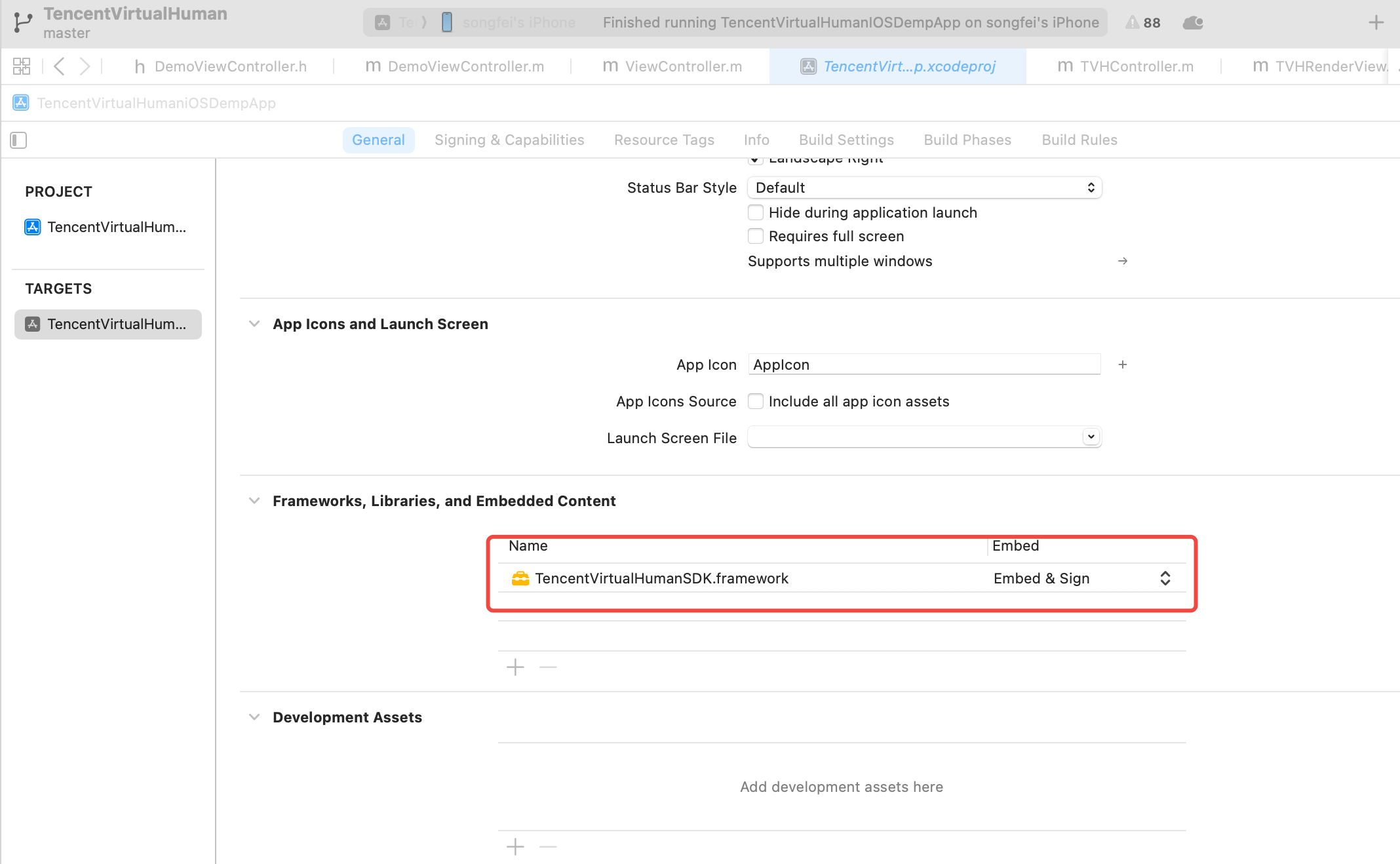

1. Importing the Framework

Add the `TencentVirtualHumanSDK` framework to the project and set its embed option to **Embed & Sign**.

In Objective-C, import the header directly. In Swift, add the import to the project's bridging header (`xxxxx-Bridging-Header.h`).

```objc
#import <TencentVirtualHumanSDK/TencentVirtualHumanSDK.h>
```

2. Set Log Level (Optional)

By default, the SDK writes logs to standard output (stdout), and the log output level is configurable. The default level is `TVHLogLevelOff`, so nothing is logged unless you change it.

Note: The log level can be set only once. If it is set multiple times, only the first call takes effect.

```objc
[[TVHLogManager shareInstance] setLogLevel:TVHLogLevelInfo];
```

```swift
TVHLogManager.shareInstance().setLogLevel(TVHLogLevelInfo)
```

Log output levels:

| Log level | Severity flag |
|---|---|
| Logs disabled | `TVHLogLevelOff` |
| Error logs | `TVHLogLevelError` |
| Warning logs | `TVHLogLevelWarn` |
| Info logs | `TVHLogLevelInfo` |
| Debug logs | `TVHLogLevelDebug` |
| Trace logs | `TVHLogLevelTrace` |

3. License Verification

Call the authorization method before using the Digital Human SDK; otherwise, all other initialization methods will fail.

You can authenticate at app startup or when the Digital Human screen is opened for the first time. The authentication module is a singleton and can be called multiple times; the result of the most recent call takes effect.

```objc
int result = [[TVHLicenseManager shareInstance] authWithAppID:@"0000000000" andSecretKey:@"xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"];
NSLog(@"license result: %d", result);
```

```swift
let result = TVHLicenseManager.shareInstance().auth(withAppID: "0000000000", andSecretKey: "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx")
print("license result: \(result)")
```

A return value of 0 means authentication succeeded; any other value means it failed.
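The integer return code is easy to ignore. A minimal sketch, assuming you prefer Swift error handling, wraps the call so that any non-zero code throws. `LicenseError` and `requireLicense` are hypothetical helpers, not part of the SDK; the closure is meant to wrap `auth(withAppID:andSecretKey:)`, whose Swift return type is assumed to be `Int32` because the Objective-C method returns `int`.

```swift
import Foundation

/// Hypothetical error carrying the non-zero code returned by the license check.
struct LicenseError: Error, Equatable {
    let code: Int32
}

/// Runs an authorization call and turns its integer result into Swift error handling.
/// Per the SDK docs, 0 means success and any other value means failure.
func requireLicense(_ authorize: () -> Int32) throws {
    let code = authorize()
    guard code == 0 else {
        throw LicenseError(code: code)
    }
}

// Possible use in the app (requires the SDK; appID/secretKey are your own values):
// try requireLicense {
//     TVHLicenseManager.shareInstance().auth(withAppID: appID, andSecretKey: secretKey)
// }
```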

4. Creating the Digital Human Controller (TVHController)

The controller lets you initialize the Digital Human, start and stop rendering, and make it speak.

```objc
// First place the Base Model and avatar model packages in the sandbox's Documents directory
NSArray *paths = NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES);
NSString *documentsPath = [paths firstObject];
NSString *common_model = [NSString stringWithFormat:@"%@/common_model", documentsPath];
NSString *human_model = [NSString stringWithFormat:@"%@/human_yunxi_540p_v3", documentsPath];
// Initialize the controller
self.controller = [[TVHController alloc] initWithCommonModelPath:common_model humanModelPath:human_model];
self.controller.delegate = self;
// Start rendering
[self.controller start];
```

```swift
// First place the Base Model and avatar model packages in the sandbox's Documents directory
let paths = NSSearchPathForDirectoriesInDomains(.documentDirectory, .userDomainMask, true)
let documentsPath = paths.first!
let commonModel = "\(documentsPath)/common_model"
let humanModel = "\(documentsPath)/human_yunxi_540p_v3"
// Initialize the controller
controller = TVHController(commonModelPath: commonModel, humanModelPath: humanModel)
if controller != nil {
    controller.delegate = self
    // Start rendering
    controller.start()
}
```
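When initialization fails, a missing model directory is a common cause that is hard to spot from a `nil` controller alone. A minimal sketch, using only Foundation, resolves both absolute paths under a base directory and checks that they exist before they are passed to `TVHController`. `resolveModelPaths` and `ModelPathError` are hypothetical names; the directory names are the ones used in this document.

```swift
import Foundation

/// Hypothetical error naming the model directory that could not be found.
enum ModelPathError: Error, Equatable {
    case missing(String)
}

/// Builds absolute paths for the Base Model package and an avatar package under
/// `baseDirectory` (e.g. the app's Documents directory) and verifies that both
/// exist as directories.
func resolveModelPaths(baseDirectory: URL,
                       commonModelName: String = "common_model",
                       humanModelName: String) throws -> (common: String, human: String) {
    let fileManager = FileManager.default
    var resolved: [String] = []
    for name in [commonModelName, humanModelName] {
        let path = baseDirectory.appendingPathComponent(name, isDirectory: true).path
        var isDirectory: ObjCBool = false
        guard fileManager.fileExists(atPath: path, isDirectory: &isDirectory),
              isDirectory.boolValue else {
            throw ModelPathError.missing(path)
        }
        resolved.append(path)
    }
    return (common: resolved[0], human: resolved[1])
}

// Possible use in the app (the TVHController call requires the SDK):
// let documents = FileManager.default.urls(for: .documentDirectory, in: .userDomainMask)[0]
// let paths = try resolveModelPaths(baseDirectory: documents, humanModelName: "human_yunxi_540p_v3")
// controller = TVHController(commonModelPath: paths.common, humanModelPath: paths.human)
```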

5. Creating the Render View (TVHRenderView)

The Digital Human SDK uses Metal for high-performance rendering with a transparent background. The Digital Human is drawn into this render view. You can set its size and position like any ordinary view and add it as a subview of other views.

**Note:** If you have special requirements, you can implement your own render view; it must implement `TVHRenderViewDelegate` to receive the raw RGBA data for drawing. We strongly recommend using the SDK's built-in `TVHRenderView` for the best rendering results.

```objc
self.renderView = [[TVHRenderView alloc] initWithFrame:self.view.bounds];
self.renderView.fillMode = TVHMetalViewContentModeFit;
[self.view addSubview:self.renderView];
// Set renderView to the controller
self.controller.renderView = self.renderView;
```

```swift
renderView = TVHRenderView(frame: self.view.bounds)
renderView.fillMode = .fit
view.addSubview(renderView)
// Set renderView to the controller
controller.renderView = renderView
```

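The render view supports the following fill modes:

| Fill mode | Effect |
|---|---|
| `TVHMetalViewContentModeStretch` | Stretches the image to fill the view (aspect ratio not preserved) |
| `TVHMetalViewContentModeFit` | Shows the whole image; unused space is left as a black (transparent) border |
| `TVHMetalViewContentModeFill` | Fills the view; parts of the image outside the view are cropped |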
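Assuming the modes follow the usual iOS aspect-fit and aspect-fill convention (an assumption; confirm against the SDK's actual behavior), the size at which a frame is drawn can be computed as below. `FillMode`, `Size`, and `drawnSize` are hypothetical and only model the geometry; the SDK performs this scaling itself.

```swift
import Foundation

/// Hypothetical mirror of the three TVHMetalViewContentMode options.
enum FillMode {
    case stretch, fit, fill
}

/// Minimal width/height pair.
struct Size: Equatable {
    var width: Double
    var height: Double
}

/// Returns the size at which a frame of `content` size is drawn inside a view of
/// `bounds` size, using conventional aspect-fit / aspect-fill scaling.
func drawnSize(content: Size, in bounds: Size, mode: FillMode) -> Size {
    switch mode {
    case .stretch:
        // Ignore the aspect ratio: the frame covers the view exactly.
        return bounds
    case .fit:
        // Largest scale that keeps the whole frame visible; the rest of the view is border.
        let scale = min(bounds.width / content.width, bounds.height / content.height)
        return Size(width: content.width * scale, height: content.height * scale)
    case .fill:
        // Smallest scale that covers the whole view; whatever overflows is cropped.
        let scale = max(bounds.width / content.width, bounds.height / content.height)
        return Size(width: content.width * scale, height: content.height * scale)
    }
}
```

For example, a 960 x 540 frame in a 390 x 844 view is drawn at 390 x 219.375 with `.fit`, and at about 1500 x 844 with `.fill`, so most of the frame's width falls outside the view.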

6. Driving the Digital Human with Streaming Audio

Stream audio into the SDK to make the Digital Human speak.

The input audio must be s16le (signed 16-bit, little-endian) PCM, mono, with a sampling rate of 16,000 Hz.

You can call `appendAudioData` multiple times to stream audio. Input audio is stored in a buffer queue, and each call may carry audio of any duration.

Note:

- The real-time rate of the input must be greater than 1.0; that is, audio must be supplied faster than real-time playback consumes it. If the buffer runs short, the SDK waits for more audio and the Digital Human may stall mid-broadcast.

- For the last piece of input audio, set `isFinal` to `YES`. Otherwise, the SDK treats the audio as unfinished and keeps waiting, and the Digital Human stalls during playback.

```objc
// Read PCM data from a file and send it in fragments to simulate streaming input
NSArray *paths = NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES);
NSString *documentsPath = [paths firstObject];
NSString *pcmPath = [NSString stringWithFormat:@"%@/test.pcm", documentsPath];
// Read audio data
NSData *data = [NSData dataWithContentsOfFile:pcmPath];
// 1280 bytes = 640 samples = 40 ms of 16 kHz mono s16le audio
NSUInteger packageSize = 1280;
NSUInteger length = [data length];
for (NSUInteger offset = 0; offset < length; offset += packageSize) {
    NSUInteger size = MIN(packageSize, length - offset);  // the last fragment may be shorter
    BOOL isFinal = (offset + size == length);
    [self.controller appendAudioData:[data subdataWithRange:NSMakeRange(offset, size)] metaData:@"" isFinal:isFinal];
}
```

```swift
// Read PCM data from a file and send it in fragments to simulate streaming input
let paths = NSSearchPathForDirectoriesInDomains(.documentDirectory, .userDomainMask, true)
guard let documentsPath = paths.first else { return }
let pcmPath = "\(documentsPath)/test.pcm"
// Read audio data
guard let data = try? Data(contentsOf: URL(fileURLWithPath: pcmPath)) else { return }
// 1280 bytes = 640 samples = 40 ms of 16 kHz mono s16le audio
let packageSize = 1280
for offset in stride(from: 0, to: data.count, by: packageSize) {
    let end = min(offset + packageSize, data.count)  // the last fragment may be shorter
    let subData = data.subdata(in: offset..<end)
    let isFinal = (end == data.count)
    controller.appendAudioData(subData, metaData: "", isFinal: isFinal)
}
```
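The required format fixes the byte rate: 16,000 samples/s × 1 channel × 2 bytes = 32,000 bytes per second, so each 1280-byte fragment holds 40 ms of audio. A minimal sketch for monitoring the real-time-rate requirement is shown below, assuming the rate is measured as seconds of audio delivered divided by wall-clock seconds elapsed since the first fragment (the SDK's exact definition is not documented here). `PCMFormat` and `RealTimeRateMeter` are hypothetical helpers.

```swift
import Foundation

/// The input format required by appendAudioData: s16le PCM, 16 kHz, mono.
struct PCMFormat {
    let sampleRate = 16_000   // samples per second
    let channels = 1          // mono
    let bytesPerSample = 2    // signed 16-bit

    /// 16000 * 1 * 2 = 32000 bytes per second of audio.
    var bytesPerSecond: Int { sampleRate * channels * bytesPerSample }

    /// Playback duration, in seconds, of `byteCount` bytes of audio.
    func duration(ofBytes byteCount: Int) -> Double {
        Double(byteCount) / Double(bytesPerSecond)
    }
}

/// Hypothetical meter: seconds of audio delivered divided by wall-clock seconds
/// elapsed since `start`. Values above 1.0 mean audio arrives faster than it plays.
struct RealTimeRateMeter {
    private let format = PCMFormat()
    private let start: Date
    private var deliveredBytes = 0

    init(start: Date = Date()) {
        self.start = start
    }

    /// Record the size of each fragment passed to appendAudioData.
    mutating func record(bytes: Int) {
        deliveredBytes += bytes
    }

    func rate(at now: Date = Date()) -> Double {
        let elapsed = now.timeIntervalSince(start)
        guard elapsed > 0 else { return .infinity }
        return format.duration(ofBytes: deliveredBytes) / elapsed
    }
}
```

Call `record(bytes:)` alongside each `appendAudioData` call. A rate that drifts toward 1.0 or below means the audio source (for example, a TTS stream) is not keeping up, and the Digital Human may stall.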

7. Interrupting the Broadcast

You can interrupt a broadcast at any time by calling the controller's `interrupt` method. The Digital Human immediately stops speaking, audio playback stops, and it returns to the silent state.

```objc
[self.controller interrupt];
```

```swift
controller.interrupt()
```

Note:

An interruption also triggers the `speakFinish` callback, but it arrives 1-2 seconds after the Digital Human stops speaking. If you call `appendAudioData` before `speakFinish` arrives, the new audio is not broadcast until after `speakFinish`.

8. Pausing and Resuming the Digital Human When the App Moves Between Background and Foreground

In most apps, Digital Human rendering and broadcast should pause when the app moves to the background, and resume automatically when the app returns to the foreground.

The controller provides `pause` and `resume` methods for this.

On iOS, you can register with `NSNotificationCenter` to be notified when the app enters the background or the foreground.

```objc
@implementation DemoViewController

- (void)viewDidLoad {
    [super viewDidLoad];
    // ... Other code
    // Register for app lifecycle notifications
    [[NSNotificationCenter defaultCenter] addObserver:self
                                             selector:@selector(appDidEnterBackground:)
                                                 name:UIApplicationDidEnterBackgroundNotification
                                               object:nil];
    [[NSNotificationCenter defaultCenter] addObserver:self
                                             selector:@selector(appWillEnterForeground:)
                                                 name:UIApplicationWillEnterForegroundNotification
                                               object:nil];
}

- (void)dealloc {
    // Remove the observers when the view controller is deallocated
    [[NSNotificationCenter defaultCenter] removeObserver:self];
}

- (void)appDidEnterBackground:(NSNotification *)notification {
    // Pause rendering and broadcast
    [self.controller pause];
}

- (void)appWillEnterForeground:(NSNotification *)notification {
    // Resume rendering and broadcast
    [self.controller resume];
}

@end
```

```swift
class DemoViewController2: UIViewController {
    override func viewDidLoad() {
        super.viewDidLoad()
        // ... Other code
        // Register for app lifecycle notifications
        NotificationCenter.default.addObserver(self,
                                               selector: #selector(appDidEnterBackground(_:)),
                                               name: UIApplication.didEnterBackgroundNotification,
                                               object: nil)
        NotificationCenter.default.addObserver(self,
                                               selector: #selector(appWillEnterForeground(_:)),
                                               name: UIApplication.willEnterForegroundNotification,
                                               object: nil)
    }

    deinit {
        // Remove the observers when the view controller is deallocated
        NotificationCenter.default.removeObserver(self)
    }

    @objc private func appDidEnterBackground(_ notification: Notification) {
        // Pause rendering and broadcast
        controller.pause()
    }

    @objc private func appWillEnterForeground(_ notification: Notification) {
        // Resume rendering and broadcast
        controller.resume()
    }
}
```
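Lifecycle notifications can arrive in combinations you may not expect (for example, if you also observe `willResignActive`), and this page does not say whether repeated `pause` or `resume` calls are harmless. A defensive sketch, under that assumption, forwards the calls only on real state changes. `PausableRenderer` and `LifecycleGate` are hypothetical; `TVHController` could adopt the protocol through an empty extension if its `pause` and `resume` methods take no arguments, as the samples above suggest.

```swift
import Foundation

/// Hypothetical protocol for anything that can pause and resume rendering.
protocol PausableRenderer: AnyObject {
    func pause()
    func resume()
}

/// Forwards pause/resume only when the state actually changes, so duplicate
/// lifecycle notifications never cause two pauses or two resumes in a row.
final class LifecycleGate {
    private weak var renderer: PausableRenderer?
    private(set) var isPaused = false

    init(renderer: PausableRenderer) {
        self.renderer = renderer
    }

    /// Call from the didEnterBackground handler.
    func enterBackground() {
        guard !isPaused else { return }
        isPaused = true
        renderer?.pause()
    }

    /// Call from the willEnterForeground handler.
    func enterForeground() {
        guard isPaused else { return }
        isPaused = false
        renderer?.resume()
    }
}

// Possible adoption in the app (assumes these method signatures; requires the SDK):
// extension TVHController: PausableRenderer {}
```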

9. Handling Digital Human Notifications

Digital Human notifications are delivered through the delegate pattern. Implement `TVHControllerDelegate` and set your object as the controller's delegate to receive them.

```objc
// Implement TVHControllerDelegate
@interface DemoViewController () <TVHControllerDelegate>
// ... Other code
@end

@implementation DemoViewController

// Rendering started
- (void)renderStart {
    NSLog(@"render start");
}

// Broadcast started
- (void)speakStart {
    NSLog(@"speak start");
}

// Broadcast finished
- (void)speakFinish {
    NSLog(@"speak finish");
}

// Broadcast error
- (void)speakError:(NSInteger)errorCode message:(NSString *)message {
    NSLog(@"speak error code:%ld, message:%@", (long)errorCode, message);
}

@end
```

```swift
// Implement TVHControllerDelegate
extension DemoViewController2: TVHControllerDelegate {
    // Rendering started
    nonisolated func renderStart() {
        print("render start")
    }
    // Broadcast started
    nonisolated func speakStart() {
        print("speak start")
    }
    // Broadcast finished
    nonisolated func speakFinish() {
        print("speak finish")
    }
    // Broadcast error
    nonisolated func speakError(_ errorCode: Int, message: String!) {
        print("speak error code: \(errorCode), message: \(message ?? "")")
    }
}
```
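Combining these callbacks with the interruption behavior described in step 7, an app can keep a small state model for its UI, for example to show that an interruption is still waiting for `speakFinish`. The sketch below is a hypothetical app-side model, not part of the SDK. The `nonisolated` keyword in the Swift sample suggests the callbacks may not arrive on the main thread, so it is assumed here that you forward events to the main queue before updating the model and UI.

```swift
import Foundation

/// Hypothetical app-side view of what the Digital Human is doing.
enum AvatarState: Equatable {
    case idle            // controller created, rendering not started yet
    case silent          // rendering, not speaking
    case speaking        // between speakStart and speakFinish
    case interrupting    // interrupt() called, speakFinish not yet received
    case failed(Int)     // last callback was speakError with this code
}

/// Events fed in from TVHControllerDelegate callbacks and the app's own interrupt() calls.
enum AvatarEvent {
    case renderStart
    case speakStart
    case speakFinish
    case interruptRequested
    case speakError(Int)
}

struct AvatarStateMachine {
    private(set) var state: AvatarState = .idle

    mutating func handle(_ event: AvatarEvent) {
        switch event {
        case .renderStart, .speakFinish:
            // Rendering is running and nothing is being spoken.
            state = .silent
        case .speakStart:
            state = .speaking
        case .interruptRequested:
            // Only a broadcast in progress can be interrupted; speakFinish follows later.
            if state == .speaking {
                state = .interrupting
            }
        case .speakError(let code):
            state = .failed(code)
        }
    }

    /// True while a broadcast is playing or an interruption is still settling.
    var isBusy: Bool {
        state == .speaking || state == .interrupting
    }
}
```

In each delegate method you might call, for example, `DispatchQueue.main.async { self.avatarState.handle(.speakStart) }`, where `avatarState` is a hypothetical property, and call `handle(.interruptRequested)` right after `controller.interrupt()`.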

10. Handling Metadata

After sending audio to the Digital Human, you may need to know when playback reaches a particular point so that you can run some logic. A common use case is displaying subtitles during a broadcast.

The Digital Human SDK provides MetaData (additional information) for this. When you input an audio clip, you can attach a string to the beginning of the clip; when the Digital Human reaches that clip, the SDK notifies you through the delegate.

To pass structured information, serialize it (for example, as JSON) into the string.

Carrying MetaData in Input Audio Stream

```objc
[self.controller appendAudioData:data metaData:@"Here is additional information" isFinal:isFinal];
```

```swift
controller.appendAudioData(data, metaData: "Here is additional information", isFinal: isFinal)
```

Receiving Notifications

```objc
// Implement TVHControllerDelegate
@interface DemoViewController () <TVHControllerDelegate>
// ... Other code
@end

@implementation DemoViewController

// Playback of a clip carrying metadata started
- (void)speakMetaStart:(NSString *)metaData {
    NSLog(@"speak meta start: %@", metaData);
}

// Playback of a clip carrying metadata finished
- (void)speakMetaFinish:(NSString *)metaData {
    NSLog(@"speak meta finish: %@", metaData);
}

@end
```

```swift
// Implement TVHControllerDelegate
extension DemoViewController2: TVHControllerDelegate {
    // Playback of a clip carrying metadata started
    nonisolated func speakMetaStart(_ metaData: String!) {
        print("speak meta start: \(metaData ?? "")")
    }
    // Playback of a clip carrying metadata finished
    nonisolated func speakMetaFinish(_ metaData: String!) {
        print("speak meta finish: \(metaData ?? "")")
    }
}
```
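A minimal sketch of the JSON approach for subtitles: encode a small `Codable` payload into the `metaData` string, attach it only to the first fragment of each sentence (the metadata marks the start of a clip), and decode it again in `speakMetaStart`. `SubtitleMeta`, `encodeMeta`, `decodeMeta`, and `chunksWithMeta` are hypothetical helpers. Sending `""` for fragments without metadata follows the samples above; it is assumed that an empty string carries no metadata.

```swift
import Foundation

/// Hypothetical subtitle payload carried in the metaData string.
struct SubtitleMeta: Codable, Equatable {
    let sentenceIndex: Int
    let text: String
}

/// Serializes the payload to a compact JSON string for `metaData:`.
func encodeMeta(_ meta: SubtitleMeta) throws -> String {
    let encoder = JSONEncoder()
    encoder.outputFormatting = [.sortedKeys]  // stable key order for logging and comparison
    let data = try encoder.encode(meta)
    return String(decoding: data, as: UTF8.self)
}

/// Parses the string received in speakMetaStart / speakMetaFinish.
/// Returns nil for nil, empty, or non-JSON strings.
func decodeMeta(_ string: String?) -> SubtitleMeta? {
    guard let string = string, !string.isEmpty else { return nil }
    return try? JSONDecoder().decode(SubtitleMeta.self, from: Data(string.utf8))
}

/// Splits one sentence's PCM into fragments of at most `chunkSize` bytes and
/// attaches `meta` to the first fragment only; the others carry "".
func chunksWithMeta(pcm: Data, chunkSize: Int, meta: String) -> [(data: Data, meta: String)] {
    precondition(chunkSize > 0, "chunkSize must be positive")
    var chunks: [(data: Data, meta: String)] = []
    var offset = pcm.startIndex
    while offset < pcm.endIndex {
        let end = min(offset + chunkSize, pcm.endIndex)
        chunks.append((data: pcm.subdata(in: offset..<end), meta: chunks.isEmpty ? meta : ""))
        offset = end
    }
    return chunks
}
```

`isFinal` is still set only on the very last fragment of the whole utterance, independently of where sentences begin.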

Fallback for Older Devices

The client-side rendering Digital Human SDK performs AI model inference and image synthesis on the device, so it requires a certain level of device performance.

Supported devices are iPhones released from 2018 onward and iPads released from 2019 onward. On older devices, fall back: tell the user the feature is not supported, or switch to another product solution.

Use the `canSmoothlyRun` method to check whether the current device can run the rendering SDK.

```objc
BOOL canRun = [[TVHDeviceManager shareInstance] canSmoothlyRun];
```

```swift
let canRun = TVHDeviceManager.shareInstance().canSmoothlyRun()
```

Note:

1. The result is based on performance testing done before release, not on an actual test run on the current device. Actual performance may differ, for example when other work in the host app competes for the CPU.

2. The result is conservative: some devices for which it returns `NO` can still run smoothly.
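A minimal sketch of the fallback decision, combining the documented minimum OS version (iOS 15.6) with the result of `canSmoothlyRun`, which the caller passes in so that the logic can be tested without the SDK. `RenderingPath` and `chooseRenderingPath` are hypothetical; the OS check only matters if your app's deployment target is below iOS 15.6.

```swift
import Foundation

/// Hypothetical outcome of the device check.
enum RenderingPath: Equatable {
    case onDevice   // use the client-side rendering SDK
    case fallback   // tell the user, or switch to another product solution
}

/// Combines the documented minimum OS version (iOS 15.6) with the result of
/// TVHDeviceManager's canSmoothlyRun, which the caller passes in.
func chooseRenderingPath(os: OperatingSystemVersion, canSmoothlyRun: Bool) -> RenderingPath {
    let minimum = (15, 6, 0)
    let current = (os.majorVersion, os.minorVersion, os.patchVersion)
    // Tuples of Comparable values compare lexicographically.
    guard current >= minimum else { return .fallback }
    return canSmoothlyRun ? .onDevice : .fallback
}

// Possible use in the app (requires the SDK):
// let path = chooseRenderingPath(os: ProcessInfo.processInfo.operatingSystemVersion,
//                                canSmoothlyRun: TVHDeviceManager.shareInstance().canSmoothlyRun())
```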

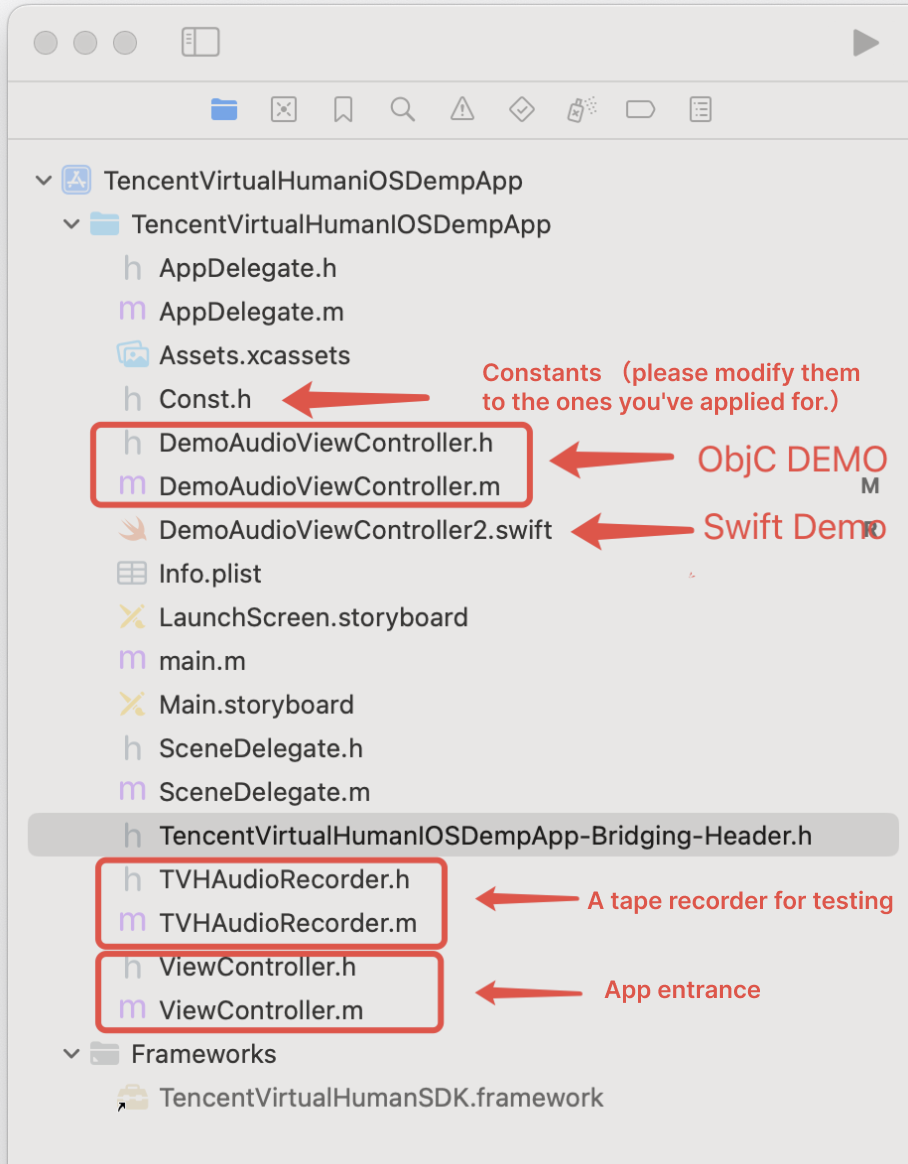

Running the Demo App

The SDK includes a fully functional Demo App. The project structure is as follows:

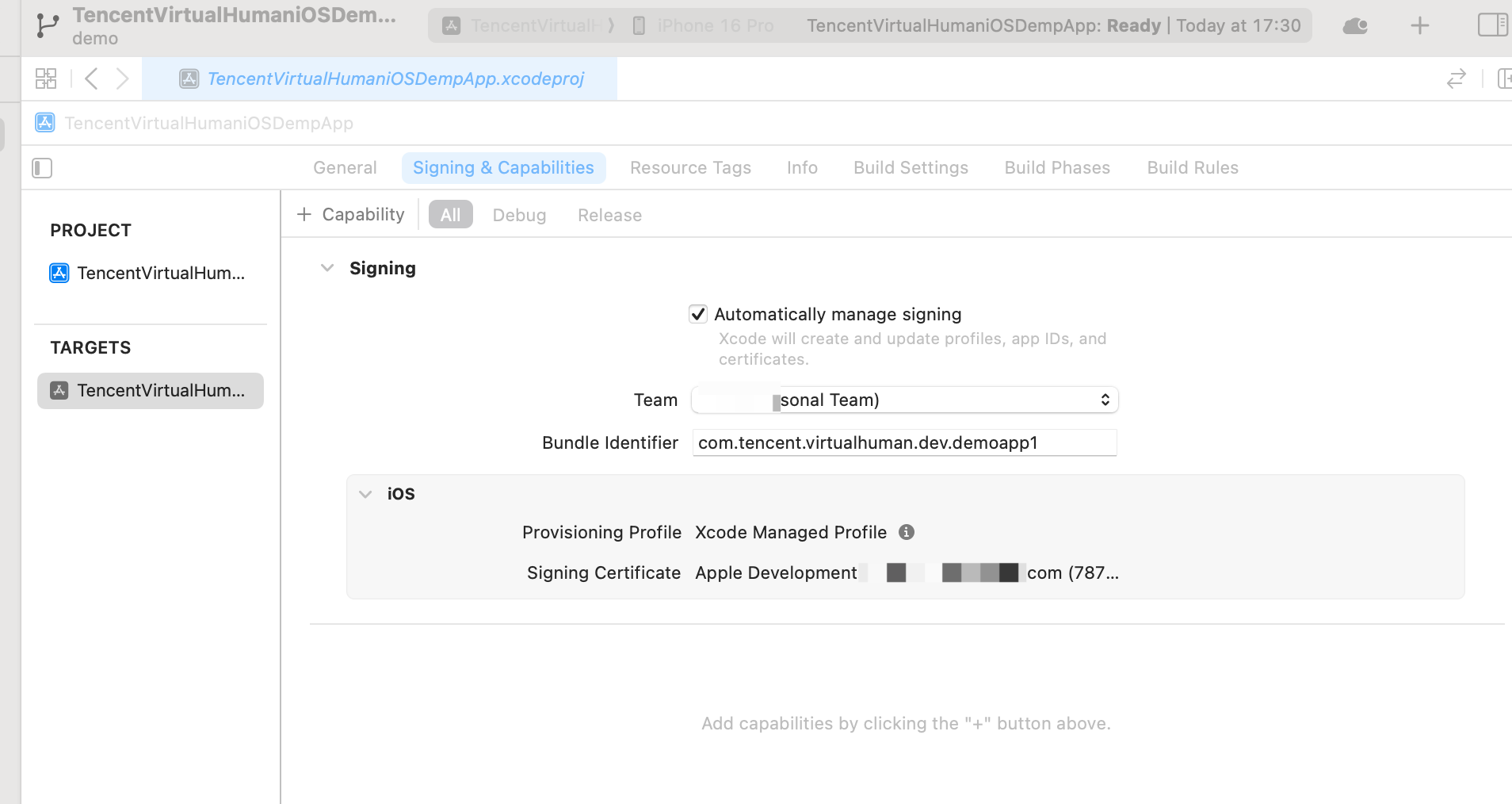

1. Modifying the Bundle ID

Change the Bundle ID to match the one you provided when applying for the license, and update the certificate and signing settings accordingly.

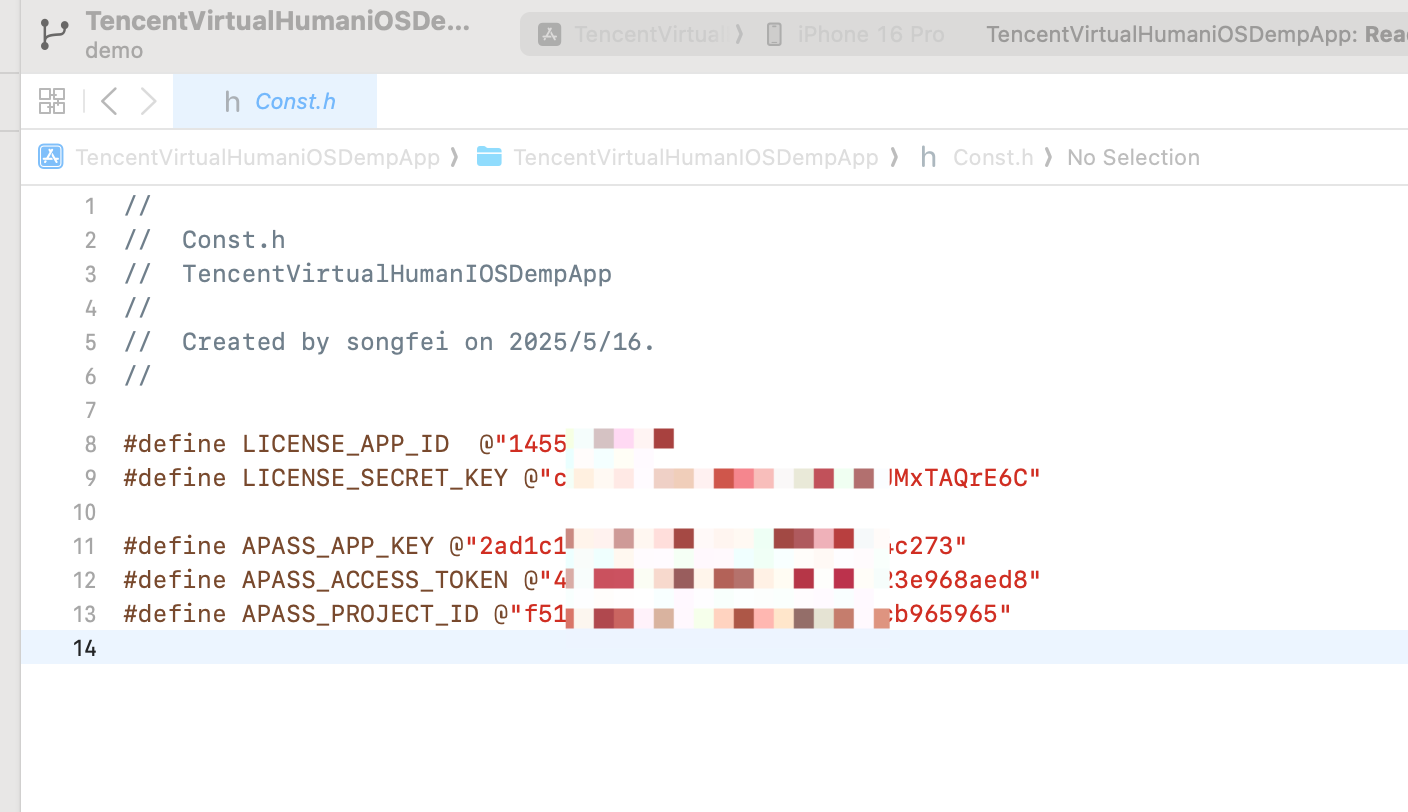

2. Modifying the Const.h File

`Const.h` contains all the keys the demo uses, such as the AppKey and Secret. Replace them with the values issued for your application.

3. Copying the Models to the Sandbox

Run the app once, then use Finder on your Mac to copy the model packages to the app on your phone.

4. Running the Demo

Click the Run button in Xcode to build and launch the 2D client-side rendering demo.
