Analysis and Solutions for Increased Slow Queries and High Latency

Last updated: 2024-05-07 16:12:47

Symptoms

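The slow query log records queries whose execution time exceeds a threshold. When a large number of slow queries occur, system performance degrades, response times grow longer, and the system may even crash. Slow queries therefore need to be optimized to reduce their number and improve system performance.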

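Log in to the MongoDB console, click the instance ID to open the instance details page, select the System Monitoring tab, and check the instance's monitoring data. The database latency metrics are noticeably higher than usual. Latency metrics mainly reflect the time from when a request reaches the access layer until it has been processed and the response is returned to the client. For details about the individual metrics, see Monitoring Overview.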

Possible Causes

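Queries that use the $lookup operator either use no index or use an index that does not support the query, so MongoDB has to scan the entire collection, which makes retrieval very inefficient.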

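Documents in the collection contain large array fields that are heavily searched and indexed. The data sets being searched and indexed are too large, which drives system load too high.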
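For the $lookup cause, the usual remedy is an index on the foreign collection's foreignField, because an equality $lookup can then use that index for each input document instead of scanning the foreign collection. The sketch below is a hypothetical helper, not a Tencent Cloud or MongoDB API: it only inspects a $lookup stage written as a plain object and returns the index you would consider creating. The collection and field names (customers, customerNo, custNo) are made up for illustration.

```javascript
// Hypothetical helper: for a $lookup stage in the basic
// localField/foreignField form, return the foreign collection and the
// index key spec that lets each lookup use an index instead of scanning
// the foreign collection.
function suggestLookupIndex(stage) {
  const lookup = stage.$lookup;
  if (!lookup || !lookup.from || !lookup.foreignField) {
    // Not a $lookup, or the let/pipeline form: analyze it case by case.
    return null;
  }
  return { collection: lookup.from, key: { [lookup.foreignField]: 1 } };
}

// Usage with a hypothetical orders -> customers join.
const lookupStage = {
  $lookup: {
    from: "customers",
    localField: "customerNo",
    foreignField: "custNo",
    as: "customer",
  },
};
console.log(JSON.stringify(suggestLookupIndex(lookupStage)));
// prints {"collection":"customers","key":{"custNo":1}}
```

In the MongoDB shell, the suggested index would then be created with something like `db.customers.createIndex({ custNo: 1 })`.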

Analyzing Slow Queries

Troubleshooting slow queries with slow SQL analysis in TencentDB for DBbrain (recommended)

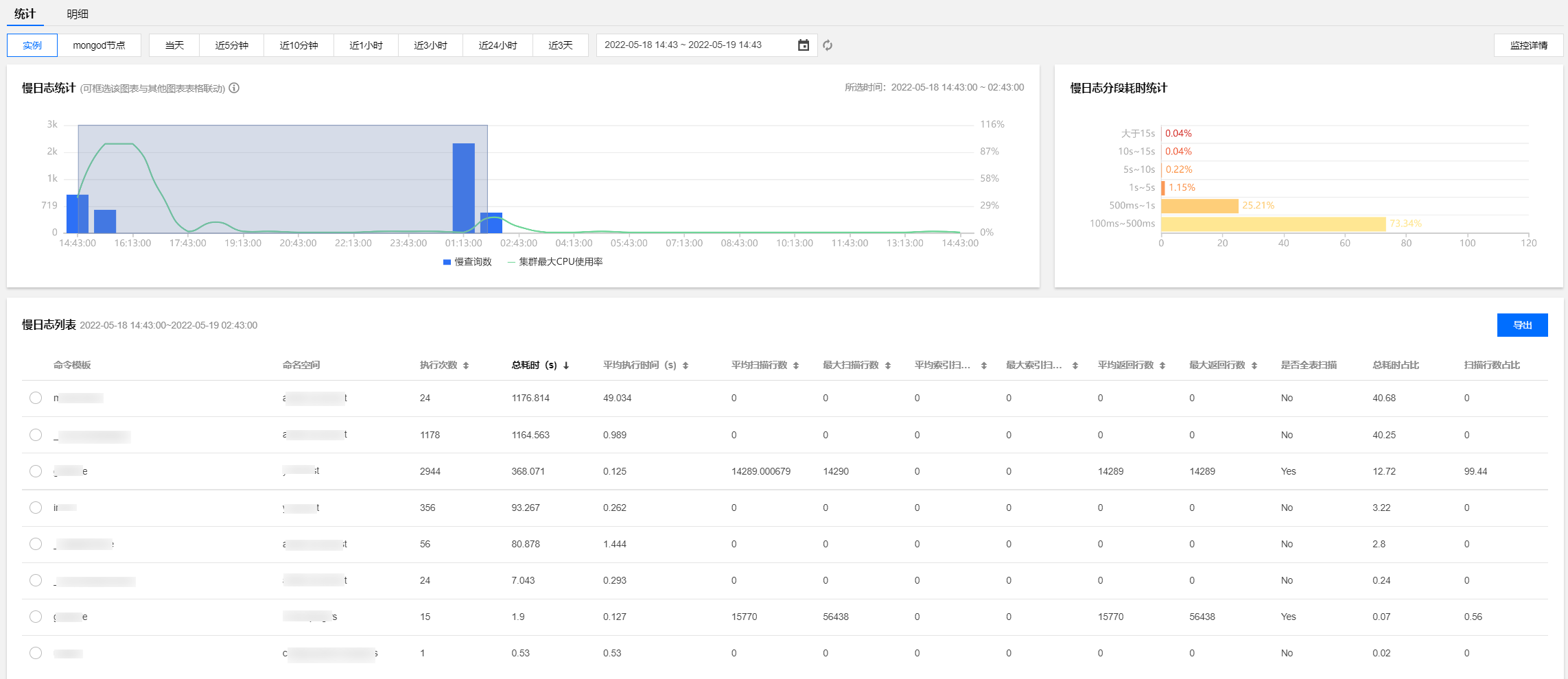

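TencentDB for DBbrain (DBbrain) is an autonomous database cloud service from Tencent Cloud that provides database performance optimization, security, and management features. Its slow SQL analysis for MongoDB is dedicated to analyzing the slow logs generated while MongoDB is running. The diagnostic data is intuitive and easy to search, as shown in the figure. For more information, see Slow SQL Analysis.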

Analyzing slow queries with slow logs in the MongoDB console

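If your MongoDB instance has not yet been connected to TencentDB for DBbrain (DBbrain), you can obtain slow logs from the MongoDB console and analyze their key fields one by one to find the cause of the slow queries.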

Obtaining slow logs

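1. Log in to the MongoDB console.

2. In the MongoDB drop-down list in the left sidebar, select Replica Set Instance or Sharded Cluster Instance. The steps are similar for both instance types.

3. At the top of the instance list page on the right, select the region.

4. In the instance list, find the target instance.

5. Click the target instance ID to open the instance details page.

6. Select the Database Management tab, then the Slow Log Query tab.

7. On the Slow Log Query tab, analyze the slow logs. The system records operations that take longer than 100 milliseconds and retains slow logs for 7 days. You can also download slow log files. For details, see Slow Log Management.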
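When working with a downloaded slow log file, a simple first step is to keep only the entries above the threshold you care about. The sketch below assumes each entry is one line in the legacy plain-text mongod format (MongoDB earlier than 4.4) that ends with the duration, such as `... 102ms`; MongoDB 4.4 and later write structured JSON logs where you would read `attr.durationMillis` instead. The function names are hypothetical, and 100 ms is only a default parameter mirroring the console's recording threshold.

```javascript
// Minimal sketch: extract the trailing duration ("... 102ms") from a
// legacy plain-text mongod log line; returns null if there is none.
function durationMs(line) {
  const m = /(\d+)ms\s*$/.exec(line);
  return m ? Number(m[1]) : null;
}

// Keep only lines whose duration exceeds the threshold (default 100 ms).
function filterSlow(lines, thresholdMs = 100) {
  return lines.filter((line) => {
    const ms = durationMs(line);
    return ms !== null && ms > thresholdMs;
  });
}

// Usage with two shortened, made-up log lines.
const sample = [
  '... command: find { find: "orders" } planSummary: IXSCAN { a: 1 } 35ms',
  "... command: getMore { getMore: 1 } planSummary: COLLSCAN 102ms",
];
console.log(filterSlow(sample).length); // 1
```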

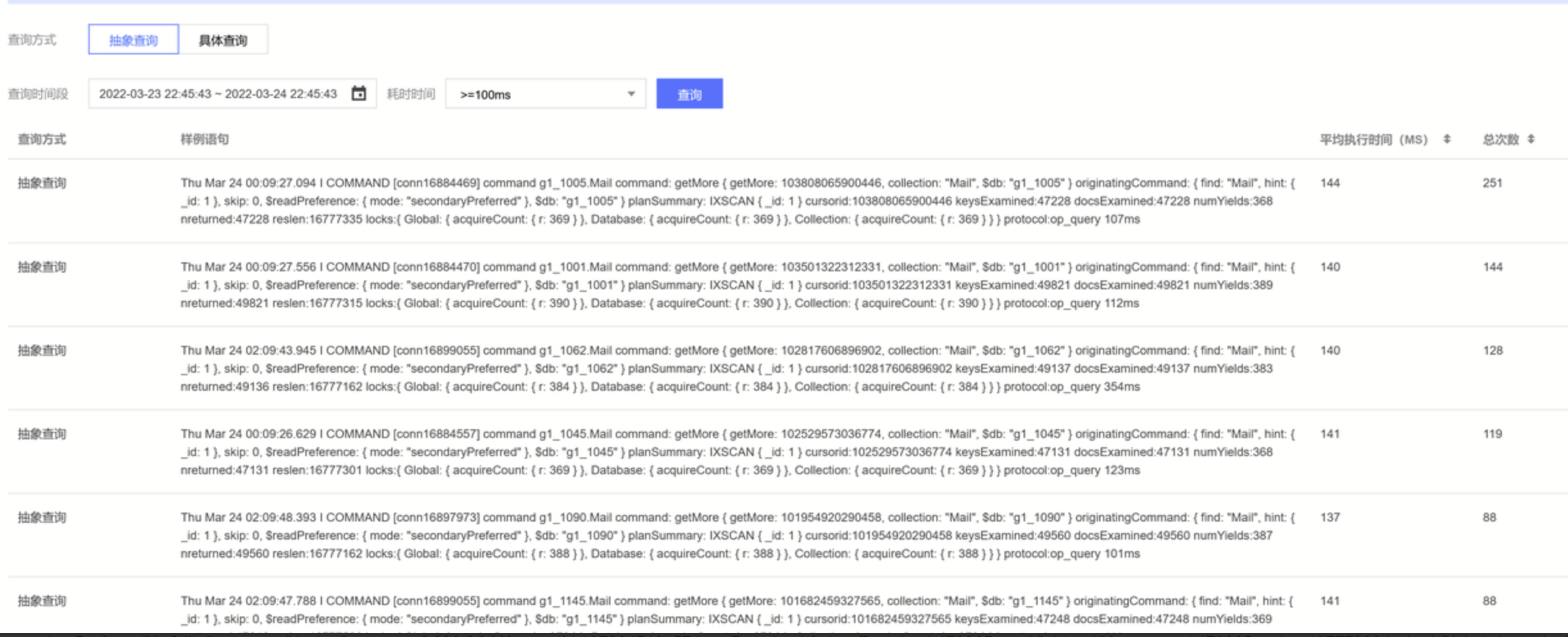

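Abstract query: statistics aggregated after the query conditions have been normalized (literal values masked). Here you can see slow query statistics sorted by average execution time. We recommend optimizing the top 5 requests first.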

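Specific query: the complete request as executed by the user, including the execution plan, the number of rows scanned, the execution time, and lock wait information.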
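The idea behind the abstract query view can be illustrated by masking every literal value in a query filter so that queries differing only in their values share one shape, then ranking shapes by average duration. This is a hypothetical sketch; the console's actual normalization rules are not described here, and the entry format `{ ns, filter, millis }` is an assumption.

```javascript
// Replace literal values with "?" so queries that differ only in values
// share a shape. Object keys are sorted so field order does not matter.
function shapeOf(value) {
  if (Array.isArray(value)) {
    // Lists of plain values (e.g. $in: [1, 2, 3]) collapse to one "?";
    // lists of sub-documents (e.g. $or branches) keep their structure.
    return value.some((v) => v !== null && typeof v === "object")
      ? value.map(shapeOf)
      : "?";
  }
  if (value !== null && typeof value === "object") {
    const out = {};
    for (const key of Object.keys(value).sort()) out[key] = shapeOf(value[key]);
    return out;
  }
  return "?";
}

// Group entries by namespace + shape and return the n slowest shapes
// ranked by average duration.
function topShapes(entries, n = 5) {
  const groups = new Map();
  for (const { ns, filter, millis } of entries) {
    const key = `${ns} ${JSON.stringify(shapeOf(filter))}`;
    const g = groups.get(key) || { shape: key, count: 0, totalMs: 0 };
    g.count += 1;
    g.totalMs += millis;
    groups.set(key, g);
  }
  return [...groups.values()]
    .map((g) => ({ shape: g.shape, count: g.count, avgMs: g.totalMs / g.count }))
    .sort((a, b) => b.avgMs - a.avgMs)
    .slice(0, n);
}

// Usage with made-up entries.
const entries = [
  { ns: "shop.orders", filter: { status: "paid", userId: 42 }, millis: 300 },
  { ns: "shop.orders", filter: { userId: 7, status: "new" }, millis: 500 },
  { ns: "shop.users", filter: { email: "a@example.com" }, millis: 120 },
];
const top = topShapes(entries, 1);
console.log(top[0].shape, top[0].avgMs);
// prints shop.orders {"status":"?","userId":"?"} 400
```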

Analyzing the key fields of slow logs

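| Key field | Meaning |
| --- | --- |
| command | The request operation recorded in the slow log. |
| COLLSCAN | The query performed a full collection scan. If fewer than 100 rows are scanned, a full collection scan is still fast. |
| IXSCAN | The query performed an index scan; the index actually used is printed after this keyword. A collection may have several indexes. If the index shown here is not the one you expect, consider optimizing the indexes or rewriting the query with hint(). |
| keysExamined | The number of index entries scanned. For example, "keysExamined" : 0 means MongoDB scanned 0 index keys to perform the operation. |
| docsExamined | The number of documents in the collection that were scanned. |
| planSummary | A summary of how the query was executed. MongoDB generates an execution plan for each query, containing details such as the index used, the number of documents scanned, and the execution time. For example, "planSummary" : "IXSCAN { a: 1, _id: -1 }" means MongoDB used an index scan (IXSCAN) on the single compound index { a: 1, _id: -1 }, which indexes field a in ascending order (1) and _id in descending order (-1). This is a common plan and indicates that the query used an index to obtain the data it needed. |
| numYields | The number of times the operation yielded its lock during execution. When an operation has to wait for resources (for example, disk I/O or a lock), it may give up control so that other operations can proceed; this is called yielding. A higher numYields value usually indicates heavier system load, because the operation needs more time to complete. Typically, operations that search for documents (queries, updates, and deletes) can yield, and an operation yields its lock only when other operations are queued waiting for a lock it holds. Reducing unnecessary yields improves concurrency and throughput and reduces lock contention, which makes the system more stable and reliable. |
| nreturned | The number of documents returned by the query. The larger this value, the more rows are returned. If keysExamined is large but nreturned is small, the index needs to be optimized. |
| millis | The time, in milliseconds, from the start to the end of the MongoDB operation. The larger this value, the slower the execution. |
| IDHACK | A fast path for queries and updates. Every MongoDB document has an _id field, which is a unique identifier. When a query or update specifies an exact-match _id value, MongoDB can use IDHACK to look up the document directly in the _id index, skipping normal query planning and without scanning the entire collection. |
| FETCH | A plan stage in which MongoDB retrieves full documents, usually using the record locations returned by a preceding index scan. Documents read in this stage count toward docsExamined. In general, the fewer documents that need to be fetched, the better the query performs, because MongoDB relies on the index as much as possible and reads fewer documents. |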
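To check these fields across many log entries, it helps to extract them programmatically. The sketch below assumes the legacy plain-text log format (MongoDB earlier than 4.4), in which fields appear as `name:value` and the line ends with the duration, as in the example log shown under Solutions; it is a hypothetical helper, not part of any MongoDB or Tencent Cloud tool. For 4.4 and later, the same values are available as properties of the JSON `attr` object.

```javascript
// Minimal sketch: pull the key fields from one legacy plain-text mongod
// slow log line. Missing fields come back as null.
function parseSlowLogLine(line) {
  const num = (name) => {
    const m = new RegExp(`\\b${name}:(\\d+)`).exec(line);
    return m ? Number(m[1]) : null;
  };
  // planSummary runs until the next known counter field.
  const plan = /planSummary: (.+?) (?:cursorid|keysExamined|docsExamined):/.exec(line);
  const ms = /(\d+)ms\s*$/.exec(line);
  return {
    planSummary: plan ? plan[1] : null,
    keysExamined: num("keysExamined"),
    docsExamined: num("docsExamined"),
    numYields: num("numYields"),
    nreturned: num("nreturned"),
    millis: ms ? Number(ms[1]) : null,
  };
}

// Usage with a shortened version of a COLLSCAN getMore log line.
const logLine =
  "command tcoverage.ogid_mapping_info command: getMore { getMore: 107924518157 } " +
  "planSummary: COLLSCAN cursorid:107924518157 keysExamined:0 docsExamined:179370 " +
  "numYields:1401 nreturned:179370 reslen:16777323 protocol:op_query 102ms";
console.log(JSON.stringify(parseSlowLogLine(logLine)));
// prints {"planSummary":"COLLSCAN","keysExamined":0,"docsExamined":179370,"numYields":1401,"nreturned":179370,"millis":102}
```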

Solutions

Clearing slow queries

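1. Select the Database Management > Slow Query Management tab. The list shows the requests currently executing on the instance (including requests on secondary nodes). You can click Batch Kill to kill unwanted slow query requests. For details, see Slow Log Management.

2. For unexpected requests, you can kill them directly on the Real-Time Session page under Diagnosis and Optimization in TencentDB for DBbrain (DBbrain). For details, see Real-Time Session.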

SQL throttling

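For TencentDB for MongoDB 4.0, you can create an SQL throttling task on the SQL Throttling page under Diagnosis and Optimization in TencentDB for DBbrain (DBbrain), specifying the SQL type, maximum concurrency, throttling duration, and SQL keywords. This limits the database's request volume and SQL concurrency and helps keep the service available. For details and use cases, see SQL Throttling.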

Using indexes

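If you are using slow SQL analysis in TencentDB for DBbrain (DBbrain), check in the slow log list whether the number of scanned rows is very large; a large value indicates requests with large scans or long-running requests.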

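If the increase in slow queries is caused by full collection scans, create indexes to reduce collection scans, in-memory sorts, and similar work. To create indexes, see Indexes in the official MongoDB documentation.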

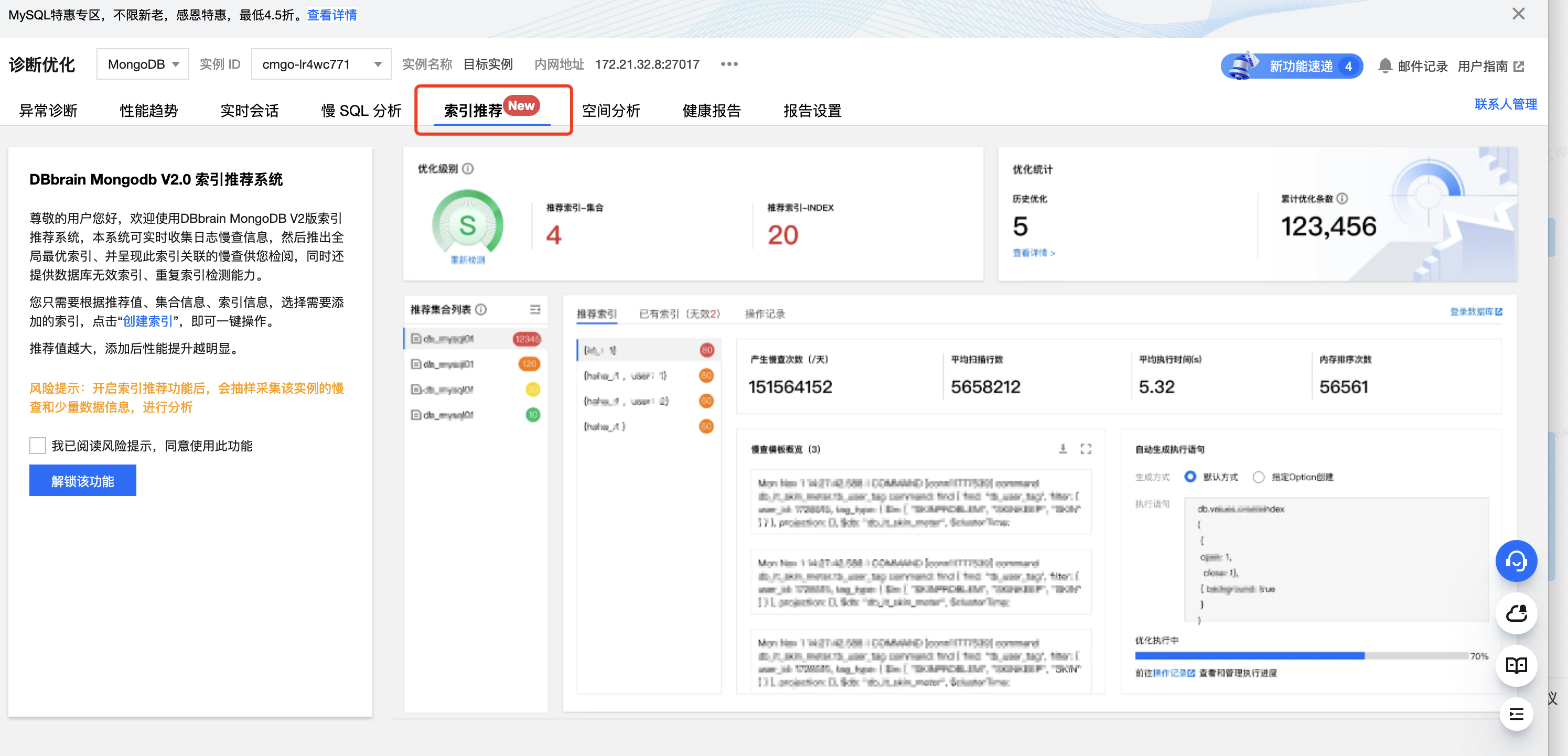

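If the collection has indexes but the number of index rows scanned is 0 while the number of scanned rows is greater than 0, the indexes need to be optimized. Use the Index Recommendation feature of TencentDB for DBbrain (DBbrain) to select the optimal index. Index Recommendation collects slow query information from real-time logs, analyzes it automatically, recommends globally optimal indexes, and ranks them by performance impact: the higher the recommendation score, the greater the performance improvement after applying the index.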

If you are analyzing the slow logs directly, handle each case as follows.

keysExamined = 0, docsExamined > 0, and planSummary is COLLSCAN: a full collection scan was performed, causing noticeable query latency, as in the following example. To create indexes, see Indexes in the official MongoDB documentation.

Thu Mar 24 01:03:01.099 I COMMAND [conn8976420] command tcoverage.ogid_mapping_info command: getMore { getMore: 107924518157, collection: "ogid_mapping_info", $db: "tcoverage" } originatingCommand: { find: "ogid_mapping_info", skip: 0, $readPreference: { mode: "secondaryPreferred" }, $db: "tcoverage" } planSummary: COLLSCAN cursorid:107924518157 keysExamined:0 docsExamined:179370 numYields:1401 nreturned:179370 reslen:16777323 locks:{ Global: { acquireCount: { r: 1402 } }, Database: { acquireCount: { r: 1402 } }, Collection: { acquireCount: { r: 1402 } } } protocol:op_query 102ms

keysExamined > 0, docsExamined > 0, and planSummary is IXSCAN: some of the query conditions or returned fields are not contained in the index, so the index needs to be optimized. Use the Index Recommendation feature of TencentDB for DBbrain (DBbrain) to select the optimal index.

keysExamined > 0, docsExamined = 0, and planSummary is IXSCAN: the query conditions and returned fields are all contained in the index. If keysExamined is very large, consider optimizing the field order of the index or adding a more suitable index for filtering. For more information, see Optimizing Indexes to Resolve Read/Write Performance Bottlenecks.
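The three slow log cases above can be restated as a small decision function, which is handy when triaging many parsed log entries at once. This is a hypothetical sketch that simply encodes the rules in this section; it assumes the fields have already been extracted into an object, and any plan it does not recognize (for example IDHACK) falls through to a default message.

```javascript
// Encode the keysExamined / docsExamined / planSummary rules from this
// section. Input fields are assumed to be already parsed numbers/strings.
function diagnose({ planSummary, keysExamined, docsExamined }) {
  const plan = planSummary || "";
  if (plan.startsWith("COLLSCAN") && keysExamined === 0 && docsExamined > 0) {
    return "full collection scan: create an index";
  }
  if (plan.startsWith("IXSCAN") && keysExamined > 0 && docsExamined > 0) {
    return "index does not cover all query conditions or returned fields: optimize the index";
  }
  if (plan.startsWith("IXSCAN") && keysExamined > 0 && docsExamined === 0) {
    // Covered by the index; a large keysExamined still means poor selectivity.
    return "covered by index: if keysExamined is large, reorder index fields or add a more suitable index";
  }
  return "no rule matched";
}

// Usage with the values from the COLLSCAN example log line.
console.log(diagnose({ planSummary: "COLLSCAN", keysExamined: 0, docsExamined: 179370 }));
// prints full collection scan: create an index
```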

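If your MongoDB version is earlier than 4.2 and you have confirmed that the indexes used by your queries are fine, check whether an index is being created in the foreground during peak business hours. If so, create it in the background instead.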

Note:

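Foreground index creation: in MongoDB versions earlier than 4.2, indexes on a collection are created in the foreground by default, that is, with the background option set to false. This operation blocks all other operations on the database until the foreground index build completes.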

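Background index creation: set the background option to true. While the index is being built, MongoDB continues to serve read and write operations normally. However, building an index in the background may take longer. For how to create indexes, see the official MongoDB documentation.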
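As a concrete illustration of the background option, the sketch below builds a `createIndexes` command document and sets `background: true` only for servers earlier than 4.2; starting in 4.2, MongoDB uses an optimized build process and ignores the option, so it is omitted there. The helper name, collection, index key, and version strings are hypothetical examples; the command document shape (`createIndexes`, `indexes`, `key`, `name`, `background`) follows the MongoDB createIndexes command.

```javascript
// Hypothetical helper: build a createIndexes command document.
// serverVersion is a string such as "4.0.3".
function createIndexesCommand(collection, key, name, serverVersion) {
  const [major, minor] = serverVersion.split(".").map(Number);
  const before42 = major < 4 || (major === 4 && minor < 2);
  const index = { key, name };
  if (before42) {
    // Pre-4.2: avoid a blocking foreground build.
    index.background = true;
  }
  return { createIndexes: collection, indexes: [index] };
}

console.log(JSON.stringify(createIndexesCommand("orders", { status: 1 }, "status_1", "4.0.3")));
// prints {"createIndexes":"orders","indexes":[{"key":{"status":1},"name":"status_1","background":true}]}
```

In the MongoDB shell, the resulting document could be passed to `db.getSiblingDB("shop").runCommand(...)`, where `shop` stands for your database name.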

For a background index build, you can run the currentOp command to check the progress of index creation:

db.adminCommand( { currentOp: true, $or: [ { op: "command", "command.createIndexes": { $exists: true } }, { op: "none", "msg" : /^Index Build/ } ] } )
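The currentOp command returns its results in an `inprog` array. The sketch below applies the same filter as the command above to such an array and converts each entry's progress into a percentage. It assumes index-build entries carry a `progress: { done, total }` sub-document, which index builds typically report; check the actual output of your MongoDB version. The function name and sample data are hypothetical.

```javascript
// Minimal sketch: keep index-build entries from a currentOp "inprog" array
// (same predicate as the $or filter above) and report percent complete.
function indexBuildProgress(inprog) {
  return inprog
    .filter(
      (op) =>
        (op.op === "command" && op.command && op.command.createIndexes !== undefined) ||
        (op.op === "none" && /^Index Build/.test(op.msg || ""))
    )
    .map((op) => ({
      opid: op.opid,
      ns: op.ns,
      // null when the entry reports no usable progress counters.
      percent:
        op.progress && op.progress.total > 0
          ? Math.round((op.progress.done / op.progress.total) * 100)
          : null,
    }));
}

// Usage with made-up currentOp entries.
const inprogSample = [
  { opid: 1, op: "query", ns: "shop.orders" },
  { opid: 2, op: "command", ns: "shop.orders", command: { createIndexes: "orders" }, progress: { done: 25, total: 100 } },
];
console.log(JSON.stringify(indexBuildProgress(inprogSample)));
// prints [{"opid":2,"ns":"shop.orders","percent":25}]
```

In the MongoDB shell, you could call it as `indexBuildProgress(db.adminCommand({ currentOp: true, ... }).inprog)` with the same filter as above.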
