Cluster Auto Scaling Practices

Last updated: 2024-12-13 21:25:13

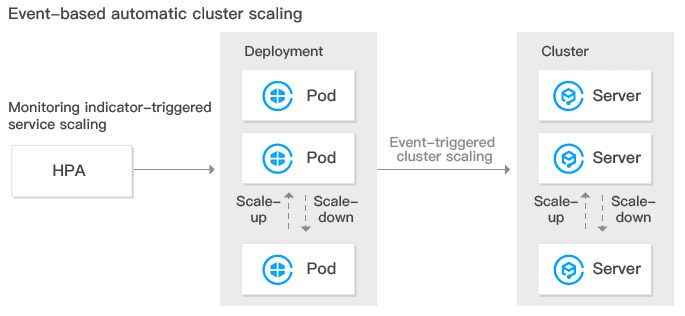

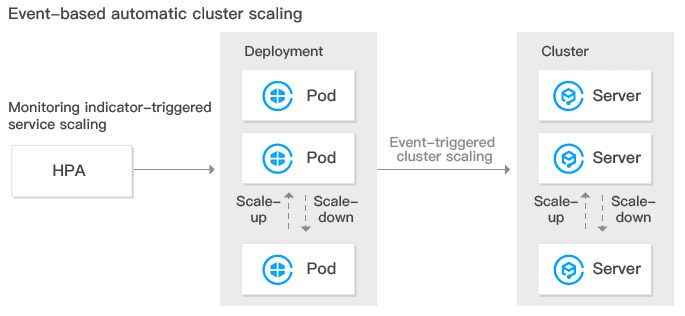

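Tencent Kubernetes Engine (TKE) provides auto scaling at two levels: the cluster and the service. At the service level, it monitors container metrics such as CPU, memory, and bandwidth and scales services automatically according to how the workload is running. At the cluster level, it scales the cluster automatically based on how containers are deployed, adding nodes when containers cannot be allocated enough resources and removing nodes when too many resources sit idle, as illustrated in the figures above.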

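Features of Cluster Auto Scaling

TKE lets you enable auto scaling for a cluster to manage computing resources efficiently. You set scaling policies according to your business needs, and cluster auto scaling has the following features:

Cloud Virtual Machine (CVM) instances are created and released automatically and in real time based on business load, so the cluster handles the load with the most suitable number of instances. No manual intervention is needed at any stage, which removes the burden of manual deployment.

The cluster handles the load with the most suitable node resources. When demand grows, an appropriate number of CVM instances are added to the cluster seamlessly. When demand falls, unneeded CVM instances are removed automatically, which raises resource utilization and saves deployment and instance costs.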

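Cluster Auto Scaling Functions

Basic Kubernetes cluster autoscaling functions

Multiple scaling groups can be configured.

Scale-out and scale-in policies can be configured. For details, see Cluster Autoscaler.

TKE scaling group extensions

A scaling group can be created with a new instance type (recommended).

A scaling group can be created using a node in the cluster as the template.

Spot instance scaling groups are supported (recommended).

When an instance type is sold out, a suitable scaling group is matched automatically.

Scaling groups can be configured across availability zones.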

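Cluster Auto Scaling Limits

The number of nodes that can be added is limited by the VPC, the container network, the TKE cluster node quota, and the quota of purchasable CVM instances.

Scale-out is limited by the current availability of the instance type. If an instance type is sold out, nodes cannot be added, so configuring multiple scaling groups is recommended.

Request values must be set for the containers in your workloads. Automatic scale-out is triggered when the cluster contains Pods that cannot be scheduled because of insufficient resources, and whether resources are sufficient is judged from the Pods' requests.

We recommend not enabling node auto scaling based on monitoring metrics.

Deleting a scaling group also terminates the CVM instances in it. Proceed with caution.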
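The request-based trigger described above can be illustrated with a minimal Python sketch. It is not the Cluster Autoscaler implementation: the `Resources` type and the `fits` and `needs_scale_out` functions are hypothetical names introduced here, and each pending Pod is checked independently against the free capacity of each node, ignoring every other scheduling constraint.

```python
from dataclasses import dataclass


@dataclass
class Resources:
    """Hypothetical container for CPU and memory amounts."""
    cpu_millicores: int
    memory_mib: int


def fits(request: Resources, free: Resources) -> bool:
    """A Pod fits on a node only if its requests fit in the node's free capacity."""
    return (request.cpu_millicores <= free.cpu_millicores
            and request.memory_mib <= free.memory_mib)


def needs_scale_out(pending_requests, free_per_node) -> bool:
    """True if at least one pending Pod fits on none of the existing nodes."""
    return any(
        not any(fits(req, free) for free in free_per_node)
        for req in pending_requests
    )


# Free capacity on two existing nodes (illustrative numbers).
nodes = [Resources(500, 1024), Resources(250, 4096)]

print(needs_scale_out([Resources(400, 512)], nodes))   # False: fits on the first node
print(needs_scale_out([Resources(1000, 512)], nodes))  # True: fits on no node
print(needs_scale_out([Resources(0, 0)], nodes))       # False: no request, never "too big"
```

The last call shows why requests matter: a Pod modelled with zero requests always appears to fit, so in this sketch it can never trigger scale-out no matter how many resources it actually consumes.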

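Cluster Scaling Group Configuration

Multiple scaling groups (recommended)

When a cluster has multiple scaling groups, the autoscaling component picks a scaling group according to the scale-out algorithm you selected, one group at a time. If scale-out fails for the target group, for example because the instance type is sold out, that group is put to sleep for a period and the next best-matching group is selected for scale-out. The scale-out algorithms are as follows:

Random: picks a scaling group at random.

Most-pods: based on the currently pending Pods and each group's instance type, picks the scaling group that can schedule the most Pods.

Least-waste: based on the currently pending Pods and each group's instance type, picks the scaling group that leaves the fewest resources unused after the Pods are scheduled.

We recommend configuring multiple scaling groups with different instance types in the cluster so that one sold-out instance type does not block scale-out. You can also mix spot and regular instance types to reduce costs.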
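The three scale-out algorithms and the fallback to the next best group can be sketched in Python as follows. This is an illustration under stated assumptions, not the component's actual code: `Group`, `pods_that_fit`, `waste`, `rank`, and `choose_group` are hypothetical names, Pods are packed greedily onto a single new node, waste is taken as the average unused fraction of CPU and memory, and a sold-out group is simply skipped rather than put to sleep for a period.

```python
import random
from dataclasses import dataclass


@dataclass
class Group:
    """Hypothetical scaling group: one instance type with fixed node capacity."""
    name: str
    cpu: int      # millicores per node
    memory: int   # MiB per node
    sold_out: bool = False


def pods_that_fit(group, pods):
    """Greedily pack pending Pods, given as (cpu, memory) requests, onto one new node."""
    cpu_left, mem_left, count = group.cpu, group.memory, 0
    for cpu, mem in pods:
        if cpu <= cpu_left and mem <= mem_left:
            cpu_left -= cpu
            mem_left -= mem
            count += 1
    return count, cpu_left, mem_left


def waste(group, pods):
    """Unused fraction of the node after packing, averaged over CPU and memory."""
    _, cpu_left, mem_left = pods_that_fit(group, pods)
    return (cpu_left / group.cpu + mem_left / group.memory) / 2


def rank(groups, pods, strategy, rng=random):
    """Order the groups from best to worst match for the chosen strategy."""
    if strategy == "random":
        return rng.sample(groups, len(groups))
    if strategy == "most-pods":
        return sorted(groups, key=lambda g: -pods_that_fit(g, pods)[0])
    if strategy == "least-waste":
        return sorted(groups, key=lambda g: waste(g, pods))
    raise ValueError(f"unknown strategy: {strategy}")


def choose_group(groups, pods, strategy):
    """Return the best-ranked group that is usable, falling back down the ranking."""
    for group in rank(groups, pods, strategy):
        if group.sold_out or pods_that_fit(group, pods)[0] == 0:
            continue  # sold out, or a new node of this type would not help
        return group
    return None


groups = [Group("small", 2000, 4096), Group("large", 8000, 16384)]
pending = [(1000, 2048)] * 3  # three identical pending Pods

print(choose_group(groups, pending, "most-pods").name)    # large
print(choose_group(groups, pending, "least-waste").name)  # small
```

With these numbers, a "large" node holds all three Pods while a "small" node holds two and is left completely full, so the two strategies disagree. Marking "small" as sold out makes least-waste fall back to "large", which is the behaviour the multi-group recommendation relies on.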

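Single scaling group

If you accept only one instance type for scale-out, we recommend configuring the scaling group across multiple subnets in different availability zones.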
