应用高可用部署

最后更新时间:2024-12-19 21:49:45

高可用性(High Availability,HA)是指应用系统无中断运行的能力,通常可通过提高该系统的容错能力来实现。一般情况下,通过设置

replicas 给应用创建多个副本,可以适当提高应用容错能力,但这并不意味着应用就此实现高可用性。本文为部署应用高可用的最佳实践,通过以下方式实现高可用性。您可结合实际情况,选择多种方式进行部署:

将业务工作负载打散调度

1. 使用反亲和性避免单点故障

Kubernetes 的设计理念为假设节点不可靠,节点越多,发生软硬件故障导致节点不可用的几率就越高。所以我们通常需要给应用部署多个副本,并根据实际情况调整

replicas 的值。该值如果为1 ,就必然存在单点故障。该值如果大于1但所有副本都调度到同一个节点,仍将无法避免单点故障。 为了避免单点故障,我们需要有合理的副本数量,还需要让不同副本调度到不同的节点。可以利用反亲和性来实现,示例如下:

affinity:podAntiAffinity:requiredDuringSchedulingIgnoredDuringExecution:- weight: 100labelSelector:matchExpressions:- key: k8s-appoperator: Invalues:- kube-dnstopologyKey: kubernetes.io/hostname

示例相关配置如下:

requiredDuringSchedulingIgnoredDuringExecution

此为反亲和性硬性条件,强调 Pod 调度时必须要满足该条件。当不存在满足该条件的节点时,Pod 将不会调度到任何节点(Pending)。如果不使用这种硬性条件,也可以使用

preferredDuringSchedulingIgnoredDuringExecution 来指示调度器尽量满足反亲和性条件。当不存在满足该条件的节点时,Pod 也可以调度到某个节点。 labelSelector.matchExpressions

标记该服务对应 Pod 中 labels 的 key 与 values。

topologyKey

本示例中使用

kubernetes.io/hostname,表示避免 Pod 调度到同一节点。如果您有更高的要求,例如避免调度到同一个可用区的节点,实现异地多活,则可以使用 failure-domain.beta.kubernetes.io/zone。但通常情况下,同一个集群的节点都在一个地域。如果节点跨地域,即使使用专线,时延也会很大。如果无法避免调度到同一个地域的节点,则可以使用 failure-domain.beta.kubernetes.io/region。2. 使用 topologySpreadConstraints

topologySpreadConstraints 特性在 K8S v1.18 默认启用,建议 v1.18 及其以上的集群使用

topologySpreadConstraints 来打散 Pod 的分布以提高服务可用性。将 Pod 最大程度上均匀的打散调度到各个节点上:

例如:将所有 nginx 的 Pod 严格均匀打散调度到不同节点上,不同节点上 nginx 的副本数量最多只能相差 1 个,若有节点因其它因素无法调度更多的 Pod (如资源不足),剩余的 nginx 副本 Pending。

apiVersion: apps/v1kind: Deploymentmetadata:labels:k8s-app: nginxqcloud-app: nginxname: nginxnamespace: defaultspec:replicas: 1selector:matchLabels:k8s-app: nginxqcloud-app: nginxtemplate:metadata:labels:k8s-app: nginxqcloud-app: nginxspec:topologySpreadConstraints:- maxSkew: 1whenUnsatisfiable: DoNotScheduletopologyKey: topology.kubernetes.io/regionlabelSelector:matchLabels:k8s-app: nginxcontainers:- image: nginxname: nginxresources:limits:cpu: 500mmemory: 1Girequests:cpu: 250mmemory: 256MidnsPolicy: ClusterFirst

topologyKey: 与 podAntiAffinity 中配置类似。

labelSelector: 与 podAntiAffinity 中配置类似,只是这里可以支持选中多组 pod 的 label。

maxSkew: 必须是大于零的整数,表示能容忍不同拓扑域中 Pod 数量差异的最大值。这里的 1 意味着只允许相差 1 个 Pod。

whenUnsatisfiable: 指示不满足条件时如何处理。DoNotSchedule 不调度 (保持 Pending),类似强反亲和;ScheduleAnyway 表示要调度,类似弱反亲和, 将 Pod 尽量均匀的打散调度到各个节点上,不强制 (DoNotSchedule 改为 ScheduleAnyway):

spec:topologySpreadConstraints:- maxSkew: 1whenUnsatisfiable: ScheduleAnywaytopologyKey: topology.kubernetes.io/regionlabelSelector:matchLabels:k8s-app: nginx

若集群节点支持跨可用区,可将 Pod 尽量均匀的打散调度到各个可用区 以实现更高级别的高可用 (topologyKey 改为 topology.kubernetes.io/zone):

spec:topologySpreadConstraints:- maxSkew: 1topologyKey: topology.kubernetes.io/zonewhenUnsatisfiable: ScheduleAnywaylabelSelector:matchLabels:k8s-app:: nginx

更进一步地,可以将 Pod 尽量均匀的打散调度到各个可用区的同时,在可用区内部各节点也尽量打散:

spec:topologySpreadConstraints:- maxSkew: 1whenUnsatisfiable: ScheduleAnywaytopologyKey: topology.kubernetes.io/zonelabelSelector:matchLabels:k8s-app: nginx- maxSkew: 1whenUnsatisfiable: ScheduleAnywaytopologyKey: kubernetes.io/hostnamelabelSelector:matchLabels:k8s-app: nginx

使用置放群组从物理层面实现容灾

当云服务器底层硬件或软件故障时,可能导致多台节点同时异常,即使利用反亲和性将 Pod 打散到不同节点上,可能仍无法避免业务异常。可使用 置放群组 将节点从物理机、交换机或机架三种物理层面其中一种进行打散,以避免底层硬件或软件故障造成节点批量异常。操作步骤如下:

注意:

置放群组需与 TKE 独立集群在同一地域。

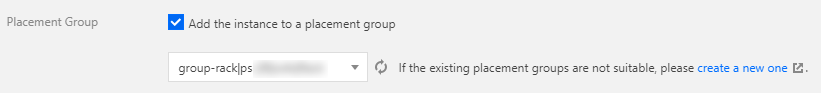

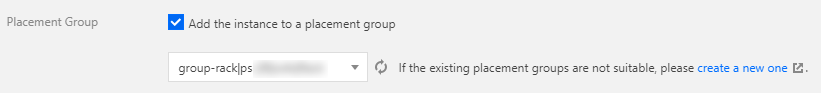

2. 批量添加节点,勾选“高级设置”中的“将实例添加到分散置放群组”,并选择已创建的置放群组。详情请参见 新增节点。如下图所示:

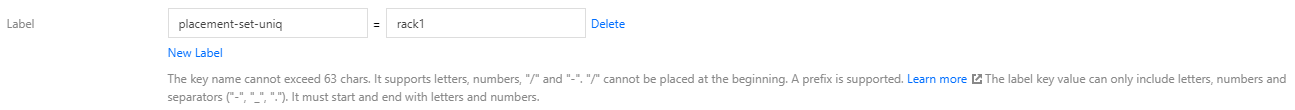

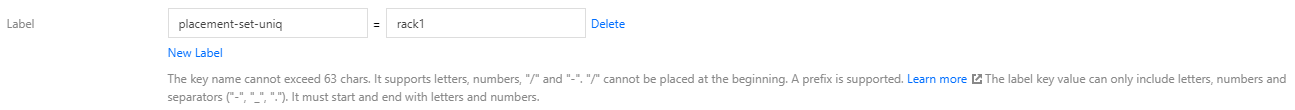

3. 在“节点列表”页面为该批节点编辑相同的 label 进行标识,这些节点是置放群组中某个同一批次添加的节点。如下图所示:

注意:

置放群组的策略仅对同一批次的节点生效,即需为每一批次的节点增加 label 并指定不同的值来进行标识。

4. 给需要部署的工作负载的 Pod 指定节点亲和性,指定部署在这一批节点上,同时也指定 Pod 反亲和,将 Pod 在这批节点中尽量打散调度。YAML 示例如下:

affinity:nodeAffinity:requiredDuringSchedulingIgnoredDuringExecution:nodeSelectorTerms:- matchExpressions:- key: "placement-set-uniq"operator: Invalues:- "rack1"podAntiAffinity:preferredDuringSchedulingIgnoredDuringExecution:- weight: 100podAffinityTerm:labelSelector:matchExpressions:- key: appoperator: Invalues:- nginxtopologyKey: kubernetes.io/hostname

使用 PodDisruptionBudget 避免驱逐导致服务不可用

驱逐节点是一种有损操作,该操作的过程如下:

1. 封锁节点(设置为不可调度,避免新的 Pod 调度上来)。

2. 删除该节点上的 Pod。

3. ReplicaSet 控制器检测到 Pod 减少,会重新创建一个 Pod,调度到新的节点上。

该过程是先删除再创建,并非滚动更新。因此在更新过程中,如果一个服务的所有副本都在被驱逐的节点上,则可能导致该服务不可用。通常,节点被驱逐导致服务不可用的情况会有以下两种:

1. 服务存在单点故障,所有副本都在同一个节点,驱逐该节点时可能造成服务不可用。 针对此情况,可参考 使用反亲和性避免单点故障 进行处理。

2. 服务在多个节点,但这些节点被同时驱逐,造成该服务涉及的所有副本同时被删,可能造成服务不可用。 针对此情况,可通过配置 PDB(PodDisruptionBudget)来避免所有副本同时被删除。示例如下:

保证驱逐时 zookeeper 至少有两个副本可用。

apiVersion: policy/v1beta1kind: PodDisruptionBudgetmetadata:name: zk-pdbspec:minAvailable: 2selector:matchLabels:app: zookeeper

保证驱逐时 zookeeper 最多有一个副本不可用,相当于逐个删除并在其它节点重建。

apiVersion: policy/v1beta1kind: PodDisruptionBudgetmetadata:name: zk-pdbspec:maxUnavailable: 1selector:matchLabels:app: zookeeper

使用 preStopHook 和 readinessProbe 保证服务平滑更新不中断

如果服务不做配置优化,默认情况下更新服务期间可能会产生部分流量异常,请参考以下步骤进行部署。

服务更新场景

通常情况下,服务更新场景会包含以下几种:

手动调整服务的副本数量。

手动删除 Pod 触发重新调度。

驱逐节点(主动或被动驱逐,会先删除 Pod 并在其它节点重建)。

触发滚动更新(例如,修改镜像 tag 升级程序版本)。

HPA(HorizontalPodAutoscaler)自动对服务进行水平伸缩。

VPA(VerticalPodAutoscaler)自动对服务进行垂直伸缩。

服务更新过程连接异常的原因

滚动更新时,Service 对应的 Pod 会被创建或销毁,Service 对应的 Endpoint 也会新增或移除相应的

Pod IP:Port,kube-proxy 会根据 Service 的 Endpoint 中的 Pod IP:Port 列表更新节点上的转发规则,而 kube-proxy 更新节点转发规则的动作并不是及时的。 转发规则更新不及时这一现象的出现,主要由于 Kubernetes 的设计理念中各个组件的逻辑是解耦的,它们各自使用 Controller 模式,listAndWatch 感兴趣的资源并做出相应的行为,使得从 Pod 创建或销毁到 Endpoint 更新再到节点上的转发规则更新的整个过程是异步的。

当转发规则没有及时更新时,服务更新期间就有可能发生部分连接异常。以下通过分析 Pod 创建和销毁到规则更新期间的两种情况,寻找服务更新期间部分连接异常发生的原因:

情况一:Pod 被创建,但启动速度较慢,Pod 还未完全启动就被 Endpoint Controller 加入到 Service 对应 Endpoint 的

Pod IP:Port 列表,kube-proxy watch 到更新也同步更新了节点上的 Service 转发规则(iptables/ipvs),如果此时有请求就可能被转发到还没完全启动完全的 Pod,此时 Pod 还无法正常处理请求,就会导致连接被拒绝。 情况二:Pod 被销毁,但是从 Endpoint Controller watch 到变化并更新 Service 对应 Endpoint 再到 kube-proxy 更新节点转发规则这期间是异步的,存在时间差,在这个时间差内 Pod 可能已经完全被销毁了,但转发规则还未更新,就会造成新的请求依旧还能被转发到已经被销毁的 Pod,导致连接被拒绝。

平滑更新

针对 情况一,可以给 Pod 中的 container 添加 readinessProbe(就绪检查)。通常是容器完全启动后监听一个 HTTP 端口,kubelet 发送就绪检查探测包,若正常响应则说明容器已经就绪,并将容器状态修改为 Ready。当 Pod 中所有容器都 Ready 时,该 Pod 才会被 Endpoint Controller 加入 Service 对应 Endpoint 中的

IP:Port 列表,kube-proxy 再更新节点转发规则,完成更新后即使立刻有请求被转发到的新的 Pod,也能够确保正常处理连接,避免连接异常。 针对 情况二,可以给 Pod 中的 container 添加 preStop hook,使 Pod 真正销毁前先 sleep 等待一段时间,留出时间给 Endpoint controller 和 kube-proxy 更新 Endpoint 和转发规则,这段时间 Pod 处于 Terminating 状态,即便在转发规则更新完全之前有请求被转发到 Terminating 的 Pod,依然可以被正常处理,因为 Pod 还在 sleep 没有被真正销毁。

Yaml 示例如下:

apiVersion: extensions/v1beta1kind: Deploymentmetadata:name: nginxspec:replicas: 1selector:matchLabels:component: nginxtemplate:metadata:labels:component: nginxspec:containers:- name: nginximage: "nginx"ports:- name: httphostPort: 80containerPort: 80protocol: TCPreadinessProbe:httpGet:path: /healthzport: 80httpHeaders:- name: X-Custom-Headervalue: AwesomeinitialDelaySeconds: 15timeoutSeconds: 1lifecycle:preStop:exec:command: ["/bin/bash", "-c", "sleep 30"]

文档反馈