Data Lake Compute (DLC) delivers agile, high-efficiency data lake analytics and computing services. Built on a serverless architecture, it is ready to use out of the box. You can perform data processing, multi-source federated computing, and other data tasks with standard SQL syntax. This reduces the cost of building and using data analytics services and improves enterprise data agility.

Data Lake Compute

A next-generation, cloud-native, agile data lake analysis service.

Data Lake Compute uses a big data analysis architecture that separates storage from computing. Containerized big data components enable fast, flexible deployment, and cloud object storage allows virtually unlimited scaling. Its cloud-native elastic model adapts to almost any type of business workload and helps reduce your costs. As a cost-effective and highly elastic cloud data lake solution, it helps you unify data assets and maximize performance for agile and innovative business applications.

Typical Use Cases

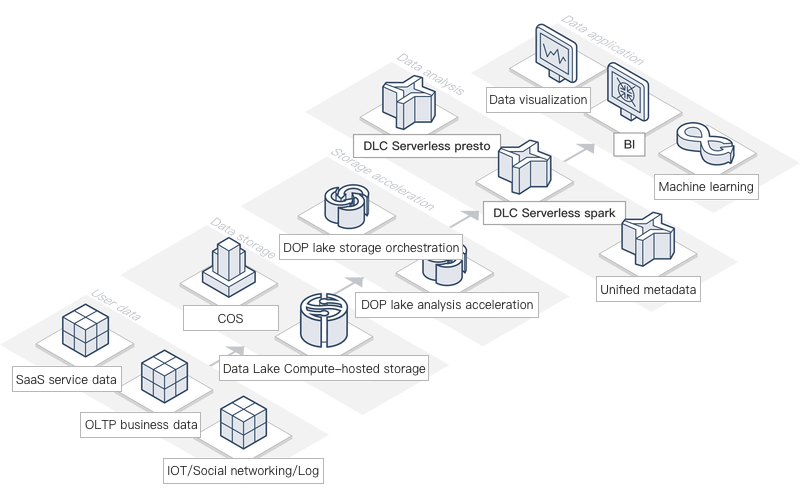

Batch Log Query

Enterprise log data is typically stored as JSON and text files. You can store these files in Cloud Object Storage (COS) and use standard SQL statements in Data Lake Compute to batch query and analyze massive amounts of data and quickly generate data reports. This makes your data easier to visualize and boosts your productivity. With a few simple configurations, you can also import data from a cloud-based log service into a data lake for agile analysis.

Service Benefits

- Cost-effective: Data Lake Compute is pay-as-you-go, allowing you to precisely control costs through its cloud-native data lake architecture with separated storage and computing.

- Easy-to-use: The unified SQL syntax makes it easy to get started with Data Lake Compute and run queries quickly.

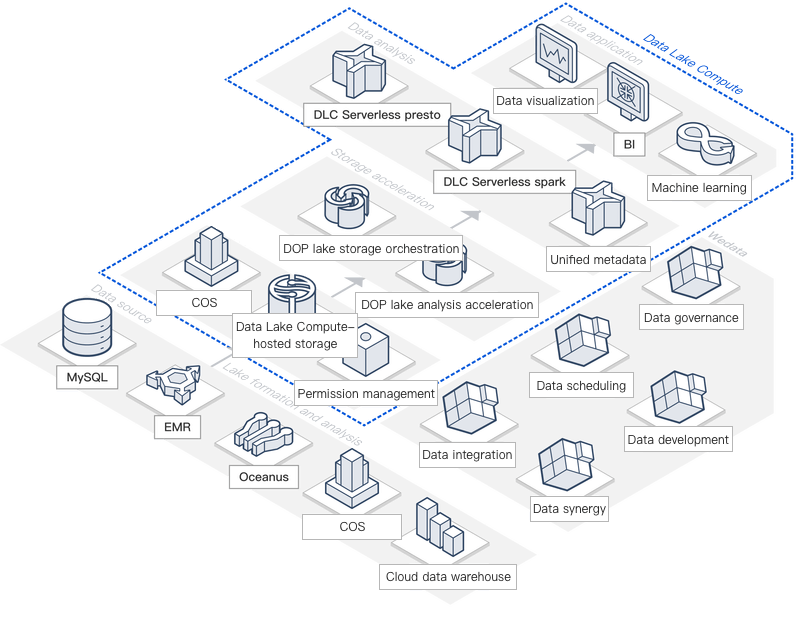

Data Lake Compute is a new data architecture for closed-loop big data analysis that is lightweight, agile, easy-to-use, and cost-effective. Its unified metadata management view lets you break down data silos. It combines the strengths of many cloud-based big data services to handle both real-time and offline data analysis scenarios and solve a wide range of data problems. Because data flows conveniently and quickly between services, it brings together the capabilities and advantages of different cloud services, making it an ideal option for enterprises building a data middle platform.

Typical Use Cases

- Unified Metadata View

Data Lake Compute lets you unify all of your different metadata views into one. You can manage and use metadata from different sources centrally, build your metadata center with agility, and switch between products and versions seamlessly. For example, you can easily reuse the same metadata across products.

- Agile and Versatile Data Analysis

In the big data ecosystem, Presto excels at interactive analysis, while Spark does well at ETL tasks. Data Lake Compute provides unified syntax and lightweight clustering capabilities, so the same data can move seamlessly between engines in different scenarios. It also works with WeData, so data can be imported from and exported to dozens of products and data sources, such as TencentDB and CLS. These convenient data flows let you make the most of each product's strengths.

Service Benefits

- Out-of-the-box: The service is ready to use immediately, eliminating unnecessary Ops tasks and costs.

- Metadata management: Support for multiple data sources unifies metadata management and breaks down data silos.

- Full coverage: Data Lake Compute covers the full range of data analysis and application scenarios, including data integration, collaboration, scheduling, development, and governance.

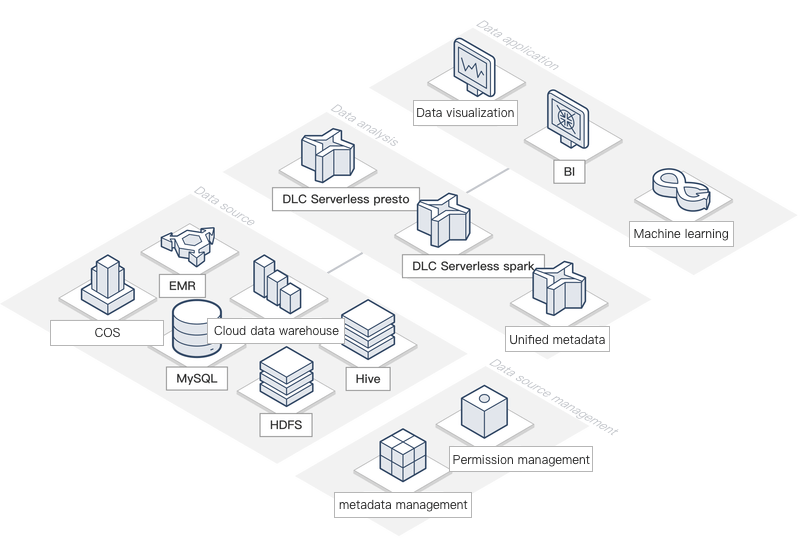

Data Lake Compute helps you transition seamlessly from database scenarios to big data scenarios, where you can query and analyze multi-source heterogeneous data in the cloud from object storage, databases, and other services. Its unified data view and standard SQL capabilities speed up federated data query and analysis, breaking down data silos and fully tapping into the value of your data.

Typical Use Cases

Cross-Business Federated Data Query

Enterprise departments and business lines often use different data architectures for their specific business systems. As a result, business data is scattered across different storage systems: for example, transaction data in relational databases, active data in Redis, and historical records in object storage. With Data Lake Compute, you can align and analyze heterogeneous data from multiple sources together and put your cross-business data to use more quickly.

Service Benefits

- Out-of-the-box: No data transfer pipelines need to be set up, eliminating unnecessary Ops work and costs.

- Secure and efficient: A unified permission management system provides access control down to the column level, while queries remain fast.

- Easy-to-use: Cross-business analysis is easy to implement, with no need to adapt to different programming languages.

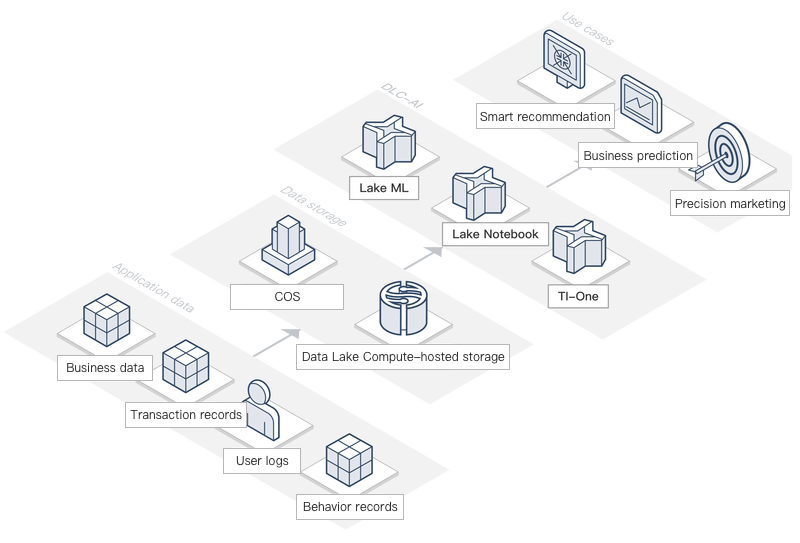

Data Lake Compute also supports AI scenarios, including machine learning and deep learning. Connected to a wealth of AI capabilities and platforms, it supports a wide range of machine learning capabilities and delivers comprehensive solutions for smart data lake analysis applications. It provides free access to multiple industry databases, so you can perform data analysis without first acquiring and cleansing the data yourself. It also provides strong BI capabilities to help you gain data insights through predictive analysis.

Typical Use Cases

Business Growth Empowered by Data

Data Lake Compute offers native machine learning capabilities based on a sophisticated machine learning platform, providing a complete smart analysis solution. It helps solve real-world business problems, such as designing smart recommendation and recall policies, to drive business growth. Machine learning projects often run into problems such as large data volumes, slow model training, and poor algorithm results. With this solution, you can use out-of-the-box machine learning algorithm models to build data-driven models and predict business outcomes. You can also use its BI capabilities for efficient business analysis and improved operational efficiency.

Service Benefits

- Ease of use: The service is seamlessly connected to Tencent Cloud's machine learning platform, giving you access to a wealth of models and APIs.

- Data standardization: Unified data management and governance provide more standardized data for data operations.

Data Lake Compute (DLC) offers pay-as-you-go billing based on scanned data volume or resource usage, and a monthly subscription option is also available. For details, see Billing Overview.