Traffic Throttling

Last updated: 2026-01-23 17:52:23

Overview

Tencent Cloud TDMQ for RocketMQ provides messaging services for online businesses that require large scale, low latency, and high availability. Clients establish persistent connections with RocketMQ clusters through SDKs to send and receive messages, which consumes the computing, storage, and network bandwidth resources of the cluster nodes. To keep the service stable and reliable under high concurrency and heavy traffic, the cluster load must be managed. The server therefore limits, based on the cluster specification, the maximum number of converted messages per second (transactions per second, TPS) that clients can send and consume. For specific calculation rules, see Billing Overview - Computing Specifications. To achieve both isolation and flexibility, the TPS quotas for sending and consuming messages are not shared. Custom quota ratios are also supported (the default send-to-receive ratio is 1:1).
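As a rough illustration of how a cluster specification and a quota ratio translate into separate sending and consumption quotas, the following sketch assumes the ratio divides the cluster TPS proportionally. The class and method names are hypothetical, and the actual rules are the ones in the billing documentation; the 1:1 result simply matches the 1,000 TPS example used later on this page.

```java
/** Illustrative only: splits a cluster TPS specification by a send:receive ratio. */
final class QuotaSplit {

    /** Sending share of the cluster TPS, ASSUMING a proportional split. */
    static long sendQuota(long clusterTps, int sendParts, int receiveParts) {
        return clusterTps * sendParts / (sendParts + receiveParts);
    }

    /** Consumption share: whatever the sending side does not take. */
    static long receiveQuota(long clusterTps, int sendParts, int receiveParts) {
        return clusterTps - sendQuota(clusterTps, sendParts, receiveParts);
    }

    public static void main(String[] args) {
        // 1,000 TPS cluster with the default 1:1 ratio.
        System.out.println("send=" + sendQuota(1000, 1, 1)
                + " receive=" + receiveQuota(1000, 1, 1));
    }
}
```

Because the two quotas are not shared, unused sending quota cannot be borrowed by consumers, and vice versa.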

Description of Traffic Throttling Behavior

TDMQ for RocketMQ employs a fail-fast traffic throttling policy: when a client's request rate reaches the upper limit, the server immediately responds with an error. Online businesses are typically sensitive to response time. The fail-fast approach lets clients detect traffic throttling events promptly and intervene accordingly, which prevents the end-to-end latency of business messages from deteriorating.
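To make the fail-fast idea concrete, here is a minimal sketch of a limiter that rejects excess requests immediately instead of queueing them. It uses a simple one-second counting window purely for illustration; it is not the server's actual algorithm, and the class name and constructor are assumptions.

```java
import java.util.function.LongSupplier;

/** Illustrative fail-fast limiter: rejects at once rather than making callers wait. */
final class FailFastLimiter {
    private final int limitPerSecond;
    private final LongSupplier clockMillis; // injectable clock, handy for testing
    private long windowSecond = -1;
    private int usedInWindow = 0;

    FailFastLimiter(int limitPerSecond, LongSupplier clockMillis) {
        this.limitPerSecond = limitPerSecond;
        this.clockMillis = clockMillis;
    }

    /** Returns true if the request is admitted; false means "fail now". */
    synchronized boolean tryAcquire() {
        long second = clockMillis.getAsLong() / 1000;
        if (second != windowSecond) { // a new one-second window starts
            windowSecond = second;
            usedInWindow = 0;
        }
        if (usedInWindow >= limitPerSecond) {
            return false; // over the limit: reject immediately, no queueing
        }
        usedInWindow++;
        return true;
    }

    public static void main(String[] args) {
        FailFastLimiter limiter = new FailFastLimiter(500, System::currentTimeMillis);
        int rejected = 0;
        for (int i = 0; i < 600; i++) {
            if (!limiter.tryAcquire()) {
                rejected++;
            }
        }
        System.out.println("rejected=" + rejected);
    }
}
```

The caller learns about the rejection in the same call, which is what allows it to back off, degrade, or alert without its own latency growing.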

For a basic cluster with a 1,000 TPS specification, assuming the send-to-receive TPS quota ratio is 1:1, the traffic throttling behavior is described in the following table.

| Description | Traffic Throttling for Message Sending | Traffic Throttling for Message Consumption |
| --- | --- | --- |
| Traffic Throttling Scenario | All sending clients connected to this cluster can send a maximum total of 500 converted messages per second. When the sending rate reaches the limit, any excess sending requests will fail. | All consumption clients connected to this cluster can consume a maximum total of 500 converted messages per second. When the consumption rate reaches the limit, the consumption delay for messages will increase. |
| SDK Log Keywords When Traffic Throttling Is Triggered | Rate of message sending reaches limit, please take a control or upgrade the resource specification. | Rate of message receiving reaches limit, please take a control or upgrade the resource specification. |
| SDK Retry Mechanism When Traffic Throttling Is Triggered | SDKs of different protocols handle this differently. 5.x SDK retries on message sending based on an exponential backoff policy. The maximum number of retries can be customized during producer initialization, with a default value of 2. If a sending request still fails after reaching the maximum number of retries, an exception will be thrown. 4.x SDK directly throws an exception and does not perform any retry. | The SDK's message pulling thread will automatically back off and retry. |
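The log keywords in the table can be matched mechanically, for example when scanning SDK log files for throttling events. The following is a minimal sketch; the class name, the enum, and the sample log line in `main` are hypothetical, and only the two keyword strings come from the table above.

```java
/** Illustrative scanner that classifies an SDK log line by the throttling keywords. */
final class ThrottleLogScanner {
    static final String SEND_KEYWORD = "Rate of message sending reaches limit";
    static final String RECEIVE_KEYWORD = "Rate of message receiving reaches limit";

    enum Kind { SEND, RECEIVE, NONE }

    /** Returns which kind of throttling, if any, the log line reports. */
    static Kind classify(String logLine) {
        if (logLine == null) {
            return Kind.NONE;
        }
        if (logLine.contains(SEND_KEYWORD)) {
            return Kind.SEND;
        }
        if (logLine.contains(RECEIVE_KEYWORD)) {
            return Kind.RECEIVE;
        }
        return Kind.NONE;
    }

    public static void main(String[] args) {
        // Hypothetical log line; real SDK log formats may differ.
        String line = "WARN send failed: " + SEND_KEYWORD
                + ", please take a control or upgrade the resource specification.";
        System.out.println(classify(line));
    }
}
```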

Client Use Cases

Planning Clusters

The purpose of traffic throttling for TDMQ for RocketMQ clusters is to ensure service stability and reliability, preventing issues such as increased service response time and decreased request success rates when the cluster is under high load, thereby avoiding business impact. Therefore, proper cluster planning is crucial when you access TDMQ for RocketMQ. We recommend that you:

Evaluate your business TPS thoroughly based on current scale and future trend projections. If your business traffic is fluctuating, use the peak TPS as the benchmark. Furthermore, we recommend that you reserve a portion of the TPS quota (for example, 30%) during evaluation to handle potential traffic spikes.

For businesses with high stability requirements, use multiple RocketMQ clusters to enhance isolation. For example, you can isolate core business flows (such as trading systems) from non-core flows (such as logging systems), and separate the production environment from development and test environments.
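The headroom recommendation can be turned into a quick sizing calculation. The sketch below reads "reserve 30% of the quota" as "peak traffic should use at most 70% of the quota"; that reading, along with the class and method names, is an assumption. Integer arithmetic is used to avoid floating-point rounding surprises.

```java
/** Illustrative sizing helper: quota needed so that peak TPS leaves the given headroom. */
final class CapacityPlan {

    /**
     * Smallest quota such that peakTps <= quota * (100 - reservePercent) / 100.
     * ASSUMES the reserve is expressed as a percentage of the quota itself.
     */
    static long requiredQuota(long peakTps, int reservePercent) {
        if (peakTps < 0 || reservePercent < 0 || reservePercent >= 100) {
            throw new IllegalArgumentException("peakTps >= 0 and 0 <= reservePercent < 100 required");
        }
        long usablePercent = 100 - reservePercent;
        // Ceiling division without floating point.
        return (peakTps * 100 + usablePercent - 1) / usablePercent;
    }

    public static void main(String[] args) {
        // A peak of 350 TPS with a 30% reserve needs a quota of 500 TPS.
        System.out.println(requiredQuota(350, 30));
    }
}
```

The result applies to one direction (sending or consumption). Since the two quotas are separate, each direction should be evaluated on its own before a cluster specification is chosen.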

Monitoring Load

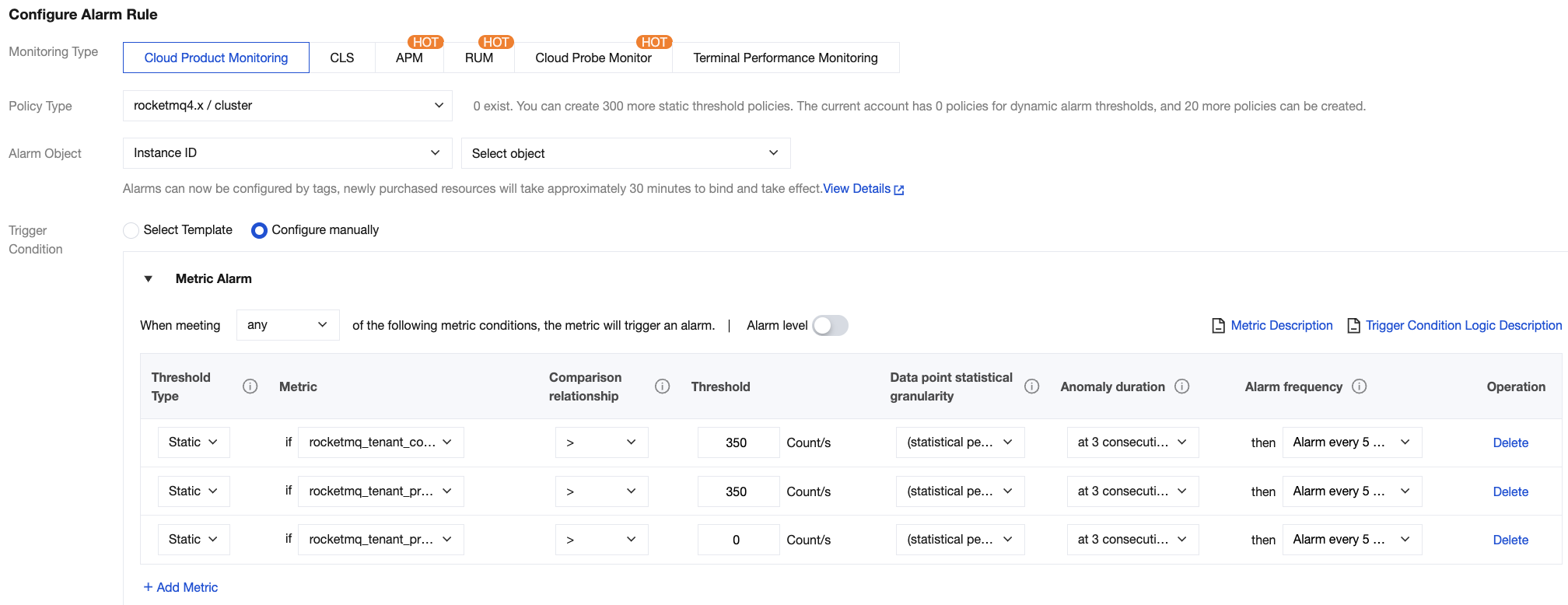

You can utilize the monitoring and alarm capability of the TDMQ for RocketMQ console to observe cluster load in real time. This allows you to identify TPS level risks in advance and perform timely specification upgrades to ensure sufficient resources and avoid triggering traffic throttling. For specific operations, see Monitoring and Alarms. Recommended alarm policies are as follows:

Trigger an alarm when the sending and consumption TPS levels exceed 70% of capacity, prompting an evaluation for specification upgrade.

Trigger an alarm when sending traffic throttling occurs, warning of potential message sending failures.

Example

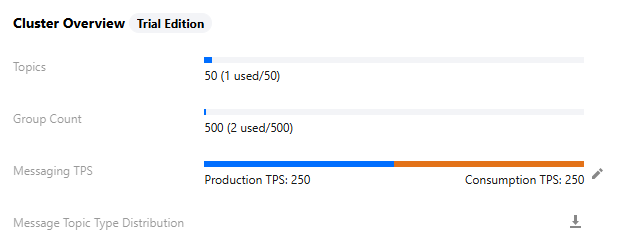

Taking a basic cluster with a 1,000 TPS specification as an example, the TPS alarm policy is as follows:
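As a worked version of this example, the sketch below computes the 70% alarm threshold for one direction of a 1,000 TPS cluster, assuming the default 1:1 ratio gives sending and consumption 500 TPS each. The class and method names are hypothetical, and the actual alarm is configured in the console rather than in code.

```java
/** Illustrative alarm check for one direction (sending or consumption). */
final class TpsAlarm {

    /** TPS level at which the usage alarm should start firing. */
    static long usageThreshold(long quotaTps, int percent) {
        return quotaTps * percent / 100;
    }

    /** True when the observed TPS exceeds the given percentage of the quota. */
    static boolean shouldAlarm(long observedTps, long quotaTps, int percent) {
        return observedTps > usageThreshold(quotaTps, percent);
    }

    public static void main(String[] args) {
        long quota = 500; // per-direction quota of a 1,000 TPS cluster at 1:1
        System.out.println("threshold=" + usageThreshold(quota, 70));
        System.out.println("alarm at 420 TPS? " + shouldAlarm(420, quota, 70));
    }
}
```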

Code Exception Handling

When your business code sends messages through the RocketMQ SDK, it needs to catch exceptions, including traffic throttling errors, and preserve the context required for manual intervention and business recovery. SDKs of different protocols have different retry mechanisms, so the handling differs by SDK version.

4.x SDK does not automatically retry on traffic throttling errors. Therefore, business code needs to catch and handle exceptions. The sample code is as follows:

```java
import java.io.UnsupportedEncodingException;
import java.nio.charset.StandardCharsets;
import org.apache.rocketmq.acl.common.AclClientRPCHook;
import org.apache.rocketmq.acl.common.SessionCredentials;
import org.apache.rocketmq.client.exception.MQBrokerException;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.common.message.Message;
import org.apache.rocketmq.common.message.MessageClientIDSetter;
import org.apache.rocketmq.logging.InternalLogger;
import org.apache.rocketmq.logging.InternalLoggerFactory;

public class ProducerExample {
    private static final InternalLogger log = InternalLoggerFactory.getLogger(ProducerExample.class);

    public static void main(String[] args) throws MQClientException, InterruptedException, UnsupportedEncodingException {
        String nameserver = "Your_Nameserver";
        String accessKey = "Your_Access_Key";
        String secretKey = "Your_Secret_Key";
        String topicName = "Your_Topic_Name";
        String producerGroupName = "Your_Producer_Group_Name";

        // Instantiate a message producer.
        DefaultMQProducer producer = new DefaultMQProducer(
            producerGroupName, // Producer group name.
            // Access control list (ACL) permissions. You can obtain the accessKey and secretKey
            // from the Cluster Permissions page in the console.
            new AclClientRPCHook(new SessionCredentials(accessKey, secretKey))
        );
        // Set the NameServer address. You can obtain the address from the cluster details page in the console.
        producer.setNamesrvAddr(nameserver);
        // Start the producer instance.
        producer.start();

        // Create a message instance and set the topic and message content.
        Message message = new Message(topicName, "Your_Biz_Body".getBytes(StandardCharsets.UTF_8));

        // Maximum number of message sending attempts. Configure the limit based on your business scenario.
        final int maxAttempts = 3;
        // Retry interval. Configure the interval based on your business scenario.
        final int retryIntervalMillis = 200;

        // Send messages.
        int attempt = 0;
        do {
            try {
                SendResult sendResult = producer.send(message);
                log.info("Send message successfully, {}", sendResult);
                break;
            } catch (Throwable t) {
                attempt++;
                if (attempt >= maxAttempts) {
                    // The maximum number of attempts is reached.
                    log.warn("Failed to send message finally, run out of attempt times, attempt={}, maxAttempts={}, msgId={}",
                        attempt, maxAttempts, MessageClientIDSetter.getUniqID(message), t);
                    // Log the failed messages (or log them to other business systems, such as databases).
                    log.warn(message.toString());
                    break;
                }
                int waitMillis;
                if (t instanceof MQBrokerException && ((MQBrokerException) t).getResponseCode() == 215 /* FLOW_CONTROL */) {
                    // Traffic throttling exception. Use backoff retry.
                    waitMillis = (int) Math.pow(2, attempt - 1) * retryIntervalMillis; // Retry interval: 200 ms, 400 ms, ......
                } else {
                    // Other exceptions.
                    waitMillis = retryIntervalMillis;
                }
                log.warn("Failed to send message, will retry after {}ms, attempt={}, maxAttempts={}, msgId={}",
                    waitMillis, attempt, maxAttempts, MessageClientIDSetter.getUniqID(message), t);
                try {
                    Thread.sleep(waitMillis);
                } catch (InterruptedException e) {
                    // Restore the interrupt flag and stop retrying instead of swallowing the interrupt.
                    Thread.currentThread().interrupt();
                    break;
                }
            }
        } while (true);

        producer.shutdown();
    }
}
```

5.x SDK automatically retries on message sending exceptions. You can customize the maximum number of retries in the business code. The sample code is as follows:

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import org.apache.rocketmq.client.apis.ClientConfiguration;
import org.apache.rocketmq.client.apis.ClientException;
import org.apache.rocketmq.client.apis.ClientServiceProvider;
import org.apache.rocketmq.client.apis.SessionCredentialsProvider;
import org.apache.rocketmq.client.apis.StaticSessionCredentialsProvider;
import org.apache.rocketmq.client.apis.message.Message;
import org.apache.rocketmq.client.apis.producer.Producer;
import org.apache.rocketmq.client.apis.producer.SendReceipt;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class ProducerExample {
    private static final Logger log = LoggerFactory.getLogger(ProducerExample.class);

    public static void main(String[] args) throws ClientException, IOException {
        String nameserver = "Your_Nameserver";
        String accessKey = "Your_Access_Key";
        String secretKey = "Your_Secret_Key";
        String topicName = "Your_Topic_Name";

        // ACL permissions. You can obtain the accessKey and secretKey from the Cluster Permissions page in the console.
        SessionCredentialsProvider sessionCredentialsProvider =
            new StaticSessionCredentialsProvider(accessKey, secretKey);
        ClientConfiguration clientConfiguration = ClientConfiguration.newBuilder()
            .setEndpoints(nameserver) // Set the NameServer address. You can obtain the address from the cluster details page in the console.
            .setCredentialProvider(sessionCredentialsProvider)
            .build();

        // Start the producer instance.
        ClientServiceProvider provider = ClientServiceProvider.loadService();
        Producer producer = provider.newProducerBuilder()
            .setClientConfiguration(clientConfiguration)
            .setTopics(topicName) // Pre-declare the topic for message sending. It is recommended to set this parameter.
            .setMaxAttempts(3) // Maximum number of message sending attempts. Configure the limit based on your business scenario.
            .build();

        // Create a message instance and set the topic and message content.
        byte[] body = "Your_Biz_Body".getBytes(StandardCharsets.UTF_8);
        final Message message = provider.newMessageBuilder()
            .setTopic(topicName)
            .setBody(body)
            .build();

        try {
            final SendReceipt sendReceipt = producer.send(message);
            log.info("Send message successfully, messageId={}", sendReceipt.getMessageId());
        } catch (Throwable t) {
            log.warn("Failed to send message", t);
            // Log the failed messages (or log them to other business systems, such as databases).
            log.warn(message.toString());
        }

        producer.close();
    }
}
```

FAQs

Will Message Loss Occur After Traffic Throttling Is Triggered?

For message sending, when the traffic throttling limit is reached, the server rejects the request and does not store the affected message. The client needs to catch the exception and apply fallback (degradation) handling, such as retrying later or recording the message elsewhere. For message consumption, traffic throttling results in increased consumption delay, but any message that has already been successfully sent will not be lost.
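One possible shape for such client-side handling is to keep rejected messages and replay them once sending succeeds again. The sketch below is deliberately independent of the RocketMQ SDK: `SendFallback` and its nested `Sender` interface are hypothetical, and the buffer is in memory and not thread-safe, so a real system would more likely persist failed messages to durable storage such as a database.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Illustrative fallback: keep messages that could not be sent and replay them later. */
final class SendFallback<M> {

    /** Hypothetical abstraction over "send one message"; throws when sending fails. */
    interface Sender<T> {
        void send(T message) throws Exception;
    }

    private final Deque<M> pending = new ArrayDeque<>();

    /** Tries to send; on failure the message is kept for a later replay. */
    boolean sendOrKeep(Sender<M> sender, M message) {
        try {
            sender.send(message);
            return true;
        } catch (Exception e) {
            pending.addLast(message);
            return false;
        }
    }

    /** Replays kept messages in order and stops at the first failure. */
    int replay(Sender<M> sender) {
        int delivered = 0;
        while (!pending.isEmpty()) {
            try {
                sender.send(pending.peekFirst());
            } catch (Exception e) {
                break; // still failing: keep the rest for the next replay
            }
            pending.removeFirst();
            delivered++;
        }
        return delivered;
    }

    int pendingCount() {
        return pending.size();
    }
}
```

In practice, `sender` would wrap the producer's send call, and `replay` would run on a timer or after the throttling alarm clears.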

Why Is the TPS on the Monitoring Page Higher than the Number of Messages?

Transactions per second (TPS) represents the number of converted messages. If your business uses advanced messages (such as ordered, delayed, or transactional messages) or if the message body is relatively large, a single business message may be counted as multiple converted messages. For specific conversion logic, see TPS Calculation Rules. Additionally, the metric for the number of messages reports the per-second average over one minute, while the TPS metric reports the per-second peak value within the minute.
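The difference between a per-second average and a per-second peak over the same minute can be seen with a small calculation. The sketch below uses made-up per-second counts and hypothetical names; it illustrates only the averaging effect, not the converted-message rules.

```java
/** Illustrative comparison of a one-minute average rate and the peak rate in that minute. */
final class AvgVsPeak {

    /** Per-second average over the sampled window. */
    static double averagePerSecond(int[] perSecondCounts) {
        if (perSecondCounts.length == 0) {
            throw new IllegalArgumentException("at least one sample required");
        }
        long sum = 0;
        for (int count : perSecondCounts) {
            sum += count;
        }
        return (double) sum / perSecondCounts.length;
    }

    /** Highest per-second value within the sampled window. */
    static int peakPerSecond(int[] perSecondCounts) {
        if (perSecondCounts.length == 0) {
            throw new IllegalArgumentException("at least one sample required");
        }
        int peak = perSecondCounts[0];
        for (int count : perSecondCounts) {
            peak = Math.max(peak, count);
        }
        return peak;
    }

    public static void main(String[] args) {
        // Made-up minute: 59 seconds at 100 messages, one burst second at 700.
        int[] minute = new int[60];
        java.util.Arrays.fill(minute, 100);
        minute[30] = 700;
        System.out.println("average=" + averagePerSecond(minute)
                + " peak=" + peakPerSecond(minute)); // prints "average=110.0 peak=700"
    }
}
```

A single burst second barely moves the average but fully determines the peak, which is why the TPS curve can sit well above the message-count curve even for normal messages.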

Does Occasional Brief Consumption Traffic Throttling in a Cluster Have Any Impact?

Generally, there is no impact. Operations such as client restarts, server restarts, or scaling out topic queues in the console may trigger brief consumption traffic throttling due to a sudden backlog in the consumer group. This condition typically recovers quickly once stability is restored.

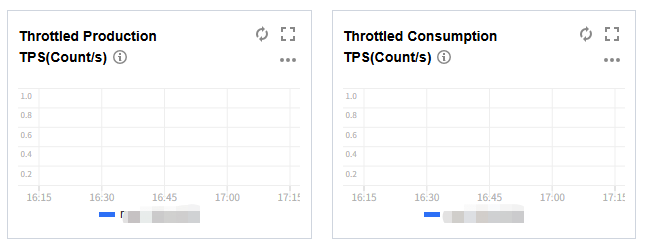

How to Determine Whether Traffic Throttling Has Occurred in a Cluster?

In addition to identifying exceptions thrown by the SDK's message sending API or information recorded in SDK logs, you can also check the Throttled Production TPS and Throttled Consumption TPS metrics on the Monitoring Dashboard in the TDMQ for RocketMQ console.
