Intelligent Customer Service

Last updated: 2025-12-10 11:10:54

Scenario Introduction

Intelligent voice customer service is a customer service system that uses artificial intelligence (AI) and Automatic Speech Recognition (ASR) to automate customer interaction and problem-solving. Before large AI models, intelligent customer service relied mainly on natural language processing and machine learning algorithms to understand customer intent, and on predefined rules and knowledge bases to provide answers. With the development of Large Language Models (LLMs), a growing number of intelligent customer service systems have integrated large-model capabilities. LLMs allow intelligent voice customer service to better understand the context of a conversation and therefore hold coherent conversations.

Real-Time Communication (RTC) technology adds low-latency voice and video interaction to intelligent voice customer service, so the system can respond to customer needs more quickly with instant feedback and solutions. Tencent RTC also supports group calls, screen sharing, and other features that further improve the efficiency and quality of customer service.

Implementation Solution

A complete intelligent customer service scenario typically involves multiple modules, including Real-Time Communication (RTC), Conversational AI, an LLM, and Text-to-Speech (TTS). The role of each module is described in the table below:

| Module | Application in AI Intelligent Customer Service Scenarios |
| --- | --- |
| RTC | Streaming transmission ensures the continuity and stability of voice and video data, reduces latency and jitter, and delivers a high-quality experience comparable to calls with human customer service. Users can talk to the intelligent customer service system naturally, as they would with a real agent, which can significantly improve user satisfaction. |
| Conversational AI | Tencent Conversational AI enables businesses to flexibly connect to multiple large language models and build real-time audio and video interactions between AI and users. Powered by the global low-latency transmission of Tencent Real-Time Communication (Tencent RTC), Conversational AI achieves voice conversation latency as low as 1 second and delivers natural, realistic conversations. It is easy to integrate and works out of the box. |
| LLM | LLM technology enables intelligent voice customer service to better understand the context of conversations and hold coherent conversations. An LLM can capture semantic and contextual information, identify user intent, and relate earlier parts of the conversation to the current turn. |
| TTS | Third-party TTS integration is supported. By training a model on personalized data or adjusting model parameters, TTS can generate voice output that meets specific requirements, so intelligent voice customer service can offer different voice styles based on user preferences or the needs of specific scenarios. |

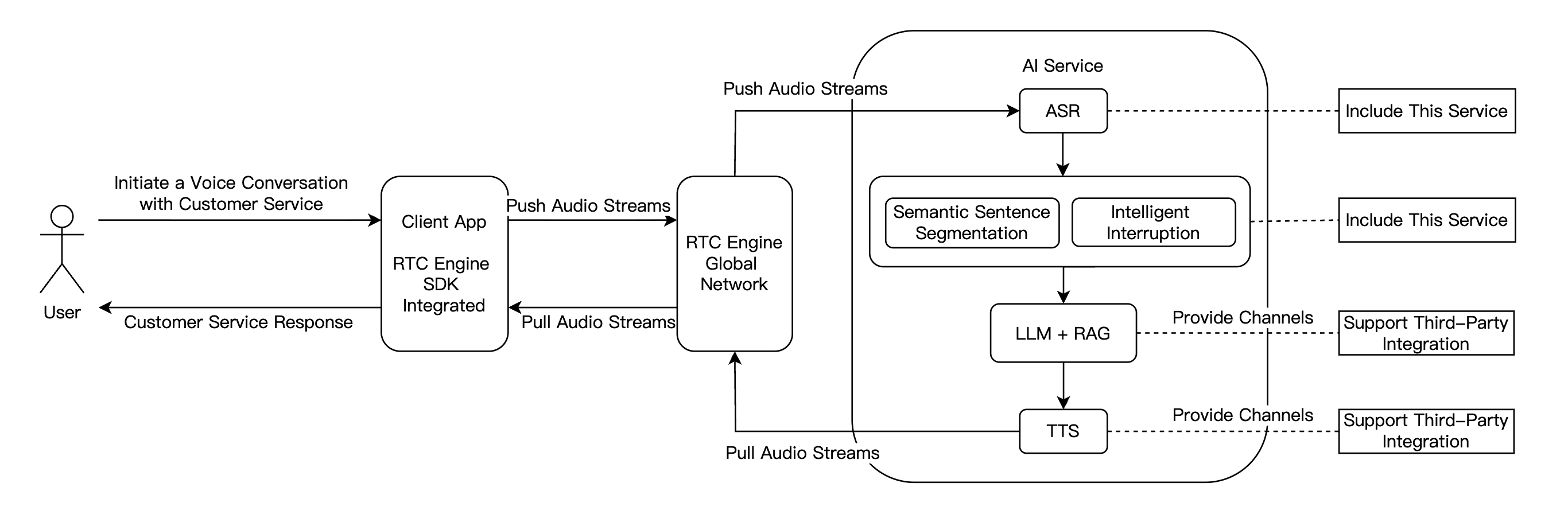

Solution Architecture

Prerequisites

Preparing LLM

Conversational AI supports any LLM that complies with the OpenAI standard protocol, as well as LLM application development platforms such as Tencent Cloud Agent Development Platform (ADP), Dify, and Coze. For supported platforms, see Large Language Model Configuration.
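As a rough illustration of what "complies with the OpenAI standard protocol" usually means in practice, the sketch below builds an OpenAI-style chat completions request body and extracts the text delta from one streamed response line. The function names `build_chat_request` and `parse_sse_line` and the model name are hypothetical, and streaming is assumed here because voice pipelines generally benefit from receiving tokens early. The exact fields Conversational AI expects are described in Large Language Model Configuration.

```python
import json


def build_chat_request(model, system_prompt, user_text, stream=True):
    """Build an OpenAI-style chat completions request body (illustrative)."""
    return {
        "model": model,  # hypothetical model name supplied by the caller
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_text},
        ],
        "stream": stream,  # ask the server to stream partial results
    }


def parse_sse_line(line):
    """Return the text delta carried by one streamed line, or None.

    OpenAI-style streaming sends lines of the form `data: {json}` and
    finishes with `data: [DONE]`.
    """
    line = line.strip()
    if not line.startswith("data:"):
        return None  # comments, keep-alives, and blank lines carry no text
    payload = line[len("data:"):].strip()
    if payload == "[DONE]":
        return None  # end-of-stream marker
    chunk = json.loads(payload)
    choices = chunk.get("choices") or []
    if not choices:
        return None
    return choices[0].get("delta", {}).get("content")
```

An LLM service that accepts this request shape and returns this chunk shape is what is generally meant by OpenAI-compatible; anything beyond these common fields should be checked against the configuration document.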

Using RAG

In intelligent customer service scenarios, businesses need to upload their own knowledge bases, including documents and Q&A materials, and combine an LLM with Retrieval-Augmented Generation (RAG) so that answers draw on that material. To do this, developers can implement an OpenAI API-compatible LLM interface in their own business backend, add the retrieved context to each request there, and then send the request to a third-party LLM.
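The context-wrapping step in the business backend can be sketched as follows. This is a minimal illustration, not a production retriever: the in-memory `KNOWLEDGE_BASE`, the word-overlap scoring, and the names `retrieve` and `augment_messages` are all assumptions made for the example. A real deployment would more likely use a vector store or search index.

```python
import re

# Hypothetical knowledge base; a real one would be loaded from uploaded documents.
KNOWLEDGE_BASE = [
    "Refunds are processed within 7 business days after approval.",
    "Support hours are 9:00 to 18:00 on weekdays.",
    "Orders can be cancelled before they are shipped.",
]


def tokenize(text):
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Return up to top_k documents that share the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored = [(score, doc) for score, doc in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def augment_messages(messages, documents, top_k=2):
    """Prepend retrieved passages as a system message before forwarding."""
    user_turns = [m for m in messages if m["role"] == "user"]
    if not user_turns:
        return list(messages)
    passages = retrieve(user_turns[-1]["content"], documents, top_k)
    if not passages:
        return list(messages)  # nothing relevant found; forward unchanged
    context = "Answer using only these passages:\n" + "\n".join(
        f"- {p}" for p in passages
    )
    return [{"role": "system", "content": context}] + list(messages)
```

The backend would call `augment_messages` on the incoming `messages` array and forward the result to the third-party LLM, so the caller still sees an ordinary OpenAI-compatible endpoint.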

Note:

Using RAG, Function Call, or other capabilities of an LLM may increase the time to the LLM's first token, leading to higher AI response latency. For latency-sensitive scenarios, consider using SystemPrompt instead of the RAG feature.
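One way to read the SystemPrompt recommendation: when the knowledge set is small, it can be placed directly in the system prompt so that no retrieval step runs before the first token. The sketch below assumes a hypothetical `build_system_prompt` helper and a simple character budget; the budget value is illustrative and is not a documented limit.

```python
def build_system_prompt(role_description, faq, max_chars=2000):
    """Embed a small FAQ in the system prompt, stopping at a size budget.

    faq is a list of (question, answer) pairs, most important first.
    """
    lines = [role_description, "", "Known answers:"]
    used = sum(len(line) + 1 for line in lines)  # +1 for each newline
    for question, answer in faq:
        entry = f"Q: {question}\nA: {answer}"
        if used + len(entry) + 1 > max_chars:
            break  # keep the prompt short so latency stays low
        lines.append(entry)
        used += len(entry) + 1
    return "\n".join(lines)
```

The trade-off is that a longer system prompt also costs processing time and tokens on every turn, so this approach suits compact, stable FAQs rather than large document collections.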

Preparing TTS

Use Tencent Cloud TTS:

To use the TTS feature, you need to activate the TTS service for your application.

Go to Account Information to obtain AppID.

Go to API Key Management to obtain SecretId and SecretKey. SecretKey can only be viewed during key creation. Please save SecretKey promptly.

Go to Timbre List to find the timbres (voices) available for use.

Use third-party or custom TTS: For currently supported TTS configurations, see Text-to-Speech Configuration.
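Because SecretKey can only be viewed when the key is created, it is worth storing the credentials outside the source code as soon as they are obtained. The following sketch reads them from environment variables; the variable names `TTS_APP_ID`, `TTS_SECRET_ID`, and `TTS_SECRET_KEY` and the `TTSCredentials` type are hypothetical choices for this example, not names defined by Tencent Cloud.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True, repr=False)
class TTSCredentials:
    app_id: str
    secret_id: str
    secret_key: str

    def __repr__(self):
        # Never print the secret key, for example in logs or tracebacks.
        return (
            f"TTSCredentials(app_id={self.app_id!r}, "
            f"secret_id={self.secret_id!r}, secret_key='***')"
        )


def load_tts_credentials(env=None):
    """Read TTS credentials from a mapping (defaults to os.environ)."""
    env = os.environ if env is None else env
    names = {
        "app_id": "TTS_APP_ID",          # hypothetical variable names
        "secret_id": "TTS_SECRET_ID",
        "secret_key": "TTS_SECRET_KEY",
    }
    missing = [var for var in names.values() if not env.get(var)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return TTSCredentials(**{field: env[var] for field, var in names.items()})
```

Failing fast on a missing variable makes a misconfigured deployment visible at startup rather than on the first TTS request.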

Preparing RTC Engine

Note:

Conversational AI calls may incur usage fees. For more information, see Billing of Conversational AI Service.

Integration Steps

Advanced Features

The following advanced features can be configured: far-field voice suppression, conversation latency optimization, AI conversation subtitles and AI status reception, interruption latency optimization, server callbacks, and on-cloud recording.

FAQs

Supporting Products for the Solution

| System Level | Product Name | Application Scenarios |
| --- | --- | --- |
| Access Layer | | Provides low-latency, high-quality real-time audio and video interaction solutions, serving as the foundational capability for audio and video call scenarios. |
| Cloud Services | | Enables real-time audio and video interactions between AI and users and develops Conversational AI capabilities tailored to business scenarios. |
| LLM | | Serves as the brain of intelligent customer services and offers multiple agent development frameworks such as LLM+RAG, Workflow, and Multi-agent. |
| Data Storage | | Provides storage services for audio recording files and audio slicing files. |
