Using Custom Metrics for Auto Scaling on TKE

Last updated: 2025-05-29 19:08:42

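Overview

Tencent Kubernetes Engine (TKE) supports many auto scaling metrics through the Custom Metrics API, covering CPU, memory, disk, network, and GPU. These are enough for the vast majority of HPA scenarios; for the full list, see Auto Scaling Metrics. For more complex scenarios, such as scaling on the QPS handled by each replica of a service, you can install prometheus-adapter. Kubernetes provides the Custom Metrics API and the External Metrics API for extending HPA metrics, so that users can customize them to their actual needs. prometheus-adapter supports both APIs, and in practice the Custom Metrics API alone covers most scenarios. This document describes how to use the Custom Metrics API to scale on a custom metric.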

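Prerequisites

You have created a TKE cluster of version 1.14 or later. For details, see Creating a Cluster.

You have purchased a Prometheus instance and associated it with the TKE cluster. For details, see Creating a Prometheus Instance and Associating a Cluster.

You have installed Helm.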

Directions

Exposing monitoring metrics

This document uses a Golang service as an example. The sample program exposes the httpserver_requests_total metric, which counts HTTP requests and from which the QPS of the service can be calculated. The program is as follows:

    package main

    import (
        "net/http"
        "strconv"

        "github.com/prometheus/client_golang/prometheus"
        "github.com/prometheus/client_golang/prometheus/promhttp"
    )

    var (
        // HTTPRequests counts received HTTP requests, labeled by status code.
        HTTPRequests = prometheus.NewCounterVec(
            prometheus.CounterOpts{
                Name: "httpserver_requests_total",
                Help: "Number of the http requests received since the server started",
            },
            []string{"status"},
        )
    )

    func init() {
        prometheus.MustRegister(HTTPRequests)
    }

    func main() {
        http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
            path := r.URL.Path
            code := 200
            switch path {
            case "/test":
                w.WriteHeader(code)
                w.Write([]byte("OK"))
            case "/metrics":
                promhttp.Handler().ServeHTTP(w, r)
            default:
                // Record the real status so the "status" label is accurate.
                code = 404
                w.WriteHeader(code)
                w.Write([]byte("Not Found"))
            }
            HTTPRequests.WithLabelValues(strconv.Itoa(code)).Inc()
        })
        http.ListenAndServe(":80", nil)
    }
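To make the link between a request counter and QPS concrete, the following is a minimal sketch of the arithmetic: the increase of the counter between two samples divided by the elapsed time. The `sample` type and `perSecondRate` function are hypothetical names introduced for illustration. This is a simplification and not how PromQL's `rate()` is implemented, which also handles counter resets and extrapolation.

```go
package main

import "fmt"

// sample is one observation of a monotonically increasing counter.
// Hypothetical type for illustration only.
type sample struct {
	seconds float64 // time of the observation, in seconds
	value   float64 // counter value at that time
}

// perSecondRate returns the average per-second increase between two samples.
// Assumption: the counter did not reset between the samples.
func perSecondRate(first, last sample) float64 {
	elapsed := last.seconds - first.seconds
	if elapsed <= 0 {
		return 0
	}
	return (last.value - first.value) / elapsed
}

func main() {
	// 60 additional requests observed over a 2-minute window.
	first := sample{seconds: 0, value: 1000}
	last := sample{seconds: 120, value: 1060}
	fmt.Printf("QPS: %.1f\n", perSecondRate(first, last))
}
```

With these numbers the counter grows by 60 over 120 seconds, so the computed rate is 0.5 requests per second.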

Deploying the service

Package the program above into a container image and deploy it to the cluster, for example with a Deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: httpserver
      namespace: httpserver
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: httpserver
      template:
        metadata:
          labels:
            app: httpserver
        spec:
          containers:
          - name: httpserver
            image: ccr.ccs.tencentyun.com/tkedemo/custom-metrics-demo:v1.0.0
            imagePullPolicy: Always
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: httpserver
      namespace: httpserver
      labels:
        app: httpserver
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/path: "/metrics"
        prometheus.io/port: "http"
    spec:
      type: ClusterIP
      ports:
      - port: 80
        protocol: TCP
        name: http
      selector:
        app: httpserver

Prometheus 采集业务监控

1. 如果您已完成 Prometheus 监控实例与 TKE 集群的关联,可直接登录 容器服务控制台,选择左侧导航栏的 Prometheus 监控。

2. 选择监控实例,在数据采集> 集成容器服务页面,找到目标实例,单击右侧的数据采集配置,进入数据采集配置列表页。

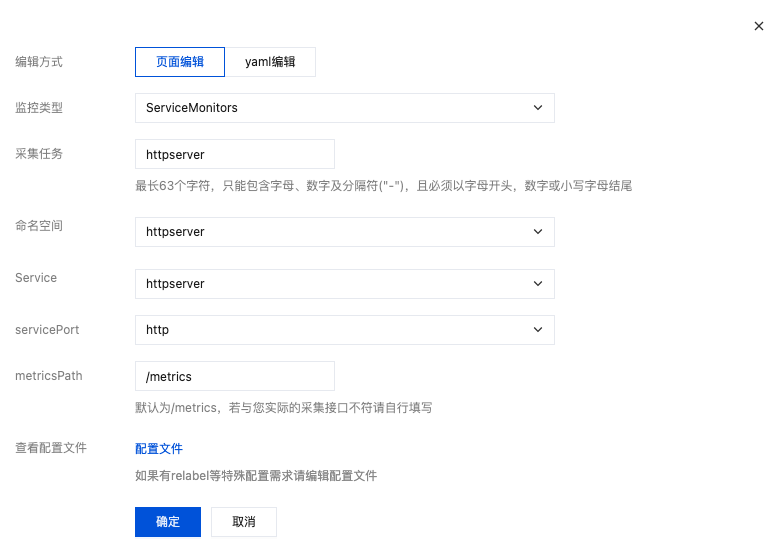

3. 单击新建自定义监控,为已部署的业务程序配置新的采集规则。单击确定,如下图所示:

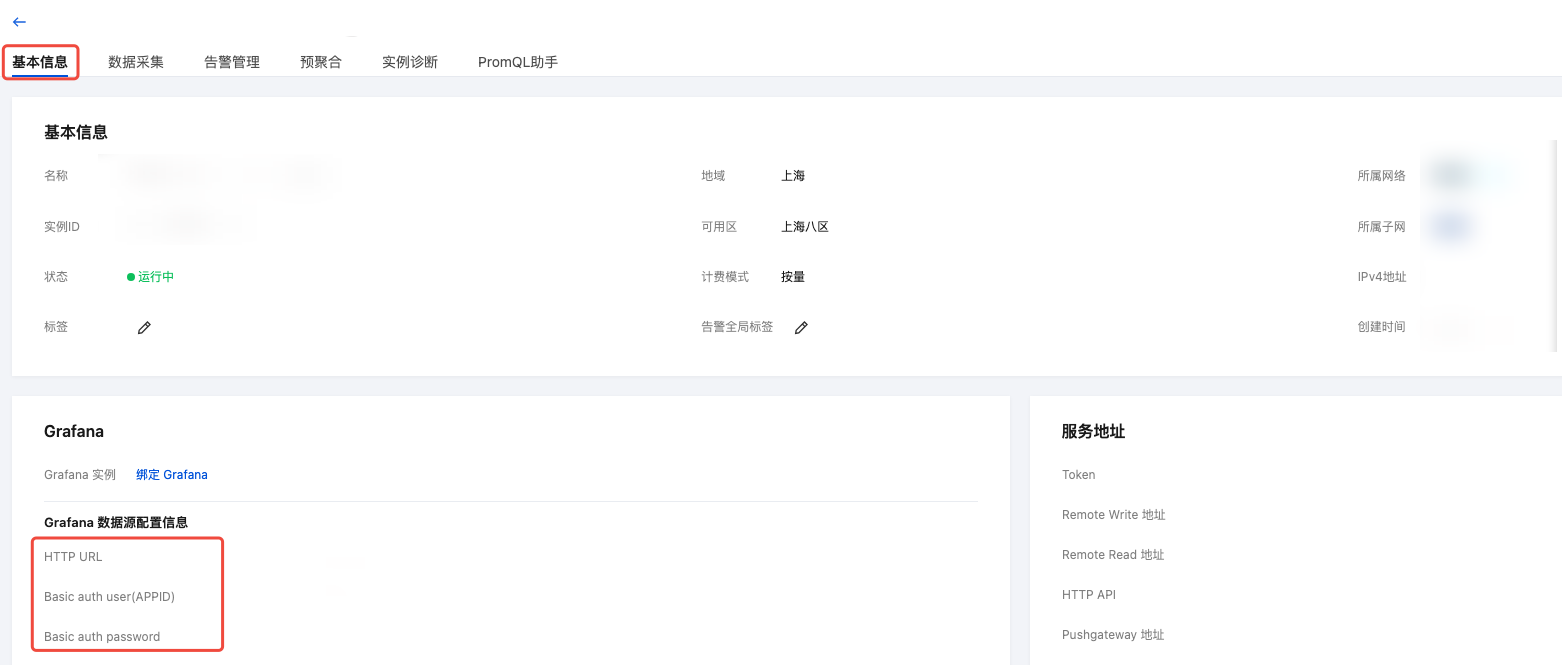

4. 单击左上角基本信息页面,获取访问 Prometheus API 的地址(HTTP URL)和鉴权账号信息(Basic auth user 和 Basic auth password),后续安装 prometheus-adapter 时使用,如下图所示:
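The prometheus-adapter configuration later in this document expects the credentials as a `{token}`, which is the base64 encoding of `Basic auth user:Basic auth password`. The following is a minimal sketch of producing that string. `basicToken` is a hypothetical helper name, and the credentials in `main` are placeholders rather than real values.

```go
package main

import (
	"encoding/base64"
	"fmt"
)

// basicToken returns base64("user:password"), the value that follows
// "Basic " in an HTTP Authorization header.
func basicToken(user, password string) string {
	return base64.StdEncoding.EncodeToString([]byte(user + ":" + password))
}

func main() {
	// Placeholder credentials; substitute the values shown in the console.
	token := basicToken("user", "pass")
	fmt.Println("--prometheus-header=Authorization=Basic " + token)
}
```

Treat the resulting token like a password, since base64 is an encoding and not encryption.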

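Installing prometheus-adapter

1. prometheus-adapter is installed with Helm. Before installing, decide on and configure the custom metric. Following the example in Exposing monitoring metrics, the service records HTTP requests in the httpserver_requests_total metric, so the QPS of each service Pod can be calculated with the following PromQL:

    sum(rate(httpserver_requests_total[2m])) by (pod)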

2. Convert the query into a prometheus-adapter configuration by creating values.yaml with the following content:

    rules:
      default: false
      custom:
      - seriesQuery: 'httpserver_requests_total'
        resources:
          template: <<.Resource>>
        name:
          matches: "httpserver_requests_total"
          as: "httpserver_requests_qps" # QPS metric calculated by PromQL
        metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>}[1m])) by (<<.GroupBy>>)
    prometheus:
      url: http://127.0.0.1 # Replace with the Prometheus API address (HTTP URL) obtained earlier, without the port
      port: 9090
    extraArguments:
    - --prometheus-header=Authorization=Basic {token}
    # {token} is the base64 encoding of the string "Basic auth user:Basic auth password" obtained from the console

3. Run the following Helm commands to install prometheus-adapter.

Note:

Before installing, delete the Custom Metrics API that TKE has already registered:

    kubectl delete apiservice v1beta1.custom.metrics.k8s.io

Then install the chart:

    helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
    helm repo update
    # Helm 3
    helm install prometheus-adapter prometheus-community/prometheus-adapter -f values.yaml
    # Helm 2
    # helm install --name prometheus-adapter prometheus-community/prometheus-adapter -f values.yaml

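4. Add the Prometheus authentication parameter.

The chart currently provided by the community does not expose authentication-related input parameters, so authentication fails and the adapter cannot connect to the TMP service. For background, see the community documentation. The workaround is to manually edit the Prometheus Adapter Deployment and add --prometheus-header=Authorization=Basic {token} to the adapter's startup arguments, where {token} is the base64 encoding of the Basic auth user:Basic auth password string obtained from the console.

Verification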

If the installation succeeded, the following command shows that the Custom Metrics API returns the configured QPS metric:

    $ kubectl get --raw /apis/custom.metrics.k8s.io/v1beta1
    {
      "kind": "APIResourceList",
      "apiVersion": "v1",
      "groupVersion": "custom.metrics.k8s.io/v1beta1",
      "resources": [
        {
          "name": "jobs.batch/httpserver_requests_qps",
          "singularName": "",
          "namespaced": true,
          "kind": "MetricValueList",
          "verbs": ["get"]
        },
        {
          "name": "pods/httpserver_requests_qps",
          "singularName": "",
          "namespaced": true,
          "kind": "MetricValueList",
          "verbs": ["get"]
        },
        {
          "name": "namespaces/httpserver_requests_qps",
          "singularName": "",
          "namespaced": false,
          "kind": "MetricValueList",
          "verbs": ["get"]
        }
      ]
    }
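If you want to script this check rather than read the JSON by eye, the following sketch decodes a discovery response and looks for the Pod-level metric. The `apiResourceList` type and `hasResource` function are hypothetical names that model only the `resources[].name` field seen in the output above. The input in `main` is a trimmed stand-in for the real response.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// apiResourceList models only the part of the discovery response needed here.
type apiResourceList struct {
	Resources []struct {
		Name string `json:"name"`
	} `json:"resources"`
}

// hasResource reports whether the discovery document lists the given name.
func hasResource(raw []byte, name string) (bool, error) {
	var list apiResourceList
	if err := json.Unmarshal(raw, &list); err != nil {
		return false, err
	}
	for _, r := range list.Resources {
		if r.Name == name {
			return true, nil
		}
	}
	return false, nil
}

func main() {
	// Trimmed stand-in for the output of the kubectl command.
	raw := []byte(`{"resources":[{"name":"pods/httpserver_requests_qps"}]}`)
	found, err := hasResource(raw, "pods/httpserver_requests_qps")
	if err != nil {
		fmt.Println("decode error:", err)
		return
	}
	fmt.Println("metric registered:", found)
}
```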

Run the following command to view the QPS value of the Pod.

Note

The QPS in the example below is 500m, which means a QPS value of 0.5.

    $ kubectl get --raw /apis/custom.metrics.k8s.io/v1beta1/namespaces/httpserver/pods/*/httpserver_requests_qps
    {
      "kind": "MetricValueList",
      "apiVersion": "custom.metrics.k8s.io/v1beta1",
      "metadata": {
        "selfLink": "/apis/custom.metrics.k8s.io/v1beta1/namespaces/httpserver/pods/%2A/httpserver_requests_qps"
      },
      "items": [
        {
          "describedObject": {
            "kind": "Pod",
            "namespace": "httpserver",
            "name": "httpserver-6f94475d45-7rln9",
            "apiVersion": "/v1"
          },
          "metricName": "httpserver_requests_qps",
          "timestamp": "2020-11-17T09:14:36Z",
          "value": "500m",
          "selector": null
        }
      ]
    }
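The `m` suffix in values such as `500m` denotes thousandths. The following sketch converts such a string to a plain number. `parseMilliQuantity` is a hypothetical helper that handles only plain numbers and the `m` suffix. It is not a full Kubernetes quantity parser; for complete parsing, the `resource` package in `k8s.io/apimachinery` is the usual choice.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseMilliQuantity converts values such as "500m" or "50" to a float64.
// Assumption: only plain numbers and the "m" (milli) suffix appear.
func parseMilliQuantity(s string) (float64, error) {
	if strings.HasSuffix(s, "m") {
		n, err := strconv.ParseFloat(strings.TrimSuffix(s, "m"), 64)
		if err != nil {
			return 0, err
		}
		return n / 1000, nil
	}
	return strconv.ParseFloat(s, 64)
}

func main() {
	for _, raw := range []string{"500m", "35266m", "50"} {
		v, err := parseMilliQuantity(raw)
		if err != nil {
			fmt.Println(raw, "->", err)
			continue
		}
		fmt.Printf("%s -> %.3f\n", raw, v)
	}
}
```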

Testing HPA

Suppose scale-out should be triggered when the average QPS of each service Pod reaches 50, with a minimum of 1 replica and a maximum of 1000 replicas. A sample configuration is as follows:

    apiVersion: autoscaling/v2beta2
    kind: HorizontalPodAutoscaler
    metadata:
      name: httpserver
      namespace: httpserver
    spec:
      minReplicas: 1
      maxReplicas: 1000
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: httpserver
      metrics:
      - type: Pods
        pods:
          metric:
            name: httpserver_requests_qps
          target:
            averageValue: 50
            type: AverageValue
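To reason about how many replicas a configuration like the one above would produce, the following sketch applies the scaling formula from the Kubernetes HPA documentation, desiredReplicas = ceil(currentReplicas × currentValue / targetValue), and clamps the result to the configured bounds. `desiredReplicas` is a hypothetical function name. The sketch deliberately ignores the controller's tolerance band, stabilization windows, and handling of unready Pods, so real behavior can differ.

```go
package main

import (
	"fmt"
	"math"
)

// desiredReplicas applies ceil(current * avgValue / target) and clamps the
// result to [minReplicas, maxReplicas].
// Assumption: tolerance, stabilization, and Pod readiness are ignored.
func desiredReplicas(current int, avgValue, target float64, minReplicas, maxReplicas int) int {
	if current <= 0 || target <= 0 {
		return minReplicas
	}
	desired := int(math.Ceil(float64(current) * avgValue / target))
	if desired < minReplicas {
		return minReplicas
	}
	if desired > maxReplicas {
		return maxReplicas
	}
	return desired
}

func main() {
	// 2 Pods averaging 80 QPS each against a target of 50 QPS per Pod.
	fmt.Println(desiredReplicas(2, 80, 50, 1, 1000))
	// A very large load is capped at maxReplicas.
	fmt.Println(desiredReplicas(1, 100000, 50, 1, 1000))
}
```

In the first call the ratio is 80/50 = 1.6, so 2 replicas become ceil(3.2) = 4. In the second call the raw result of 2000 is capped at the maximum of 1000.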

Run the following commands to load test the service and observe whether it scales out automatically:

    # Use wrk or another HTTP load-testing tool to load test the httpserver service.
    $ kubectl proxy --port=8080 > /dev/null 2>&1 &
    $ wrk -t12 -c3000 -d60s http://localhost:8080/api/v1/namespaces/httpserver/services/httpserver:http/proxy/test

    $ kubectl get hpa -n httpserver
    NAME         REFERENCE               TARGETS     MINPODS   MAXPODS   REPLICAS   AGE
    httpserver   Deployment/httpserver   35266m/50   1         1000      2          50m

    # Observe the HPA scale-out
    $ kubectl get pods -n httpserver
    NAME                          READY   STATUS              RESTARTS   AGE
    httpserver-7f8dffd449-pgsb7   0/1     ContainerCreating   0          4s
    httpserver-7f8dffd449-wsl95   1/1     Running             0          93s
    httpserver-7f8dffd449-pgsb7   1/1     Running             0          4s

If the service scales out as expected, HPA is now scaling on the custom service metric.
