Application Settings Overview

Last updated: 2025-12-02 10:03:13

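After you create an application, open the application settings page to configure its model, knowledge base, output settings, and more. This article uses a standard-mode application as an example to describe the configuration operations in detail.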

Basic Settings

The available settings differ by application mode, as shown in the following table:

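| Configuration | Standard mode | Single-workflow mode | Multi-Agent mode |
| --- | --- | --- | --- |
| Basic settings | Application name, application avatar, and opening remarks. Identical across all modes. | Same as standard mode. | Same as standard mode. |
| Application settings | Application settings are independent for each mode and are not inherited when you switch modes. | Application settings are independent for each mode and are not inherited when you switch modes. Single-workflow mode does not support prompt, plugin, and similar settings. | Application settings are independent for each mode and are not inherited when you switch modes. Multi-Agent mode covers largely the same setting items as standard mode, but the selectable options differ. |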

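After the application icon and name are set and the application is published, they are displayed in the user-facing interface window.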

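Model Settings

The model settings provide the following functions:

Model service: New users of the Tencent Cloud Agent Development Platform automatically receive a certain free quota and can use it to debug applications with different types of models. Based on the test results, you can then purchase and continue using the models.

Context rounds: Sets the number of historical dialogue rounds passed to the large model as prompt context. More rounds improve relevance across a multi-turn dialogue but also consume more tokens.

Parameter settings:

Temperature: Controls the randomness and diversity of the generated text. Higher values make the output more random and creative, which suits scenarios such as poetry writing. Lower values make the output more focused and deterministic, which suits scenarios such as code generation.

top_p: Controls the diversity of the generated text. top_p is a nucleus sampling method: the model considers only the most likely tokens whose cumulative probability reaches the top_p threshold.

Maximum output length: Limits the maximum length of the text the model generates. This helps control API call cost and response time, and prevents overly long, meaningless output.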
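The effect of temperature and top_p can be illustrated with a small, self-contained sketch. It is a generic illustration of temperature scaling and nucleus sampling, not the platform's actual implementation; the function names and the sample logits are made up for the example.

```python
import math


def apply_temperature(logits, temperature):
    """Turn raw scores into probabilities after dividing by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability
    reaches top_p, then renormalize the kept probabilities."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


logits = [2.0, 1.0, 0.5, -1.0]          # hypothetical scores for four tokens
sharp = apply_temperature(logits, 0.5)   # low temperature: more concentrated
flat = apply_temperature(logits, 1.5)    # high temperature: more spread out
nucleus = top_p_filter(apply_temperature(logits, 1.0), 0.8)
```

With these sample values, the low-temperature distribution puts more weight on the top token than the high-temperature one, and a top_p of 0.8 keeps only the two most likely tokens as sampling candidates.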

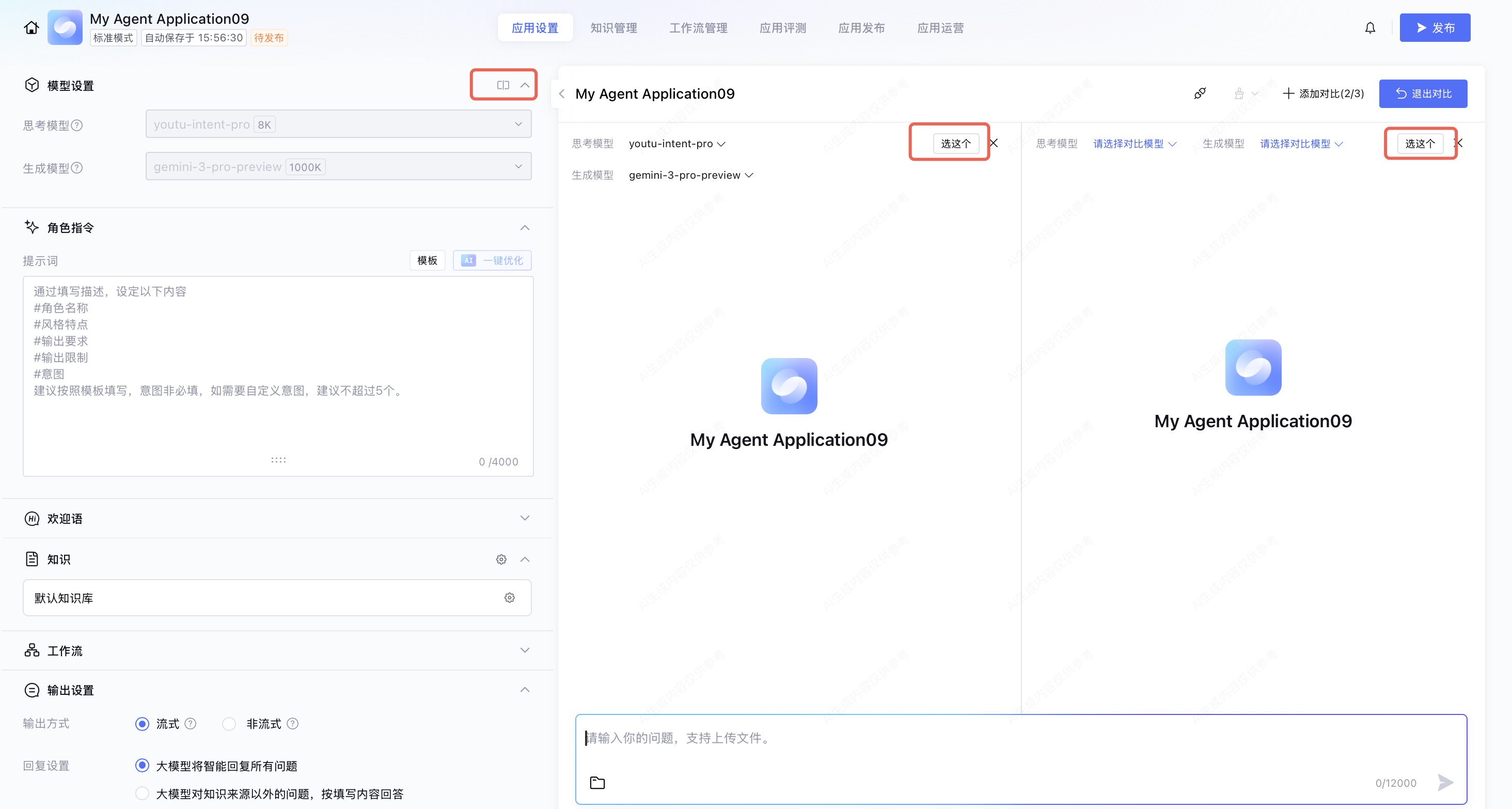

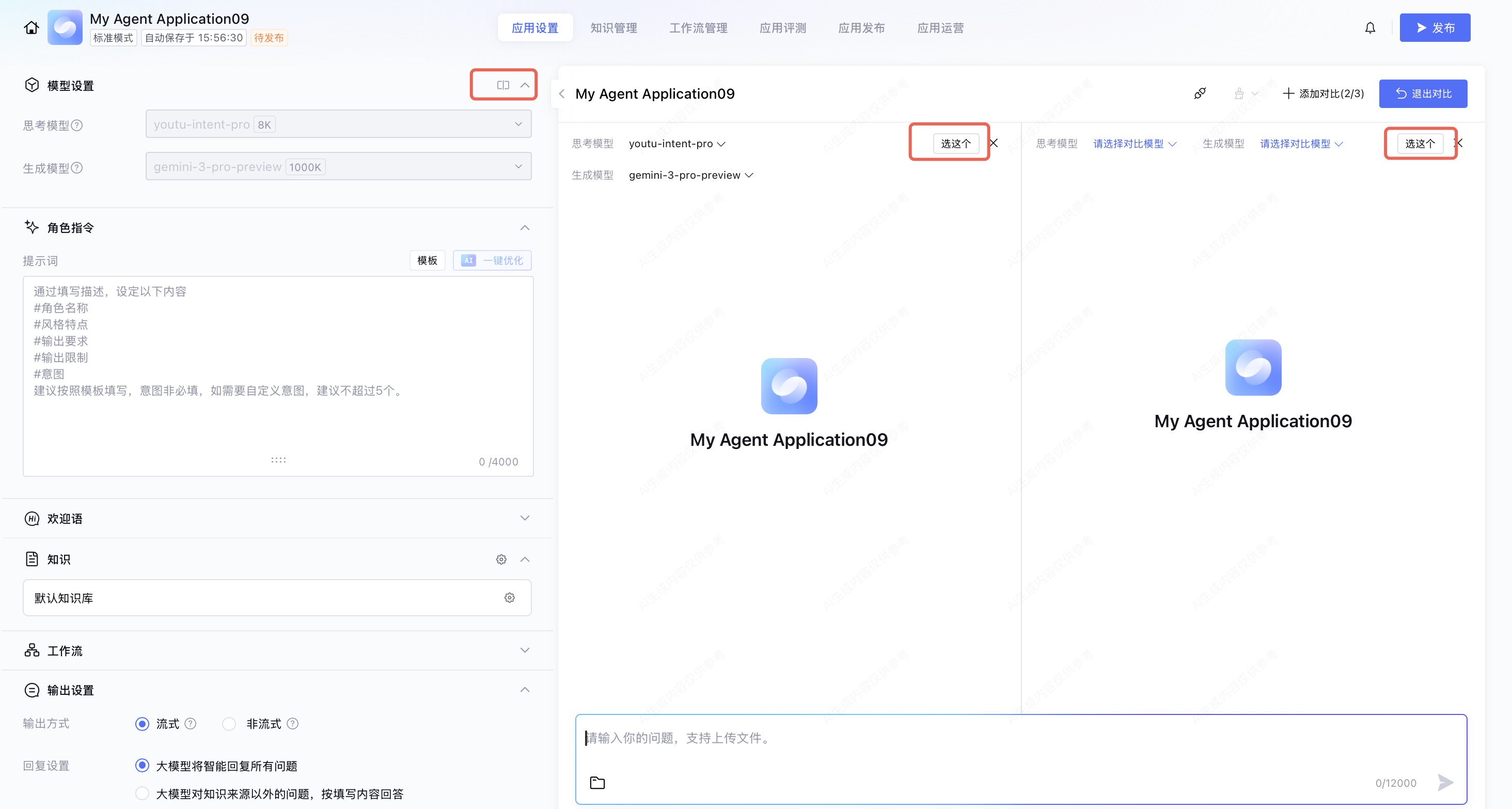

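Model Comparison Debugging

"Multi-model comparison debugging" is supported in standard mode and in the single-Agent scenario of Multi-Agent mode.

You can compare how different models answer the same question, with up to 3 models added at the same time. Each model's parameters can be adjusted individually. If one model performs better during the comparison, click Select this to select it. The selected model takes effect after you exit the comparison and save. If you choose to discard and exit, the selected model does not take effect.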

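Role Instructions

After a user asks a question, the application answers in the task role defined in the "role instructions". You can follow the provided example when filling them in and restrict the language, tone, and other aspects of the model's replies. The Tencent Cloud Agent Development Platform currently supports question answering in Chinese and English.

Template: A preset format template for role instructions. Filling in the instructions according to the template is recommended because it improves instruction following. After writing your instructions, you can also click Template > Save as template to save them as a template.

AI one-click optimization: After drafting the role settings, click One-click optimization to refine them. The model optimizes the settings based on what you have entered so that it can better meet the stated requirements.

Note:

AI one-click optimization consumes the user's token resources.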

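Welcome Message

The welcome message you enter is displayed on the client home page and can include application-level variables. You can use AI one-click optimization to generate a welcome message.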

Knowledge Base

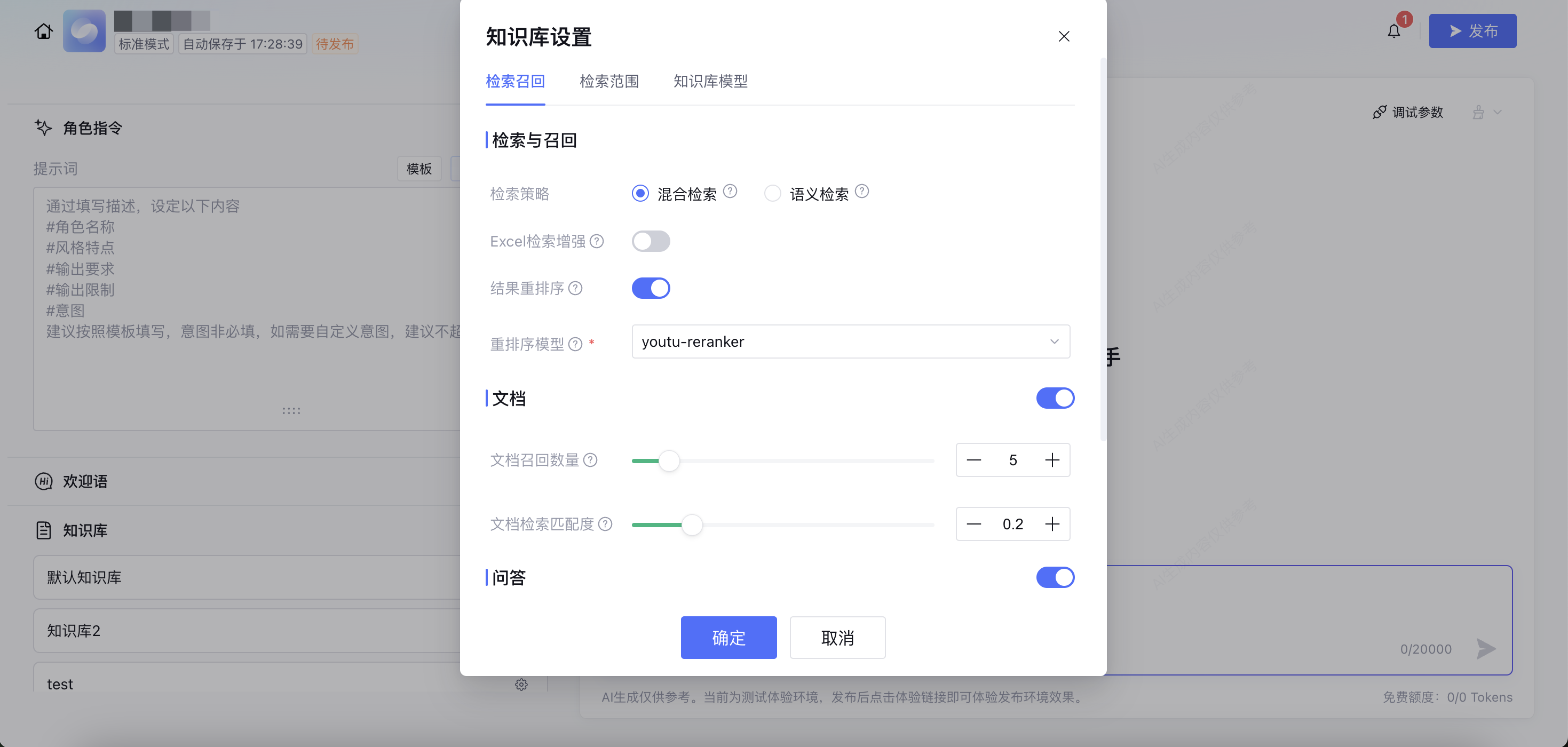

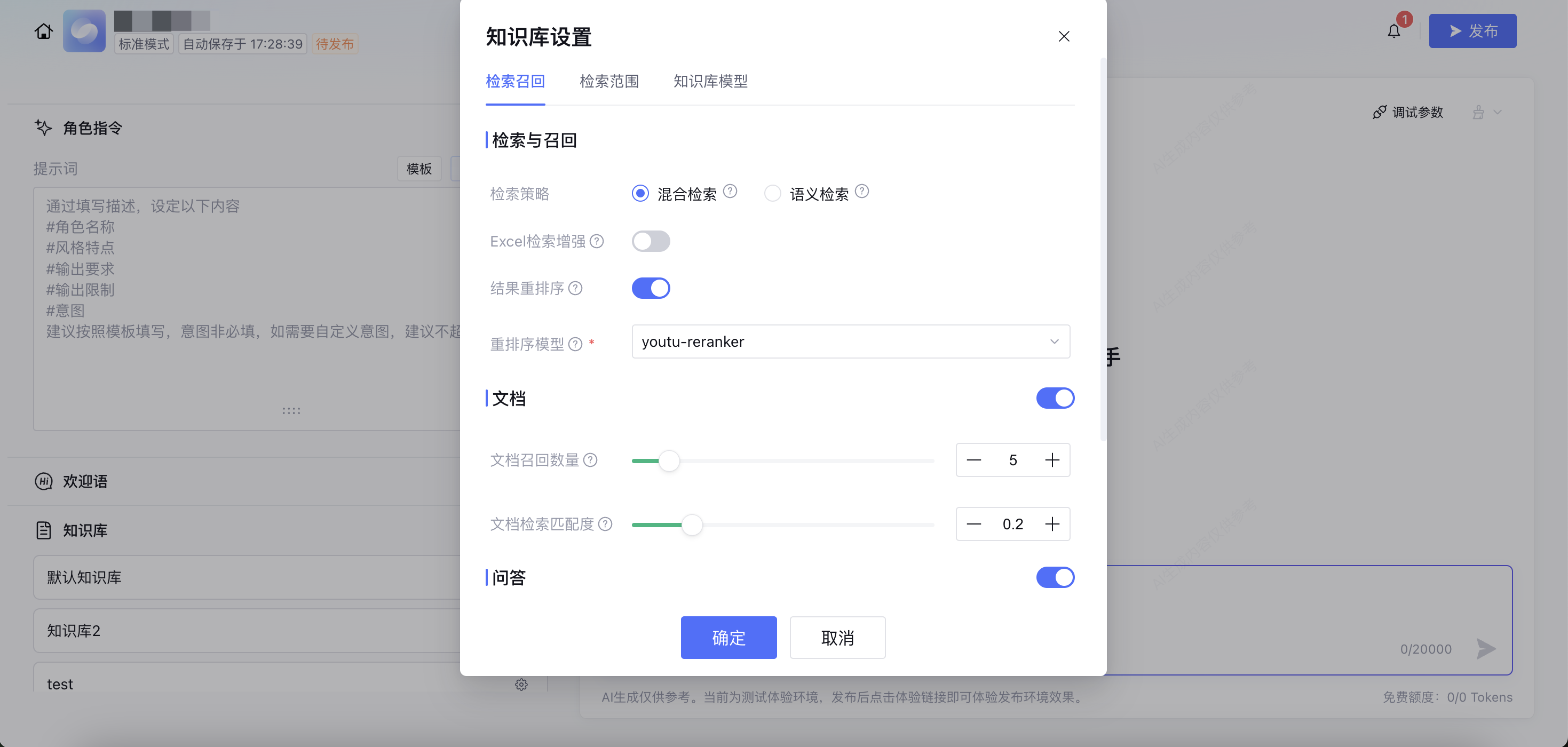

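The knowledge base section displays the application's default knowledge base and any referenced knowledge bases. For each knowledge base, you can separately configure retrieval recall, retrieval scope, and the knowledge base model.

1. Retrieval recall:

Retrieval strategy: Choose between hybrid retrieval and semantic retrieval.

Hybrid retrieval: Runs keyword retrieval and vector retrieval at the same time. Recommended when both exact string matches and semantic relevance matter; it gives better overall results.

Semantic retrieval: Recommended when the query shares few words with the text chunks and matching must rely on meaning.

Excel retrieval enhancement: When enabled, supports natural-language queries and calculations over Excel tables, but may increase the application's response time.

Result reranking: When enabled, you can select a reranking model. After retrieval recall, the reranking step analyzes the user's question and reorders the chunks so that the content most similar to the question comes first. The platform provides 2 preset reranking models, and you can also configure third-party reranking models in the Model Square.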

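Documents: When enabled, the large model answers questions based on the document library you build. You can upload files directly or add web pages, and the large model parses and learns from the uploaded documents. For more information about documents, see Document Overview.

Document recall count: The N best-matching document chunks returned by retrieval are passed to the large model as input for reading comprehension.

Document retrieval match threshold: Text chunks that meet the configured match threshold are returned to the large model as reference for its reply. A lower value means more chunks are recalled, which may reduce accuracy. Content below the threshold is not recalled.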

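Q&A: When enabled, the large model answers questions based on the Q&A library you build. You can import Q&A pairs in batches by uploading a file, enter them manually, or generate them automatically from files in the document library. For more information, see Q&A.

Q&A library answer reply: Choose between direct reply and polished reply.

Direct reply: When the similarity of the detected question is above the reference value, the stored answer is used as the reply directly.

Polished reply: After an answer is found, it is polished before being returned.

Q&A recall count: The N best-matching Q&A pairs returned by retrieval are passed to the large model as input for reading comprehension.

Q&A retrieval match threshold: Q&A content that meets the configured match threshold is returned to the large model as reference for its reply. A lower value means more Q&A pairs are recalled, which may reduce accuracy. Content below the threshold is not recalled.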

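Database: When enabled, the large model answers questions based on the third-party databases you have connected.

2. Retrieval scope: Lets different users receive answers drawn from different knowledge scopes. For details, see Knowledge Base Retrieval Settings.

3. Knowledge base model: Supports separately setting the knowledge base Q&A generation model and the knowledge base Schema generation model.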
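The interaction between the recall count and the match threshold described above can be sketched as follows. This is only an illustration of the general "filter by threshold, then keep the top N" idea; the function name, the score values, and the choice to treat a score equal to the threshold as passing are assumptions, not the platform's documented behavior.

```python
def select_chunks(scored_chunks, min_score, top_n):
    """Drop candidates below the match threshold, then keep the top_n
    highest-scoring ones. scored_chunks is a list of (chunk_id, score)."""
    passing = [c for c in scored_chunks if c[1] >= min_score]
    passing.sort(key=lambda c: c[1], reverse=True)
    return passing[:top_n]


# Hypothetical retrieval results with similarity scores.
candidates = [
    ("chunk-a", 0.91),
    ("chunk-b", 0.42),
    ("chunk-c", 0.77),
    ("chunk-d", 0.65),
]

strict = select_chunks(candidates, min_score=0.7, top_n=3)
loose = select_chunks(candidates, min_score=0.4, top_n=3)
```

With the higher threshold only two chunks qualify, so fewer than N are passed to the model. With the lower threshold all four qualify and the recall count caps the result at three.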

Web Search

When web search is enabled, the application can draw on online information to give users more up-to-date and richer answers.

Workflow

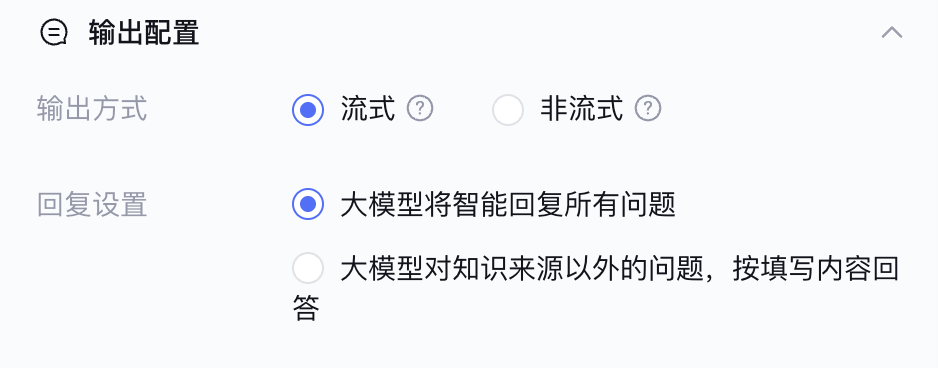

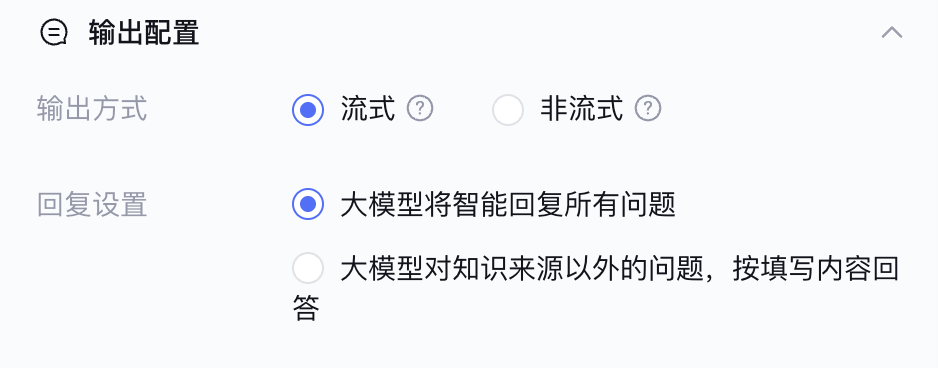

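Output Settings

Output mode: Choose whether answers are output in streaming or non-streaming form. Streaming outputs the answer character by character; non-streaming outputs the complete answer at once after it has been fully generated.

Reply settings: Choose whether the large model intelligently answers all questions, or gives a conservative answer, using the content you enter, to questions that fall outside the knowledge sources.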
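The difference between the two output modes can be sketched with a generator. This is a conceptual illustration only: `generate_tokens` is a made-up stand-in for a model, and it emits whole words rather than single characters to keep the example short.

```python
def generate_tokens(answer):
    """Stand-in for a model that produces an answer piece by piece."""
    for word in answer.split():
        yield word + " "


def reply_streaming(answer):
    """Streaming: pass each piece to the caller as soon as it is produced."""
    for piece in generate_tokens(answer):
        yield piece


def reply_non_streaming(answer):
    """Non-streaming: wait for the whole answer, then return it once."""
    return "".join(generate_tokens(answer))


streamed = list(reply_streaming("settings saved successfully"))
whole = reply_non_streaming("settings saved successfully")
```

Both modes deliver the same text. Streaming lets the client start rendering before generation finishes, while non-streaming is simpler to consume when the caller needs the full answer anyway.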

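Variables and Memory

Variables

System variables: Variables available while the application is running. Users cannot define custom system variables or modify the existing ones.

Environment variables: Used to store sensitive information such as API keys and user passwords. You can add custom parameters: click New to open the environment variable creation dialog, where a default value can be entered.

API parameters: Variables that you pass into a workflow through the custom_variables field when calling the Tencent Cloud Agent Development Platform API (for details on this parameter, see Dialogue Interface Documentation (HTTP SSE) and Dialogue Interface Documentation (WebSocket)). The workflow references these variables to run subsequent business logic. You can add custom parameters: click New to open the API parameter creation dialog, where a default value can be entered.

Application variables: Global variables that can be "read and modified" across the whole application and passed between workflows and between Agents. Users can modify them manually.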
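As a rough sketch of how API parameters might be supplied, the snippet below builds a JSON request body that carries a `custom_variables` object. Only the `custom_variables` field name comes from the text above; the `content` field, the variable names, and the overall body shape are placeholders, so check the dialogue interface documentation for the actual required fields and value types.

```python
import json


def build_request_body(question, custom_variables):
    """Assemble a JSON body that passes variables to the workflow.

    "content" is a placeholder field name used only for this sketch;
    "custom_variables" is the field named in the documentation.
    """
    body = {
        "content": question,
        "custom_variables": dict(custom_variables),
    }
    return json.dumps(body, ensure_ascii=False)


# Hypothetical variables that a workflow could reference.
payload = build_request_body(
    "Where is my order?",
    {"user_level": "vip", "region": "south"},
)
```

The variable names passed here would need to match API parameters defined on the settings page so that the workflow can reference them.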

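Long-term Memory

Memory settings let you configure the retention period of long-term memory, from 1 to 999 days. The default is 30 days, and memory content older than the retention period is deleted.

Memory test content displays all memory content within the retention period. You can edit or delete individual items, or clear all memory content.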
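The retention rule can be pictured with a short sketch. The record layout (`text`, `created_at`) and the function name are invented for illustration; only the 1 to 999 day range and the 30-day default come from the text above.

```python
from datetime import datetime, timedelta


def active_memories(memories, now, retention_days=30):
    """Return only the memories created within the retention period."""
    if not 1 <= retention_days <= 999:
        raise ValueError("retention_days must be between 1 and 999")
    cutoff = now - timedelta(days=retention_days)
    return [m for m in memories if m["created_at"] >= cutoff]


now = datetime(2025, 12, 2)
memories = [
    {"text": "prefers English replies", "created_at": datetime(2025, 11, 20)},
    {"text": "asked about invoices", "created_at": datetime(2025, 9, 1)},
]

kept = active_memories(memories, now)  # uses the 30-day default
```

With the default period, the September record falls outside the window and is dropped, while the November record is kept.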

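Advanced Settings

Synonym Settings

You can import proper nouns specific to your business scenario. Before retrieval, synonyms found in the query are replaced with the standard name used in the knowledge base, which improves retrieval accuracy.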
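A minimal sketch of this kind of query normalization is shown below. It assumes whitespace-delimited text and whole-word, case-insensitive matching, and the synonym table is invented for the example; the platform's own matching rules may differ.

```python
import re


def normalize_query(query, synonyms):
    """Replace each known synonym in the query with its standard name.

    synonyms maps an alias to the standard name used in the knowledge base.
    """
    if not synonyms:
        return query
    lookup = {alias.lower(): name for alias, name in synonyms.items()}
    # Try longer aliases first so that overlapping aliases resolve predictably.
    aliases = sorted(lookup, key=len, reverse=True)
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(a) for a in aliases) + r")\b",
        re.IGNORECASE,
    )
    return pattern.sub(lambda m: lookup[m.group(0).lower()], query)


# Hypothetical synonym table.
synonyms = {"k8s": "Kubernetes", "kube": "Kubernetes"}
normalized = normalize_query("How do I upgrade k8s?", synonyms)
```

Matching all aliases in a single pass avoids replacing text that an earlier substitution has already inserted.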

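Advanced Dialogue Settings

The advanced dialogue settings support model context rewriting.

Model context rewriting: When this switch is on, the large model uses the preceding questions and answers to complete the current question.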

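Intent Fulfillment Priority

In most cases, you do not need to set the intent fulfillment priority.

If the Q&A pairs and workflows you have configured on the platform are highly similar, the large model may be less accurate in deciding which one to invoke. In that case, you can set an appropriate priority.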

Application-side User Permissions
