Automatic Installation of Tesla Driver During Instance Creation - Linux (Recommended)

Last updated: 2025-06-09 12:04:55

Application Scenario

For the Cloud GPU Service to work properly, the correct underlying software must be installed in advance. For NVIDIA series GPUs, the following two levels of software packages need to be installed:

The hardware driver that drives the GPU to work.

The libraries required by upper-level applications.

For your convenience, the purchase page offers multiple methods for installing the GPU driver along with the associated CUDA and cuDNN libraries. When creating a GPU instance, you can select the method that best fits your business requirements to complete the driver deployment.

Installation Methods

Installation Method | Description | Link |

Method 1: Automatically Installing Driver After Selecting a Public Image | During image selection on the purchase page, select a public image and select the option to automatically install the GPU driver in the background. | |

Method 2: Installing GPU Driver Using a Script | In the custom data text box on the purchase page, enter the driver auto-installation script. | |

Method 1: Automatically Installing Driver After Selecting a Public Image

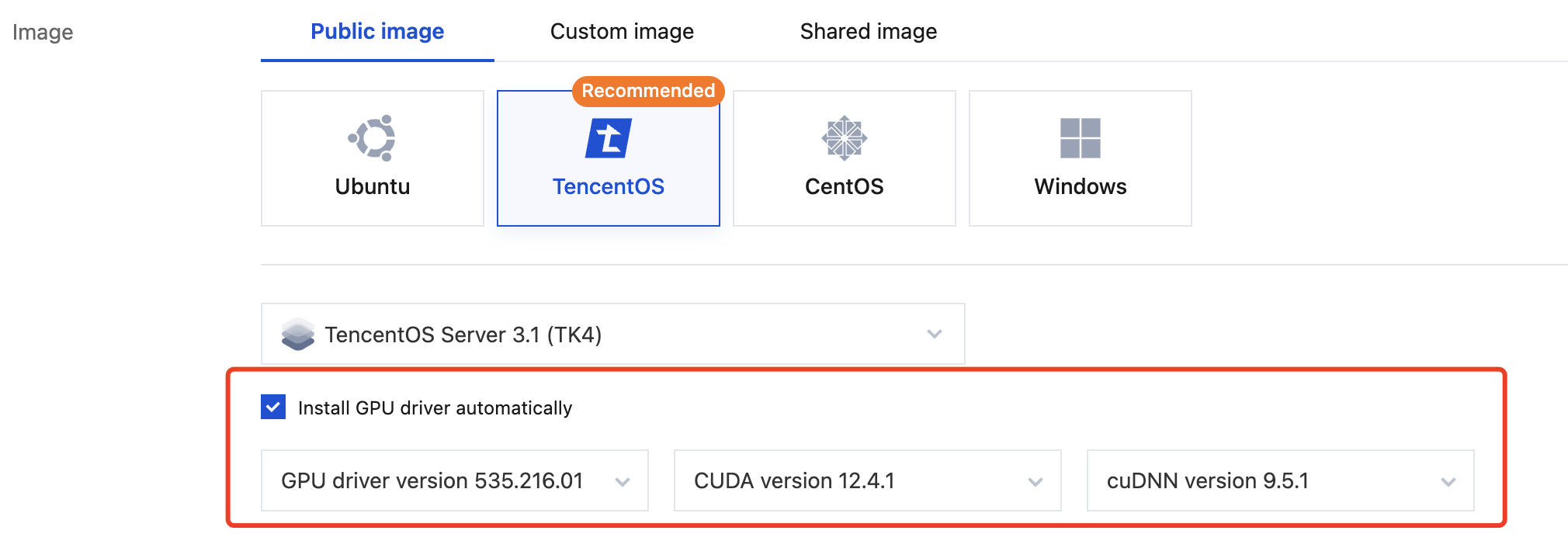

1. During instance creation on the purchase page of a CVM, select a CentOS or Ubuntu image in the image selection step.

2. After you select the image, the Install GPU driver automatically option will appear. Once it is selected, you can choose the desired CUDA and cuDNN versions as needed, as shown in the figure below:

Note:

Only certain image versions for compute-optimized instances support automatic Tesla driver installation, as displayed on the purchase page.

3. After creation, go to the console, locate the instance, and wait approximately 10 minutes for the driver installation to complete.

4. See Logging In To Linux Instance (Web Shell) to log in to the instance.

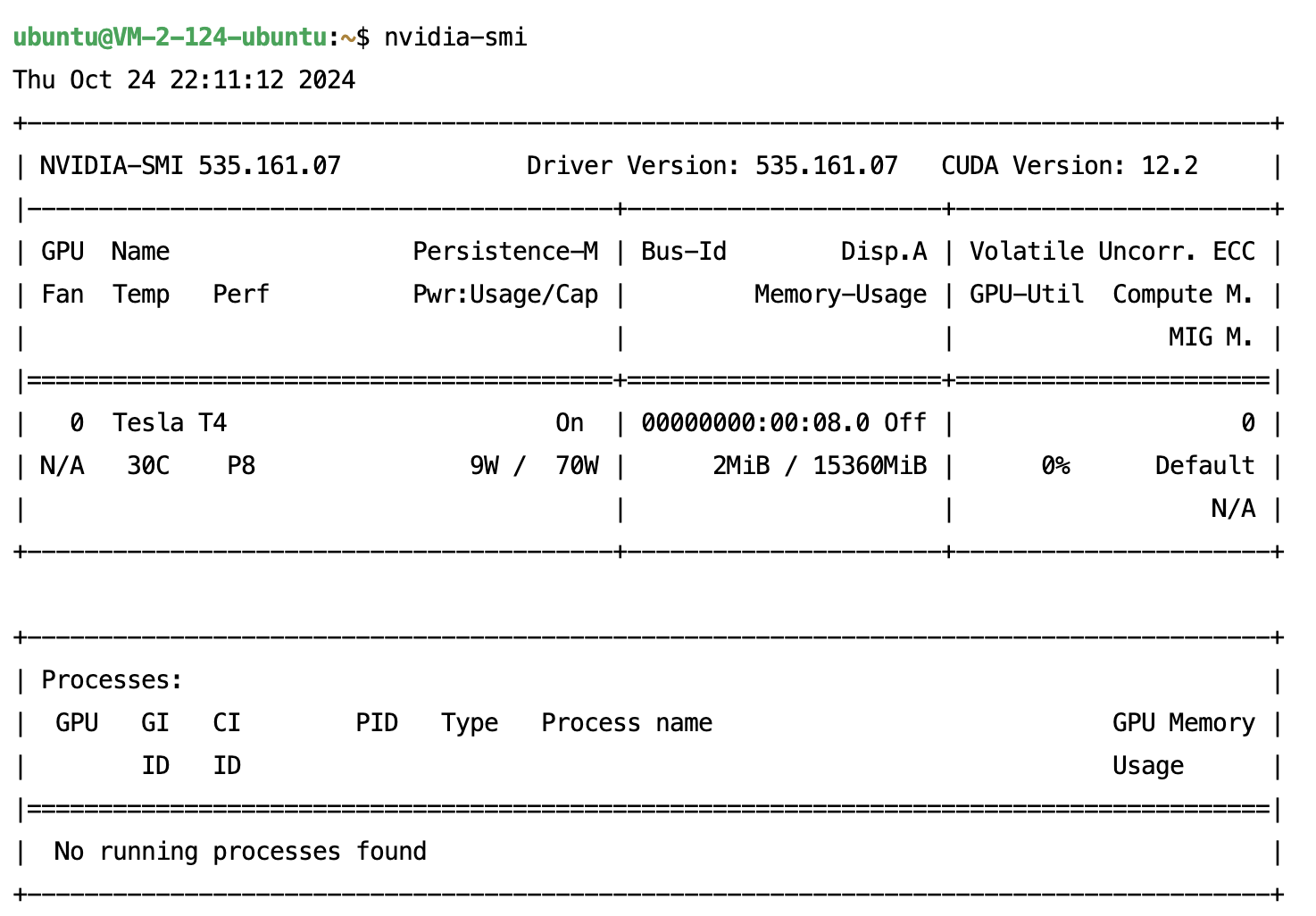

5. Execute the following command to verify whether the driver has been successfully installed.

nvidia-smi

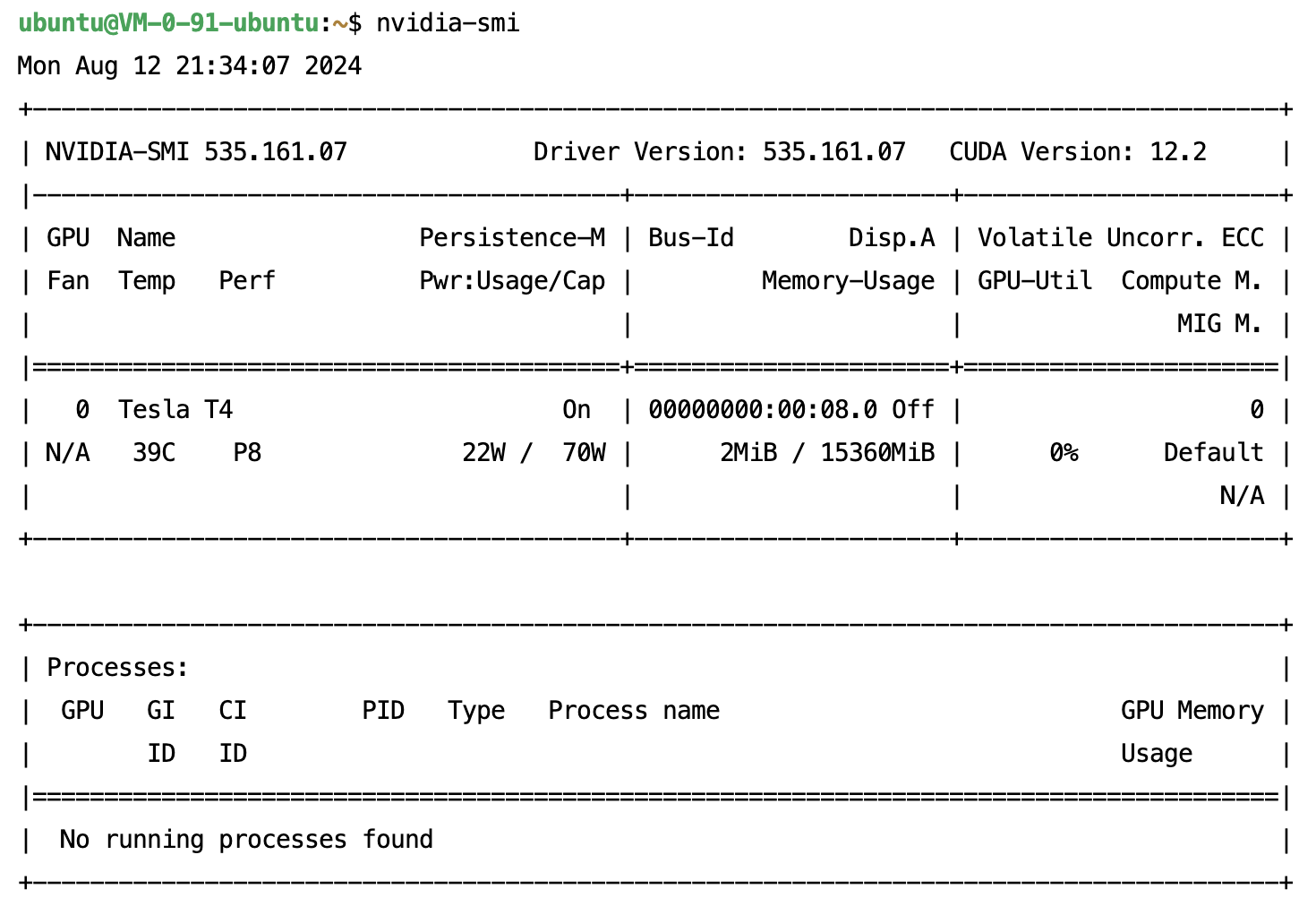

If the returned information is similar to the GPU information in the figure below, it indicates that the driver has been successfully installed.
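Because the automatic installation takes several minutes after the instance is created, it can be convenient to poll instead of retrying by hand. The following is a minimal sketch, not part of the official tooling: the function name `wait_for_driver`, the 1200-second timeout, and the 30-second interval are all assumptions chosen for illustration.

```shell
#!/bin/bash
# Poll until a check command succeeds or a timeout elapses.
# Arguments (all optional, defaults are illustrative assumptions):
#   $1 command to run as the readiness check (default: nvidia-smi)
#   $2 timeout in seconds (default: 1200)
#   $3 polling interval in seconds (default: 30)
wait_for_driver() {
    local check_cmd="${1:-nvidia-smi}"
    local timeout_s="${2:-1200}"
    local interval_s="${3:-30}"
    local waited=0

    # Keep retrying while the check command exits with a non-zero status.
    until "$check_cmd" >/dev/null 2>&1; do
        if [ "$waited" -ge "$timeout_s" ]; then
            echo "driver not ready after ${timeout_s}s" >&2
            return 1
        fi
        sleep "$interval_s"
        waited=$((waited + interval_s))
    done
    echo "driver ready after ~${waited}s"
}

# Example call on a GPU instance:
#   wait_for_driver nvidia-smi 1200 30
```

A zero exit status only shows that `nvidia-smi` ran successfully; you should still inspect its output to confirm the expected GPU and driver version.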

Method 2: Installing GPU Driver Using a Script

Directions

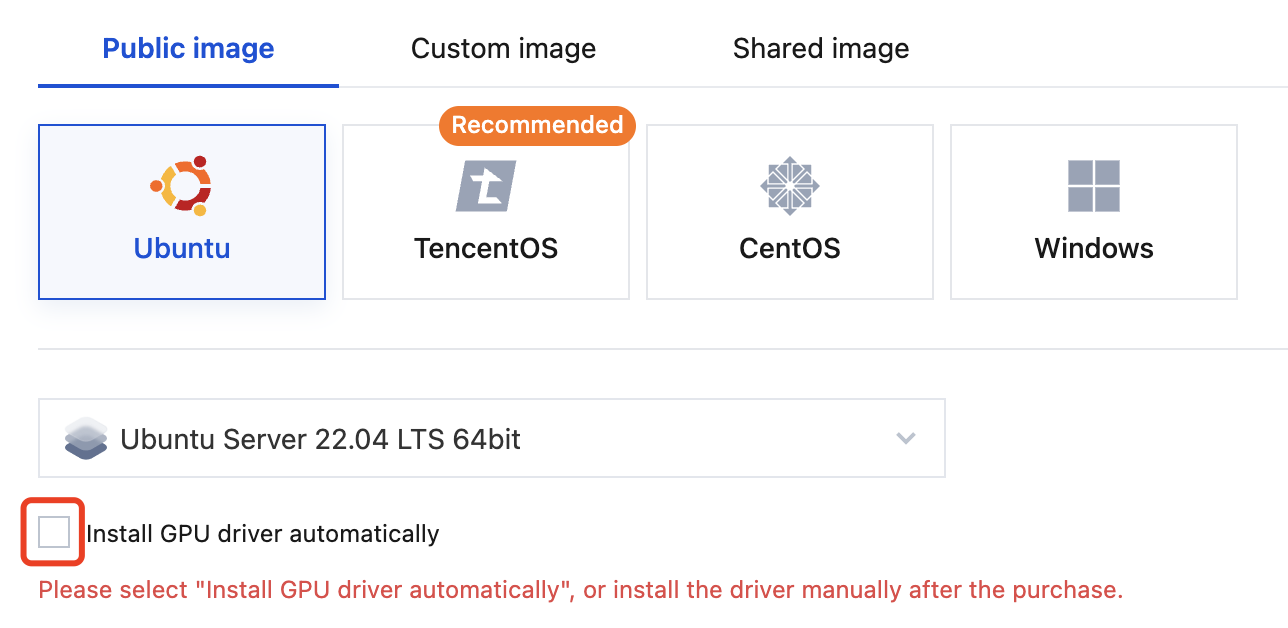

1. On the CVM purchase page, select a GPU instance. In the image selection step, do not select the option to automatically install the GPU driver in the background.

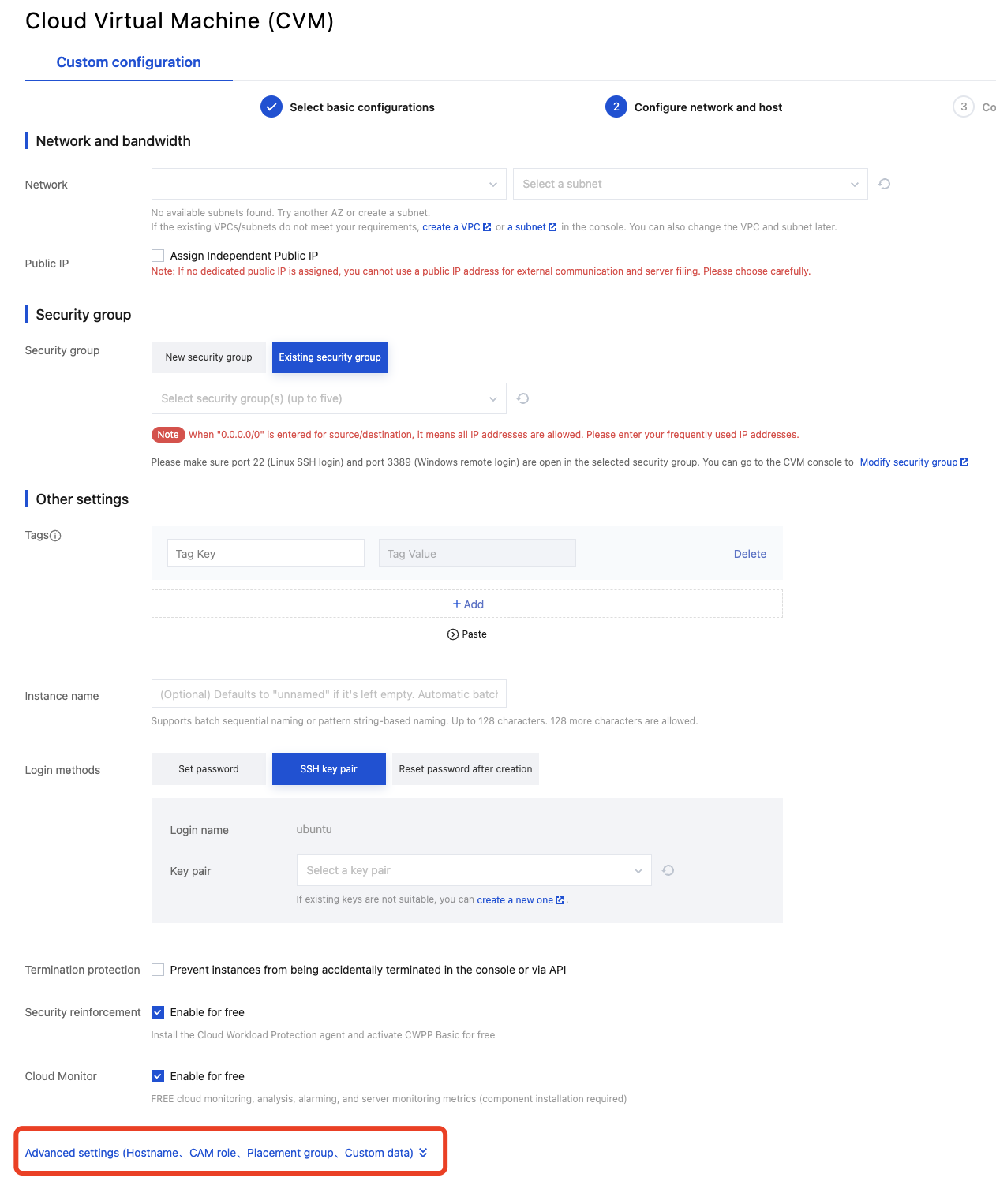

2. In the Configure network and host step, click Advanced settings under Other settings, as shown in the figure below:

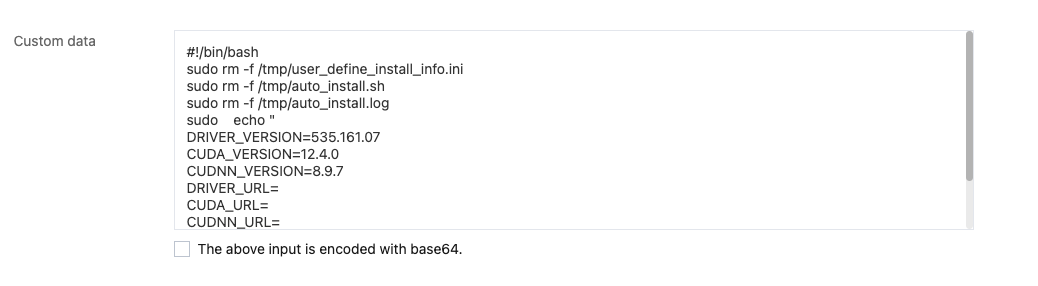

3. In the Advanced settings section, enter the driver auto-installation script in the Custom data text box, as shown in the figure below:

The complete script is provided below. See Parameter Description to update the parameters, and then copy the entire script into the custom data field:

#!/bin/bash
sudo rm -f /tmp/user_define_install_info.ini
sudo rm -f /tmp/auto_install.sh
sudo rm -f /tmp/auto_install.log
sudo echo "DRIVER_VERSION=535.161.07
CUDA_VERSION=12.4.0
CUDNN_VERSION=8.9.7
DRIVER_URL=
CUDA_URL=
CUDNN_URL=
" > /tmp/user_define_install_info.ini
sudo wget https://mirrors.tencentyun.com/install/GPU/auto_install.sh -O /tmp/auto_install.sh && sudo chmod +x /tmp/auto_install.sh && sudo /tmp/auto_install.sh > /tmp/auto_install.log 2>&1 &

4. Follow the instructions on the interface to complete the creation of the CVM.

5. Log in to the instance and enter the following command to check the background process running the auto-install script:

ps aux | grep auto_install

6. After waiting for 10–20 minutes, run the following command to verify whether the driver is installed successfully.

nvidia-smi

If the returned information is similar to the GPU information in the figure below, it indicates that the driver has been successfully installed.

Enter

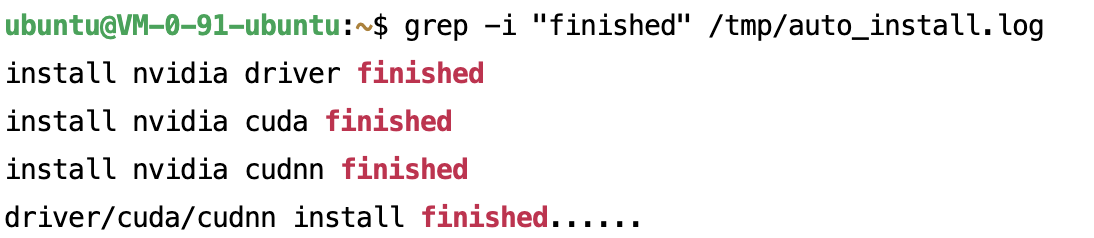

grep -i “finished” /tmp/auto_install.log to check the installation records for CUDA and cuDNN.
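If you want to use the log check in automation, the same search can be wrapped in a function that returns a usable exit status. This is a sketch only: the function name `check_install_log` is hypothetical, and the exact wording of the log entries is an assumption; only the `finished` keyword and the log path come from the steps above.

```shell
#!/bin/bash
# Count log lines containing "finished" (case-insensitive).
# Returns 0 if at least one such line exists, 1 if none, 2 if the log is unreadable.
# $1: log path (default: the path used by the installation script above).
check_install_log() {
    local log_file="${1:-/tmp/auto_install.log}"
    local count

    if [ ! -r "$log_file" ]; then
        echo "log not readable: $log_file" >&2
        return 2
    fi

    # grep -c exits non-zero when nothing matches, so ignore its status here.
    count=$(grep -ic "finished" "$log_file" || true)
    echo "finished entries: $count"
    [ "$count" -gt 0 ]
}

# Example call:
#   check_install_log /tmp/auto_install.log
```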

Parameter Description

When using the automatic driver installation script, you can either specify the driver version number for installation or provide the corresponding driver download URL.

Based on the created instance specifications and image, adjust the corresponding Tesla driver, CUDA, and cuDNN library version parameters within the supported combination range:

DRIVER_VERSION=535.161.07
CUDA_VERSION=12.4.0
CUDNN_VERSION=8.9.7
DRIVER_URL=
CUDA_URL=
CUDNN_URL=

Note:

Only certain Linux images for NVIDIA compute-optimized instances support Tesla driver installation scripts.

It is recommended to select the latest versions of the Tesla driver, CUDA, and cuDNN libraries.

After the instance is created, executing the script takes approximately 10–20 minutes.
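When you create instances repeatedly, generating the parameter file from variables helps avoid typos in the version strings. The sketch below assumes the file layout shown above; the function name `write_install_info` and the dotted-number format check are illustrative assumptions, not behavior of the official script.

```shell
#!/bin/bash
# Write the version-based parameter file.
# Arguments: driver version, CUDA version, cuDNN version, optional output path.
write_install_info() {
    local driver="$1" cuda="$2" cudnn="$3"
    local out="${4:-/tmp/user_define_install_info.ini}"
    local re='^[0-9]+(\.[0-9]+)+$'
    local v

    # Reject anything that is not a dotted numeric version such as 12.4.0.
    for v in "$driver" "$cuda" "$cudnn"; do
        if ! [[ "$v" =~ $re ]]; then
            echo "bad version: $v" >&2
            return 1
        fi
    done

    # The URL parameters stay empty so that the version parameters apply.
    cat > "$out" <<EOF
DRIVER_VERSION=${driver}
CUDA_VERSION=${cuda}
CUDNN_VERSION=${cudnn}
DRIVER_URL=
CUDA_URL=
CUDNN_URL=
EOF
}

# Example call:
#   write_install_info 535.161.07 12.4.0 8.9.7
```

The format check does not confirm that a combination is supported; it only catches malformed input.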

The supported combinations of models, images, Tesla drivers, CUDA, and cuDNN are as follows:

Note:

Some of the instance types listed in the table below belong to Cloud Bare Metal (CBM) and Hyper Computing Cluster.

Instance Type | Public Image | Tesla Driver Version | CUDA Version | cuDNN Version |

GT4, PNV4, GN10Xp, GN10X, GN8, GN7, BMG5t, BMG5v, HCCPNV4h, HCCG5v, HCCG5vm, HCCPNV4sn, and HCCPNV5v | TencentOS Server 3.1 (TK4), Ubuntu Server 22.04 LTS 64-bit, Ubuntu Server 20.04 LTS 64-bit | 550.90.07 | 12.4.0 | 8.9.7 |

GT4, PNV4, GN10Xp, GN10X, GN8, GN7, BMG5t, BMG5v, HCCPNV4h, HCCG5v, HCCG5vm, HCCPNV4sn, HCCPNV4sne, and HCCPNV5v | TencentOS Server 3.1 (TK4), TencentOS Server 2.4 (TK4), Ubuntu Server 22.04 LTS 64-bit, Ubuntu Server 20.04 LTS 64-bit, CentOS 7.x 64-bit, CentOS 8.x 64-bit | 535.183.06, 535.161.07 | 12.4.0 | 8.9.7 |

| | 535.183.06, 535.161.07 | 12.2.2 | 8.9.4 |

GT4, PNV4, GN10Xp, GN10X, GN8, GN7, BMG5t, BMG5v, HCCPNV4h, HCCG5v, HCCG5vm, HCCPNV4sn, HCCPNV4sne, and HCCPNV5v | TencentOS Server 3.1 (TK4), TencentOS Server 2.4 (TK4), Ubuntu Server 20.04 LTS 64-bit, Ubuntu Server 18.04 LTS 64-bit, CentOS 7.x 64-bit, CentOS 8.x 64-bit | 525.105.17 | 12.0.1 | 8.8.0 |

GT4, PNV4, GN10Xp, GN10X, GN8, GN7, BMG5t, BMG5v, HCCPNV4h, HCCG5v, HCCG5vm, HCCPNV4sn, and HCCPNV4sne | | 470.182.03 | 11.4.3 | 8.2.4 |
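Before launching an instance, you may want to confirm that the three versions you plan to use form one of the combinations listed above. The following sketch hard-codes only the driver, CUDA, and cuDNN triples from the table; it does not check the instance type or image, and the function name `is_supported_combo` is hypothetical.

```shell
#!/bin/bash
# Return 0 if the driver/CUDA/cuDNN triple appears in the table above, 1 otherwise.
# Arguments: driver version, CUDA version, cuDNN version.
# Instance type and image are NOT checked; consult the table for those.
is_supported_combo() {
    case "$1:$2:$3" in
        "550.90.07:12.4.0:8.9.7") return 0 ;;
        "535.183.06:12.4.0:8.9.7" | "535.161.07:12.4.0:8.9.7") return 0 ;;
        "535.183.06:12.2.2:8.9.4" | "535.161.07:12.2.2:8.9.4") return 0 ;;
        "525.105.17:12.0.1:8.8.0") return 0 ;;
        "470.182.03:11.4.3:8.2.4") return 0 ;;
        *) return 1 ;;
    esac
}

# Example call:
#   is_supported_combo 535.161.07 12.4.0 8.9.7 && echo "listed" || echo "not listed"
```

Because the list is copied from this page, it needs to be updated by hand whenever the supported combinations change.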

Based on the instance type and image, see the official NVIDIA driver, CUDA, and cuDNN documentation to choose a compatible combination of Tesla driver, CUDA, and cuDNN library versions. Download the installation packages, host them at URLs that the instance can access, and fill in the parameters:

DRIVER_VERSION=
CUDA_VERSION=
CUDNN_VERSION=
#Ensure the instance can successfully download the installation packages from the provided URLs.
DRIVER_URL=http://mirrors.tencentyun.com/install/GPU/NVIDIA-Linux-x86_64-535.161.07.run
CUDA_URL=http://mirrors.tencentyun.com/install/GPU/cuda_12.4.0_550.54.14_linux.run
#It is recommended to use installation packages in tar.xz or tgz format for cuDNN.
CUDNN_URL=http://mirrors.tencentyun.com/install/GPU/cudnn-linux-x86_64-8.9.7.29_cuda12-archive.tar.xz
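The recommendation to use tar.xz or tgz packages for cuDNN can be checked before you paste a URL into the parameters. This is a small sketch with a hypothetical function name, `cudnn_package_format_ok`; it inspects only the file extension and does not download or validate the package.

```shell
#!/bin/bash
# Return 0 if the cuDNN package URL ends in .tar.xz or .tgz, 1 otherwise.
# $1: the value intended for CUDNN_URL.
cudnn_package_format_ok() {
    case "$1" in
        *.tar.xz | *.tgz) return 0 ;;
        *) return 1 ;;
    esac
}

# Example call:
#   cudnn_package_format_ok "$CUDNN_URL" || echo "consider a tar.xz or tgz package" >&2
```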

Note:

If any of the DRIVER_URL, CUDA_URL, or CUDNN_URL parameters is specified, the DRIVER_VERSION, CUDA_VERSION, and CUDNN_VERSION parameters will be ignored.

Only NVIDIA compute-optimized instance Linux images support the Tesla driver installation script. There may be risks of incompatibility among card types, images, GPU drivers, CUDA, and cuDNN library installation packages. It is recommended to install the driver using the method of specifying the driver version number.

If you specify a download address outside the Tencent Cloud private network, public network fees will be incurred, and the download will likely take longer.
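Since a single non-empty URL parameter causes all version parameters to be ignored, it is worth checking which mode a parameter file will trigger before you use it. The sketch below mirrors the rule as stated in the note, not the actual logic inside auto_install.sh, which is not shown on this page; the function name `install_mode` is hypothetical.

```shell
#!/bin/bash
# Print "url" if any *_URL parameter has a value, otherwise "version".
# Returns 2 if the parameter file cannot be read.
# $1: parameter file (default: the path used by the installation script).
install_mode() {
    local ini="${1:-/tmp/user_define_install_info.ini}"

    if [ ! -r "$ini" ]; then
        echo "parameter file not readable: $ini" >&2
        return 2
    fi

    # A URL parameter counts as set when at least one character follows "=".
    if grep -Eq '^(DRIVER|CUDA|CUDNN)_URL=.+' "$ini"; then
        echo "url"
    else
        echo "version"
    fi
}

# Example call:
#   install_mode /tmp/user_define_install_info.ini
```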
