PostgreSQL Exporter Integration

Last updated: 2025-11-07 14:49:59

Use Cases

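When you run PostgreSQL, you need to monitor its running status so that you know whether the service is working properly and can troubleshoot PostgreSQL faults. The Prometheus monitoring service monitors PostgreSQL status through an exporter and provides an out-of-the-box Grafana dashboard. This document describes how to deploy the exporter and how to set up PostgreSQL exporter integration and alerting.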


Integration Methods

Method 1: One-Click Installation (Recommended)

Procedure

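1. Log in to the Prometheus monitoring service console.

2. In the instance list, select the Prometheus instance you want to use.

3. On the instance details page, choose Data Collection > Integration Center.

4. In the Integration Center, find and click PostgreSQL. In the installation window that appears, enter the metric collection name, the address and the other required information, then click Save.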

Configuration

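Parameter | Description |

Name | Integration name. Naming rules: the name must be unique, and it must match the regular expression '^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$'. |

Username | PostgreSQL username. |

Password | PostgreSQL password. |

Address | PostgreSQL connection address. |

Labels | Custom labels added to the metrics. |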
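The naming rule in the table above is the RFC 1123 subdomain pattern that Kubernetes also uses for object names. The Python sketch below checks a candidate name against that regular expression before you submit the form. The helper name is_valid_integration_name is hypothetical, and uniqueness can only be checked in the console.

```python
import re

# Pattern copied from the naming rule above: lowercase alphanumerics and '-',
# in dot-separated segments, each segment starting and ending with an alphanumeric.
NAME_PATTERN = re.compile(r"^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$")


def is_valid_integration_name(name: str) -> bool:
    """Return True if `name` satisfies the format rule (uniqueness is not checked)."""
    # fullmatch() also rejects a trailing newline, which a bare '$' would tolerate.
    return NAME_PATTERN.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["postgres-test", "pg.prod-01", "Postgres", "-pg", "pg_test"]:
        print(candidate, is_valid_integration_name(candidate))
```

In this example, uppercase letters, leading hyphens and underscores are all rejected.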

Method 2: Custom Installation


Prerequisites

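In the Prometheus monitoring service console, select the Prometheus instance, choose Data Collection > Integrate with TKE, then find the target container cluster and associate it with the instance. For instructions, see Associating a Cluster.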

Procedure

Step 1: Deploy the exporter

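1. Log in to the Tencent Kubernetes Engine (TKE) console.

2. In the left sidebar, select Clusters.

3. Click the ID/name of the target cluster to open its management page.

4. Deploy the exporter by completing the following steps in order: Use a Secret to manage the PostgreSQL password > Deploy the PostgreSQL exporter > Get metrics.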

Use a Secret to manage the PostgreSQL password

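1. In the left sidebar, choose Workload > Deployment to open the Deployment page.

2. In the upper-right corner of the page, click Create via YAML and enter the YAML configuration described below: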

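Use a Kubernetes Secret to manage the password so that it does not appear in plain text in the Deployment, and reference the Secret keys directly when starting the PostgreSQL exporter. Set password to your PostgreSQL password. Example YAML configuration:

apiVersion: v1
kind: Secret
metadata:
  name: postgres-test
  namespace: postgres-test # must be the same namespace as the exporter Deployment
type: Opaque
stringData:
  username: postgres
  password: you-guess # the PostgreSQL password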
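stringData is a write-only convenience field. Kubernetes stores its values base64-encoded under data, and base64 is an encoding, not encryption. The Python sketch below produces the equivalent data section for the example credentials, which helps if your tooling only accepts data. The function name secret_data is hypothetical.

```python
import base64


def secret_data(string_data: dict[str, str]) -> dict[str, str]:
    """Convert Secret `stringData` values into the base64 form used under `data`."""
    return {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in string_data.items()
    }


if __name__ == "__main__":
    # Same example credentials as the Secret manifest above.
    data = secret_data({"username": "postgres", "password": "you-guess"})
    for key, value in data.items():
        print(f"  {key}: {value}")  # lines to place under `data:` in the manifest
```

Because anyone who can read the Secret can decode these values, restrict read access to Secrets in the namespace with RBAC.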

Deploy the PostgreSQL exporter

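On the Deployment management page, click Create and select the namespace in which to deploy the service. You can create the Deployment through the console form. The following example deploys the exporter with YAML instead; copy it and adjust the parameters for your environment.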

apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres-test # Adjust as needed; including the PG instance information in the name is recommended
  namespace: postgres-test # Adjust as needed; including the PG instance information in the name is recommended
  labels:
    app: postgres
    app.kubernetes.io/name: postgresql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgres
      app.kubernetes.io/name: postgresql
  template:
    metadata:
      labels:
        app: postgres
        app.kubernetes.io/name: postgresql
    spec:
      containers:
        - name: postgres-exporter
          image: ccr.ccs.tencentyun.com/rig-agent/postgres-exporter:v0.8.0
          args:
            - "--web.listen-address=:9187" # Port the exporter listens on
            - "--log.level=debug" # Log level
          env:
            - name: DATA_SOURCE_USER
              valueFrom:
                secretKeyRef:
                  name: postgres-test # Name of the Secret created in the previous step
                  key: username # Key in that Secret
            - name: DATA_SOURCE_PASS
              valueFrom:
                secretKeyRef:
                  name: postgres-test # Name of the Secret created in the previous step
                  key: password # Key in that Secret
            - name: DATA_SOURCE_URI
              value: "x.x.x.x:5432/postgres?sslmode=disable" # Connection information
          ports:
            - name: http-metrics
              containerPort: 9187

Note:

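The example above passes the username and password from the Secret to the environment variables DATA_SOURCE_USER and DATA_SOURCE_PASS, so the plaintext credentials do not appear in the Deployment. You can also read the username and password from files with DATA_SOURCE_USER_FILE/DATA_SOURCE_PASS_FILE, or include them in the connection string with DATA_SOURCE_NAME, for example postgresql://login:password@hostname:port/dbname.

Parameter description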

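Parameter | Description |

sslmode | Whether to use SSL. Supported values: - disable: do not use SSL. - require: always use SSL (skip verification). - verify-ca: always use SSL and check that the server certificate is issued by a trusted CA. - verify-full: always use SSL, check that the server certificate is issued by a trusted CA, and check that the hostname matches the certificate. |

fallback_application_name | A fallback application_name. |

connect_timeout | Maximum time to wait for a connection, in seconds. 0 means wait indefinitely. |

sslcert | Path to the certificate file. The file must be in PEM format. |

sslkey | Path to the private key file. The file must be in PEM format. |

sslrootcert | Path to the root certificate file. The file must be in PEM format. |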
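The connection parameters above are appended as query parameters when you use a single DATA_SOURCE_NAME URI. The Python sketch below assembles such a URI and percent-encodes the credentials, so that characters such as @, : or / in a password do not break URI parsing.

Assumptions: build_dsn is a hypothetical helper, and the host 10.0.0.1 is a placeholder. The resulting string contains the password, so store it in a Secret like the other credentials.

```python
from urllib.parse import quote, urlencode


def build_dsn(user: str, password: str, host: str, port: int, dbname: str, **params: str) -> str:
    """Build a postgresql:// URI; extra keyword arguments become query parameters."""
    # safe="" also escapes '/', ':' and '@', which are URI delimiters.
    credentials = f"{quote(user, safe='')}:{quote(password, safe='')}"
    query = f"?{urlencode(params)}" if params else ""
    return f"postgresql://{credentials}@{host}:{port}/{quote(dbname, safe='')}{query}"


if __name__ == "__main__":
    print(build_dsn("monitor", "p@ss:w/rd", "10.0.0.1", 5432, "postgres",
                    sslmode="verify-full", connect_timeout="5"))
```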

Parameter | Description | Environment variable |

--web.listen-address | Listen address. Default: :9187. | PG_EXPORTER_WEB_LISTEN_ADDRESS |

--web.telemetry-path | Path under which metrics are exposed. Default: /metrics. | PG_EXPORTER_WEB_TELEMETRY_PATH |

--extend.query-path | Path to a YAML file containing custom queries (for example queries.yaml). | PG_EXPORTER_EXTEND_QUERY_PATH |

--disable-default-metrics | Use only the metrics provided through queries.yaml. | PG_EXPORTER_DISABLE_DEFAULT_METRICS |

--disable-settings-metrics | Do not scrape pg_settings metrics. | PG_EXPORTER_DISABLE_SETTINGS_METRICS |

--auto-discover-databases | Whether to automatically discover the databases on the Postgres instance. | PG_EXPORTER_AUTO_DISCOVER_DATABASES |

--dumpmaps | Print the internal metric maps. Use only for debugging, for example to troubleshoot custom queries. | - |

--constantLabels | Custom labels in key=value form; separate multiple pairs with commas. | PG_EXPORTER_CONSTANT_LABELS |

--exclude-databases | Databases to exclude. Takes effect only when --auto-discover-databases is enabled. | PG_EXPORTER_EXCLUDE_DATABASES |

--log.level | Log level: debug/info/warn/error/fatal. | PG_EXPORTER_LOG_LEVEL |
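The --constantLabels value is a comma-separated list of key=value pairs. The Python sketch below parses a value in that format, which can catch a malformed pair before you put it into the Deployment args.

Assumptions: parse_constant_labels is a hypothetical helper. The exporter's own parser may differ in details such as whitespace handling.

```python
def parse_constant_labels(spec: str) -> dict[str, str]:
    """Parse "k1=v1,k2=v2" into a dict, raising ValueError on malformed pairs."""
    labels: dict[str, str] = {}
    if not spec:
        return labels
    for pair in spec.split(","):
        # Split on the first '=' only, so values may themselves contain '='.
        key, sep, value = pair.partition("=")
        if not sep or not key:
            raise ValueError(f"malformed label pair: {pair!r}")
        labels[key] = value
    return labels


if __name__ == "__main__":
    print(parse_constant_labels("env=prod,region=ap-guangzhou"))
```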

Get metrics

The default metrics returned by curl http://exporter:9187/metrics do not include the start time, and therefore the uptime, of the Postgres instance. You can obtain this metric by defining a custom queries.yaml:

1. Create a ConfigMap that contains queries.yaml.

2. Mount the ConfigMap as a volume into a directory of the exporter container.

3. Point --extend.query-path at the mounted file. Combining this with the Secret and Deployment above gives the following YAML:

# Note: the following document creates a Namespace named postgres-test and is for reference only
apiVersion: v1
kind: Namespace
metadata:
  name: postgres-test
---
# The following document creates a Secret containing the username and password
apiVersion: v1
kind: Secret
metadata:
  name: postgres-test-secret
  namespace: postgres-test
type: Opaque
stringData:
  username: postgres
  password: you-guess
---
# The following document creates a ConfigMap containing the custom metrics in queries.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: postgres-test-configmap
  namespace: postgres-test
data:
  queries.yaml: |
    pg_postmaster:
      query: "SELECT pg_postmaster_start_time as start_time_seconds from pg_postmaster_start_time()"
      master: true
      metrics:
        - start_time_seconds:
            usage: "GAUGE"
            description: "Time at which postmaster started"
---
# The following document mounts the Secret and ConfigMap and defines the image and other exporter parameters
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres-test
  namespace: postgres-test
  labels:
    app: postgres
    app.kubernetes.io/name: postgresql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgres
      app.kubernetes.io/name: postgresql
  template:
    metadata:
      labels:
        app: postgres
        app.kubernetes.io/name: postgresql
    spec:
      containers:
        - name: postgres-exporter
          image: wrouesnel/postgres_exporter:latest
          args:
            - "--web.listen-address=:9187"
            - "--extend.query-path=/etc/config/queries.yaml"
            - "--log.level=debug"
          env:
            - name: DATA_SOURCE_USER
              valueFrom:
                secretKeyRef:
                  name: postgres-test-secret
                  key: username
            - name: DATA_SOURCE_PASS
              valueFrom:
                secretKeyRef:
                  name: postgres-test-secret
                  key: password
            - name: DATA_SOURCE_URI
              value: "x.x.x.x:5432/postgres?sslmode=disable"
          ports:
            - name: http-metrics
              containerPort: 9187
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
      volumes:
        - name: config-volume
          configMap:
            name: postgres-test-configmap

4. Run curl http://exporter:9187/metrics. The output now includes the Postgres instance start time metric defined in the custom queries.yaml, for example:

# HELP pg_postmaster_start_time_seconds Time at which postmaster started
# TYPE pg_postmaster_start_time_seconds gauge
pg_postmaster_start_time_seconds{server="x.x.x.x:5432"} 1.605061592e+09
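With a custom queries.yaml, the metric name is formed by joining the top-level key and the column name: pg_postmaster plus start_time_seconds gives pg_postmaster_start_time_seconds. The value is a Unix timestamp, so uptime is the current time minus this value. In PromQL this is commonly written as time() - pg_postmaster_start_time_seconds.

The Python sketch below does the same calculation on the text returned by curl. Assumptions: start_time and uptime_seconds are hypothetical helpers, and the parser handles only simple exposition lines like the sample (no spaces inside label values and no trailing timestamps).

```python
import time
from typing import Optional

METRIC = "pg_postmaster" + "_" + "start_time_seconds"  # <query key>_<column name>


def start_time(exposition: str, metric: str = METRIC) -> Optional[float]:
    """Return the sample value of `metric` from Prometheus text output, if present."""
    for line in exposition.splitlines():
        if line.startswith("#"):
            continue  # skip HELP/TYPE comment lines
        name_and_labels, _, value = line.rpartition(" ")
        if name_and_labels.split("{", 1)[0] == metric:
            return float(value)
    return None


def uptime_seconds(exposition: str, now: Optional[float] = None) -> Optional[float]:
    """Seconds since postmaster start, or None if the metric is missing."""
    started = start_time(exposition)
    if started is None:
        return None
    return (time.time() if now is None else now) - started


SAMPLE = """# HELP pg_postmaster_start_time_seconds Time at which postmaster started
# TYPE pg_postmaster_start_time_seconds gauge
pg_postmaster_start_time_seconds{server="x.x.x.x:5432"} 1.605061592e+09
"""

if __name__ == "__main__":
    print(uptime_seconds(SAMPLE))
```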

Step 2: Add a scrape job

Once the exporter is running, configure the Prometheus monitoring service to discover it and scrape its metrics as follows:

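1. Log in to the Prometheus monitoring service console and select the Prometheus instance to open its management page.

2. Choose Data Collection > Integrate with TKE, select the associated cluster, and add a scrape configuration through Data Collection Configuration > Create Custom Monitoring > YAML Editing.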

3. Add a PodMonitor through service discovery to define the Prometheus scrape job. Example YAML configuration:

apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: postgres-exporter # Enter a unique name
  namespace: cm-prometheus # Pay-as-you-go instance: the cluster's namespace; monthly subscription instance (no longer sold): the namespace is fixed, do not modify
spec:
  namespaceSelector:
    matchNames:
      - postgres-test
  podMetricsEndpoints:
    - interval: 30s
      path: /metrics
      port: http-metrics # Port name of the exporter container defined earlier
      relabelings:
        - action: labeldrop
          regex: __meta_kubernetes_pod_label_(pod_|statefulset_|deployment_|controller_)(.+)
        - action: replace
          regex: (.*)
          replacement: postgres-xxxxxx
          sourceLabels:
            - instance
          targetLabel: instance
  selector:
    matchLabels:
      app: postgres
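The Python sketch below applies the two relabelings rules to a sample label set to show what they do:

- The labeldrop rule removes every label whose full name matches the regex. Prometheus anchors relabel regexes at both ends, hence fullmatch.
- The replace rule overwrites instance with the fixed value postgres-xxxxxx, because (.*) always matches.

This is a simplified model of these two rules only, not of Prometheus relabeling in general. The sample label values are made up.

```python
import re

# The two rules from the PodMonitor above.
DROP_REGEX = r"__meta_kubernetes_pod_label_(pod_|statefulset_|deployment_|controller_)(.+)"
INSTANCE_REPLACEMENT = "postgres-xxxxxx"


def relabel(labels: dict[str, str]) -> dict[str, str]:
    # action: labeldrop -> remove labels whose *name* fully matches the regex
    kept = {k: v for k, v in labels.items() if not re.fullmatch(DROP_REGEX, k)}
    # action: replace with sourceLabels [instance] and regex (.*): the regex
    # matches any value (even a missing one, read as ""), so targetLabel
    # `instance` is always set to the replacement string
    if re.fullmatch(r"(.*)", kept.get("instance", "")):
        kept["instance"] = INSTANCE_REPLACEMENT
    return kept


if __name__ == "__main__":
    sample = {
        "__meta_kubernetes_pod_label_app": "postgres",
        "__meta_kubernetes_pod_label_pod_template_hash": "5d8f7c9b6",
        "instance": "172.16.0.12:9187",
    }
    print(relabel(sample))
```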


Account Creation and Authorization

TencentDB for PostgreSQL

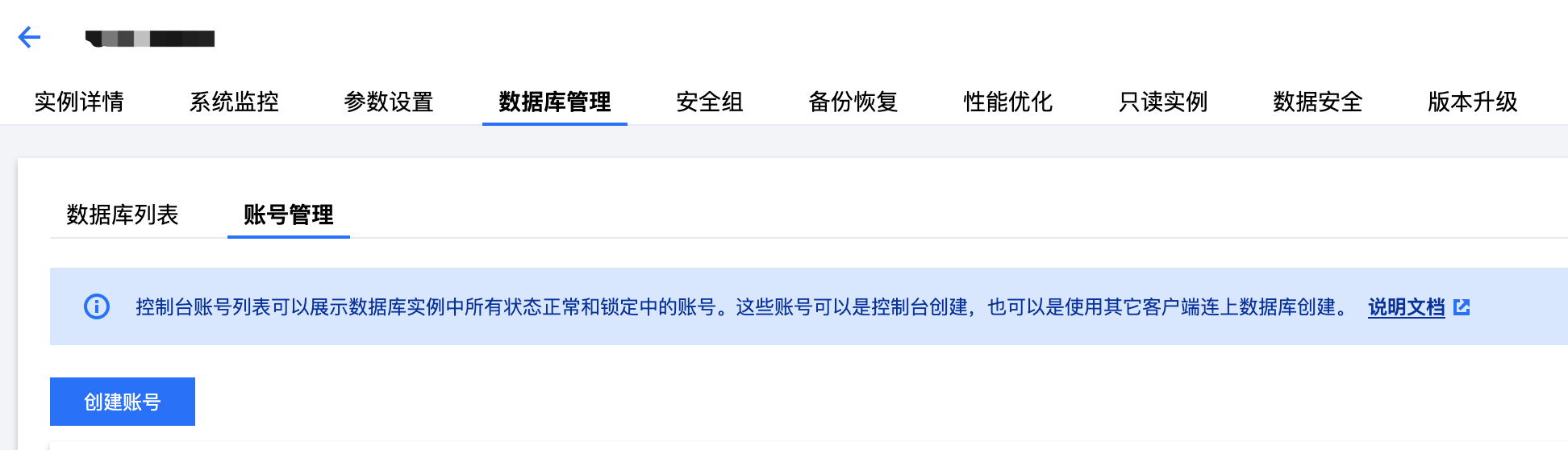

1. Log in to the TencentDB for PostgreSQL console and select the PostgreSQL instance to open its management page.

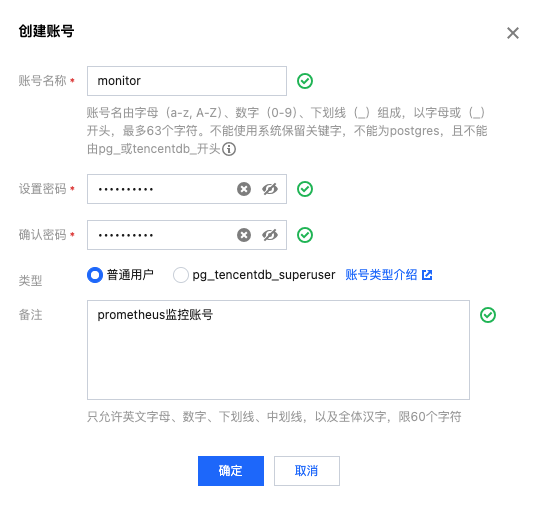

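2. On the management page, choose Database Management > Account Management, then click Create Account.

3. Enter the account name, password and remarks, set the account type to Regular User, then click OK to create the account.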

Other PostgreSQL Services

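For PostgreSQL 10 and later, the monitoring account needs the privileges of the predefined role pg_monitor. For details of this role, see the official documentation. For versions earlier than PostgreSQL 10, the monitoring account must obtain full access to the pg_stat_activity, pg_stat_replication and pg_stat_statements monitoring views indirectly. It does so through functions or views that a superuser creates with SECURITY DEFINER.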
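Which of the two procedures below applies depends only on the server's major version. The query SHOW server_version_num; returns an integer such as 90624 for 9.6.24 or 150004 for 15.4. The Python sketch below decodes that number and reports whether the pg_monitor path applies. The helper names major_version and has_pg_monitor_role are hypothetical.

```python
def major_version(server_version_num: int) -> str:
    """Decode `SHOW server_version_num` into a major version string."""
    if server_version_num >= 100000:
        # 10 and later: major * 10000 + minor, e.g. 150004 -> "15"
        return str(server_version_num // 10000)
    # Before 10: major * 10000 + minor * 100 + patch, e.g. 90624 -> "9.6"
    return f"{server_version_num // 10000}.{server_version_num // 100 % 100}"


def has_pg_monitor_role(server_version_num: int) -> bool:
    """pg_monitor is a predefined role from PostgreSQL 10 onward."""
    return server_version_num >= 100000


if __name__ == "__main__":
    for num in (90624, 100000, 150004):
        print(num, major_version(num), has_pg_monitor_role(num))
```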

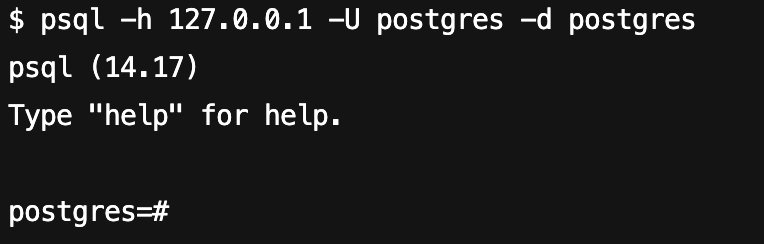

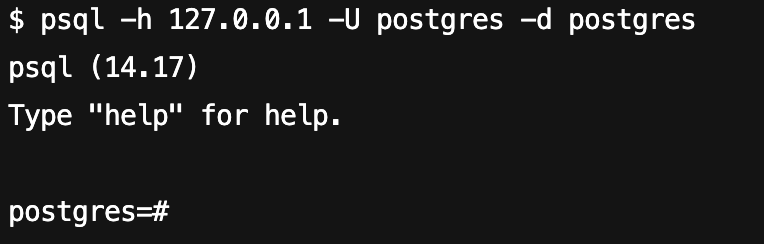

PostgreSQL 10 and later

1. Make sure the client machine can reach the PostgreSQL server. Then use the psql command-line tool to connect to the postgres database with the superuser account postgres.

psql -h <host> -p <port> -U <username> -d <database>

The command output is shown below:

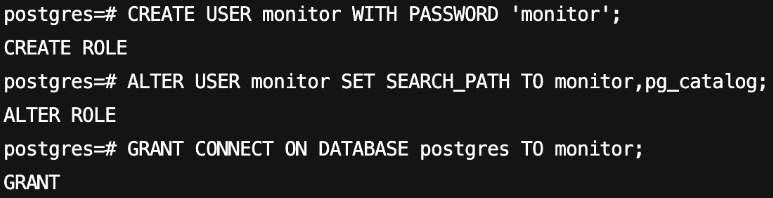

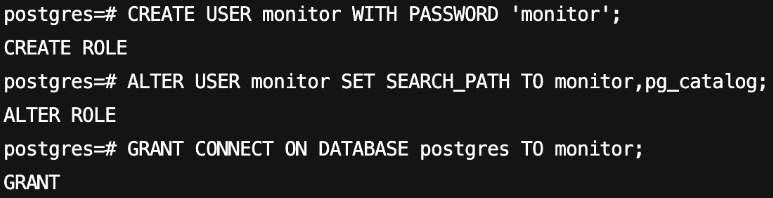

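2. Create a PostgreSQL account, set its default object search path, and grant it permission to connect to the PostgreSQL database. The following example creates a monitoring account whose name and password are both monitor.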

CREATE USER monitor WITH PASSWORD 'monitor';
ALTER USER monitor SET SEARCH_PATH TO monitor,pg_catalog;
GRANT CONNECT ON DATABASE postgres TO monitor;

The command output is shown below:

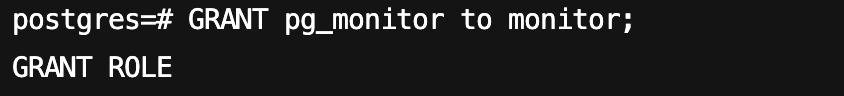

3. Grant the predefined role pg_monitor to the monitoring account created in the previous step.

GRANT pg_monitor to monitor;

The command output is shown below:

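Versions earlier than PostgreSQL 10

1. Make sure the client machine can reach the PostgreSQL server. Then use the psql command-line tool to connect to the postgres database with the superuser account postgres.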

psql -h <host> -p <port> -U <username> -d <database>

The command output is shown below:

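2. Create a PostgreSQL account, set its default object search path, and grant it permission to connect to the PostgreSQL database. The following example creates a monitoring account whose name and password are both monitor.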

CREATE USER monitor WITH PASSWORD 'monitor';
ALTER USER monitor SET SEARCH_PATH TO monitor,pg_catalog;
GRANT CONNECT ON DATABASE postgres TO monitor;

The command output is shown below:

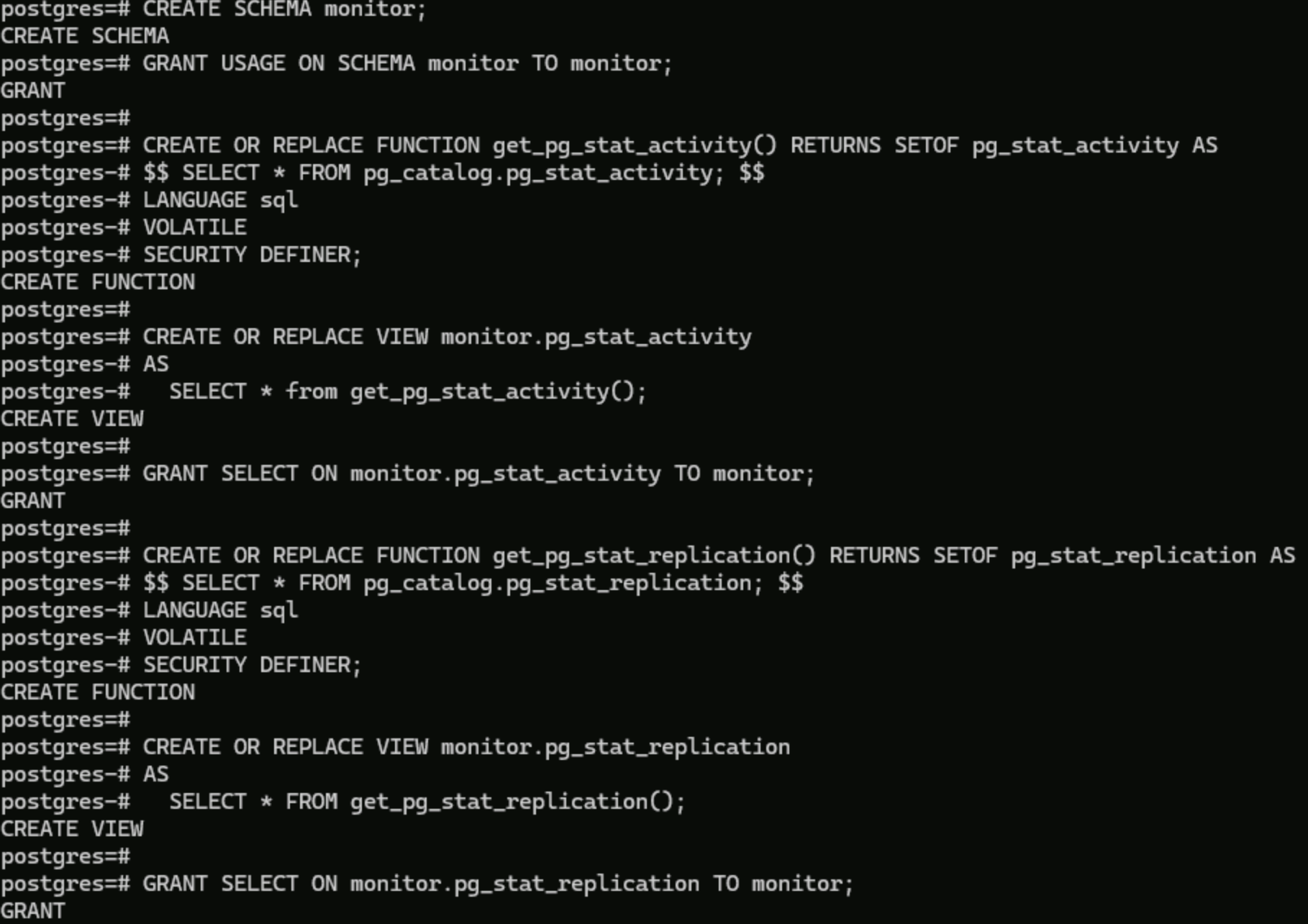

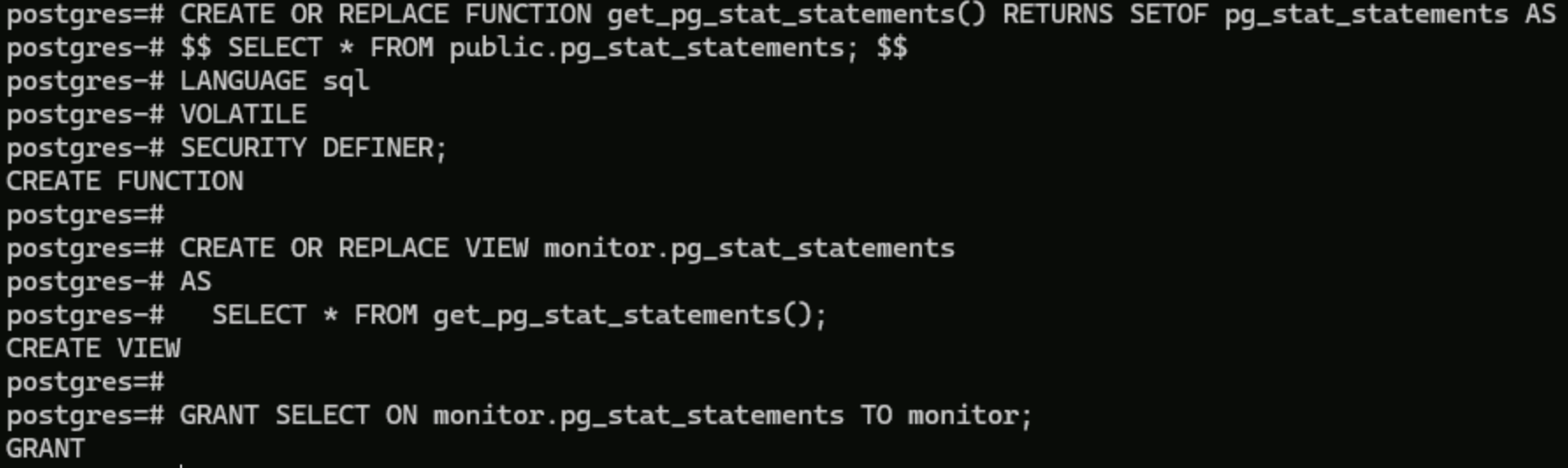

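3. Versions earlier than PostgreSQL 10 have no predefined pg_monitor role. Therefore, create a dedicated monitoring schema for the account from the previous step that exposes the pg_stat_activity, pg_stat_replication and pg_stat_statements views.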

CREATE SCHEMA monitor;
GRANT USAGE ON SCHEMA monitor TO monitor;

CREATE OR REPLACE FUNCTION get_pg_stat_activity() RETURNS SETOF pg_stat_activity AS
$$ SELECT * FROM pg_catalog.pg_stat_activity; $$
LANGUAGE sql
VOLATILE
SECURITY DEFINER;

CREATE OR REPLACE VIEW monitor.pg_stat_activity
AS
SELECT * from get_pg_stat_activity();

GRANT SELECT ON monitor.pg_stat_activity TO monitor;

CREATE OR REPLACE FUNCTION get_pg_stat_replication() RETURNS SETOF pg_stat_replication AS
$$ SELECT * FROM pg_catalog.pg_stat_replication; $$
LANGUAGE sql
VOLATILE
SECURITY DEFINER;

CREATE OR REPLACE VIEW monitor.pg_stat_replication
AS
SELECT * FROM get_pg_stat_replication();

GRANT SELECT ON monitor.pg_stat_replication TO monitor;

CREATE EXTENSION pg_stat_statements;

CREATE OR REPLACE FUNCTION get_pg_stat_statements() RETURNS SETOF pg_stat_statements AS
$$ SELECT * FROM public.pg_stat_statements; $$
LANGUAGE sql
VOLATILE
SECURITY DEFINER;

CREATE OR REPLACE VIEW monitor.pg_stat_statements
AS
SELECT * FROM get_pg_stat_statements();

GRANT SELECT ON monitor.pg_stat_statements TO monitor;

The output of creating the monitoring schema views for pg_stat_activity and pg_stat_replication is shown below:

The output of creating the monitoring schema view for pg_stat_statements is shown below:

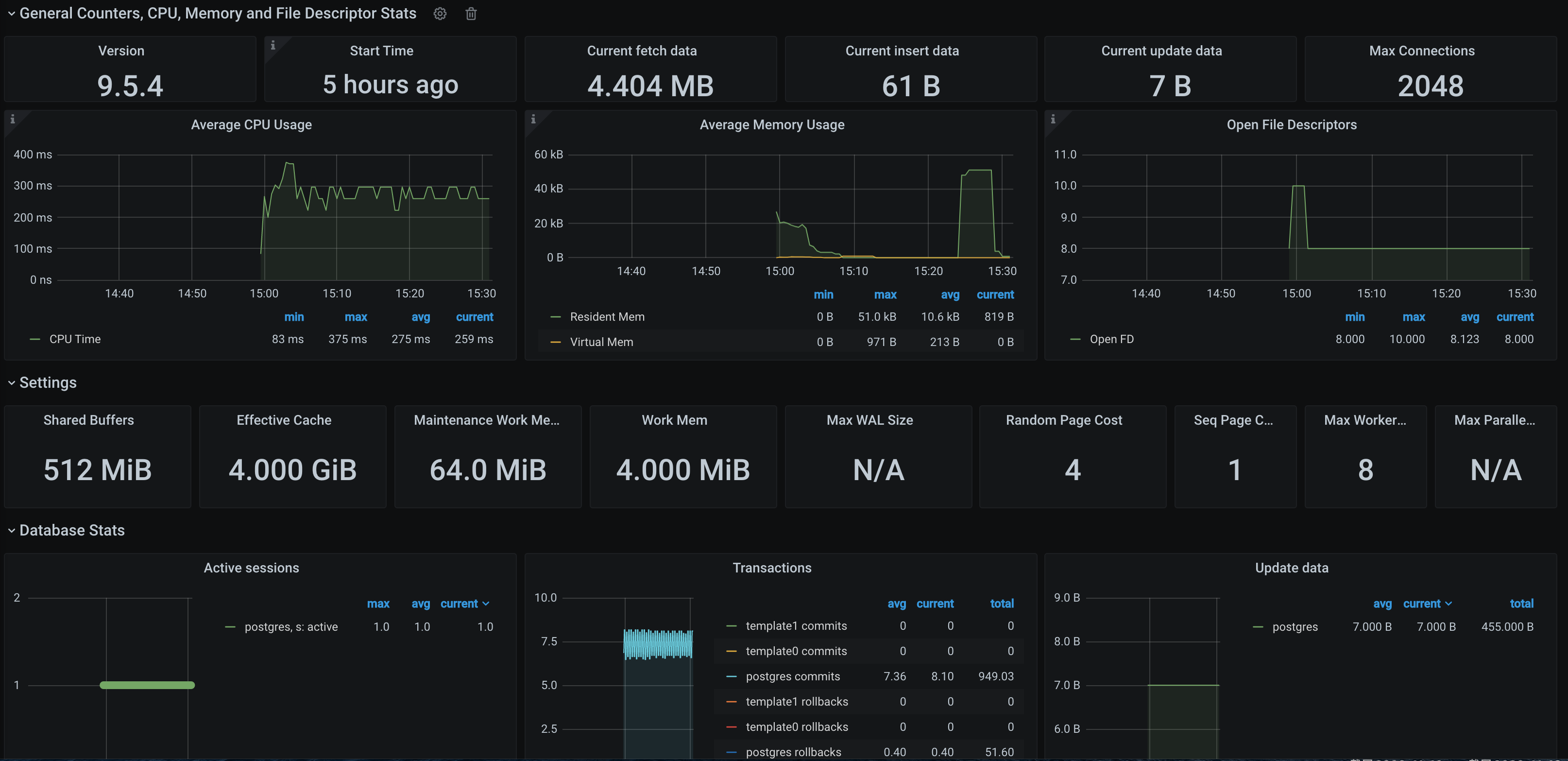

View Monitoring Data


Prerequisites

The Prometheus instance is bound to a Grafana instance.

Procedure

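1. Log in to the Prometheus monitoring service console and select the Prometheus instance to open its management page.

2. Choose Data Collection > Integration Center. On the Integration Center page, find PostgreSQL monitoring, then choose Dashboard Operation > Install/Upgrade Dashboard to install the corresponding Grafana dashboard.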

3. Click the icon to the right of the instance ID.

4. In Grafana, click

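Configure Alerts

1. Log in to the Prometheus monitoring service console and select the Prometheus instance to open its management page.

2. On the Alert Management > Alert Policy page, add the alert policies you need. For details, see Creating an Alert Policy.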

Note:

The Prometheus monitoring service will provide more PostgreSQL alert templates in the future.
