Technology Architecture

Last updated: 2025-09-19 17:35:32

Architecture Description

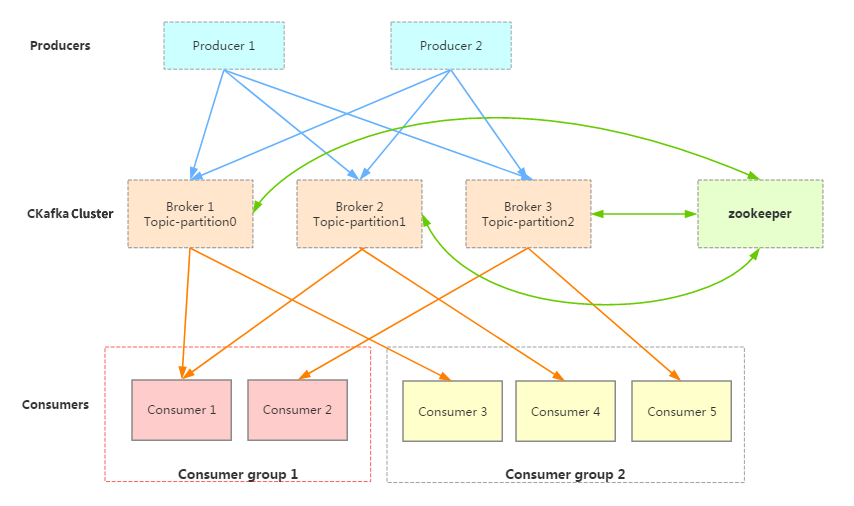

The architecture diagram of CKafka is as follows:

Producer

Message producers generate messages, for example from web page activity or service logs. Producers publish messages to the CKafka Broker cluster in push mode.

CKafka Cluster

Broker: a server that stores messages. Brokers scale horizontally: the more Broker nodes a cluster has, the higher its throughput.

Partition: a subdivision of a Topic. A Topic can have multiple Partitions, and each Partition physically corresponds to a folder that stores that Partition's messages and index files. A Partition can have multiple replicas to improve availability, at the cost of extra storage and network overhead.

ZooKeeper: responsible for cluster configuration management, leader election, fault tolerance, and similar coordination tasks.

Consumer

Message consumers. Consumers are organized into Consumer Groups, and each Consumer fetches messages from the Broker in pull mode.

Architecture Principles

High Throughput

In CKafka, large volumes of data received over the network are persisted to disk, and disk files are in turn sent over the network. The performance of this process directly determines CKafka's overall throughput, which is achieved mainly through the following mechanisms:

Efficient disk usage: data is read from and written to disk sequentially to improve disk utilization.

Writing messages: Messages are written to the page cache and flushed to disk by asynchronous threads.

Reading messages: Messages are directly transferred from the page cache to the socket and sent out.

If the requested data is not in the page cache, disk I/O occurs: the messages are first loaded from disk into the page cache and then sent directly to the socket.
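The write and read paths above can be illustrated with a minimal Python sketch, assuming a single local file stands in for a Partition. `AsyncFlushLog` and its `flush_interval` parameter are hypothetical names, not CKafka APIs: `append` returns as soon as `os.write` has placed the bytes in the OS page cache, and a background thread calls `os.fsync` periodically to force them to disk. The sketch is Unix-only because it uses `os.pread`.

```python
import os
import tempfile
import threading


class AsyncFlushLog:
    """Toy append-only log (hypothetical, not CKafka code): writes go to the
    OS page cache, and a background thread periodically fsyncs them to disk."""

    def __init__(self, path, flush_interval=0.05):
        # O_APPEND makes every os.write land at the end of the file (sequential writes).
        self.fd = os.open(path, os.O_RDWR | os.O_CREAT | os.O_APPEND, 0o644)
        self.flush_interval = flush_interval  # seconds between flushes (assumed value)
        self.lock = threading.Lock()
        self.dirty = False
        self.stopped = threading.Event()
        self.flusher = threading.Thread(target=self._flush_loop, daemon=True)
        self.flusher.start()

    def append(self, message: bytes) -> None:
        # os.write returns once the bytes are in the page cache;
        # it does not wait for the physical disk write.
        with self.lock:
            os.write(self.fd, message)
            self.dirty = True

    def _flush_loop(self) -> None:
        # Event.wait returns False on timeout and True once close() sets the event.
        while not self.stopped.wait(self.flush_interval):
            self._flush()

    def _flush(self) -> None:
        with self.lock:
            needs_flush, self.dirty = self.dirty, False
        if needs_flush:
            os.fsync(self.fd)  # force this file's cached pages onto the disk

    def read_all(self) -> bytes:
        # Served from the page cache while the pages are still cached;
        # otherwise the kernel reads them from disk first.
        size = os.fstat(self.fd).st_size
        return os.pread(self.fd, size, 0)

    def close(self) -> None:
        self.stopped.set()
        self.flusher.join()
        self._flush()  # final flush so nothing is left only in the cache
        os.close(self.fd)


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "partition-0.log")
    log = AsyncFlushLog(path)
    log.append(b"msg-1\n")
    log.append(b"msg-2\n")
    print(log.read_all())  # b'msg-1\nmsg-2\n'
    log.close()
```

The trade-off is the usual one for asynchronous flushing: acknowledging a write before `fsync` keeps producers fast, but data that is still only in the page cache can be lost if the machine crashes before the next flush.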

Zero-copy mechanism on the Broker: the sendfile system call sends data directly from the page cache to the network, without copying it into user-space buffers.
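The zero-copy path can be illustrated with Python's `os.sendfile`, which wraps the same system call on Linux and other Unix systems. This is a sketch, not Broker code: `send_segment_zero_copy` is a hypothetical helper that streams an entire file to a connected socket, looping because `sendfile` may send fewer bytes than requested.

```python
import os
import socket
import tempfile


def send_segment_zero_copy(path: str, sock: socket.socket) -> int:
    """Hypothetical helper: send a whole file over a connected socket using
    os.sendfile, so the kernel moves bytes from the page cache to the socket
    without copying them into this process's memory. Returns bytes sent."""
    with open(path, "rb") as f:
        size = os.fstat(f.fileno()).st_size
        offset = 0
        while offset < size:
            # os.sendfile(out_fd, in_fd, offset, count) may send fewer bytes
            # than requested, so continue from the updated offset.
            sent = os.sendfile(sock.fileno(), f.fileno(), offset, size - offset)
            if sent == 0:  # file ended earlier than expected
                break
            offset += sent
    return offset


if __name__ == "__main__":
    data = b"kafka-message\n" * 100
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(data)
    sender, receiver = socket.socketpair()
    print(send_segment_zero_copy(tmp.name, sender))  # 1400
    sender.close()
    receiver.close()
```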

Reduce network overhead

Data compression: data is compressed to reduce network load.

Batch processing mechanism: The producer writes data to the Broker in batches, and the consumer pulls data from the Broker in batches.
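The sketch below shows both techniques together under stated assumptions: a batch of messages is serialized into a single payload and compressed once, rather than each message being sent and compressed individually. `encode_batch` and `decode_batch` are hypothetical helpers, the newline-delimited JSON framing is an assumption, and `zlib` (DEFLATE, the algorithm used by gzip) is only a stand-in; this does not describe CKafka's wire format or which codecs it supports.

```python
import json
import zlib


def encode_batch(messages):
    """Hypothetical helper: serialize a batch as newline-delimited JSON and
    compress the whole batch once. Returns (raw_bytes, compressed_bytes)."""
    raw = b"\n".join(json.dumps(m, sort_keys=True).encode("utf-8") for m in messages)
    return raw, zlib.compress(raw)


def decode_batch(compressed):
    """Reverse of encode_batch: decompress once, then split into messages."""
    raw = zlib.decompress(compressed)
    if not raw:
        return []
    # json.dumps escapes newlines inside strings, so b"\n" only separates records.
    return [json.loads(line) for line in raw.split(b"\n")]


if __name__ == "__main__":
    # 200 similar log-style events, sent as one batch instead of 200 requests.
    events = [{"page": "/home", "event": "click", "user": i % 10} for i in range(200)]
    raw, compressed = encode_batch(events)
    print(len(raw), len(compressed))
    assert decode_batch(compressed) == events
```

Batching also helps compression: similar messages in the same batch share repeated fields, which the compressor can encode as back-references instead of repeating them.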

Data Persistence

CKafka's data persistence relies mainly on the following principles:

Storage distribution of Partitions in a Topic

In CKafka's file storage, a Topic contains multiple Partitions. Each Partition physically corresponds to a folder that stores that Partition's messages and index files. For example, if you create two Topics, Topic1 with 5 Partitions and Topic2 with 10 Partitions, a total of 5 + 10 = 15 folders are created across the cluster.
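The folder count in the example is simply the total number of Partitions across all Topics. The sketch below reproduces it, assuming the Apache Kafka directory naming convention `<topic>-<partition>`; whether CKafka names its folders the same way is an assumption, and `partition_dirs` is a hypothetical helper.

```python
def partition_dirs(topics):
    """Hypothetical helper: one directory per Partition, named with the
    Apache Kafka convention "<topic>-<partition index>"."""
    return [f"{topic}-{p}" for topic, count in topics.items() for p in range(count)]


dirs = partition_dirs({"Topic1": 5, "Topic2": 10})
print(len(dirs))   # 15
print(dirs[:2])    # ['Topic1-0', 'Topic1-1']
print(dirs[-1])    # Topic2-9
```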

File storage method in a Partition

Physically, a Partition consists of multiple segments of equal size. Segments are read and written sequentially, and expired segments are deleted quickly to free disk space.
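A minimal in-memory model of this layout, assuming each segment holds a fixed number of messages and is identified by the offset of its first message (the base offset, which is how Apache Kafka names its segment files). `SegmentedPartition`, `segment_size`, and `retention_seconds` are hypothetical names. Lookups binary-search the base offsets, and expiry removes whole segments from the oldest end, which is why deletion is cheap. Judging expiry by the time of a segment's newest message is a simplification.

```python
import bisect


class SegmentedPartition:
    """Toy model (hypothetical) of a Partition stored as equal-size segments.
    Each segment is keyed by its base offset, the offset of its first message."""

    def __init__(self, segment_size):
        self.segment_size = segment_size  # messages per segment (simplified unit)
        self.base_offsets = []            # sorted base offsets of live segments
        self.segments = {}                # base offset -> list of messages
        self.last_write = {}              # base offset -> time of newest message
        self.next_offset = 0

    def append(self, message, now):
        active = self.base_offsets[-1] if self.base_offsets else None
        if active is None or len(self.segments[active]) == self.segment_size:
            # Active segment is full (or none exists yet): roll a new one.
            active = self.next_offset
            self.base_offsets.append(active)
            self.segments[active] = []
        self.segments[active].append(message)  # sequential append only
        self.last_write[active] = now
        self.next_offset += 1
        return self.next_offset - 1            # offset assigned to this message

    def read(self, offset):
        # Binary-search for the last segment whose base offset <= offset.
        i = bisect.bisect_right(self.base_offsets, offset) - 1
        if i < 0 or offset >= self.next_offset:
            raise KeyError(offset)
        base = self.base_offsets[i]
        return self.segments[base][offset - base]

    def delete_expired(self, retention_seconds, now):
        # Drop whole segments from the oldest end once their newest message
        # is older than the retention period; the active segment is kept.
        while len(self.base_offsets) > 1:
            oldest = self.base_offsets[0]
            if now - self.last_write[oldest] <= retention_seconds:
                break
            self.base_offsets.pop(0)
            del self.segments[oldest]
            del self.last_write[oldest]


if __name__ == "__main__":
    part = SegmentedPartition(segment_size=3)
    for t in range(7):                  # one message per "second"
        part.append(f"m{t}", now=float(t))
    print(part.base_offsets)            # [0, 3, 6]
    print(part.read(4))                 # m4
    part.delete_expired(retention_seconds=3.5, now=7.0)
    print(part.base_offsets)            # [3, 6]
```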

Horizontal Expansion (Scale Out)

A Topic can contain multiple Partitions, which can be distributed across one or more Brokers.

A Consumer can consume one or more Partitions.

The Producer distributes messages evenly across the Partitions.

Messages within a Partition are ordered.
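These points can be sketched as follows. `RoundRobinPartitioner`, `produce`, and `assign_to_brokers` are hypothetical: round-robin is one simple way to spread messages evenly, and placing Partition `i` on Broker `i mod n` is an assumed placement rule, not CKafka's actual scheduling. Each Partition is modeled as an append-only list, so order is preserved within a Partition.

```python
import itertools


class RoundRobinPartitioner:
    """Hypothetical partitioner: hands out Partition indexes in turn,
    which spreads messages evenly across Partitions."""

    def __init__(self, num_partitions):
        self._cycle = itertools.cycle(range(num_partitions))

    def partition(self, message):
        return next(self._cycle)


def produce(messages, num_partitions):
    partitioner = RoundRobinPartitioner(num_partitions)
    # Each Partition is modeled as an append-only list, so order within it is kept.
    partitions = [[] for _ in range(num_partitions)]
    for m in messages:
        partitions[partitioner.partition(m)].append(m)
    return partitions


def assign_to_brokers(num_partitions, brokers):
    # Assumed placement rule: Partition i lives on broker i mod len(brokers).
    return {p: brokers[p % len(brokers)] for p in range(num_partitions)}


if __name__ == "__main__":
    print(produce([f"m{i}" for i in range(7)], 3))
    # [['m0', 'm3', 'm6'], ['m1', 'm4'], ['m2', 'm5']]
    print(assign_to_brokers(3, ["broker-1", "broker-2"]))
    # {0: 'broker-1', 1: 'broker-2', 2: 'broker-1'}
```

In the output, each Partition keeps its messages in send order (`m0`, `m3`, `m6`), but there is no single order across Partitions; related messages that must stay in order need to go to the same Partition.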

Consumer Group

CKafka does not delete consumed messages.

Each Consumer should belong to a Consumer Group.

Multiple consumers in the same Consumer Group do not consume the same Partition simultaneously.

Different Consumer Groups can consume the same message at the same time, which supports diverse processing patterns (both queue mode and publish/subscribe mode).
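The Consumer Group rules can be sketched in two parts, both hypothetical and simplified. `assign_partitions` distributes a Topic's Partitions round-robin among the Consumers of one group, so each Partition has exactly one owner within the group; round-robin is only one possible assignment strategy and not necessarily the one CKafka uses. `GroupOffsets` keeps a separate read position per (group, Partition) pair and never removes messages from the log, which lets a second group read everything the first group has already consumed. Its `poll` method models pull mode: the Consumer requests records starting from its own offset.

```python
def assign_partitions(partitions, consumers):
    """Hypothetical round-robin assignment within ONE Consumer Group:
    every Partition goes to exactly one Consumer of the group."""
    assignment = {c: [] for c in consumers}
    for i, p in enumerate(sorted(partitions)):
        assignment[consumers[i % len(consumers)]].append(p)
    return assignment


class GroupOffsets:
    """Hypothetical per-group offset store. Consumed messages stay in the
    log; each group only advances its own offset."""

    def __init__(self):
        self.offsets = {}  # (group, partition) -> next offset to read

    def poll(self, group, partition, log, max_records=10):
        # Pull model: fetch up to max_records starting at this group's offset.
        start = self.offsets.get((group, partition), 0)
        records = log[start:start + max_records]
        self.offsets[(group, partition)] = start + len(records)
        return records


if __name__ == "__main__":
    print(assign_partitions([0, 1, 2, 3, 4], ["c1", "c2"]))
    # {'c1': [0, 2, 4], 'c2': [1, 3]}
    log = ["m0", "m1", "m2"]  # messages in Partition 0
    offsets = GroupOffsets()
    print(offsets.poll("group-a", 0, log))  # ['m0', 'm1', 'm2']
    print(offsets.poll("group-b", 0, log))  # ['m0', 'm1', 'm2']
    print(offsets.poll("group-a", 0, log))  # []
```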
