Flume 接入 CKafka

最后更新时间:2024-05-31 12:08:45

Apache Flume 是一个分布式、可靠、高可用的日志收集系统,支持各种各样的数据来源(例如 HTTP、Log 文件、JMS、监听端口数据等),能将这些数据源的海量日志数据进行高效收集、聚合、移动,最后存储到指定存储系统中(例如 Kafka、分布式文件系统、Solr 搜索服务器等)。

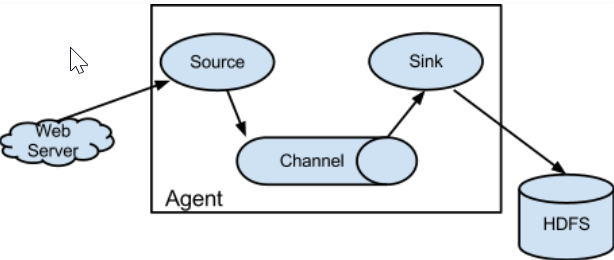

Flume 基本结构如下:

Flume 以 agent 为最小的独立运行单位。一个 agent 就是一个 JVM,单个 agent 由 Source、Sink 和 Channel 三大组件构成。

Flume 与 Kafka

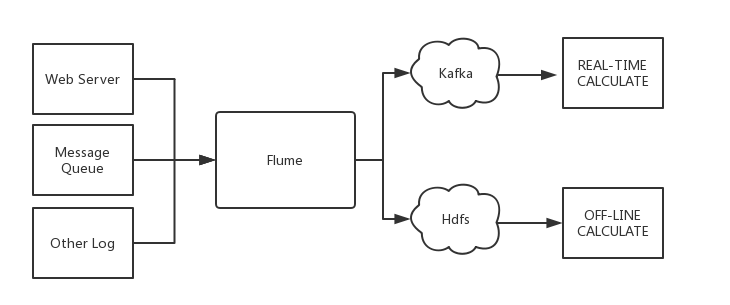

把数据存储到 HDFS 或者 HBase 等下游存储模块或者计算模块时需要考虑各种复杂的场景,例如并发写入的量以及系统承载压力、网络延迟等问题。Flume 作为灵活的分布式系统具有多种接口,同时提供可定制化的管道。

在生产处理环节中,当生产与处理速度不一致时,Kafka 可以充当缓存角色。Kafka 拥有 partition 结构以及采用 append 追加数据,使 Kafka 具有优秀的吞吐能力;同时其拥有 replication 结构,使 Kafka 具有很高的容错性。

所以将 Flume 和 Kafka 结合起来,可以满足生产环境中绝大多数要求。

Flume 接入开源 Kafka

准备工作

下载 Apache Flume (1.6.0以上版本兼容 Kafka)

下载 Kafka工具包 (0.9.x以上版本,0.8已经不支持)

确认 Kafka 的 Source、 Sink 组件已经在 Flume 中。

接入方式

Kafka 可作为 Source 或者 Sink 端对消息进行导入或者导出。

配置 kafka 作为消息来源,即将自己作为消费者,从 Kafka 中拉取数据传入到指定 Sink 中。主要配置选项如下:

配置项 | 说明 |

channels | 自己配置的 Channel |

type | 必须为:org.apache.flume.source.kafka.KafkaSource |

kafka.bootstrap.servers | Kafka Broker 的服务器地址 |

kafka.consumer.group.id | 作为 Kafka 消费端的 Group ID |

kafka.topics | Kafka 中数据来源 Topic |

batchSize | 每次写入 Channel 的大小 |

batchDurationMillis | 每次写入最大间隔时间 |

示例:

tier1.sources.source1.type = org.apache.flume.source.kafka.KafkaSourcetier1.sources.source1.channels = channel1tier1.sources.source1.batchSize = 5000tier1.sources.source1.batchDurationMillis = 2000tier1.sources.source1.kafka.bootstrap.servers = localhost:9092tier1.sources.source1.kafka.topics = test1, test2tier1.sources.source1.kafka.consumer.group.id = custom.g.id

配置 Kafka 作为内容接收方,即将自己作为生产者,推到 Kafka Server 中等待后续操作。主要配置选项如下:

配置项 | 说明 |

channel | 自己配置的 Channel |

type | 必须为:org.apache.flume.sink.kafka.KafkaSink |

kafka.bootstrap.servers | Kafka Broker 的服务器 |

kafka.topics | Kafka 中数据来源 Topic |

kafka.flumeBatchSize | 每次写入的 Bacth 大小 |

kafka.producer.acks | Kafka 生产者的生产策略 |

示例:

a1.sinks.k1.channel = c1a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSinka1.sinks.k1.kafka.topic = mytopica1.sinks.k1.kafka.bootstrap.servers = localhost:9092a1.sinks.k1.kafka.flumeBatchSize = 20a1.sinks.k1.kafka.producer.acks = 1

Flume 接入 CKafka

步骤1:获取 CKafka 实例接入地址

1. 登录 CKafka 控制台。

2. 在左侧导航栏选择实例列表,单击实例的“ID”,进入实例基本信息页面。

3. 在实例的基本信息页面的接入方式模块,可获取实例的接入地址。

步骤2:创建 Topic

1. 在实例基本信息页面,选择顶部 Topic 管理页签。

2. 在 Topic 管理页面,单击新建,创建一个名为 flume_test 的 Topic。

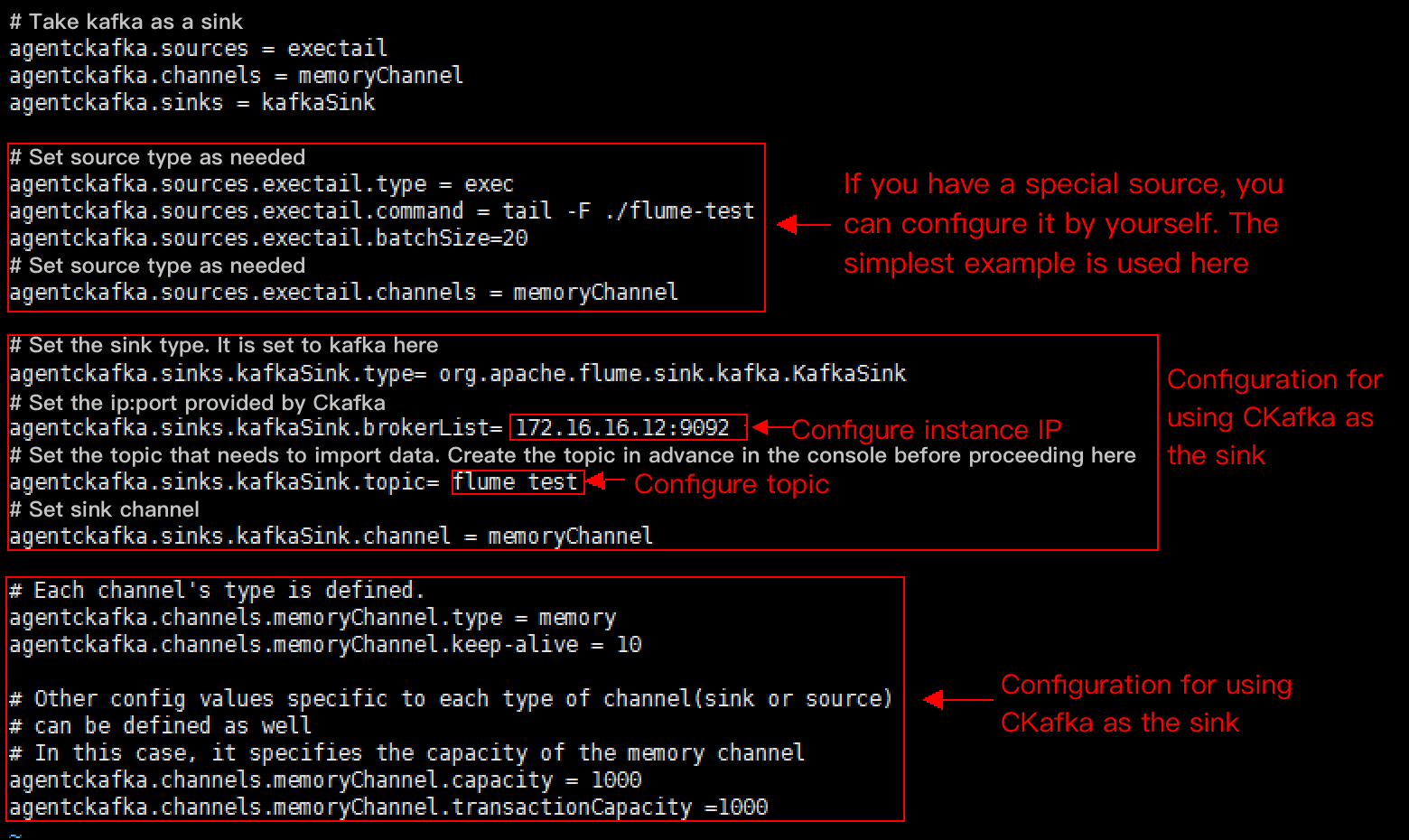

步骤3:配置 Flume

1. 下载 Apache Flume 工具包并解压。

2. 编写配置文件 flume-kafka-sink.properties,以下是一个简单的 Java 语言 Demo(配置在解压目录的 conf 文件夹下),若无特殊要求则将自己的实例 IP 与 Topic 替换到配置文件当中即可。本例使用的 source 为 tail -F flume-test ,即文件中新增的信息。

代码示例如下:

# 以kafka作为sink的demoagentckafka.source = exectailagentckafka.channels = memoryChannelagentckafka.sinks = kafkaSink# 设置source类型,根据不同需求而设置。若有特殊source可自行配置,此处使用最简单的例子agentckafka.sources.exectail.type = execagentckafka.sources.exetail.command = tail -F ./flume.testagentckafka.sources.exectail.batchSize = 20# 设置source channelagentckafka.sources.exectail.channels = memoryChannel# 设置sink类型,此处设置为kafkaagentckafka.sinks.kafkaSink.type = org.apache.flume.sink.kafka.KafkaSink# 此处设置ckafka提供的ip:portagentckafka.sinks.kafkaSink.brokerList = 172.16.16.12:9092 # 配置实例IP# 此处设置需要导入数据的topic,请先在控制台提前创建好topicagentckafka.sinks.kafkaSink.topic = flume test #配置topic# 设置sink channelagentckafka.sinks.kafkaSink.channel = memoryChannel# Channel使用默认配置# Each channel's type is defined.agentckafka.channels.memoryChannel.type = memoryagentckafka.channels.memoryChannel.keep-alive = 10# Other config values specific to each type of channel(sink or source) can be defined as well# In this case, it specifies the capacity of the memory channelagentckafka.channels.memoryChannel.capacity = 1000agentckafka.channels.memoryChannel.transactionCapacity = 1000

3. 执行如下命令启动 Flume。

./bin/flume-ng agent -n agentckafka -c conf -f conf/flume-kafka-sink.properties

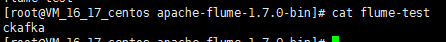

4. 写入消息到 flume-test 文件中,此时消息将由 Flume 写入到 CKafka。

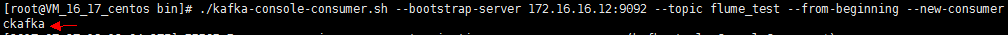

5. 启动 CKafka 客户端进行消费。

./kafka-console-consumer.sh --bootstrap-server xx.xx.xx.xx:xxxx --topic flume_test --from-beginning --new-consumer

说明:

bootstrap-server 填写刚创建的 CKafka 实例的接入地址,topic 填写刚创建的 Topic 名称。

可以看到刚才的消息被消费出来。

步骤1:获取 CKafka 实例接入地址

1. 登录 CKafka 控制台。

2. 在左侧导航栏选择实例列表,单击实例的“ID”,进入实例基本信息页面。

3. 在实例的基本信息页面的接入方式模块,可获取实例的接入地址。

步骤2:创建 Topic

1. 在实例基本信息页面,选择顶部 Topic 管理页签。

2. 在 Topic 管理页面,单击新建,创建一个名为 flume_test 的 Topic。

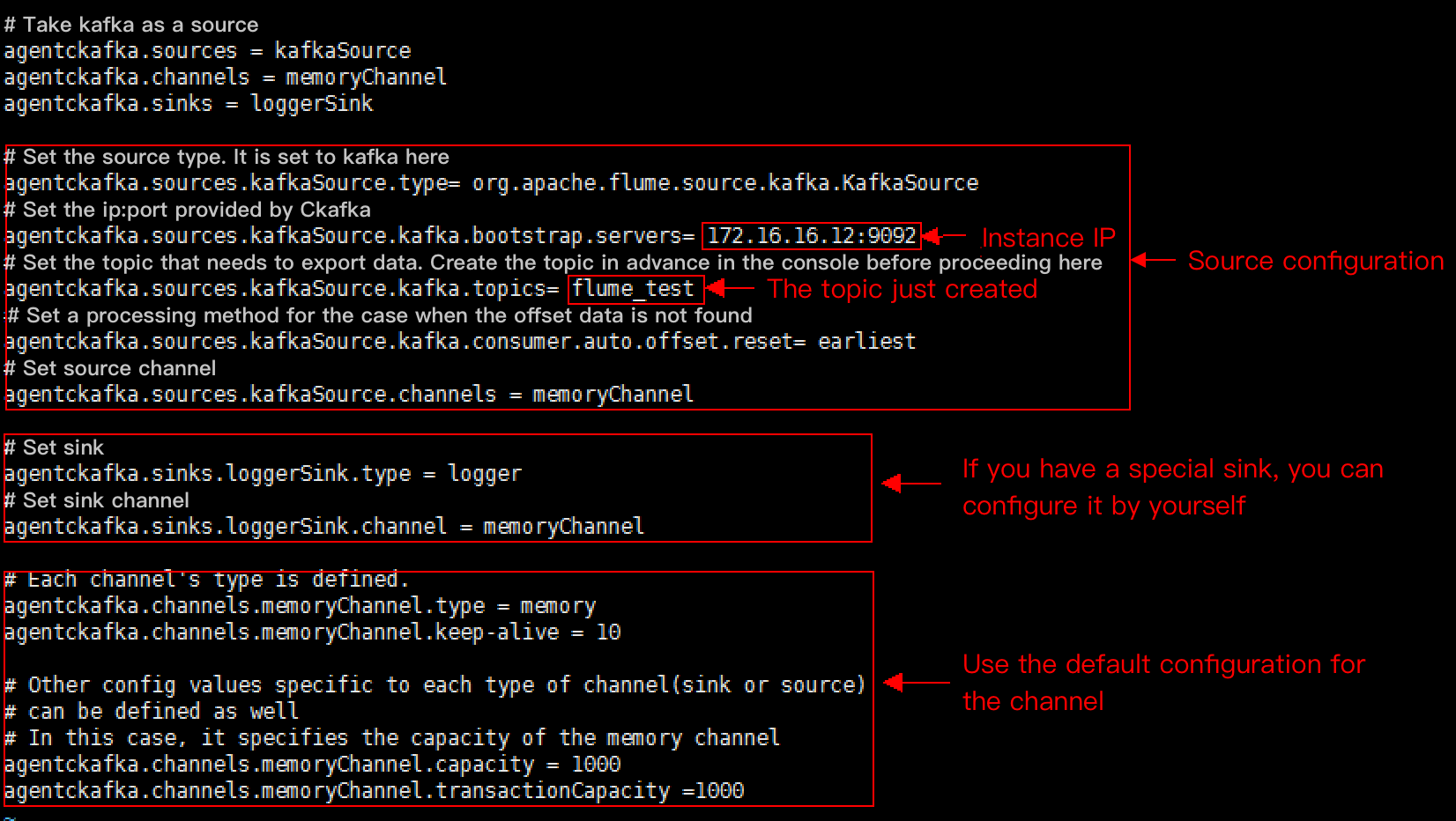

步骤3:配置 Flume

1. 下载 Apache Flume 工具包并解压 。

2. 编写配置文件 flume-kafka-source.properties,以下是一个简单的 Demo(配置在解压目录的 conf 文件夹下)。若无特殊要求则将自己的实例 IP 与 Topic 替换到配置文件当中即可。此处使用的 sink 为 logger。

3. 执行如下命令启动 Flume。

./bin/flume-ng agent -n agentckafka -c conf -f conf/flume-kafka-source.properties

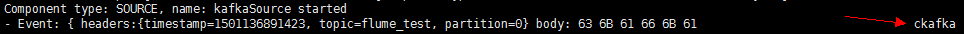

4. 查看 logger 输出信息(默认路径为

logs/flume.log)。

文档反馈