Directly connecting TKE to the CLB of pods based on the ENI

Last updated: 2023-05-24 10:17:46

Overview

Kubernetes provides two native resource types at the cluster access layer, Service and Ingress, which handle layer-4 and layer-7 network access configuration, respectively. The traditional solution is to create an Ingress or a LoadBalancer-type Service that binds a Tencent Cloud CLB and exposes the service to the public. In this mode, user traffic arrives at the NodePort of a node and is then forwarded to the container network by the KubeProxy component. This solution has some limitations in business performance and capabilities.

To address these limitations, the Tencent Cloud TKE team provides a new network mode for users of independent or managed clusters: TKE connects the CLB directly to pods based on the ENI. This mode provides enhanced performance and business capabilities. This document describes the differences between the two modes and how to use the direct connection mode.

Solution Comparison

| Comparison Item | Direct Connection | NodePort Forwarding | Local Forwarding |
|---|---|---|---|
| Performance | Zero loss | NAT forwarding and inter-node forwarding | Minor loss |
| Pod update | The access layer backend automatically synchronizes updates, so the update process is stable | The access layer backend NodePort remains unchanged | Services may be interrupted without update synchronization |
| Cluster dependency | Cluster version and VPC-CNI network requirements | - | - |
| Business capability restriction | Least restriction | Unable to obtain the source IP address or implement session persistence | Conditional session persistence |

Analysis of Problems with the Traditional Mode

Performance and features

In a cluster, KubeProxy forwards the traffic from user NodePort through NAT to the cluster network. This process has the following problems:

- NAT forwarding causes a certain loss in request performance.

  - The NAT operation itself costs performance.

  - The destination address chosen by NAT forwarding may cause the traffic to be forwarded across nodes in the container network.

- NAT forwarding changes the source IP address of the request, so the backend service cannot obtain the client's source IP address.

- When the CLB traffic is concentrated on several NodePorts, the over-concentrated traffic causes excessive SNAT forwarding by those NodePorts, which exhausts the traffic capacity of the ports. This problem may also lead to conntrack insertion conflicts, resulting in packet loss and performance deterioration.

- Forwarding by KubeProxy is random and does not support session persistence.

- Each NodePort of KubeProxy has independent load-balancing capabilities. As such capabilities cannot be concentrated in one place, global load balancing is difficult to achieve.
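To make the cross-node forwarding point concrete, the following Python sketch estimates how often a request that enters through a NodePort is forwarded to a pod on a different node. It assumes that KubeProxy picks every backend pod with equal probability, which is a simplification, and the node names and pod counts are hypothetical.

```python
from typing import Dict


def cross_node_probability(pods_per_node: Dict[str, int], entry_node: str) -> float:
    """Probability that a request entering at entry_node is forwarded to another node.

    Assumption (not from the original text): every backend pod is chosen
    with equal probability, regardless of where the request entered.
    """
    total_pods = sum(pods_per_node.values())
    if total_pods == 0:
        raise ValueError("the service has no backend pods")
    local_pods = pods_per_node.get(entry_node, 0)
    return 1 - local_pods / total_pods


if __name__ == "__main__":
    # Hypothetical layout: 3 pods spread over 3 of the 4 nodes in the cluster.
    layout = {"node-a": 1, "node-b": 1, "node-c": 1, "node-d": 0}
    for node in layout:
        print(node, round(cross_node_probability(layout, node), 3))
```

Under this model, a request that enters at a node without a local pod always takes an extra hop, and the share of extra hops grows as pods are spread over more nodes.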

To address the preceding problems, the technical suggestion previously provided to users was to adopt local forwarding to avoid the problems caused by KubeProxy NAT forwarding. However, due to the randomness of forwarding, session persistence remains unsupported when multiple replicas are deployed on a node. Moreover, when local forwarding coincides with rolling updates, services can be easily interrupted. This places higher requirements on the rolling update policies and downtime of businesses.

Service availability

When a service is accessed through NodePorts, the design of NodePorts is highly fault-tolerant. The CLB binds the NodePorts of all nodes in the cluster as its backends. Whichever node receives the traffic, the traffic is randomly allocated to the workloads of the cluster. Therefore, the unavailability of individual NodePorts or pods does not affect access to the service.

As with local access, when the backend of the CLB is directly connected to user pods, a rapid rolling update can leave the service entry with too few CLB backends, or none at all, if the CLB cannot be promptly bound to the new pods. Therefore, a rolling update is secure and stable only if the CLB backends at the access layer are healthy while the update proceeds.

CLB control plane performance

The control plane APIs of the CLB include APIs for creating, deleting, and modifying layer-4 and layer-7 listeners, creating and deleting layer-7 rules, and binding each listener or the rule backend. Most of these APIs are asynchronous APIs, which require the polling of request results, and API calls are time-consuming. When the scale of the user cluster is large, the synchronization of a large amount of access layer resources can impose high latency pressure on components.
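The cost of asynchronous APIs comes from waiting for each request to finish. The following Python sketch shows the polling pattern in general terms. The `query_status` callable and the status strings are hypothetical stand-ins for a "describe task status" request, not the real CLB API.

```python
import time
from typing import Callable


def wait_for_task(query_status: Callable[[], str],
                  interval_s: float = 1.0,
                  timeout_s: float = 60.0,
                  sleep: Callable[[float], None] = time.sleep) -> int:
    """Poll an asynchronous task until it reports SUCCESS; return the number of polls.

    query_status is a hypothetical callable standing in for a status query;
    it is not the real CLB API.
    """
    polls = 0
    waited = 0.0
    while True:
        polls += 1
        status = query_status()
        if status == "SUCCESS":
            return polls
        if status == "FAILED":
            raise RuntimeError("asynchronous task failed")
        if waited + interval_s > timeout_s:
            raise TimeoutError("task did not finish within the timeout")
        sleep(interval_s)
        waited += interval_s


if __name__ == "__main__":
    # Fake task that finishes on the third status query.
    answers = iter(["RUNNING", "RUNNING", "SUCCESS"])
    print(wait_for_task(lambda: next(answers), sleep=lambda s: None))
```

Every listener, rule, or backend binding change pays this waiting cost, so the number of separate requests largely determines the total synchronization time.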

Comparison of the New and Old Modes

Performance comparison

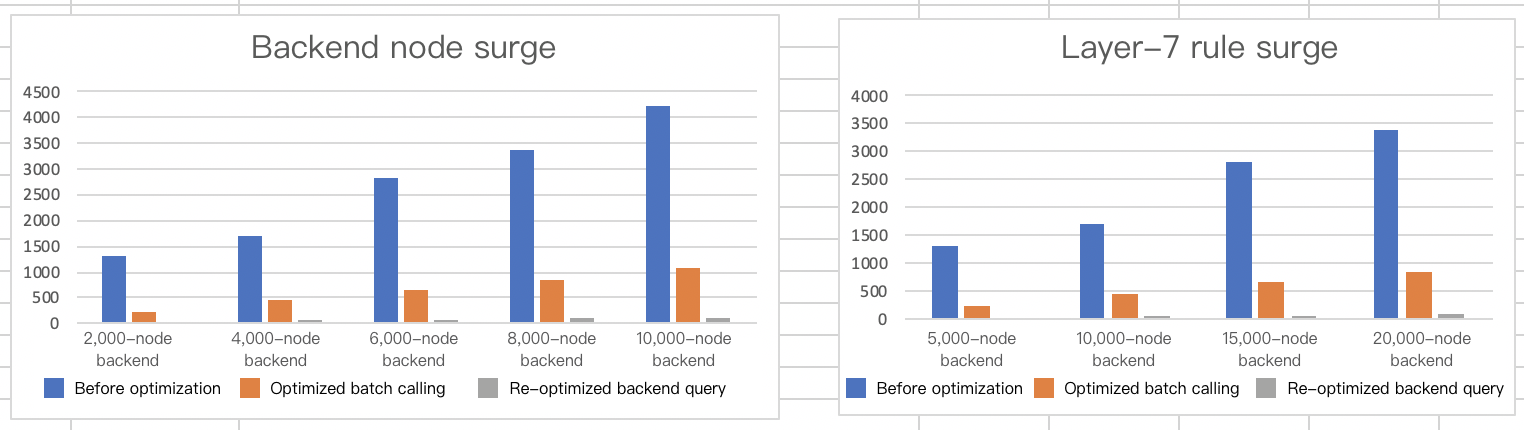

TKE has launched the direct pod connection mode, which optimizes the control plane of the CLB. In the overall synchronization process, this new mode mainly optimizes batch calls and backend instance queries where remote calls are relatively frequent. After the optimization, the performance of the control plane in a typical ingress scenario is improved by 95% to 97% compared with the previous version. At present, the synchronization time is mainly the waiting time of asynchronous APIs.

Backend node data surge

For cluster scaling, the relevant data is as follows:

| Layer-7 Rule Quantity | Cluster Node Quantity | Cluster Node Quantity (Update) | Performance Before Optimization (s) | Optimized Batch Calling Performance (s) | Re-optimized Backend Instance Query Performance (s) | Time Consumption Reduction (%) |
|---|---|---|---|---|---|---|
| 200 | 1 | 10 | 1313.056 | 227.908 | 31.548 | 97.597% |
| 200 | 1 | 20 | 1715.053 | 449.795 | 51.248 | 97.011% |
| 200 | 1 | 30 | 2826.913 | 665.619 | 69.118 | 97.555% |
| 200 | 1 | 40 | 3373.148 | 861.583 | 90.723 | 97.310% |
| 200 | 1 | 50 | 4240.311 | 1085.03 | 106.353 | 97.491% |
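The last column of the table can be reproduced from the first and last timing columns as (1 - after / before) × 100. The short Python sketch below does this for the rows above. The function name is illustrative only.

```python
def reduction_percent(before_s: float, after_s: float) -> float:
    """Percentage of time saved when a sync drops from before_s to after_s seconds."""
    return (1 - after_s / before_s) * 100


# (updated node count, time before optimization, time after both optimizations),
# copied from the table above.
ROWS = [
    (10, 1313.056, 31.548),
    (20, 1715.053, 51.248),
    (30, 2826.913, 69.118),
    (40, 3373.148, 90.723),
    (50, 4240.311, 106.353),
]

if __name__ == "__main__":
    for nodes, before_s, after_s in ROWS:
        print(f"{nodes} nodes: {reduction_percent(before_s, after_s):.3f}% less time")
```

The computed values agree with the published column to within rounding in the third decimal place.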

Layer-7 rule data surge

For first-time activation and deployment of services in the cluster, the relevant data is as follows:

| Layer-7 Rule Quantity | Layer-7 Rule Quantity (Update) | Cluster Node Quantity | Performance Before Optimization (s) | Optimized Batch Calling Performance (s) | Re-optimized Backend Instance Query Performance (s) | Time Consumption Reduction (%) |
|---|---|---|---|---|---|---|
| 1 | 100 | 50 | 1631.787 | 451.644 | 68.63 | 95.79% |
| 1 | 200 | 50 | 3399.833 | 693.207 | 141.004 | 95.85% |
| 1 | 300 | 50 | 5630.398 | 847.796 | 236.91 | 95.79% |
| 1 | 400 | 50 | 7562.615 | 1028.75 | 335.674 | 95.56% |

The following figure shows the comparison:

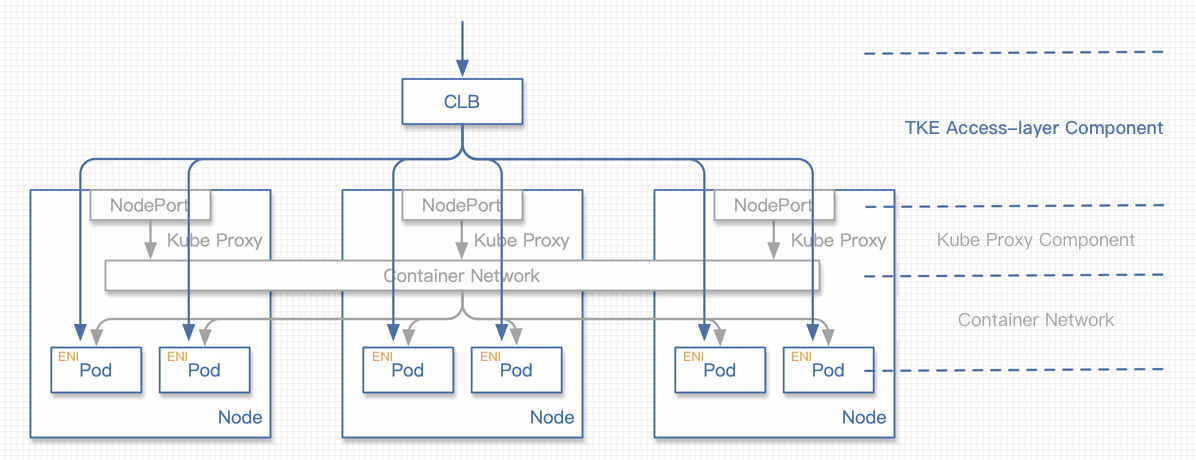

In addition to control plane performance optimization, the CLB can directly access the pods of the container network, which is an integral part of the component's business capabilities. This not only avoids the performance loss of NAT forwarding, but also eliminates the impact of NAT forwarding on the business features in the cluster. However, when the project was launched, optimal support for direct access to the container network was not yet available.

The new mode integrates the feature that allows pods to have an ENI entry under the cluster CNI network mode in order to implement direct access to the CLB. CCN solutions are already available for implementing direct CLB backend access to the container network.

In addition to the capability of direct access, availability during rolling updates must be ensured. To implement this, we use the official Kubernetes feature ReadinessGate, which was released in version 1.12 and is mainly used to control the conditions of pods.

By default, a pod has three possible conditions: PodScheduled, Initialized, and ContainersReady. When all of these conditions are true, the Ready condition of the pod also becomes true. However, in cloud-native scenarios, the status of pods needs to be determined in combination with other factors. ReadinessGate allows us to add extra gates to this determination, so that a third party can control the pod status and the pod status can be associated with that third party.
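The effect of a readiness gate on the Ready condition can be sketched in a few lines of Python. This is a simplified model of the rule, not Kubernetes source code, and the gate name `example.com/clb-backend-ready` is a hypothetical placeholder, because the condition type used by TKE is not given in this document.

```python
from typing import Dict, List


def pod_is_ready(conditions: Dict[str, bool], readiness_gates: List[str]) -> bool:
    """Return True only if the containers are ready and every readiness gate is True.

    A gate whose condition has not been reported yet counts as False,
    so the pod stays not-Ready until the third party sets it.
    """
    containers_ready = conditions.get("ContainersReady", False)
    gates_ok = all(conditions.get(gate, False) for gate in readiness_gates)
    return containers_ready and gates_ok


if __name__ == "__main__":
    # Hypothetical condition type, used for illustration only.
    gate = "example.com/clb-backend-ready"
    print(pod_is_ready({"ContainersReady": True}, [gate]))
    print(pod_is_ready({"ContainersReady": True, gate: True}, [gate]))
```

With no gates declared, the function falls back to the default behavior, where ready containers are enough for the pod to be Ready.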

CLB traffic comparison

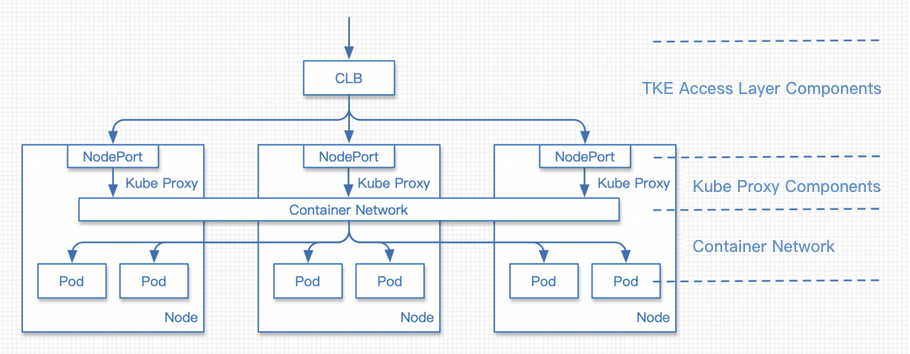

Traditional NodePort mode

The request process is as follows:

- The request traffic reaches the CLB.

- The request is forwarded by the CLB to the NodePort of a certain node.

- KubeProxy performs NAT forwarding for the traffic from the NodePort, with the destination address being the IP address of a random pod.

- The request reaches the container network and is then forwarded to the corresponding node based on the pod address.

- The request reaches the node to which the destination pod belongs and is then forwarded to the pod.

New direct pod connection mode

The request process is as follows:

- The request traffic reaches the CLB.

- The request is forwarded by the CLB to the ENI of a certain pod.

Differences between direct connection and local access

- There is little difference in terms of performance. When local access is enabled, traffic is not subject to NAT operations or cross-node forwarding, and only another route to the container network is added.

- With local access, the source IP address can be obtained correctly because no NAT operation is performed. However, session persistence may not work as expected: when multiple pods exist on a node, traffic is randomly allocated to different pods.

Introduction of ReadinessGate

Issues related to rolling updates

To introduce ReadinessGate, the cluster version must be 1.12 or later.

When users start the rolling update of an app, Kubernetes performs the rolling update according to the update policy. However, to determine whether a batch of pods has started, Kubernetes considers only the statuses of the pods. It does not consider whether a health check is configured for the pods in the CLB or whether the pods have passed that check. If the access layer components are under heavy load and cannot bind such pods in time, the pods that have successfully completed the rolling update may not be providing services to external users, resulting in service interruption.

In order to associate the backend status of the CLB with the rolling update, the ReadinessGate feature introduced in Kubernetes 1.12 was adopted in the TKE access-layer components. With this feature, the TKE access-layer components set the ReadinessGate condition only after they confirm that the backend binding is successful and the health check is passed. Only then can the pods enter the Ready state, which allows the rolling update of the entire workload to proceed.

Using ReadinessGate in a cluster

Kubernetes clusters provide a service registration mechanism. With this mechanism, you only need to register your services to a cluster as MutatingWebhookConfigurations resources. When a pod is created, the cluster will deliver notifications to the configured callback path. At this time, the pre-creation operation can be performed for the pod, that is, ReadinessGate can be added to the pod.

Note: This callback process must be based on HTTPS. That is, the CA that issues requests must be configured in MutatingWebhookConfigurations, and a certificate issued by the CA must be configured on the server.
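As an illustration of what such a callback can return, the Python sketch below builds a mutating admission response that adds one readiness gate to a pod through a JSON Patch. It assumes the `admission.k8s.io/v1` AdmissionReview format (older clusters use `v1beta1`). The function name and the condition type in the example are hypothetical, and the actual TKE component may work differently.

```python
import base64
import json
from typing import Any, Dict


def add_readiness_gate(review: Dict[str, Any], condition_type: str) -> Dict[str, Any]:
    """Build an AdmissionReview response that appends one readiness gate to the pod."""
    request = review["request"]
    pod_spec = request["object"].get("spec", {})
    gate = {"conditionType": condition_type}
    if "readinessGates" in pod_spec:
        # The list already exists: append to it.
        patch = [{"op": "add", "path": "/spec/readinessGates/-", "value": gate}]
    else:
        # The list is missing: create it with the new gate.
        patch = [{"op": "add", "path": "/spec/readinessGates", "value": [gate]}]
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": {
            "uid": request["uid"],  # the response must echo the request uid
            "allowed": True,
            "patchType": "JSONPatch",
            "patch": base64.b64encode(json.dumps(patch).encode()).decode(),
        },
    }


if __name__ == "__main__":
    # Minimal hypothetical request, as an API server might send for a new pod.
    incoming = {"request": {"uid": "1234", "object": {"spec": {"containers": []}}}}
    reply = add_readiness_gate(incoming, "example.com/clb-backend-ready")
    print(json.dumps(reply, indent=2))
```

The HTTPS server that receives the request and returns this response is omitted here. It would need the certificate described in the note above.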

Disaster recovery of the ReadinessGate mechanism

Service registrations and certificates in user clusters are system component resources that users should not modify or destroy. However, users may still delete them while exploring their clusters or through improper operations, so such problems will inevitably occur.

The access layer components will check the integrity of the resources above during launch. If their integrity is compromised, the components will rebuild these resources to enhance the robustness of the system.

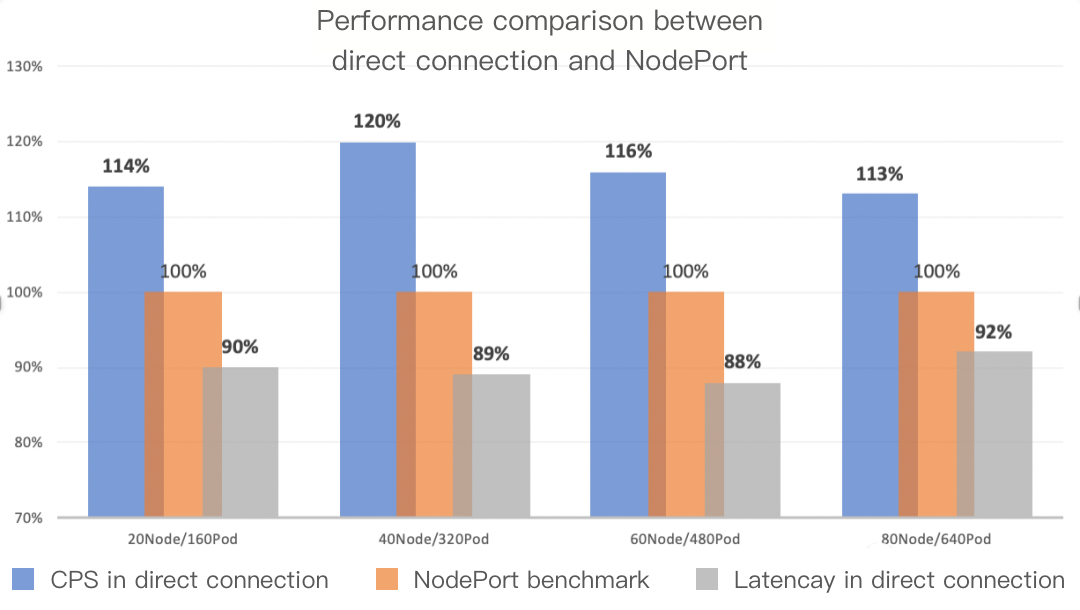

QPS and network latency comparison

Direct connection and NodePorts are the access layer solutions for service applications. In fact, the workloads deployed by users are the ultimate workers, and therefore the capabilities of user workloads directly determine the QPS and other metrics of services.

For these two access-layer solutions, we performed some comparative tests on network link latency under low workload pressure. The latency of direct connection on the network link of the access layer can be reduced by 10%, and traffic in the VPC network was greatly reduced. During the tests, the cluster size was gradually increased from 20 nodes to 80 nodes, and the wrk tool was used to test the network latency of the cluster. The comparison of QPS and network latency between direct connection and NodePorts is shown in the following figure:

KubeProxy design ideas

KubeProxy has some disadvantages, and the features of the CLB and VPC network allow us to provide an access layer solution that is better adapted to our own environment. Nevertheless, KubeProxy offers a universal and fault-tolerant design for the cluster access layer that is applicable to clusters in almost all business scenarios. For an official component, this design is very appropriate.

New Mode Usage Guide

Prerequisites

- The Kubernetes version of the cluster is 1.12 or later.

- The VPC-CNI ENI mode is enabled for the cluster network mode.

- The workloads used by a service in direct connection mode adopt the VPC-CNI ENI mode.
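The three prerequisites can be expressed as a small check. The Python sketch below is illustrative only: the function names are hypothetical, the cluster network mode is passed in as a flag because this document does not describe how to query it, and the annotation is the one shown in the workload example of this document.

```python
import re
from typing import Dict, Tuple

ENI_ANNOTATION = "tke.cloud.tencent.com/networks"  # annotation key used in this document
ENI_VALUE = "tke-route-eni"


def version_at_least(version: str, minimum: Tuple[int, int] = (1, 12)) -> bool:
    """Compare a Kubernetes version string such as "v1.18.4" with (major, minor)."""
    match = re.match(r"v?(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version: {version!r}")
    return (int(match.group(1)), int(match.group(2))) >= minimum


def can_use_direct_connection(k8s_version: str,
                              cluster_has_vpc_cni_eni: bool,
                              pod_annotations: Dict[str, str]) -> bool:
    """Check the three prerequisites listed above for one workload."""
    workload_uses_eni = pod_annotations.get(ENI_ANNOTATION) == ENI_VALUE
    return version_at_least(k8s_version) and cluster_has_vpc_cni_eni and workload_uses_eni


if __name__ == "__main__":
    annotations = {ENI_ANNOTATION: ENI_VALUE}
    print(can_use_direct_connection("v1.18.4", True, annotations))
    print(can_use_direct_connection("1.9.3", True, annotations))
```

Versions are compared as integer pairs rather than strings, because a plain string comparison would rank 1.9 above 1.12.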

Console operation instructions

- Log in to the TKE console.

- Refer to the steps of creating a service in the console and go to the "Create a Service" page to configure the service parameters as required.

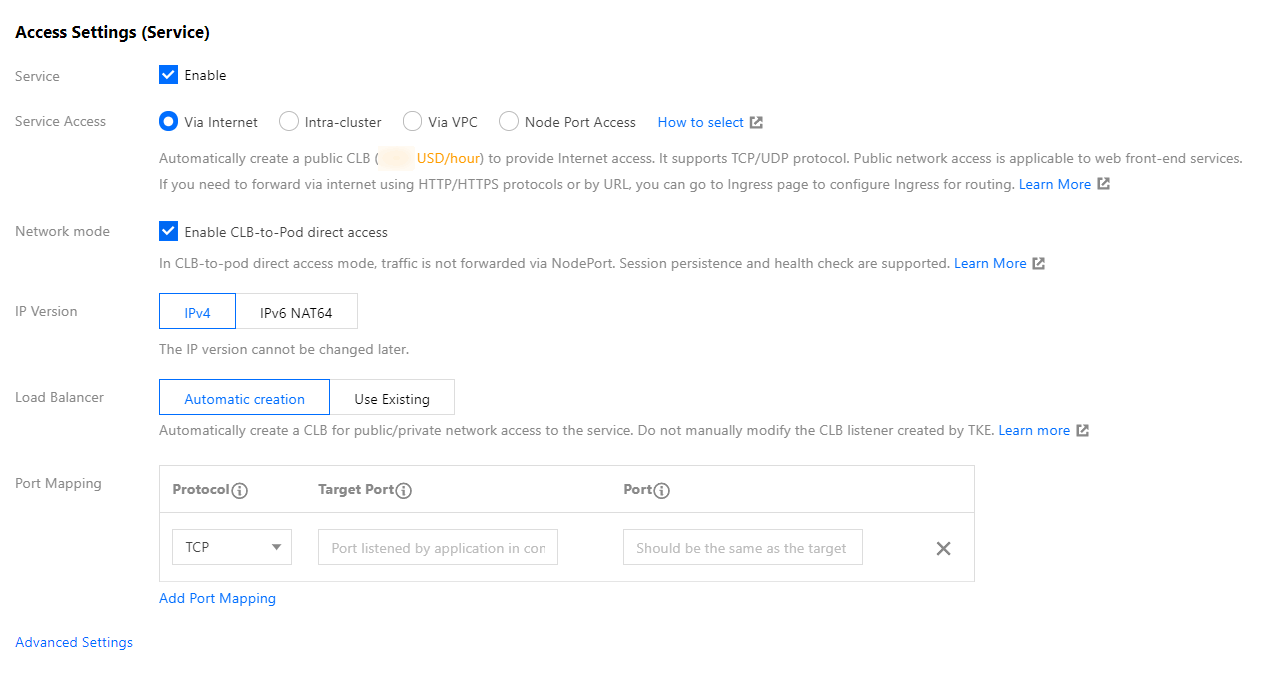

Configure the main parameters, as shown in the following figure:

- Service Access Mode: select Provide Public Network Access or VPC Access.

- Network Mode: select Direct CLB-Pod Connection Mode.

- Workload Binding: select Import Workload. In the window that appears, select the backend workload in VPC-CNI mode.

- Click Create Service to complete the creation process.

Kubectl operation instructions

Workload example: nginx-deployment-eni.yaml

Note: spec.template.metadata.annotations declares tke.cloud.tencent.com/networks: tke-route-eni, meaning that the workload uses the VPC-CNI ENI mode.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx-deployment-eni
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      annotations:
        tke.cloud.tencent.com/networks: tke-route-eni
      labels:
        app: nginx
    spec:
      containers:
        - image: nginx:1.7.9
          name: nginx
          ports:
            - containerPort: 80
              protocol: TCP
```

Service example: nginx-service-eni.yaml

Note: metadata.annotations declares service.cloud.tencent.com/direct-access: "true", meaning that, when synchronizing the CLB, the service configures the access backend by using the direct connection method.

```yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    service.cloud.tencent.com/direct-access: "true"
  labels:
    app: nginx
  name: nginx-service-eni
spec:
  externalTrafficPolicy: Cluster
  ports:
    - name: 80-80-no
      port: 80
      protocol: TCP
      targetPort: 80
  selector:
    app: nginx
  sessionAffinity: None
  type: LoadBalancer
```

Deploying to a Cluster

Note: In the deployment environment, you must first connect to a cluster (if you do not have a cluster, create one). You can refer to the Help Document to configure kubectl to connect to a cluster.

```
➜ ~ kubectl apply -f nginx-deployment-eni.yaml
deployment.apps/nginx-deployment-eni created

➜ ~ kubectl apply -f nginx-service-eni.yaml
service/nginx-service-eni configured

➜ ~ kubectl get pod -o wide
NAME                                   READY   STATUS    RESTARTS   AGE   IP               NODE          NOMINATED NODE   READINESS GATES
nginx-deployment-eni-bb7544db8-6ljkm   1/1     Running   0          24s   172.17.160.191   172.17.0.3    <none>           1/1
nginx-deployment-eni-bb7544db8-xqqtv   1/1     Running   0          24s   172.17.160.190   172.17.0.46   <none>           1/1
nginx-deployment-eni-bb7544db8-zk2cx   1/1     Running   0          24s   172.17.160.189   172.17.0.9    <none>           1/1

➜ ~ kubectl get service -o wide
NAME                TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)        AGE    SELECTOR
kubernetes          ClusterIP      10.187.252.1    <none>           443/TCP        6d4h   <none>
nginx-service-eni   LoadBalancer   10.187.254.62   150.158.221.31   80:32693/TCP   6d1h   app=nginx
```
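In the `kubectl get pod -o wide` output, the READINESS GATES column shows how many of a pod's readiness gates have passed. The following Python sketch reads that column from captured output. The function name is hypothetical, and the parsing assumes that the readiness gates value is the last whitespace-separated field of each row, as in the output above.

```python
from typing import Dict


def readiness_gates_passed(kubectl_output: str) -> Dict[str, bool]:
    """Map each pod name to True when all of its readiness gates have passed."""
    result: Dict[str, bool] = {}
    lines = [line for line in kubectl_output.splitlines() if line.strip()]
    for line in lines[1:]:  # skip the header row
        fields = line.split()
        name, gates = fields[0], fields[-1]
        if "/" not in gates:
            # "<none>" means that no readiness gate is declared on the pod.
            result[name] = False
            continue
        passed, total = gates.split("/")
        result[name] = passed == total
    return result


SAMPLE = """\
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-deployment-eni-bb7544db8-6ljkm 1/1 Running 0 24s 172.17.160.191 172.17.0.3 <none> 1/1
nginx-deployment-eni-bb7544db8-xqqtv 1/1 Running 0 24s 172.17.160.190 172.17.0.46 <none> 1/1
"""

if __name__ == "__main__":
    for pod, ok in readiness_gates_passed(SAMPLE).items():
        print(pod, "gates passed" if ok else "gates pending or missing")
```

A pod that reports fewer passed gates than declared, or no gate at all, is worth checking before relying on the rolling update protection described earlier.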

Summary

Currently, TKE uses ENI to implement the direct pod connection mode. We will further optimize this feature, including in the following respects:

- Implement direct pod connection under a common container network, without dependency on the VPC-CNI network mode.

- Support the removal of the CLB backend before pod deletion.

Comparison with similar solutions in the industry:

- AWS has a similar solution that implements direct pod connection through ENI.

- Google Kubernetes Engine (GKE) has a similar solution that integrates the Network Endpoint Groups (NEG) feature of Google Cloud Load Balancing (CLB) to implement direct connection to pods at the access layer.

References

- Service

- Ingress

- Strategy

- Pod readiness

- Preserving the client source IP

- How to Choose TKE Network Mode

- GlobalRouter VPC-CNI Mode Description

- Connecting to a Cluster

- Kubernetes Ingress with AWS ALB Ingress Controller

- GKE Container-native Load Balancing Through Standalone Zonal NEGs
