Guide on Migrating Resources in a TKE Managed Cluster to a TKE Serverless Cluster

Last updated: 2022-12-13 18:23:37

Prerequisites

- A TKE managed cluster on v1.18 or later (cluster A) exists.

- A migration target TKE Serverless cluster on v1.20 or later (cluster B) is created. For how to create a TKE Serverless cluster, see Connecting to a Cluster.

- Both cluster A and cluster B need to share the same COS bucket as Velero backend storage. For how to configure a COS bucket, see Configuring COS.

- We recommend that clusters A and B be in the same VPC, so that you can back up data in the PVC.

- Make sure that image resources can be pulled properly after migration. For how to configure an image repository in a TKE Serverless cluster, see Image Repository FAQs.

- Make sure that the Kubernetes versions of both clusters are compatible. We recommend you use the same version. If cluster A is on a lower version, upgrade it before migration.

Limitations

- After workloads with a fixed IP are enabled in a TKE cluster, their IPs will change after the migration to a TKE Serverless cluster. You can specify an IP to create a Pod in the Pod template, for example `eks.tke.cloud.tencent.com/pod-ip: "xx.xx.xx.xx"`.

- TKE Serverless clusters with containerd as the container runtime are not compatible with images from Docker Registry v2.5 or earlier, or Harbor v1.10 or earlier.

- In a TKE Serverless cluster, each Pod comes with 20 GiB temporary disk space for image storage by default, which is created and terminated along the lifecycle of the Pod. If you need larger disk space, mount other types of volumes, such as PVC volumes, for data storage.

- When deploying DaemonSet workloads on a TKE Serverless cluster, you need to deploy them on business pods in sidecar mode.

- When deploying NodePort services on a TKE Serverless cluster, you cannot access the services through `NodeIP:Port`. Instead, you need to use `ClusterIP:Port` to access the services.

- Pods deployed on a TKE Serverless cluster expose monitoring data via port 9100 by default. If your business Pod needs to listen on port 9100, you can avoid conflicts by using another port to collect monitoring data when creating the Pod. For example, you can configure `eks.tke.cloud.tencent.com/metrics-port: "9110"`.

- In addition to the preceding limitations, other points for attention of TKE Serverless clusters are described here.
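As a rough illustration of where the two annotations named in the limitations above could go, the following sketch prints a Deployment manifest with both set on the Pod template. The function name `render_deployment`, the workload name `demo-app`, and the `nginx:latest` image are placeholders, and placing the annotations under `spec.template.metadata.annotations` follows the general Kubernetes convention rather than anything stated in this document. Whether a fixed IP needs further settings is not covered here.

```bash
#!/usr/bin/env bash
# Print a Deployment manifest whose Pod template carries the two annotations
# mentioned in the limitations. Names and image are illustrative placeholders.
render_deployment() {
  local pod_ip="$1"        # IP to request for the Pod
  local metrics_port="$2"  # port used for monitoring data instead of 9100
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: demo-app
  template:
    metadata:
      labels:
        app: demo-app
      annotations:
        eks.tke.cloud.tencent.com/pod-ip: "${pod_ip}"
        eks.tke.cloud.tencent.com/metrics-port: "${metrics_port}"
    spec:
      containers:
        - name: app
          image: nginx:latest
EOF
}
```

For example, `render_deployment 10.0.0.8 9110 | kubectl apply -f -` would submit the rendered manifest to the cluster selected by the current kubeconfig.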

Migration Directions

The following describes how to migrate resources from TKE cluster A to TKE Serverless cluster B.

Configuring COS

For operation details, see Creating a bucket.

Downloading Velero

Download the latest version of Velero to the cluster environment. Velero v1.8.1 is used as an example in this document.

    wget https://github.com/vmware-tanzu/velero/releases/download/v1.8.1/velero-v1.8.1-linux-amd64.tar.gz

Run the following command to decompress the installation package, which contains the Velero command-line tool and some sample files.

    tar -xvf velero-v1.8.1-linux-amd64.tar.gz

Run the following command to copy the Velero executable file from the decompressed directory to a directory on the system path, that is, `/usr/bin` in this document:

    cp velero-v1.8.1-linux-amd64/velero /usr/bin/
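The three download steps can be wrapped in a small helper so that the version is stated only once. This is a minimal sketch: the function names and the optional architecture argument are assumptions introduced here, while the URL pattern and the `/usr/bin` target come from the commands in this section.

```bash
#!/usr/bin/env bash
# Build the release tarball name for a Velero version and CPU architecture.
velero_tarball() {
  local version="$1" arch="${2:-amd64}"
  printf 'velero-%s-linux-%s.tar.gz\n' "$version" "$arch"
}

# Build the GitHub release download URL, following the URL pattern used in this section.
velero_download_url() {
  local version="$1" arch="${2:-amd64}"
  printf 'https://github.com/vmware-tanzu/velero/releases/download/%s/%s\n' \
    "$version" "$(velero_tarball "$version" "$arch")"
}

# Download, unpack, and copy the binary, then print the client version
# to confirm that the executable is on the path.
install_velero() {
  local version="$1" arch="${2:-amd64}" tarball
  tarball="$(velero_tarball "$version" "$arch")"
  wget "$(velero_download_url "$version" "$arch")" &&
    tar -xvf "$tarball" &&
    cp "${tarball%.tar.gz}/velero" /usr/bin/ &&
    velero version --client-only
}
```

Calling `install_velero v1.8.1` reproduces the steps of this section for the version used in this document.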

Installing Velero in clusters A and B

Configure the Velero client and enable CSI.

    velero client config set features=EnableCSI

Run the following command to install Velero in clusters A and B and create Velero workloads as well as other necessary resource objects.

- Below is an example of using CSI for PVC backup:

      velero install --provider aws \
        --plugins velero/velero-plugin-for-aws:v1.1.0,velero/velero-plugin-for-csi:v0.2.0 \
        --features=EnableCSI \
        --features=EnableAPIGroupVersions \
        --bucket <BucketName> \
        --secret-file ./credentials-velero \
        --use-volume-snapshots=false \
        --backup-location-config region=ap-guangzhou,s3ForcePathStyle="true",s3Url=https://cos.ap-guangzhou.myqcloud.com

Note: TKE Serverless clusters do not support DaemonSet deployment, so none of the samples in this document support the restic add-on.

- If you don't need to back up the PVC, see the following installation sample:

./velero install --provider aws --use-volume-snapshots=false --bucket gtest-1251707795 --plugins velero/velero-plugin-for-aws:v1.1.0 --secret-file ./credentials-velero --backup-location-config region=ap-guangzhou,s3ForcePathStyle="true",s3Url=https://cos.ap-guangzhou.myqcloud.com
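Both install samples pass `--secret-file ./credentials-velero`, but this document does not show that file. The sketch below writes it in the AWS shared-credentials format that `velero-plugin-for-aws` reads. It assumes the COS SecretId and SecretKey are used as the access key ID and secret access key; the function name and the sample values are placeholders.

```bash
#!/usr/bin/env bash
# Write an AWS-style credentials file for velero-plugin-for-aws.
# Assumption: the COS SecretId/SecretKey pair fills the two AWS key fields.
write_velero_credentials() {
  local key_id="$1" secret_key="$2" path="${3:-./credentials-velero}"
  (
    umask 077  # keep the file readable by the current user only
    printf '[default]\naws_access_key_id=%s\naws_secret_access_key=%s\n' \
      "$key_id" "$secret_key" > "$path"
  )
}
```

For example, `write_velero_credentials "<SecretId>" "<SecretKey>"` creates `./credentials-velero` in the current directory, ready to be passed to `velero install`.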

For installation parameters, see Using COS as Velero Storage to Implement Backup and Restoration of Cluster Resources or run the `velero install --help` command.

Other installation parameters are as described below:

| Parameter | Configuration |
|---|---|
| `--plugins` | Use the AWS S3 API-compatible add-on `velero-plugin-for-aws`, and use the CSI add-on `velero-plugin-for-csi` to back up `csi-pv`. We recommend you enable it. |
| `--features` | Enable optional features. `EnableAPIGroupVersions` enables the API group version feature, which is used for compatibility with different API group versions; we recommend you enable it. `EnableCSI` enables the CSI snapshot feature, which is used to back up CSI-supported PVCs; we recommend you enable it. |
| `--use-restic` | Velero supports the restic open-source tool to back up and restore Kubernetes storage volume data (hostPath volumes are not supported. For details, see here). It supplements the Velero backup feature. During the migration to a TKE Serverless cluster, enabling this parameter will cause the backup to fail. |
| `--use-volume-snapshots=false` | Disable the default snapshot backup of storage volumes. |

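After the installation, run the following command to check whether the backup storage location is available:

    velero backup-location get
    NAME      PROVIDER   BUCKET/PREFIX   PHASE       LAST VALIDATED                  ACCESS MODE   DEFAULT
    default   aws        <BucketName>    Available   2022-03-24 21:00:05 +0800 CST   ReadWrite     true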
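When this check is scripted, the `PHASE` column of the output above is the value to look at. The following sketch reads the command output on standard input and succeeds only if the named location reports `Available`. The function name is hypothetical, and the column position is taken from the sample output in this document.

```bash
#!/usr/bin/env bash
# Read `velero backup-location get` output on stdin and succeed only if the
# named backup storage location exists and its PHASE (4th column) is "Available".
bsl_available() {
  local name="${1:-default}"
  awk -v name="$name" '
    NR > 1 && $1 == name { found = 1; ok = ($4 == "Available") }
    END { exit (found && ok) ? 0 : 1 }
  '
}
```

For example, `velero backup-location get | bsl_available default && echo "storage location ready"` prints the message only when the `default` location is available.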

At this point, you have completed the Velero installation. For more information, see Velero Documentation.

(Optional) Installing VolumeSnapshotClass in clusters A and B

Note:

- Skip this step if you don't need to back up the PVC.

- For more information on storage snapshot, see Backing up and Restoring PVC via CBS-CSI Add-on.

Check that you have installed the CBS-CSI add-on.

Make sure you have granted the CBS snapshot permissions to `TKE_QCSRole` on the Access Management page of the console. For details, see CBS-CSI.

Use the following YAML to create a VolumeSnapshotClass object:

    apiVersion: snapshot.storage.k8s.io/v1beta1
    kind: VolumeSnapshotClass
    metadata:
      labels:
        velero.io/csi-volumesnapshot-class: "true"
      name: cbs-snapclass
    driver: com.tencent.cloud.csi.cbs
    deletionPolicy: Delete

Run the following command to check whether the VolumeSnapshotClass has been created successfully:

    $ kubectl get volumesnapshotclass
    NAME            DRIVER                      DELETIONPOLICY   AGE
    cbs-snapclass   com.tencent.cloud.csi.cbs   Delete           17m

(Optional) Creating sample resource for cluster A

Note: Skip this step if you don't need to back up the PVC.

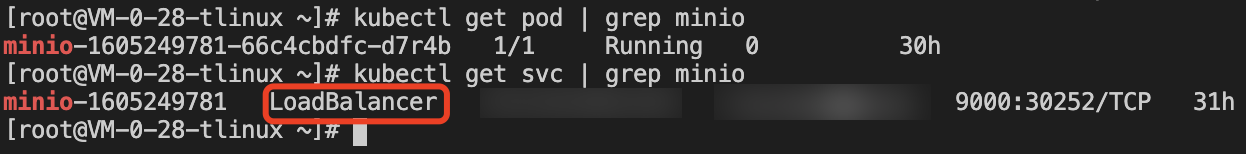

Deploy a MinIO workload with the PVC in a Velero instance in cluster A. Here, the cbs-csi dynamic storage class is used to create the PVC and PV.

Use `provisioner` in the cluster to dynamically create the PV for the `com.tencent.cloud.csi.cbs` storage class. A sample PVC is as follows:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      annotations:
        volume.beta.kubernetes.io/storage-provisioner: com.tencent.cloud.csi.cbs
      name: minio
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi
      storageClassName: cbs-csi
      volumeMode: Filesystem

Use the Helm tool to create a MinIO testing service that references the above PVC. For more information on MinIO installation, see here. In this sample, a load balancer has been bound to the MinIO service, and you can access the management page by using a public network address.

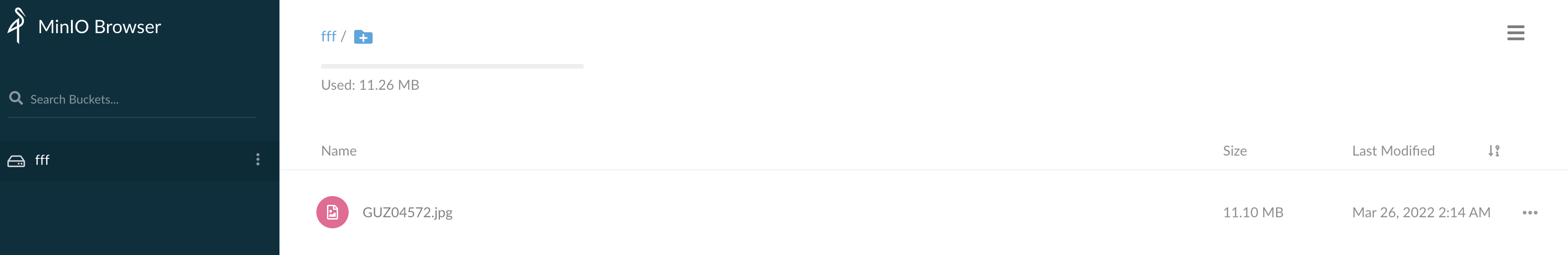

Log in to the MinIO web management page and upload the images for testing as shown below:

Backup and restoration

- To create a backup in cluster A, see Creating a backup in cluster A in the Cluster Migration directions.

- To perform a restoration in cluster B, see Performing a restoration in cluster B in the Cluster Migration directions.

- Verify the migration result:

  - If you don't need to back up the PVC, see Verifying migration result in the Cluster Migration directions.

  - If you need to back up the PVC, perform a verification as follows:

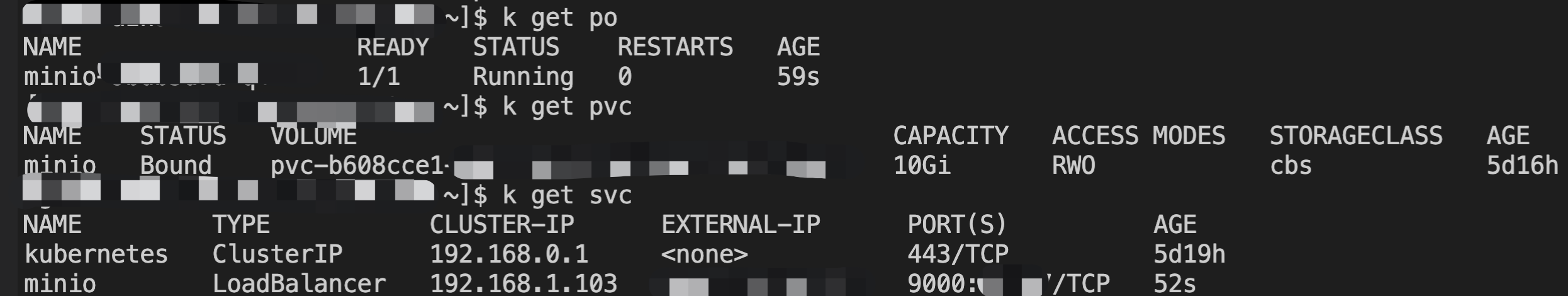

a. Run the following command to verify the resources in cluster B after migration. You can see that the Pods, PVC, and Service have been successfully migrated as shown below:

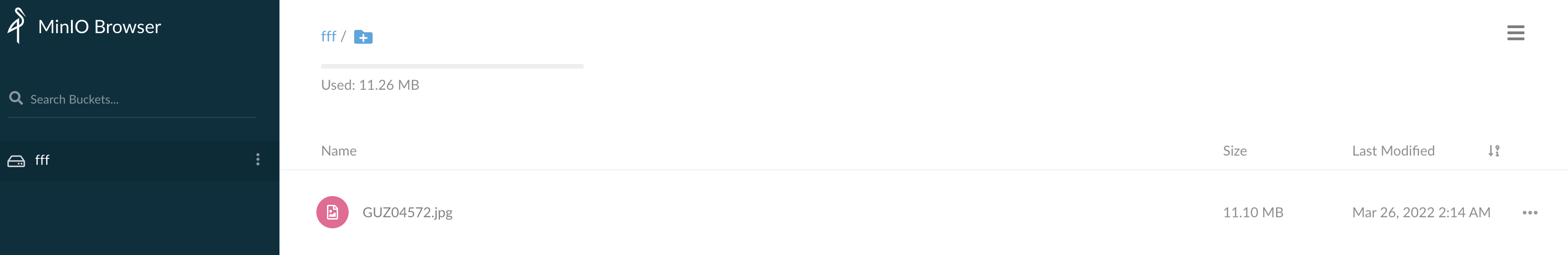

b. Log in to the MinIO service in cluster B. You can see that the images in the MinIO service are not lost, indicating that the persistent volume data has been successfully migrated as expected.

- Now, resource migration from the TKE cluster to the TKE Serverless cluster is completed.

After the migration is complete, run the following command to restore the backup storage locations of clusters A and B to read/write mode, so that the next backup task can be performed normally:

    kubectl patch backupstoragelocation default --namespace velero \
      --type merge \
      --patch '{"spec":{"accessMode":"ReadWrite"}}'
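The backup and restoration commands themselves are documented in the Cluster Migration directions. As a rough sketch of the overall sequence, the helper below prints each command and runs it only when `DRY_RUN=0`. It assumes the backup storage location is switched to `ReadOnly` before restoring, which the final patch command above implies. The function names, the backup name, and the namespace are placeholders, and each function must be run with the kubeconfig of the cluster it targets.

```bash
#!/usr/bin/env bash
# Print a command; execute it only when DRY_RUN is explicitly set to 0.
run() {
  printf '+ %s\n' "$*"
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; fi
}

# Switch the default backup storage location to ReadOnly or ReadWrite.
set_bsl_mode() {
  run kubectl patch backupstoragelocation default --namespace velero \
    --type merge --patch "{\"spec\":{\"accessMode\":\"$1\"}}"
}

# Run against cluster A: back up one namespace.
backup_on_a() {
  local backup="$1" namespace="$2"
  run velero backup create "$backup" --include-namespaces "$namespace"
}

# Run against cluster B: restore from the backup, then reopen the location.
restore_on_b() {
  local backup="$1"
  set_bsl_mode ReadOnly &&
    run velero restore create --from-backup "$backup" &&
    set_bsl_mode ReadWrite
}
```

With the default dry-run setting, `backup_on_a demo-backup default` only prints the command, which makes it easy to review the sequence before running it with `DRY_RUN=0`.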

Serverless Cluster FAQs

- Failed to pull an image: See Image Repository.

- Failed to perform a DNS query: This type of failure often takes the form of failing to pull a Pod image or deliver logs to a self-built Kafka cluster. For more information, see Customized DNS Service of Serverless Cluster.

- Failed to deliver logs to CLS: When you use a TKE Serverless cluster to deliver logs to CLS for the first time, you need to authorize the service as instructed in Enabling Log Collection.

- By default, up to 100 Pods can be created for each cluster. If you need to create more, see Default Quota.

- When Pods are frequently terminated and recreated, the `Timeout to ensure pod sandbox` error is reported: The add-ons in TKE Serverless cluster Pods communicate with the control plane for health checks. If the network remains disconnected for six minutes after Pod creation, the control plane will initiate the termination and recreation. In this case, check whether the security group associated with the Pod allows access to the 169.254 route.

- Pod port access failure/not ready:

  - Check whether the service container port conflicts with the TKE Serverless cluster control plane port as instructed in Port Limits.

  - If the Pod can be pinged but telnet fails, check the security group.

- When creating an instance, you can use the following features to speed up image pull: Mirror cache and Mirror reuse.

- Failed to dump business logs: After a TKE Serverless job exits, the underlying resources are reclaimed, and container logs can't be viewed by using the `kubectl logs` command, which hinders debugging. You can dump the business logs by delaying the termination or setting the `terminationMessage` field as instructed in How to set container's termination message?.

- The Pod restarts frequently, and the `ImageGCFailed` error is reported: A TKE Serverless cluster Pod has 20 GiB of disk space by default. If the disk usage reaches 80%, the TKE Serverless cluster control plane triggers the container image reclamation process to try to remove unused images and free up space. If it fails to free up any space, `ImageGCFailed: failed to garbage collect required amount of images` is reported to remind you that the disk space is insufficient. Common causes of insufficient disk space include:

  - The business has a lot of temporary output.

  - The business holds deleted file descriptors, so some space is not freed up.
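To check for the two causes of insufficient disk space listed above from inside a container, a sketch along the following lines may help. It assumes a Linux `/proc` file system and a POSIX `df`. Both function names are hypothetical, and the optional argument to `list_deleted_open_files` exists only so that another directory can stand in for `/proc`.

```bash
#!/usr/bin/env bash
# List file descriptors that still point at deleted files. The space held by
# such files is not released until the owning process closes them.
list_deleted_open_files() {
  local root="${1:-/proc}" fd target
  for fd in "$root"/[0-9]*/fd/*; do
    target="$(readlink "$fd" 2>/dev/null)" || continue
    case "$target" in
      *' (deleted)') printf '%s -> %s\n' "$fd" "$target" ;;
    esac
  done
}

# Read `df -P <path>` output on stdin and print the usage percentage as a number.
disk_usage_percent() {
  awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}
```

For example, `df -P / | disk_usage_percent` shows how close the disk is to the 80% mark mentioned above, and `list_deleted_open_files` shows which processes would need to close files or restart to release space.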
