Overview

DNS resolution is the first step of service access in a Kubernetes cluster, so its stability and performance are critical. Configuring and using DNS well involves many aspects. This document describes DNS best practices for TKE clusters.

Selecting the Most Appropriate CoreDNS Version

The following table lists the default CoreDNS versions deployed in TKE clusters on different versions.

Due to historical reasons, CoreDNS v1.6.2 may still be deployed in clusters on v1.18 or later. If the current CoreDNS version doesn't meet your requirements, you can manually upgrade it as described in Manual Upgrade (Upgrading to v1.7.0 or Upgrading to v1.8.4).

Configuring an Appropriate Number of CoreDNS Replicas

1. The default number of CoreDNS replicas in TKE is 2, and podAntiAffinity is configured to deploy the two replicas on different nodes.

2. If your cluster has more than 80 nodes, we recommend you install NodeLocal DNSCache as instructed in Using NodeLocal DNS Cache in a TKE Cluster.

3. Generally, you can determine the number of CoreDNS replicas based on the QPS of business access to DNS, number of nodes, or total number of CPU cores. After you install NodeLocal DNSCache, we recommend you use up to ten CoreDNS replicas. You can configure the number of replicas as follows:

Number of replicas = min ( max ( ceil (QPS/10000), ceil (number of cluster nodes/8) ), 10 )

Example:

If the cluster has ten nodes and the QPS of DNS service requests is 22,000, configure the number of replicas to 3.

If the cluster has 30 nodes and the QPS of DNS service requests is 15,000, configure the number of replicas to 4.

If the cluster has 100 nodes and the QPS of DNS service requests is 50,000, configure the number of replicas to 10 (NodeLocal DNSCache has been deployed).

4. You can install the DNSAutoScaler add-on in the console to automatically adjust the number of CoreDNS replicas (smooth upgrade should be configured in advance). Below is its default configuration:

       data:
         ladder: |-
           {
             "coresToReplicas":
             [
               [ 1, 1 ],
               [ 128, 3 ],
               [ 512, 4 ]
             ],
             "nodesToReplicas":
             [
               [ 1, 1 ],
               [ 2, 2 ]
             ]
           }
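The sizing rule in step 3 and a ladder configuration like the one in step 4 can be compared with a short script. The following Python sketch is illustrative only: the function names are hypothetical, and the ladder lookup assumes the step-function behavior of the open-source cluster-proportional-autoscaler (use the highest step whose threshold does not exceed the current value, then take the larger of the cores-based and nodes-based results). The DNSAutoScaler add-on in TKE may evaluate the ladder differently.

```python
import json
import math
from bisect import bisect_right

# Ladder values taken from the default configuration shown above.
DEFAULT_LADDER = json.loads("""
{
  "coresToReplicas": [[1, 1], [128, 3], [512, 4]],
  "nodesToReplicas": [[1, 1], [2, 2]]
}
""")


def replicas_by_formula(dns_qps, node_count, max_replicas=10):
    """min(max(ceil(QPS/10000), ceil(nodes/8)), 10), as given in step 3."""
    by_qps = math.ceil(dns_qps / 10000)
    by_nodes = math.ceil(node_count / 8)
    return min(max(by_qps, by_nodes), max_replicas)


def step_lookup(steps, value):
    """Return the replica count of the highest step whose threshold <= value.

    Assumption: a value below the first threshold uses the first step.
    """
    if not steps:
        return 1
    thresholds = [threshold for threshold, _ in steps]
    position = bisect_right(thresholds, value)  # number of thresholds <= value
    return steps[max(position - 1, 0)][1]


def replicas_by_ladder(ladder, cores, nodes):
    """Take the larger of the cores-based and nodes-based lookups."""
    by_cores = step_lookup(ladder.get("coresToReplicas", []), cores)
    by_nodes = step_lookup(ladder.get("nodesToReplicas", []), nodes)
    return max(by_cores, by_nodes)


if __name__ == "__main__":
    # The three examples from step 3, as (nodes, DNS QPS).
    for nodes, qps in [(10, 22000), (30, 15000), (100, 50000)]:
        print(nodes, qps, replicas_by_formula(qps, nodes))
    # A hypothetical cluster with 40 nodes and 256 cores.
    print(replicas_by_ladder(DEFAULT_LADDER, cores=256, nodes=40))
```

Under these assumptions, the default ladder never yields more than four replicas, so a cluster with a high DNS QPS may need additional steps in the ladder to match the formula in step 3.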

Using NodeLocal DNSCache

NodeLocal DNSCache can be deployed in a TKE cluster to improve the service discovery stability and performance. It improves cluster DNS performance by running a DNS caching agent on cluster nodes as a DaemonSet.

For more information on NodeLocal DNSCache and how to deploy NodeLocal DNSCache in a TKE cluster, see Using NodeLocal DNS Cache in a TKE Cluster.

Configuring CoreDNS Smooth Upgrade

During node restart or CoreDNS upgrade, some CoreDNS replicas may be unavailable for a period of time. You can configure the following items to maximize the DNS service availability and implement smooth upgrade.

No configuration required in iptables mode

If kube-proxy adopts the iptables mode, kube-proxy clears conntrack entries after iptables rule synchronization, leaving no session persistence problem and requiring no configuration.

Configuring the session persistence timeout period of the IPVS UDP protocol in IPVS mode

If kube-proxy adopts the IPVS mode and the business itself doesn't provide the UDP service, you can reduce the session persistence timeout period of the IPVS UDP protocol to minimize the service unavailability.

1. If the cluster is on v1.18 or later, kube-proxy provides the --ipvs-udp-timeout parameter. Its default value is 0s, which means that the system default value of 300s is used. We recommend you specify --ipvs-udp-timeout=10s. Configure the kube-proxy DaemonSet as follows:

       spec:
         containers:
         - args:
           - --kubeconfig=/var/lib/kube-proxy/config
           - --hostname-override=$(NODE_NAME)
           - --v=2
           - --proxy-mode=ipvs
           - --ipvs-scheduler=rr
           - --nodeport-addresses=$(HOST_IP)/32
           - --ipvs-udp-timeout=10s
           command:
           - kube-proxy
           name: kube-proxy

2. If the cluster is on v1.16 or earlier, kube-proxy doesn't support this parameter. In this case, use the ipvsadm tool to modify the timeout on the nodes in batches as follows:

       yum install -y ipvsadm
       ipvsadm --set 900 120 10

3. After completing the configuration, verify the result as follows:

       ipvsadm -L --timeout
       Timeout (tcp tcpfin udp): 900 120 10

Note

After completing the configuration, you need to wait for five minutes before proceeding to the subsequent steps. If your business uses the UDP service, submit a ticket for assistance.
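If you need to check many nodes, the verification in step 3 can be scripted. The following Python sketch parses the `ipvsadm -L --timeout` output in the format shown above and reports whether the UDP timeout matches the expected value. The function names are hypothetical, and how the command is run on each node (for example, over SSH) is left out.

```python
import re
import subprocess

TIMEOUT_LINE = re.compile(r"Timeout \(tcp tcpfin udp\):\s*(\d+)\s+(\d+)\s+(\d+)")


def parse_ipvs_timeouts(output):
    """Extract the three IPVS timeouts (in seconds) from ipvsadm output."""
    match = TIMEOUT_LINE.search(output)
    if match is None:
        raise ValueError("no IPVS timeout line found in output")
    tcp, tcpfin, udp = (int(group) for group in match.groups())
    return {"tcp": tcp, "tcpfin": tcpfin, "udp": udp}


def udp_timeout_ok(output, expected_udp=10):
    """Return True if the UDP timeout equals the expected value."""
    return parse_ipvs_timeouts(output)["udp"] == expected_udp


def current_ipvs_timeouts():
    """Run `ipvsadm -L --timeout` on the local node and parse the result.

    Requires ipvsadm to be installed and sufficient privileges.
    """
    result = subprocess.run(
        ["ipvsadm", "-L", "--timeout"],
        capture_output=True, text=True, check=True,
    )
    return parse_ipvs_timeouts(result.stdout)


if __name__ == "__main__":
    sample = "Timeout (tcp tcpfin udp): 900 120 10"
    print(parse_ipvs_timeouts(sample), udp_timeout_ok(sample))
```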

Configuring graceful shutdown for CoreDNS

You can configure lameduck to make replicas that have already received a shutdown signal continue providing the service for a certain period of time. Configure the CoreDNS ConfigMap as follows. Below is only a part of the configuration of CoreDNS v1.6.2. For information about the configuration of other versions, see Manual Upgrade.

    .:53 {
        health {
            lameduck 30s
        }
        kubernetes cluster.local. in-addr.arpa ip6.arpa {
            pods insecure
            upstream
            fallthrough in-addr.arpa ip6.arpa
        }
    }

Configuring CoreDNS service readiness confirmation

After a new replica starts, its readiness must be confirmed before it is added to the backend list of the DNS service.

1. Enable the ready plugin and configure the CoreDNS ConfigMap as follows. Below is only a part of the configuration of CoreDNS v1.6.2. For information about the configuration of other versions, see Manual Upgrade.

       .:53 {
           ready
           kubernetes cluster.local. in-addr.arpa ip6.arpa {
               pods insecure
               upstream
               fallthrough in-addr.arpa ip6.arpa
           }
       }

2. Add the readinessProbe configuration for CoreDNS:

       readinessProbe:
         failureThreshold: 5
         httpGet:
           path: /ready
           port: 8181
           scheme: HTTP
         initialDelaySeconds: 30
         periodSeconds: 10
         successThreshold: 1
         timeoutSeconds: 5
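To see what the probe observes, you can query the same endpoint by hand. The following Python sketch issues one HTTP GET against the /ready path on port 8181 used in the probe above and treats only an HTTP 200 response as ready. The function name and the injectable `opener` parameter are illustrative assumptions; run it from a place that can reach the CoreDNS Pod, for example after port-forwarding 8181 to localhost.

```python
import urllib.request


def coredns_ready(url="http://localhost:8181/ready", timeout=5.0,
                  opener=urllib.request.urlopen):
    """Return True if the ready endpoint answers with HTTP 200.

    `opener` defaults to urllib.request.urlopen and can be replaced
    in tests. Connection errors, timeouts, and non-2xx responses
    (raised by urlopen as HTTPError, a subclass of OSError) count
    as "not ready".
    """
    try:
        with opener(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        return False
```

The default timeout of five seconds mirrors timeoutSeconds in the probe. Unlike the kubelet, this sketch performs a single check and does not apply failureThreshold or periodSeconds.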

Configuring CoreDNS to Access Upstream DNS over UDP

When CoreDNS communicates with an upstream DNS server, it uses the protocol of the client request (UDP or TCP) by default. However, in TKE, the default upstream of CoreDNS is the DNS service in the VPC, which offers limited support for TCP. Therefore, we recommend you configure CoreDNS to prefer UDP as follows (especially when NodeLocal DNSCache is installed):

    .:53 {
        forward . /etc/resolv.conf {
            prefer_udp
        }
    }

Configuring CoreDNS to Filter HINFO Requests

As the DNS service in the VPC doesn't support DNS requests of the HINFO type, we recommend you configure as follows to filter such requests on the CoreDNS side (especially when NodeLocal DNSCache is installed):

    .:53 {
        template ANY HINFO . {
            rcode NXDOMAIN
        }
    }

Configuring CoreDNS to Return "The domain name doesn't exist" for IPv6 AAAA Record Queries

If the business doesn't need to resolve IPv6 domain names, you can configure as follows to reduce the communication costs:

    .:53 {
        template ANY AAAA {
            rcode NXDOMAIN
        }
    }

Note

Do not use this configuration in IPv4/IPv6 dual-stack clusters.

Configuring Custom Domain Name Resolution

Manual Upgrade

Upgrading to v1.7.0

1. Edit the coredns ConfigMap.

       kubectl edit cm coredns -n kube-system

   Modify the content as follows:

       .:53 {
           template ANY HINFO . {
               rcode NXDOMAIN
           }
           errors
           health {
               lameduck 30s
           }
           ready
           kubernetes cluster.local. in-addr.arpa ip6.arpa {
               pods insecure
               fallthrough in-addr.arpa ip6.arpa
           }
           prometheus :9153
           forward . /etc/resolv.conf {
               prefer_udp
           }
           cache 30
           reload
           loadbalance
       }

2. Edit the coredns Deployment.

       kubectl edit deployment coredns -n kube-system

Replace the image as follows:

image: ccr.ccs.tencentyun.com/tkeimages/coredns:1.7.0

Upgrading to v1.8.4

1. Edit the coredns ClusterRole.

       kubectl edit clusterrole system:coredns

   Modify the content as follows:

       rules:
       - apiGroups:
         - '*'
         resources:
         - endpoints
         - services
         - pods
         - namespaces
         verbs:
         - list
         - watch
       - apiGroups:
         - discovery.k8s.io
         resources:
         - endpointslices
         verbs:
         - list
         - watch

2. Edit the coredns ConfigMap.

       kubectl edit cm coredns -n kube-system

   Modify the content as follows:

       .:53 {
           template ANY HINFO . {
               rcode NXDOMAIN
           }
           errors
           health {
               lameduck 30s
           }
           ready
           kubernetes cluster.local. in-addr.arpa ip6.arpa {
               pods insecure
               fallthrough in-addr.arpa ip6.arpa
           }
           prometheus :9153
           forward . /etc/resolv.conf {
               prefer_udp
           }
           cache 30
           reload
           loadbalance
       }

3. Edit the coredns Deployment.

       kubectl edit deployment coredns -n kube-system

Replace the image as follows:

image: ccr.ccs.tencentyun.com/tkeimages/coredns:1.8.4

Suggestions on Business Configuration

In addition to the best practices of the DNS service, you can also optimize the configuration on the business side to improve the DNS experience.

1. By default, a domain name in a Kubernetes cluster is generally resolved only after multiple resolution requests. By viewing /etc/resolv.conf in a Pod, you will see that the default value of ndots is 5. For example, when the kubernetes.default.svc.cluster.local Service is queried from a Pod in the debug namespace:

   - The domain name has four dots (.), which is fewer than ndots, so the system first appends the first search domain and queries kubernetes.default.svc.cluster.local.debug.svc.cluster.local, but cannot find the domain name.
   - The system continues with kubernetes.default.svc.cluster.local.svc.cluster.local, but still cannot find the domain name.
   - The system continues with kubernetes.default.svc.cluster.local.cluster.local, but still cannot find the domain name.
   - The system finally queries kubernetes.default.svc.cluster.local without appending a search domain. The query succeeds, and the corresponding ClusterIP is returned.

2. The simple Service domain name above is resolved only on the fourth query, which produces a large number of useless DNS requests in the cluster. Therefore, set an appropriate ndots value based on the access type of the business to reduce the number of queries:

       spec:
         dnsConfig:
           options:
           - name: ndots
             value: "2"
         containers:
         - image: nginx
           imagePullPolicy: IfNotPresent
           name: diagnosis

3. In addition, you can optimize the domain name configuration for your business to access Services:

   - The Pod should access a Service in the current namespace through <service-name>.
   - The Pod should access a Service in another namespace through <service-name>.<namespace-name>.
   - The Pod should access an external domain name through a fully qualified domain name (FQDN) with a dot (.) added at the end to reduce useless queries.

Related Content
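The effect of ndots described in Suggestions on Business Configuration can be reproduced with a small script. The following Python sketch lists the names a stub resolver would try, in order, for a given ndots value. It is a simplified model of the usual resolv.conf rules (a name with fewer dots than ndots is tried with the search domains first; a name ending in a dot skips the search list). The function name is hypothetical, and the search list is an assumption based on the debug namespace example; the real list in a Pod may contain additional domains.

```python
def candidate_queries(name, search_domains, ndots=5):
    """Return the names a stub resolver would try, in order."""
    if name.endswith("."):
        # A trailing dot marks the name as fully qualified: no search list.
        return [name]
    with_search = [f"{name}.{domain}" for domain in search_domains]
    if name.count(".") >= ndots:
        # Enough dots: try the name as-is first, then the search list.
        return [name] + with_search
    # Too few dots: walk the search list first, then try the name as-is.
    return with_search + [name]


# Assumed search list of a Pod in the "debug" namespace.
SEARCH = ["debug.svc.cluster.local", "svc.cluster.local", "cluster.local"]

if __name__ == "__main__":
    name = "kubernetes.default.svc.cluster.local"
    for ndots in (5, 2):
        print(ndots, candidate_queries(name, SEARCH, ndots))
```

With ndots set to 5, the name itself is the fourth candidate. With ndots set to 2, or with a trailing dot, it is the first, so the query that succeeds is sent immediately.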

Configuration description

errors

It logs error messages.

health

It reports the health status and is used for health check configuration such as livenessProbe. It listens on port 8080 by default and uses the path http://localhost:8080/health.

Note

If there are multiple server blocks, health can be configured only once or configured for different ports.

    com {
        whoami
        health :8080
    }
    net {
        erratic
        health :8081
    }

lameduck

It is used to configure the graceful shutdown duration. It is implemented as follows: when CoreDNS receives a shutdown signal, the hook executes sleep to ensure that the service can continue to run for a certain period of time.

ready

It reports the plugin status and is used for service readiness check configuration such as readinessProbe. It listens on port 8181 by default and uses the path http://localhost:8181/ready.

kubernetes

It is a Kubernetes plugin that can resolve Services in the cluster.

prometheus

It is a metrics data API used to get the monitoring data. Its path is http://localhost:9153/metrics.

forward (proxy)

It forwards requests that cannot be processed locally to an upstream DNS server and uses the /etc/resolv.conf configuration of the host by default.

- According to the configuration of forward aaa bbb, the upstream DNS server list [aaa,bbb] is maintained internally.
- When a request arrives, an upstream DNS server is selected from the [aaa,bbb] list to forward the request according to the preset policy (random|round_robin|sequential, where random is the default policy). If forwarding fails, another server is selected for forwarding, and regular health checks are performed on the failed server until it becomes healthy.
- If a server fails the health check multiple times (twice by default) in a row, its status is set to down, and it is skipped in subsequent server selection.
- If all servers are down, the system randomly selects a server for forwarding.

Therefore, CoreDNS can intelligently switch between multiple upstream servers. As long as there is an available server in the forwarding list, the request can succeed.
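The selection behavior described above can be modeled in a few lines. The following Python sketch is a simplified illustration, not the CoreDNS implementation: the class and method names are hypothetical, health checks are recorded by the caller instead of running in the background, and `send` stands in for the actual network exchange.

```python
import random


class Upstream:
    """One upstream DNS server and its consecutive health check failures."""

    def __init__(self, address, max_fails=2):
        self.address = address
        self.max_fails = max_fails  # "twice by default" in the text above
        self.fails = 0

    @property
    def down(self):
        return self.fails >= self.max_fails

    def record_health_check(self, ok):
        # A successful check resets the counter; a failure increments it.
        self.fails = 0 if ok else self.fails + 1


class Forwarder:
    def __init__(self, addresses, policy="random", max_fails=2, rng=None):
        self.upstreams = [Upstream(a, max_fails) for a in addresses]
        self.policy = policy
        self.rng = rng or random.Random()
        self._next = 0  # round_robin position

    def candidates(self):
        """Return the upstreams to try for one request, in order."""
        healthy = [u for u in self.upstreams if not u.down]
        if not healthy:
            # All servers are down: pick one at random anyway.
            return [self.rng.choice(self.upstreams)]
        if self.policy == "sequential":
            return healthy
        if self.policy == "round_robin":
            start = self._next % len(healthy)
            self._next += 1
            return healthy[start:] + healthy[:start]
        if self.policy == "random":
            return self.rng.sample(healthy, len(healthy))
        raise ValueError(f"unknown policy: {self.policy}")

    def forward(self, send):
        """Try candidates until send(address) succeeds; return that address."""
        for upstream in self.candidates():
            if send(upstream.address):
                return upstream.address
        return None


if __name__ == "__main__":
    forwarder = Forwarder(["aaa", "bbb"], policy="sequential")
    # Pretend that only "bbb" answers.
    print(forwarder.forward(lambda address: address == "bbb"))
```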

cache

It is the DNS cache.

reload

It hot-reloads the Corefile. The new configuration is reloaded within two minutes after the ConfigMap is modified.

loadbalance

It provides the DNS-based load balancing feature by randomizing the order of records in the answer.

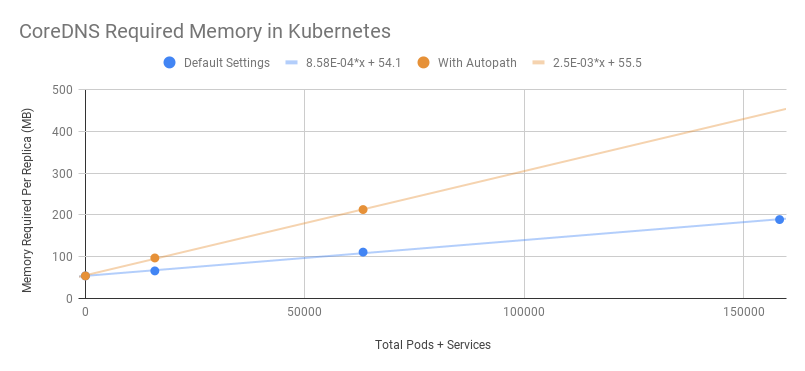

Resource usage of CoreDNS

Memory usage is subject to the number of Pods and Services in the cluster. It is also affected by the size of the enabled cache and by the QPS.

Below is the official data of CoreDNS:

MB required (default settings) = (Pods + Services) / 1000 + 54
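As a quick illustration of the formula above, the following Python sketch estimates the memory required for a few cluster sizes. The function name and the sample cluster sizes are hypothetical and chosen only for illustration; the estimate applies to default settings.

```python
def coredns_memory_mb(pods, services):
    """MB required (default settings) = (Pods + Services) / 1000 + 54."""
    return (pods + services) / 1000 + 54


if __name__ == "__main__":
    # Hypothetical cluster sizes as (Pods, Services).
    for pods, services in [(1000, 200), (5000, 1000), (30000, 5000)]:
        print(pods, services, coredns_memory_mb(pods, services))
```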

CPU usage is subject to the QPS.

Below is the official data of CoreDNS:

A single CoreDNS replica running on a node with two vCPUs and 7.5 GB of memory:

| Query Type | QPS | Avg Latency (ms) | Memory Delta (MB) |
| --- | --- | --- | --- |
| external | 6733 | 12.02 | +5 |
| internal | 33669 | 2.608 | +5 |
