- Release Notes and Announcements

- Release Notes

- Announcements

- qGPU Service Adjustment

- Version Upgrade of Master Add-On of TKE Managed Cluster

- Upgrading tke-monitor-agent

- Discontinuing TKE API 2.0

- Instructions on Cluster Resource Quota Adjustment

- Discontinuing Kubernetes v1.14 and Earlier Versions

- Deactivation of Scaling Group Feature

- Notice on TPS Discontinuation on May 16, 2022 at 10:00 (UTC +8)

- Basic Monitoring Architecture Upgrade

- Starting Charging on Managed Clusters

- Instructions on Stopping Delivering the Kubeconfig File to Nodes

- Security Vulnerability Fix Description

- Release Notes

- Product Introduction

- Purchase Guide

- Quick Start

- TKE General Cluster Guide

- TKE General Cluster Overview

- Purchase a TKE General Cluster

- High-risk Operations of Container Service

- Deploying Containerized Applications in the Cloud

- Kubernetes API Operation Guide

- Open Source Components

- Permission Management

- Cluster Management

- Cluster Overview

- Cluster Hosting Modes Introduction

- Cluster Lifecycle

- Creating a Cluster

- Deleting a Cluster

- Cluster Scaling

- Changing the Cluster Operating System

- Connecting to a Cluster

- Upgrading a Cluster

- Enabling IPVS for a Cluster

- Enabling GPU Scheduling for a Cluster

- Custom Kubernetes Component Launch Parameters

- Using KMS for Kubernetes Data Source Encryption

- Images

- Worker node introduction

- Normal Node Management

- Native Node Management

- Overview

- Purchasing Native Nodes

- Lifecycle of a Native Node

- Native Node Parameters

- Creating Native Nodes

- Deleting Native Nodes

- Self-Heal Rules

- Declarative Operation Practice

- Native Node Scaling

- In-place Pod Configuration Adjustment

- Enabling SSH Key Login for a Native Node

- Management Parameters

- Enabling Public Network Access for a Native Node

- Supernode management

- Registered Node Management

- GPU Share

- Kubernetes Object Management

- Overview

- Namespace

- Workload

- Deployment Management

- StatefulSet Management

- DaemonSet Management

- Job Management

- CronJob Management

- Setting the Resource Limit of Workload

- Setting the Scheduling Rule for a Workload

- Setting the Health Check for a Workload

- Setting the Run Command and Parameter for a Workload

- Using a Container Image in a TCR Enterprise Instance to Create a Workload

- Auto Scaling

- Configuration

- Register node management

- Service Management

- Ingress Management

- Storage Management

- Application and Add-On Feature Management Description

- Add-On Management

- Add-on Overview

- Add-On Lifecycle Management

- CBS-CSI Description

- UserGroupAccessControl

- COS-CSI

- CFS-CSI

- P2P

- OOMGuard

- TCR Introduction

- TCR Hosts Updater

- DNSAutoscaler

- NodeProblemDetectorPlus Add-on

- NodeLocalDNSCache

- Network Policy

- DynamicScheduler

- DeScheduler

- Nginx-ingress

- HPC

- Description of tke-monitor-agent

- GPU-Manager Add-on

- CFSTURBO-CSI

- tke-log-agent

- Helm Application

- Application Market

- Network Management

- Container Network Overview

- GlobalRouter Mode

- VPC-CNI Mode

- VPC-CNI Mode

- Multiple Pods with Shared ENI Mode

- Pods with Exclusive ENI Mode

- Static IP Address Mode Instructions

- Non-static IP Address Mode Instructions

- Interconnection Between VPC-CNI and Other Cloud Resources/IDC Resources

- Security Group of VPC-CNI Mode

- Instructions on Binding an EIP to a Pod

- VPC-CNI Component Description

- Limits on the Number of Pods in VPC-CNI Mode

- Cilium-Overlay Mode

- OPS Center

- Log Management

- Backup Center

- Cloud Native Monitoring

- Remote Terminals

- TKE Serverless Cluster Guide

- TKE Edge Cluster Guide

- TKE Registered Cluster Guide

- TKE Container Instance Guide

- Cloud Native Service Guide

- Best Practices

- Cluster

- Cluster Migration

- Serverless Cluster

- Edge Cluster

- Security

- Service Deployment

- Hybrid Cloud

- Network

- DNS

- Using Network Policy for Network Access Control

- Deploying NGINX Ingress on TKE

- Nginx Ingress High-Concurrency Practices

- Nginx Ingress Best Practices

- Limiting the bandwidth on pods in TKE

- Directly connecting TKE to the CLB of pods based on the ENI

- Use CLB-Pod Direct Connection on TKE

- Obtaining the Real Client Source IP in TKE

- Using Traefik Ingress in TKE

- Release

- Logs

- Monitoring

- OPS

- Removing and Re-adding Nodes from and to Cluster

- Using Ansible to Batch Operate TKE Nodes

- Using Cluster Audit for Troubleshooting

- Renewing a TKE Ingress Certificate

- Using cert-manager to Issue Free Certificates

- Using cert-manager to Issue Free Certificate for DNSPod Domain Name

- Using the TKE NPDPlus Plug-In to Enhance the Self-Healing Capability of Nodes

- Using kubecm to Manage Multiple Clusters kubeconfig

- Quick Troubleshooting Using TKE Audit and Event Services

- Customizing RBAC Authorization in TKE

- Clearing De-registered Tencent Cloud Account Resources

- Terraform

- DevOps

- Auto Scaling

- Cluster Auto Scaling Practices

- Using tke-autoscaling-placeholder to Implement Auto Scaling in Seconds

- Installing metrics-server on TKE

- Using Custom Metrics for Auto Scaling in TKE

- Utilizing HPA to Auto Scale Businesses on TKE

- Using VPA to Realize Pod Scaling up and Scaling down in TKE

- Adjusting HPA Scaling Sensitivity Based on Different Business Scenarios

- Storage

- Containerization

- Microservice

- Cost Management

- Fault Handling

- Disk Full

- High Workload

- Memory Fragmentation

- Cluster DNS Troubleshooting

- Cluster kube-proxy Troubleshooting

- Cluster API Server Inaccessibility Troubleshooting

- Service and Ingress Inaccessibility Troubleshooting

- Troubleshooting for Pod Network Inaccessibility

- Pod Status Exception and Handling

- Authorizing Tencent Cloud OPS Team for Troubleshooting

- Engel Ingres appears in Connechtin Reverside

- CLB Loopback

- CLB Ingress Creation Error

- API Documentation

- History

- Introduction

- API Category

- Making API Requests

- Cluster APIs

- DescribeEncryptionStatus

- DisableEncryptionProtection

- EnableEncryptionProtection

- AcquireClusterAdminRole

- CreateClusterEndpoint

- CreateClusterEndpointVip

- DeleteCluster

- DeleteClusterEndpoint

- DeleteClusterEndpointVip

- DescribeAvailableClusterVersion

- DescribeClusterAuthenticationOptions

- DescribeClusterCommonNames

- DescribeClusterEndpointStatus

- DescribeClusterEndpointVipStatus

- DescribeClusterEndpoints

- DescribeClusterKubeconfig

- DescribeClusterLevelAttribute

- DescribeClusterLevelChangeRecords

- DescribeClusterSecurity

- DescribeClusterStatus

- DescribeClusters

- DescribeEdgeAvailableExtraArgs

- DescribeEdgeClusterExtraArgs

- DescribeResourceUsage

- DisableClusterDeletionProtection

- EnableClusterDeletionProtection

- GetClusterLevelPrice

- GetUpgradeInstanceProgress

- ModifyClusterAttribute

- ModifyClusterAuthenticationOptions

- ModifyClusterEndpointSP

- UpgradeClusterInstances

- CreateCluster

- UpdateClusterVersion

- UpdateClusterKubeconfig

- DescribeBackupStorageLocations

- DeleteBackupStorageLocation

- CreateBackupStorageLocation

- Add-on APIs

- Network APIs

- Node APIs

- Node Pool APIs

- TKE Edge Cluster APIs

- DescribeTKEEdgeScript

- DescribeTKEEdgeExternalKubeconfig

- DescribeTKEEdgeClusters

- DescribeTKEEdgeClusterStatus

- DescribeTKEEdgeClusterCredential

- DescribeEdgeClusterInstances

- DescribeEdgeCVMInstances

- DescribeECMInstances

- DescribeAvailableTKEEdgeVersion

- DeleteTKEEdgeCluster

- DeleteEdgeClusterInstances

- DeleteEdgeCVMInstances

- DeleteECMInstances

- CreateTKEEdgeCluster

- CreateECMInstances

- CheckEdgeClusterCIDR

- ForwardTKEEdgeApplicationRequestV3

- UninstallEdgeLogAgent

- InstallEdgeLogAgent

- DescribeEdgeLogSwitches

- CreateEdgeLogConfig

- CreateEdgeCVMInstances

- UpdateEdgeClusterVersion

- DescribeEdgeClusterUpgradeInfo

- Cloud Native Monitoring APIs

- Virtual node APIs

- Other APIs

- Scaling group APIs

- Data Types

- Error Codes

- API Mapping Guide

- TKE Insight

- TKE Scheduling

- FAQs

- Service Agreement

- Contact Us

- Purchase Channels

- Glossary

- User Guide(Old)

- Release Notes and Announcements

- Release Notes

- Announcements

- qGPU Service Adjustment

- Version Upgrade of Master Add-On of TKE Managed Cluster

- Upgrading tke-monitor-agent

- Discontinuing TKE API 2.0

- Instructions on Cluster Resource Quota Adjustment

- Discontinuing Kubernetes v1.14 and Earlier Versions

- Deactivation of Scaling Group Feature

- Notice on TPS Discontinuation on May 16, 2022 at 10:00 (UTC +8)

- Basic Monitoring Architecture Upgrade

- Starting Charging on Managed Clusters

- Instructions on Stopping Delivering the Kubeconfig File to Nodes

- Security Vulnerability Fix Description

- Release Notes

- Product Introduction

- Purchase Guide

- Quick Start

- TKE General Cluster Guide

- TKE General Cluster Overview

- Purchase a TKE General Cluster

- High-risk Operations of Container Service

- Deploying Containerized Applications in the Cloud

- Kubernetes API Operation Guide

- Open Source Components

- Permission Management

- Cluster Management

- Cluster Overview

- Cluster Hosting Modes Introduction

- Cluster Lifecycle

- Creating a Cluster

- Deleting a Cluster

- Cluster Scaling

- Changing the Cluster Operating System

- Connecting to a Cluster

- Upgrading a Cluster

- Enabling IPVS for a Cluster

- Enabling GPU Scheduling for a Cluster

- Custom Kubernetes Component Launch Parameters

- Using KMS for Kubernetes Data Source Encryption

- Images

- Worker node introduction

- Normal Node Management

- Native Node Management

- Overview

- Purchasing Native Nodes

- Lifecycle of a Native Node

- Native Node Parameters

- Creating Native Nodes

- Deleting Native Nodes

- Self-Heal Rules

- Declarative Operation Practice

- Native Node Scaling

- In-place Pod Configuration Adjustment

- Enabling SSH Key Login for a Native Node

- Management Parameters

- Enabling Public Network Access for a Native Node

- Supernode management

- Registered Node Management

- GPU Share

- Kubernetes Object Management

- Overview

- Namespace

- Workload

- Deployment Management

- StatefulSet Management

- DaemonSet Management

- Job Management

- CronJob Management

- Setting the Resource Limit of Workload

- Setting the Scheduling Rule for a Workload

- Setting the Health Check for a Workload

- Setting the Run Command and Parameter for a Workload

- Using a Container Image in a TCR Enterprise Instance to Create a Workload

- Auto Scaling

- Configuration

- Register node management

- Service Management

- Ingress Management

- Storage Management

- Application and Add-On Feature Management Description

- Add-On Management

- Add-on Overview

- Add-On Lifecycle Management

- CBS-CSI Description

- UserGroupAccessControl

- COS-CSI

- CFS-CSI

- P2P

- OOMGuard

- TCR Introduction

- TCR Hosts Updater

- DNSAutoscaler

- NodeProblemDetectorPlus Add-on

- NodeLocalDNSCache

- Network Policy

- DynamicScheduler

- DeScheduler

- Nginx-ingress

- HPC

- Description of tke-monitor-agent

- GPU-Manager Add-on

- CFSTURBO-CSI

- tke-log-agent

- Helm Application

- Application Market

- Network Management

- Container Network Overview

- GlobalRouter Mode

- VPC-CNI Mode

- VPC-CNI Mode

- Multiple Pods with Shared ENI Mode

- Pods with Exclusive ENI Mode

- Static IP Address Mode Instructions

- Non-static IP Address Mode Instructions

- Interconnection Between VPC-CNI and Other Cloud Resources/IDC Resources

- Security Group of VPC-CNI Mode

- Instructions on Binding an EIP to a Pod

- VPC-CNI Component Description

- Limits on the Number of Pods in VPC-CNI Mode

- Cilium-Overlay Mode

- OPS Center

- Log Management

- Backup Center

- Cloud Native Monitoring

- Remote Terminals

- TKE Serverless Cluster Guide

- TKE Edge Cluster Guide

- TKE Registered Cluster Guide

- TKE Container Instance Guide

- Cloud Native Service Guide

- Best Practices

- Cluster

- Cluster Migration

- Serverless Cluster

- Edge Cluster

- Security

- Service Deployment

- Hybrid Cloud

- Network

- DNS

- Using Network Policy for Network Access Control

- Deploying NGINX Ingress on TKE

- Nginx Ingress High-Concurrency Practices

- Nginx Ingress Best Practices

- Limiting the bandwidth on pods in TKE

- Directly connecting TKE to the CLB of pods based on the ENI

- Use CLB-Pod Direct Connection on TKE

- Obtaining the Real Client Source IP in TKE

- Using Traefik Ingress in TKE

- Release

- Logs

- Monitoring

- OPS

- Removing and Re-adding Nodes from and to Cluster

- Using Ansible to Batch Operate TKE Nodes

- Using Cluster Audit for Troubleshooting

- Renewing a TKE Ingress Certificate

- Using cert-manager to Issue Free Certificates

- Using cert-manager to Issue Free Certificate for DNSPod Domain Name

- Using the TKE NPDPlus Plug-In to Enhance the Self-Healing Capability of Nodes

- Using kubecm to Manage Multiple Clusters kubeconfig

- Quick Troubleshooting Using TKE Audit and Event Services

- Customizing RBAC Authorization in TKE

- Clearing De-registered Tencent Cloud Account Resources

- Terraform

- DevOps

- Auto Scaling

- Cluster Auto Scaling Practices

- Using tke-autoscaling-placeholder to Implement Auto Scaling in Seconds

- Installing metrics-server on TKE

- Using Custom Metrics for Auto Scaling in TKE

- Utilizing HPA to Auto Scale Businesses on TKE

- Using VPA to Realize Pod Scaling up and Scaling down in TKE

- Adjusting HPA Scaling Sensitivity Based on Different Business Scenarios

- Storage

- Containerization

- Microservice

- Cost Management

- Fault Handling

- Disk Full

- High Workload

- Memory Fragmentation

- Cluster DNS Troubleshooting

- Cluster kube-proxy Troubleshooting

- Cluster API Server Inaccessibility Troubleshooting

- Service and Ingress Inaccessibility Troubleshooting

- Troubleshooting for Pod Network Inaccessibility

- Pod Status Exception and Handling

- Authorizing Tencent Cloud OPS Team for Troubleshooting

- Engel Ingres appears in Connechtin Reverside

- CLB Loopback

- CLB Ingress Creation Error

- API Documentation

- History

- Introduction

- API Category

- Making API Requests

- Cluster APIs

- DescribeEncryptionStatus

- DisableEncryptionProtection

- EnableEncryptionProtection

- AcquireClusterAdminRole

- CreateClusterEndpoint

- CreateClusterEndpointVip

- DeleteCluster

- DeleteClusterEndpoint

- DeleteClusterEndpointVip

- DescribeAvailableClusterVersion

- DescribeClusterAuthenticationOptions

- DescribeClusterCommonNames

- DescribeClusterEndpointStatus

- DescribeClusterEndpointVipStatus

- DescribeClusterEndpoints

- DescribeClusterKubeconfig

- DescribeClusterLevelAttribute

- DescribeClusterLevelChangeRecords

- DescribeClusterSecurity

- DescribeClusterStatus

- DescribeClusters

- DescribeEdgeAvailableExtraArgs

- DescribeEdgeClusterExtraArgs

- DescribeResourceUsage

- DisableClusterDeletionProtection

- EnableClusterDeletionProtection

- GetClusterLevelPrice

- GetUpgradeInstanceProgress

- ModifyClusterAttribute

- ModifyClusterAuthenticationOptions

- ModifyClusterEndpointSP

- UpgradeClusterInstances

- CreateCluster

- UpdateClusterVersion

- UpdateClusterKubeconfig

- DescribeBackupStorageLocations

- DeleteBackupStorageLocation

- CreateBackupStorageLocation

- Add-on APIs

- Network APIs

- Node APIs

- Node Pool APIs

- TKE Edge Cluster APIs

- DescribeTKEEdgeScript

- DescribeTKEEdgeExternalKubeconfig

- DescribeTKEEdgeClusters

- DescribeTKEEdgeClusterStatus

- DescribeTKEEdgeClusterCredential

- DescribeEdgeClusterInstances

- DescribeEdgeCVMInstances

- DescribeECMInstances

- DescribeAvailableTKEEdgeVersion

- DeleteTKEEdgeCluster

- DeleteEdgeClusterInstances

- DeleteEdgeCVMInstances

- DeleteECMInstances

- CreateTKEEdgeCluster

- CreateECMInstances

- CheckEdgeClusterCIDR

- ForwardTKEEdgeApplicationRequestV3

- UninstallEdgeLogAgent

- InstallEdgeLogAgent

- DescribeEdgeLogSwitches

- CreateEdgeLogConfig

- CreateEdgeCVMInstances

- UpdateEdgeClusterVersion

- DescribeEdgeClusterUpgradeInfo

- Cloud Native Monitoring APIs

- Virtual node APIs

- Other APIs

- Scaling group APIs

- Data Types

- Error Codes

- API Mapping Guide

- TKE Insight

- TKE Scheduling

- FAQs

- Service Agreement

- Contact Us

- Purchase Channels

- Glossary

- User Guide(Old)

Overview

This document introduces the log-related features of TKE, including log collection, storage, and query, and provides suggestions based on actual application scenarios.

Note:

- This document is only applicable to TKE clusters.

- For more information on how to enable log collection for a TKE cluster and its basic usage, see Log Collection.

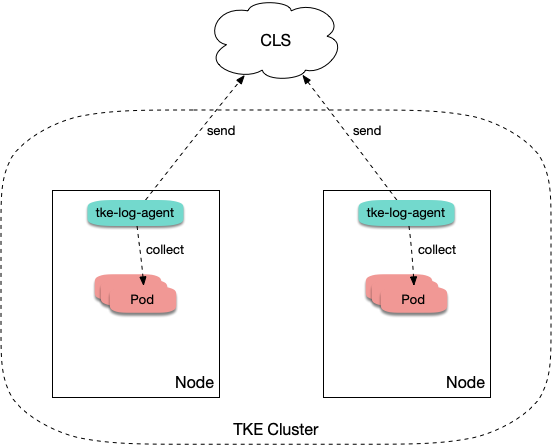

Architecture

After log collection is enabled for a TKE cluster, tke-log-agent is deployed on each node as a DaemonSet. According to the collection rules, tke-log-agent collects container logs from each node and reports them to CLS for storage, indexing and analysis. See the figure below:

Use Cases of Collection Types

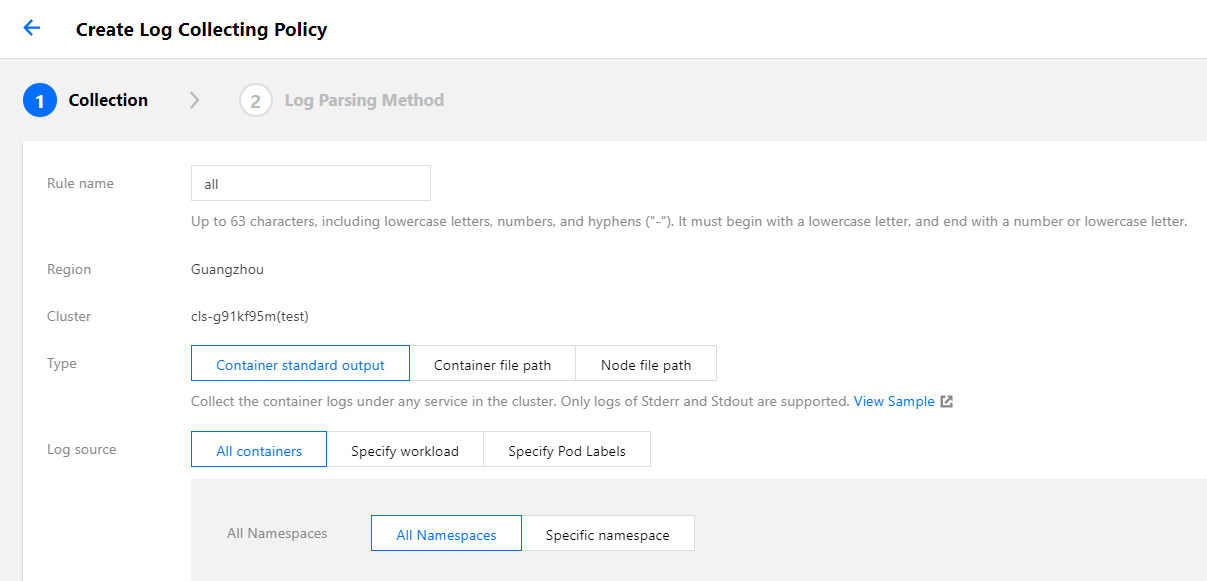

To use the TKE log collection feature, you need to determine the target data source for collection when creating log collection rules. TKE supports collection of standard output, files in a container and files on a host. See below for more details.

Collecting standard output

If you choose to collect from standard output, logs of containers in a pod are written to the standard output, and the log content will be managed by the container runtime (Docker or Containerd). We recommend using standard out as it is the simplest collection mode. Its advantages are as follows:

- No extra volume mounting is needed.

- You can view the log content by simply running

kubectl logs. - No worries about log rotation. The container runtime will perform storage and automatic rotation of logs to prevent situations where the disk capacity is exhausted because some pods write excessive logs.

- You don’t need to worry about the log file path. You can use unified collection rules to cover a wide range of workloads and reduce operation complexity.

The following figure shows a sample collection configuration. For more information on configuration, see Collecting standard output logs of a container.

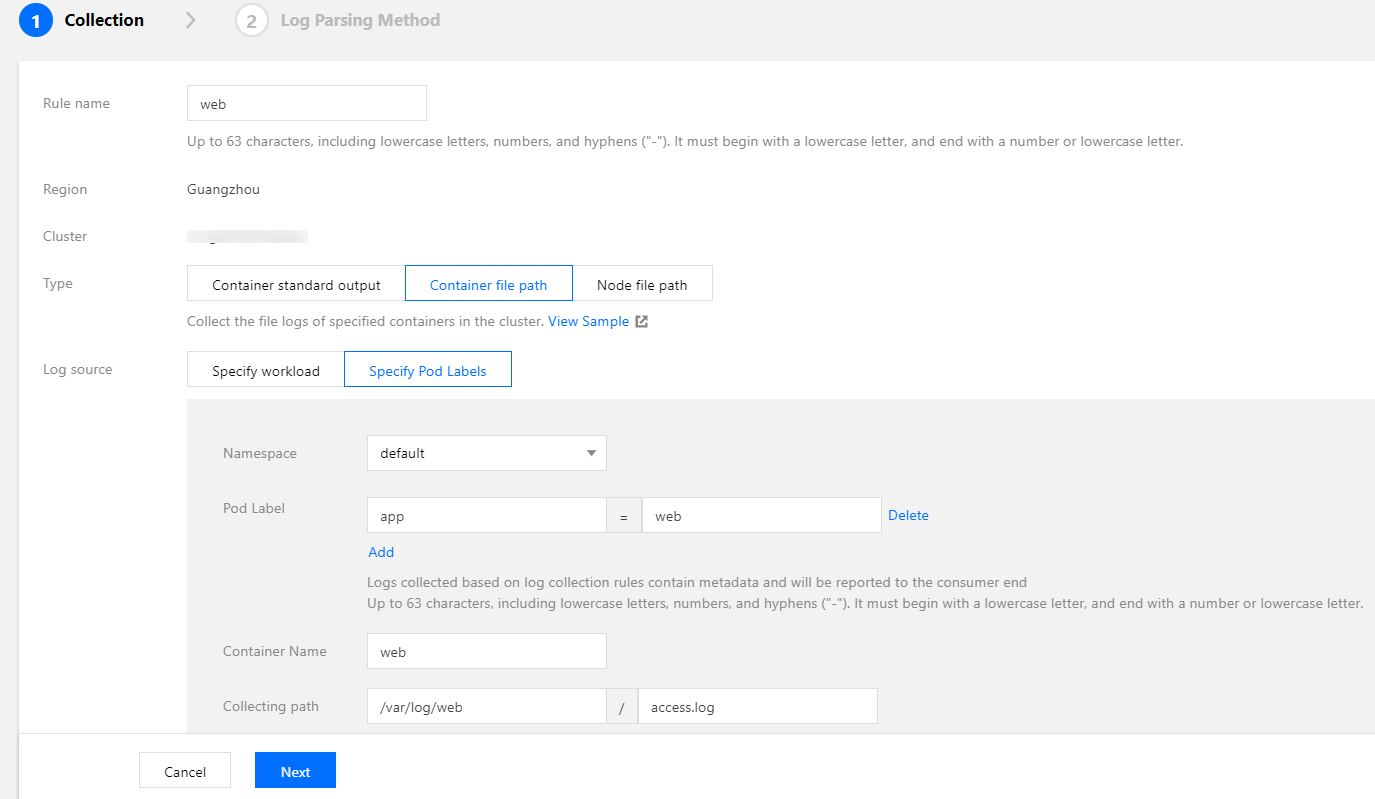

Collecting log files in container

Usually logs are written into log files. When containers are used, log files are written in containers. Please note:

- If no volume is mounted in the log file path:

Log files will be written to the container writable layer and stored in the container data disk. Usually, the path is/var/lib/docker. We recommend that you mount a volume to this path, and the volume should not be used for the system disk. After the container stops, the logs will be cleared. - If a volume is mounted in the log file path:

Log files will be stored in the backend storage of the corresponding volume type. Usually, emptydir is used. After the container stops, the logs will be cleared. During runtime, log files will be stored in/var/lib/kubeletof the host. This path usually does not have a mounted disk, so it will use the system disk. As unified storage is available when using the log collection feature, you are not advised to mount other persistent storage to store log files (such as CBS, COS, or CFS).

Most open-source log collectors require you to mount a volume to the pod log file path before collection, but TKE log collection does not require mounting. To output logs to files in containers, you do not need to consider whether to mount a volume. The following figure shows a sample collection configuration. For more information on configuration, see Collecting File Logs in a Container.

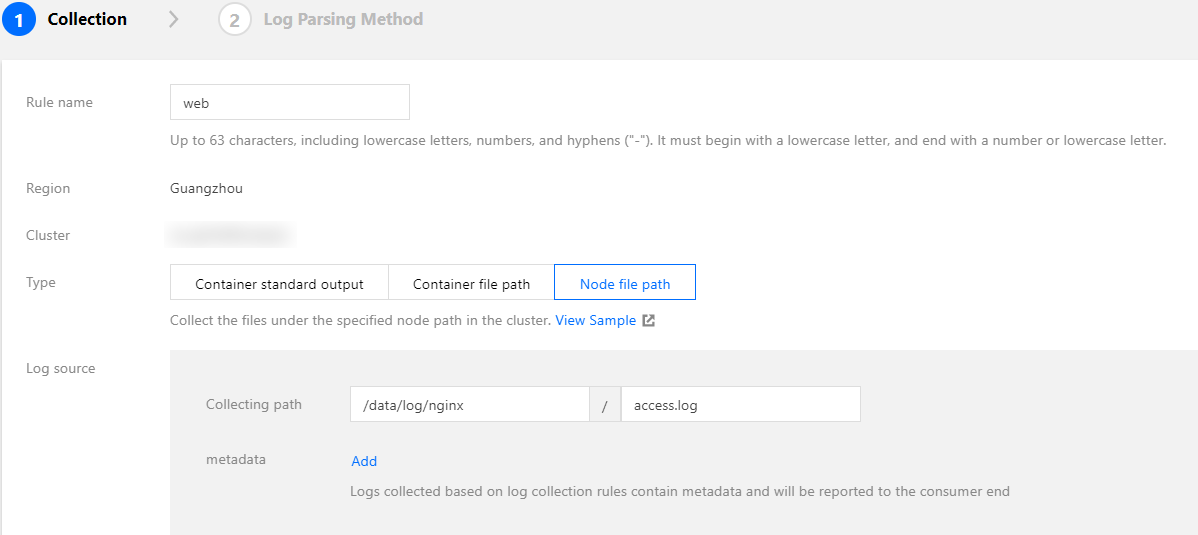

Collecting files on the host

If businesses need to write logs into log files and you hope to retain the original log files as a backup after the container stops to avoid complete log loss in the event of collection exceptions, you can mount a hostPath to the log file path. This way, log files will be stored in the specified directory on the host and these log files will not be cleared after the container stops.

As log files are not automatically cleared, the issue of repeated collection may occur if a pod is scheduled to another container and then scheduled back to the original container causing log files to be written into the same path. In that case, there are two collection scenarios:

- Same file name:

For example, assume the fixed file path is/data/log/nginx/access.log. In this case, repeated collection will not occur, because the collector will remember the time point of previously collected log files and collect only increments. - Different file names:

Usually, the log frameworks used by businesses automatically perform log rotation periodically, generally on a daily basis, and automatically rename old log files and add the timestamp suffix. If the collection rules use*as the wildcard character to match log file names, repeated collection may occur. After the log framework renames log files, the collector will mistakenly think it has found new log files, so it will collect the files again.

The following figure shows a sample collection configuration. For more information on configuration, see Collecting file logs in specified node paths.Note:Usually, repeated collection will not occur. If the log framework automatically performs rotation, we recommend that the wildcard character

*not be used to match log files.

Log Output

TKE log collection is integrated with CLS on the cloud, and log data is reported to CLS. CLS manages logs based on logsets and log topics. A logset is a project management unit of CLS and can include multiple log topics. Usually, the logs of the same business are put in the same logset, and applications or services of the same type in the same business use the same log topic.

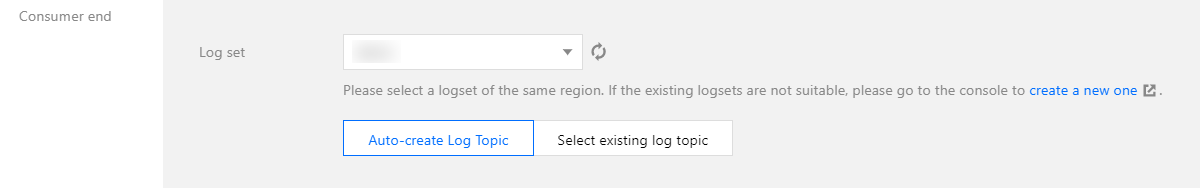

In TKE, log collection rules have a one-to-one correspondence with log topics. When selecting the consumer during the creation of TKE log collection rules, you need to specify the logset and log topic. Logsets are usually created in advance, and you can choose to automatically create log topics. See the figure below:

After a log topic is automatically created, you can go to Logset Management, open the details page of the corresponding logset, and rename the log topic to make it easier to find in future searches.

Configuring Log Format Parsing

When creating a log collection rule, you need to configure the log parsing format to facilitate future searches. Please refer to the following sections to complete configuration based on the actual situation.

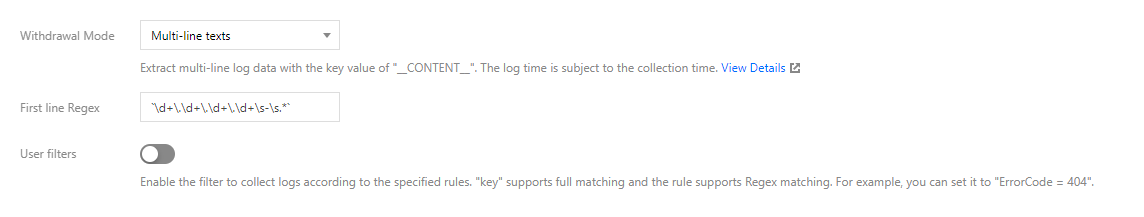

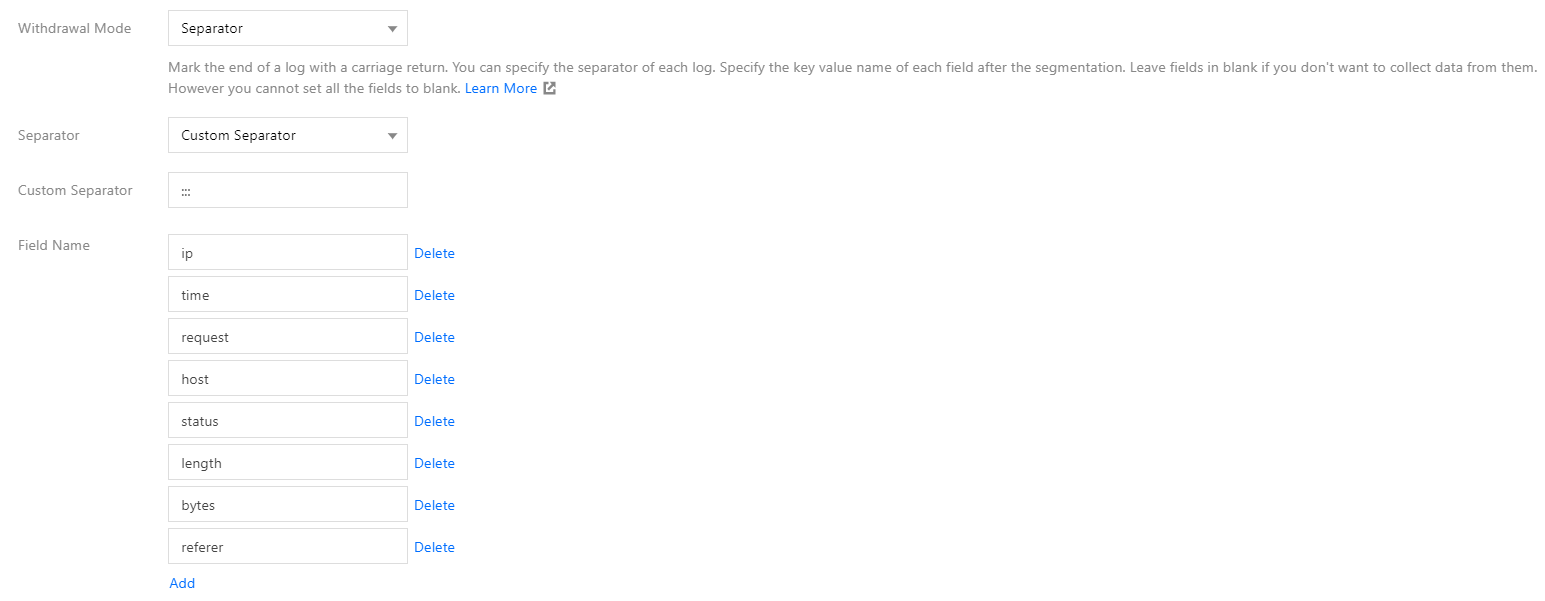

Selecting an extraction mode

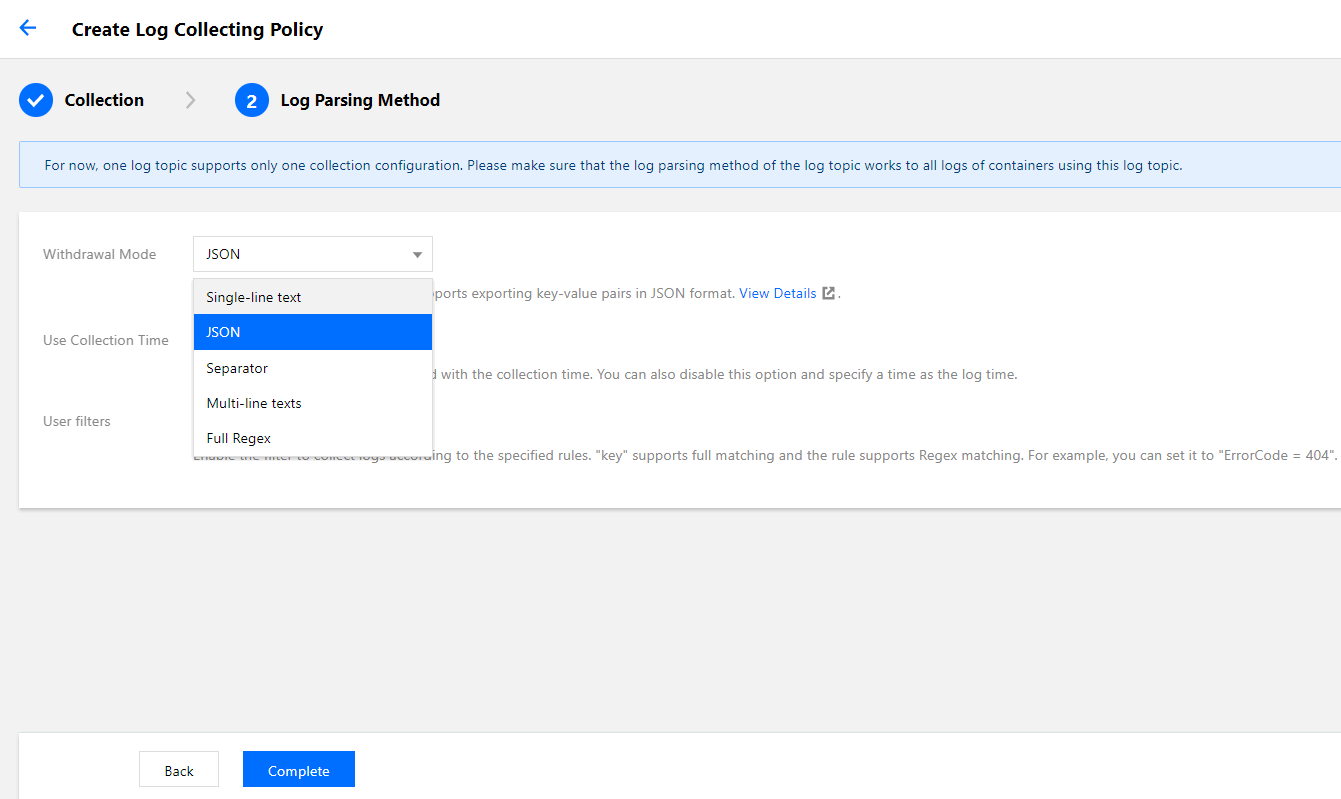

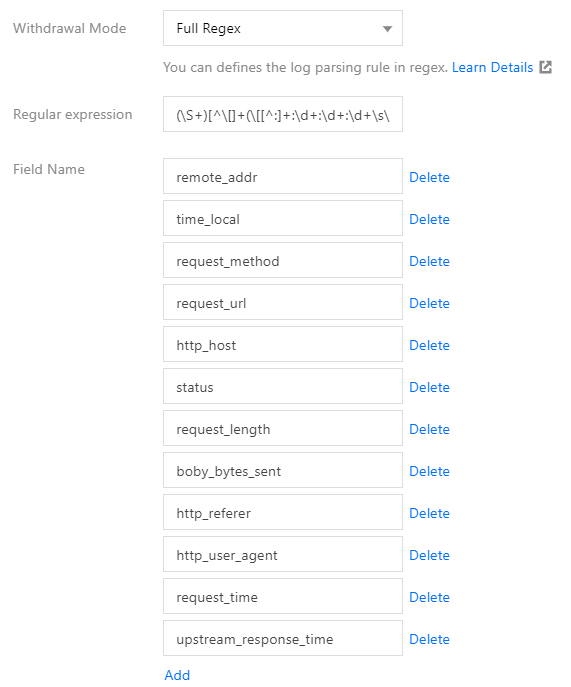

TKE supports five extraction modes: single-line text, JSON, separator, multi-line text, and full RegEx, as shown in the figure below:

You can only select JSON mode when logs are output in JSON format, in which case this mode is recommended. In JSON format, the logs are already structured, allowing CLS to extract the JSON key as the field name and value as the corresponding key value. This means you do not have to configure complex matching rules based on the business log output format. A sample of such logs is as follows:

{"remote_ip":"10.135.46.111","time_local":"22/Jan/2019:19:19:34 +0800","body_sent":23,"responsetime":0.232,"upstreamtime":"0.232","upstreamhost":"unix:/tmp/php-cgi.sock","http_host":"127.0.0.1","method":"POST","url":"/event/dispatch","request":"POST /event/dispatch HTTP/1.1","xff":"-","referer":"http://127.0.0.1/my/course/4","agent":"Mozilla/5.0 (Windows NT 10.0; WOW64; rv:64.0) Gecko/20100101 Firefox/64.0","response_code":"200"}

Configuring the content to be filtered out

You can choose to filter out useless log information to lower costs.

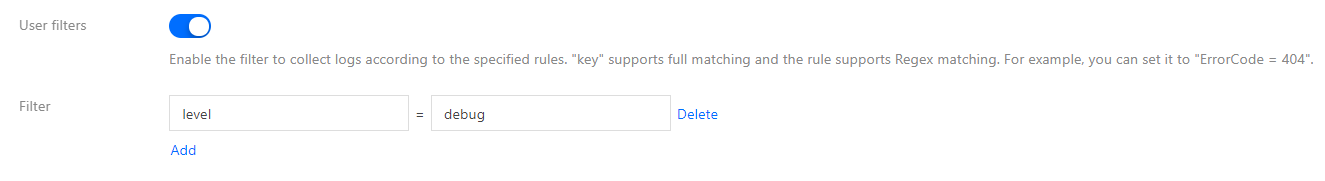

- If you use the JSON, separator, or full RegEx extraction mode, the log content is structured, and you can specify fields to perform regular expression matching for the log content to be retained, as shown in the figure below:

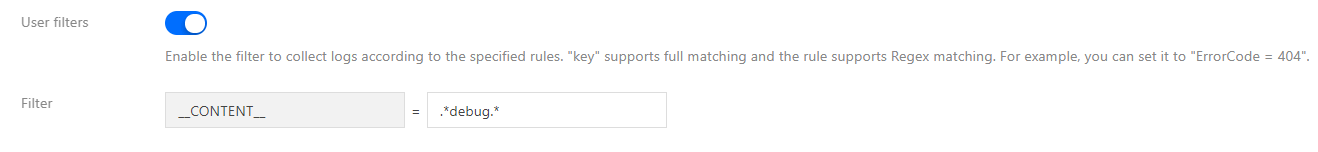

- If you use the single-line text or multi-line text extraction mode, the log content is not structured, so you cannot specify fields for filtering. Usually, you can use regular expressions to perform fuzzy matching on the full log content to be retained, as shown in the figure below:

Note:

The content should be matched using a regular expression, instead of perfect match. For example, to retain only the domain name

a.test.comin a log, the expression for matching should bea\.test\.com, instead ofa.test.com.

Customizing log timestamps

Each log should contain a timestamp used mainly for searching. This allows users to select a time period during searches. By default, the log timestamp is determined by the collection time, but you can customize it by selecting a certain field as the timestamp. This can allow for more precise searches. For example, assume that a service has been running for some time before you create a collection rule. If you do not set a custom time format, the timestamps of old logs will be set to the current time during collection, resulting in inaccurate timestamps.

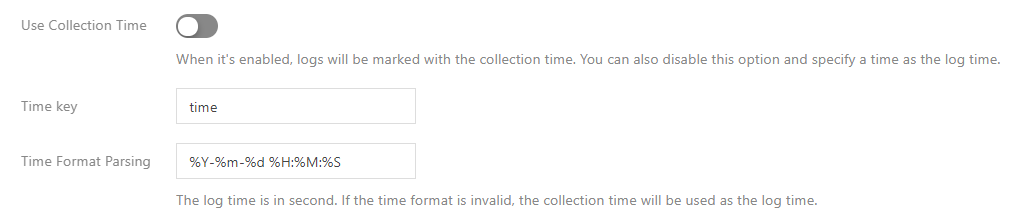

As the single-line text and multi-line text extraction modes do not structure log content, no field can be specified as the timestamp, which means these two modes do not support this feature. Other extraction modes support this feature. You need to disable "Use collection time", select a field name as the timestamp, and configure the time format. For example, assuming that the time field is used as the timestamp and the time value of a log is 2020-09-22 18:18:18, you can set the time format as: %Y-%m-%d %H:%M:%S, as shown in the figure below:

Note:The CLS timestamps currently support precision to the second. If the timestamp field of a business log is precise to the millisecond, you cannot use custom timestamps and can only use the default timestamp determined by the collection time.

For more information on the time format configuration, see Configuring the Time Format.

Log Query

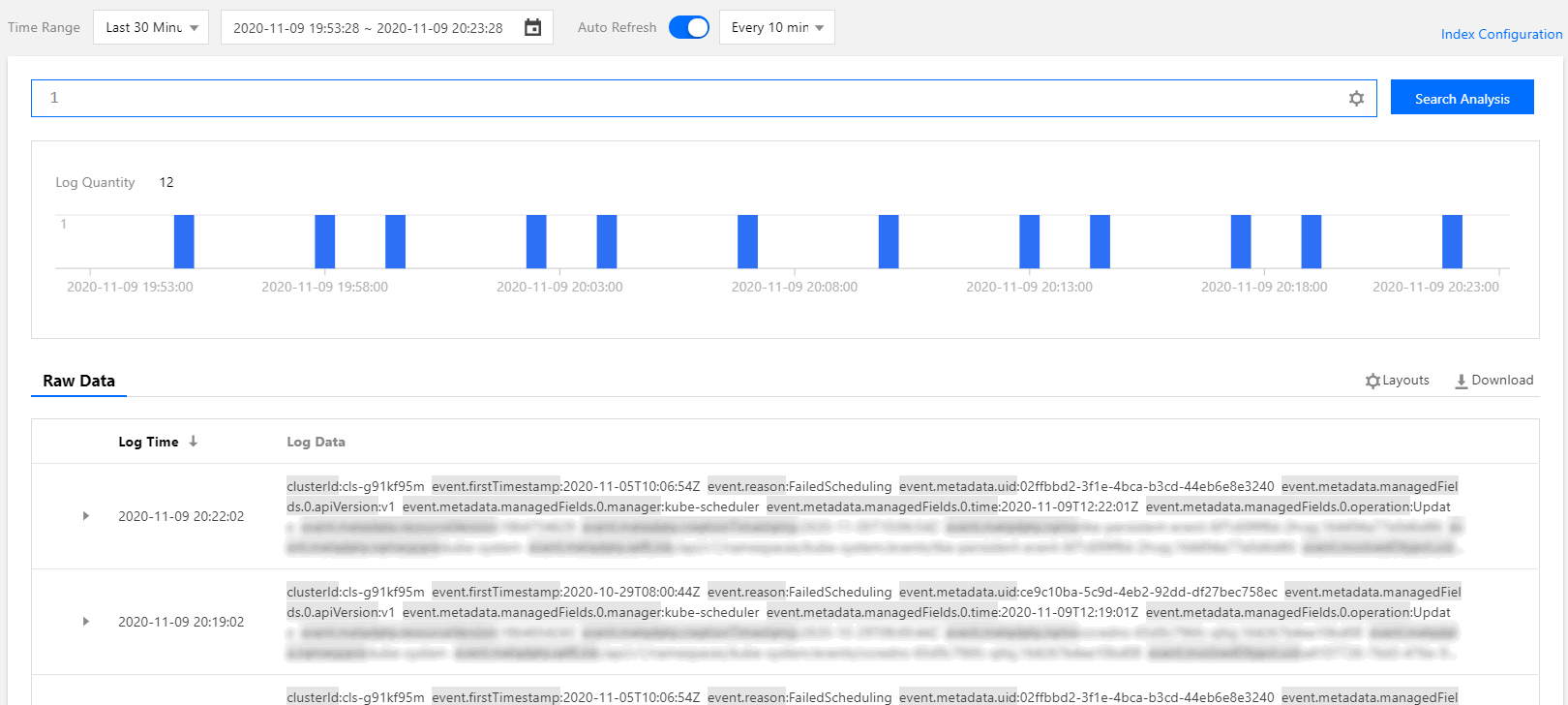

After log collection rules are configured, the collector will automatically start collecting logs and report them to CLS. You can query logs in Search Analysis on the CLS console. After an index is enabled, the Lucene syntax is supported. There are three types of indexes, as follows:

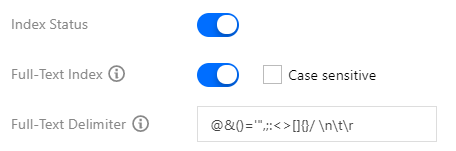

- Full-text index: used for fuzzy search. You do not need to specify a field. See the figure below:

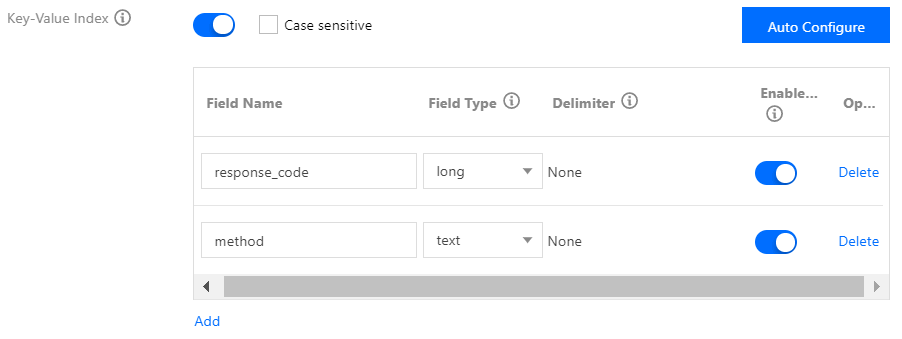

- Key value index: index for structured log content. You can specify log fields to search. See the figure below:

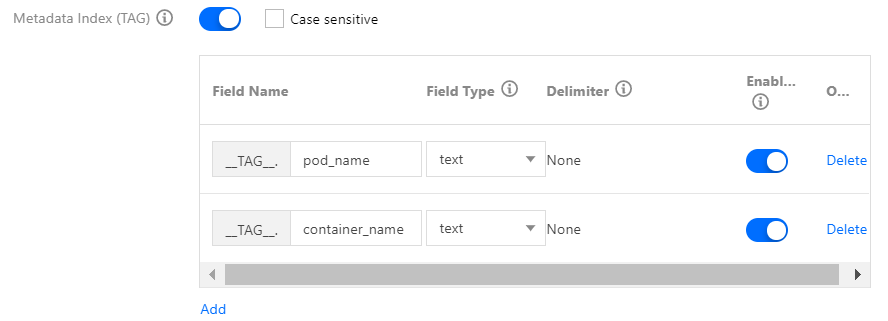

- Metadata field index: when some extra fields, such as pod name and namespace, are automatically attached during log reporting, this index allows you to specify these fields during search. See the figure below:

The following figure shows a query sample:

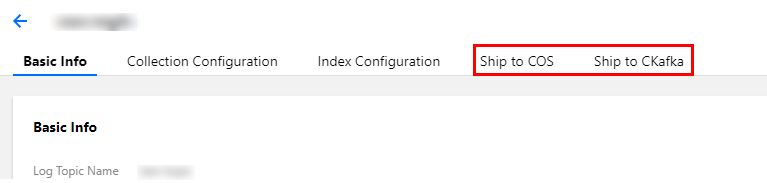

Publishing Logs to COS and Ckafka

CLS allows logs to be published to COS and the message queue CKafka. You can set it in the log topic, as shown in the figure below:

This is applicable to the following scenarios:

- Scenarios where long-term archiving and storage of log data are required. The logset stores log data for seven days by default. You can adjust the duration. The larger the data volume, the higher the cost. Usually, data is stored for a few days. If you need to store logs for a longer period, you can publish log data to COS for low-cost storage.

- Scenarios where further processing (such as offline calculation) of logs is required. You can publish log data to COS or Ckafka to be consumed and processed by other programs.

References

- TKE: Log Collection User Guide

- CLS: Configuring the Time Format

- CLS: Shipping to COS

- CLS: Shipping to Ckafka

Ya

Ya

Tidak

Tidak

Apakah halaman ini membantu?