Using VPA to Realize Pod Scaling up and Scaling down in TKE

Last updated: 2021-06-09 17:46:36

Overview

Kubernetes Vertical Pod Autoscaler (VPA) automatically adjusts the CPU and memory reserved for a Pod (its Requests), which improves cluster resource utilization and frees CPU and memory for other Pods. This document describes how to use the community edition of VPA in TKE to scale Pods up and down.

Use Cases

The auto-scaling feature of VPA makes TKE workloads flexible and adaptive. When the business load increases sharply, VPA can quickly raise the Request of the container within the range set by the user. When the business load decreases, VPA can lower the Request to match actual needs and save computing resources. The entire process is automated and needs no manual intervention. VPA suits scenarios that require rapid scale-up and scenarios that scale stateful applications. VPA can also be used only to recommend a more reasonable Request, which improves the resource utilization of the container while ensuring that the container has sufficient resources.

VPA Strengths

Compared with Horizontal Pod Autoscaler (HPA), VPA has the following advantages:

- VPA scales without changing the number of Pod replicas, so scaling up is faster.

- VPA can scale stateful applications, whereas HPA is not well suited to scaling out stateful applications.

- If the Request is set too high, cluster resource utilization stays low even after HPA has scaled the workload in to a single Pod. In this case, you can use VPA to scale the Pod down and improve cluster resource utilization.

VPA Limits

Note: The community edition of VPA is still in testing. Use this feature with caution. We recommend setting "updateMode" to "Off" so that VPA does not change the Request automatically. You can still view the recommended Request for the workload in the VPA object.

- You can use the VPA to update the resource configurations of the running Pods. This feature is in testing. The configuration updates will lead to Pod restart and rebuilding, and the Pods may be scheduled to other nodes.

- The VPA does not evict the Pods that are not run under a controller. For these Pods, the `Auto` mode is equivalent to the `Initial` mode.

- You cannot run VPA simultaneously with the HPA that uses the CPU and memory as metrics. If the HPA uses other metrics except CPU and memory, you can run the VPA with the HPA at the same time. For details, see Using Custom Metrics for Auto Scaling in TKE.

- The VPA uses an Admission Webhook as its admission controller. If there are other Admission Webhooks in the cluster, you need to ensure that they do not conflict with the Admission Webhooks of the VPA. The execution sequence of admission controllers is defined in the configuration parameters of the API Server.

- The VPA can react to most Out of Memory (OOM) events.

- The VPA performance has not been tested in large-scale clusters.

- The recommended value of Pod resource Request set by the VPA may exceed the upper limit of the available resources (such as node resources, idle resources, and resource quotas). In this case, the Pod may go to Pending and cannot be scheduled. This can be partly addressed by using the VPA together with the Cluster Autoscaler.

- Multiple VPA resources matching the same pod have undefined behavior.

For more limitations on VPA, see VPA Known limitations.

Prerequisites

- You have created a TKE cluster.

- You have connected to the cluster with the kubectl command line tool. For how to connect to a cluster, see Connecting to a Cluster.

Directions

Deploying VPA

- Log in to the CVM in the cluster.

- You can connect to a TKE cluster from a local client using the command line tool kubectl.

- Run the following command to clone the kubernetes/autoscaler from GitHub Repository.

sh git clone https://github.com/kubernetes/autoscaler.git - Run the following command to switch to the

vertical-pod-autoscalerdirectory.cd autoscaler/vertical-pod-autoscaler/ - (Optional) If you have already deployed another version of VPA, run the following command to remove it. Otherwise an exception may occur.

./hack/vpa-down.sh - Run the following command to deploy VPA related components to your cluster.

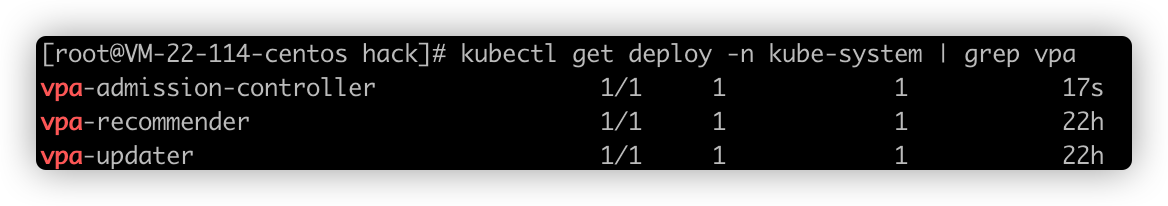

./hack/vpa-up.sh - Run the following command to verify whether the VPA component is successfully created.

kubectl get deploy -n kube-system | grep vpa
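The verification step can also be scripted. The following is a minimal sketch, assuming the three Deployments are named vpa-admission-controller, vpa-recommender, and vpa-updater (the component names used in the Troubleshooting section) and that the listing shows one Deployment per line. The function name `check_vpa_components` is hypothetical.

```shell
# Reads `kubectl get deploy` output on stdin and reports every VPA
# component that is not listed. Returns 0 only if all three are present.
check_vpa_components() {
  listing=$(cat)
  missing=0
  for name in vpa-admission-controller vpa-recommender vpa-updater; do
    if ! printf '%s\n' "$listing" | grep -q "^$name "; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

# Usage against a live cluster (not executed here):
#   kubectl get deploy -n kube-system | check_vpa_components && echo "VPA is deployed"
```

The helper only checks that the Deployments exist. It does not check that their Pods are ready, so the READY column of the listing is still worth a look.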

Sample 1: using VPA to obtain the recommended value of Request

Note:

- We do not recommend using VPA to automatically update Request in a production environment.

- You can use VPA to view the recommended value of Request and manually trigger the update as needed.

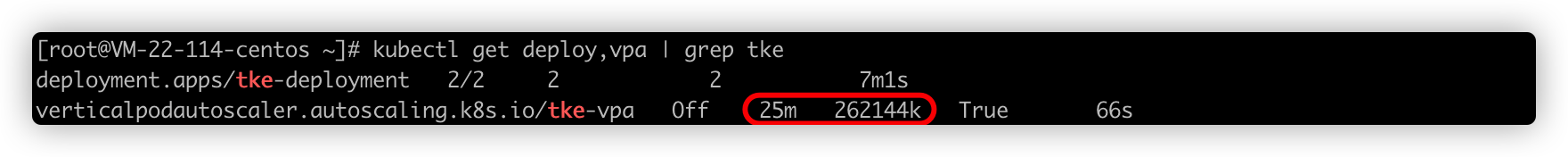

In this sample, you will create a VPA object with `updateMode` set to `Off` and a Deployment with two Pods, each running one container. After the Pods are created, VPA analyzes the CPU and memory requirements of the container and records the recommended Request in the status field. VPA does not automatically update the resource requests of the running containers.

Run the following command in kubectl to generate a VPA object named tke-vpa, pointing to a Deployment named tke-deployment:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: tke-vpa
spec:
  targetRef:
    apiVersion: "apps/v1"
    kind: Deployment
    name: tke-deployment
  updatePolicy:
    updateMode: "Off"
EOF
```

Run the following command to generate a Deployment object named tke-deployment:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tke-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: tke-deployment
  template:
    metadata:
      labels:
        app: tke-deployment
    spec:
      containers:
      - name: tke-container
        image: nginx
EOF
```

The generated Deployment object is show as follows:

Note:The

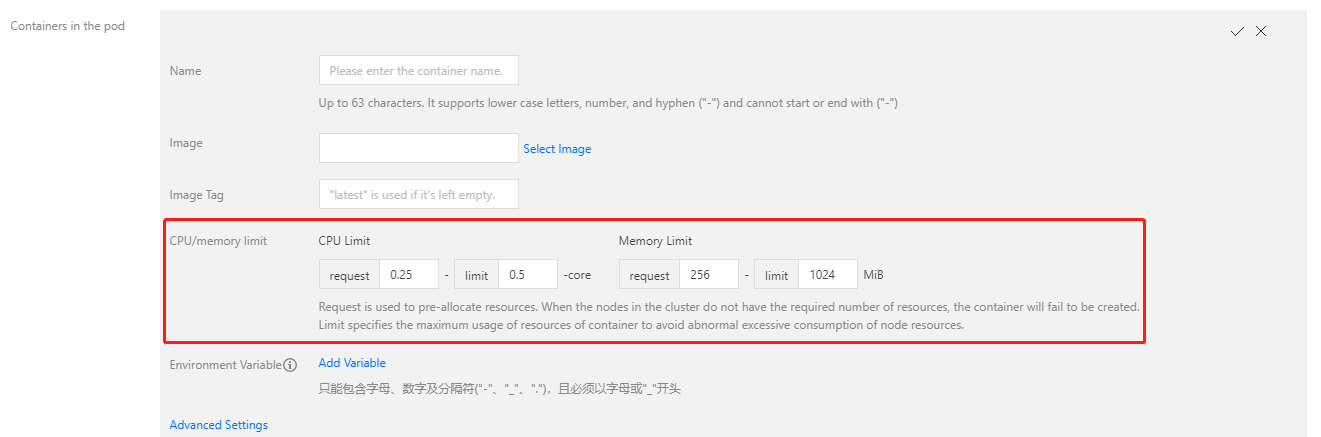

tke-deploymentcreated above does not set the Request of CPU or memory, and the Qos of the Pod is set to BestEffort. In this case, Pod is easy to be evicted. We recommend that you set the Request and Limit when creating the Deployment of the application. If you create a workload via the TKE console, the default Request and Limit of each container will be automatically set.
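The QoS class mentioned in the note can be read from the Pod status. The following is a small sketch, assuming the Pods carry the `app=tke-deployment` label from the sample Deployment. The function name `pod_qos` is hypothetical, while `.status.qosClass` is a standard Pod status field.

```shell
# Prints "<pod-name> <qosClass>" for every Pod that carries the label app=<value>.
pod_qos() {
  kubectl get pods -l "app=$1" \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.qosClass}{"\n"}{end}'
}

# Usage against a live cluster (not executed here):
#   pod_qos tke-deployment
```

For the sample Deployment, which sets neither Request nor Limit, the reported class should be BestEffort, as the note states.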

Run the following command to view the recommended Requests of CPU and memory by VPA:

```shell
kubectl get vpa tke-vpa -o yaml
```

The execution results are as follows:

```yaml
...
  recommendation:
    containerRecommendations:
    - containerName: tke-container
      lowerBound:
        cpu: 25m
        memory: 262144k
      target: # Recommended value
        cpu: 25m
        memory: 262144k
      uncappedTarget:
        cpu: 25m
        memory: 262144k
      upperBound:
        cpu: 1771m
        memory: 1851500k
```

The CPU and memory corresponding to target are the recommended Requests. You can remove the previous Deployment and create a new Deployment with the recommended Request.

| Field | Description |
|---|---|
| lowerBound | The minimum value recommended. The use of a Request smaller than this value may have a major impact on performance or availability. |
| target | Recommended value. The VPA calculates the most appropriate Request. |
| uncappedTarget | The latest recommended value. It is only based on the actual resource usage and does not consider the recommended value range of the container set in .spec.resourcePolicy.containerPolicies. The uncappedTarget may differ from the recommended lowerBound and upperBound. This field is only used to indicate the status and will not affect the actual resource allocation. |
| upperBound | The maximum value recommended. The use of a Request larger than this value may cause a resource waste. |
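Instead of reading the YAML by eye, the `target` values can be extracted with a JSONPath query and written back to the Deployment. The following is a minimal sketch, assuming the recommendation sits under `.status.recommendation` of the VPA object and that the first entry of `containerRecommendations` is the container of interest. The function names are hypothetical. Updating the Deployment in place with `kubectl set resources` is an alternative to removing and recreating it, and it triggers a rolling update of the Pods.

```shell
# Prints one field (cpu or memory) of the first container's target recommendation.
vpa_target() {   # usage: vpa_target VPA_NAME cpu|memory
  kubectl get vpa "$1" \
    -o jsonpath="{.status.recommendation.containerRecommendations[0].target.$2}"
}

# Copies the target recommendation into the Requests of a Deployment.
apply_vpa_target() {   # usage: apply_vpa_target VPA_NAME DEPLOYMENT_NAME
  cpu=$(vpa_target "$1" cpu)
  memory=$(vpa_target "$1" memory)
  kubectl set resources deployment "$2" --requests="cpu=$cpu,memory=$memory"
}

# Usage against a live cluster (not executed here):
#   vpa_target tke-vpa cpu
#   apply_vpa_target tke-vpa tke-deployment
```

Because `updateMode` is "Off" in this sample, nothing changes until you run the second function yourself, which keeps the update a manual decision.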

Sample 2: Disabling a specific container

If a Pod has multiple containers, for example an application container and a secondary (sidecar) container, you can stop VPA from recommending a Request for the secondary container to save cluster resources.

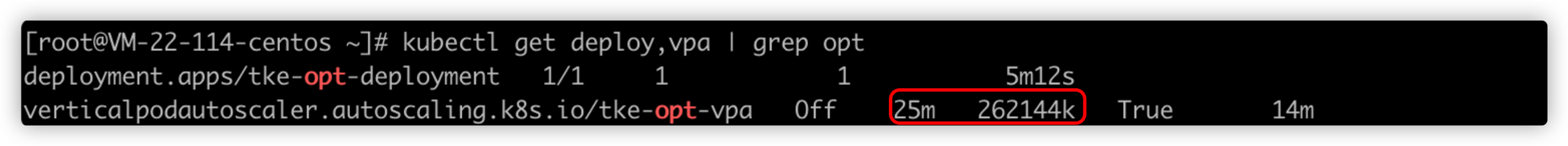

In this sample, you will create a VPA with a specific container disabled, and create a Deployment with a Pod, and the Pod contains two containers. After the Pod is created, VPA only creates and calculates the recommended value for one container, and stops recommending Request for the other container.

Run the following command with kubectl to generate a VPA object named tke-opt-vpa, pointing to a Deployment named tke-opt-deployment:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: tke-opt-vpa
spec:
  targetRef:
    apiVersion: "apps/v1"
    kind: Deployment
    name: tke-opt-deployment
  updatePolicy:
    updateMode: "Off"
  resourcePolicy:
    containerPolicies:
    - containerName: tke-opt-sidecar
      mode: "Off"
EOF
```

Note: In the `.spec.resourcePolicy.containerPolicies` of the VPA, the `mode` of `tke-opt-sidecar` is set to "Off", and VPA will not calculate and recommend a new Request for `tke-opt-sidecar`.

Run the following command to generate a Deployment object named tke-opt-deployment:

```sh
cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tke-opt-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tke-opt-deployment
  template:
    metadata:
      labels:
        app: tke-opt-deployment
    spec:
      containers:
      - name: tke-opt-container
        image: nginx
      - name: tke-opt-sidecar
        image: busybox
        command: ["sh","-c","while true; do echo TKE VPA; sleep 60; done"]
EOF
```

The generated Deployment object is shown as follows:

Run the following command to view the recommended Requests of CPU and memory by VPA:

```shell
kubectl get vpa tke-opt-vpa -o yaml
```

The execution results are as follows:

```yaml
...
  recommendation:
    containerRecommendations:
    - containerName: tke-opt-container
      lowerBound:
        cpu: 25m
        memory: 262144k
      target:
        cpu: 25m
        memory: 262144k
      uncappedTarget:
        cpu: 25m
        memory: 262144k
      upperBound:
        cpu: 1595m
        memory: 1667500k
```

In the execution result, there is only the recommended value of tke-opt-container, and no recommended value of tke-opt-sidecar.

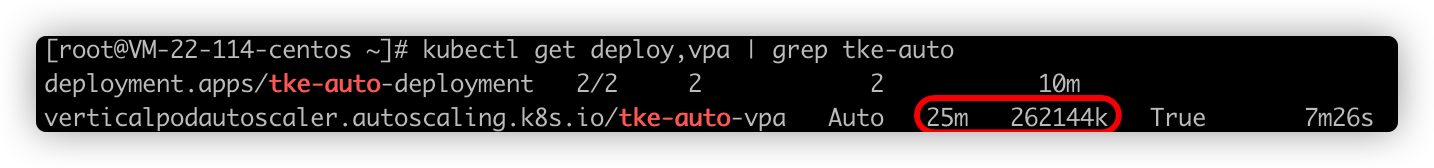
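Besides `mode`, an entry in `.spec.resourcePolicy.containerPolicies` can also bound the recommendation with `minAllowed` and `maxAllowed`, which is the "recommended value range" that `uncappedTarget` ignores. The following is a sketch under stated assumptions: the bounds are illustrative values, not tuned ones, and the file name is hypothetical. The manifest reuses the name tke-opt-vpa so that applying it updates the existing object rather than creating a second VPA that matches the same Pod.

```shell
# Writes a VPA manifest that keeps recommendations for tke-opt-container
# inside a fixed range. The bounds below are illustrative assumptions.
cat <<'EOF' > tke-opt-vpa-bounded.yaml
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: tke-opt-vpa
spec:
  targetRef:
    apiVersion: "apps/v1"
    kind: Deployment
    name: tke-opt-deployment
  updatePolicy:
    updateMode: "Off"
  resourcePolicy:
    containerPolicies:
    - containerName: tke-opt-container
      minAllowed:
        cpu: 50m
        memory: 100Mi
      maxAllowed:
        cpu: 500m
        memory: 1Gi
    - containerName: tke-opt-sidecar
      mode: "Off"
EOF

# Apply it to a cluster (not executed here):
#   kubectl apply -f tke-opt-vpa-bounded.yaml
```

With these bounds in place, `target` stays within the configured range, while `uncappedTarget` continues to show the value based on actual usage alone.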

Sample 3: updating the Request automatically

Note: Automatically updating the resources of running Pods is an experimental feature of VPA. We recommend that you do not use this feature in a production environment.

In this sample, you will create a VPA that automatically adjusts the CPU and memory Requests, and a Deployment with two Pods. Each Pod sets resource Requests and Limits.

Run the following command with kubectl to generate a VPA object named tke-auto-vpa, pointing to a Deployment named tke-auto-deployment:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: tke-auto-vpa
spec:
  targetRef:
    apiVersion: "apps/v1"
    kind: Deployment
    name: tke-auto-deployment
  updatePolicy:
    updateMode: "Auto"
EOF
```

Note: The `updateMode` field of this VPA is set to `Auto`, which means that the VPA can update the CPU and memory Requests during the life cycle of the Pod. VPA can remove the Pod, adjust the CPU and memory Requests, and then rebuild a Pod.

Run the following command to generate a Deployment object named tke-auto-deployment:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tke-auto-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: tke-auto-deployment
  template:
    metadata:
      labels:
        app: tke-auto-deployment
    spec:
      containers:
      - name: tke-container
        image: nginx
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
          limits:
            cpu: 200m
            memory: 200Mi
EOF
```

Note: The Deployment created above sets both the Request and the Limit of each resource. In this case, VPA recommends not only the Request but also the Limit, which it derives from the initial ratio of Request to Limit. For example, the initial ratio of the CPU Request to the CPU Limit in the YAML is 100m:200m, that is 1:2, so the Limit recommended by VPA is twice the Request recommended in the VPA object.
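The ratio rule in the note can be checked with a little arithmetic. The following sketch uses a hypothetical helper, `scaled_limit`, and the values of this sample: an initial Request:Limit of 100:200 for both CPU and memory, and recommended Requests of 25m CPU and 262144k memory (262,144,000 bytes, which equals 250Mi).

```shell
# scaled_limit ORIG_REQUEST ORIG_LIMIT NEW_REQUEST
# Prints NEW_REQUEST scaled by the original Limit:Request ratio.
# All three values must be integers in the same unit.
scaled_limit() {
  echo $(( $3 * $2 / $1 ))
}

# CPU in millicores: 100m:200m initially, 25m recommended.
echo "cpu limit: $(scaled_limit 100 200 25)m"        # cpu limit: 50m

# Memory in Mi: 100Mi:200Mi initially, 250Mi recommended.
echo "memory limit: $(scaled_limit 100 200 250)Mi"   # memory limit: 500Mi
```

These results agree with the `limits` of 50m CPU and 500Mi memory in the Pod output of this sample.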

The generated Deployment object is shown as follows:

Run the following command to obtain the detailed information of the running Pod:

```sh
kubectl get pod pod-name -o yaml
```

The execution result is shown below. VPA modified the original Request and Limits to the recommended value of VPA, and maintained the initial ratio of Request and Limits. At the same time, an annotation that recorded the updates is generated:

```yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    ...
    vpaObservedContainers: tke-container
    vpaUpdates: 'Pod resources updated by tke-auto-vpa: container 0: memory request, cpu request'
  ...
spec:
  containers:
    ...
    resources:
      limits: # The new Request and Limits will maintain the initial ratio
        cpu: 50m
        memory: 500Mi
      requests:
        cpu: 25m
        memory: 262144k
    ...
```

Run the following command to obtain the detailed information of the relevant VPA:

```sh
kubectl get vpa tke-auto-vpa -o yaml
```

The execution results are as follows:

```yaml
...
  recommendation:
    containerRecommendations:
    - containerName: tke-container
      lowerBound:
        cpu: 25m
        memory: 262144k
      target:
        cpu: 25m
        memory: 262144k
      uncappedTarget:
        cpu: 25m
        memory: 262144k
      upperBound:
        cpu: 101m
        memory: 262144k
```

`target` indicates that the container runs best when the CPU and memory Requests are 25m and 262144k respectively.

VPA uses the recommended values of lowerBound and upperBound to decide whether to evict a Pod and replace it with a new Pod. If the Pod’s Request is smaller than the lower limit or larger than the upper limit, VPA will remove the Pod and replace it with a Pod with a recommended value.
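The range check described above can be written out as a small function. This is a simplified sketch of that one stated rule only, and the actual VPA updater may take further conditions into account. The function name `needs_replacement` is hypothetical. The bounds 25 and 101 are the CPU `lowerBound` and `upperBound` from the output above, in millicores, and the three Request values are made up for illustration.

```shell
# needs_replacement REQUEST LOWER_BOUND UPPER_BOUND
# Prints "replace" when REQUEST lies outside [LOWER_BOUND, UPPER_BOUND],
# otherwise "keep". Values are integers in one unit, such as CPU millicores.
needs_replacement() {
  if [ "$1" -lt "$2" ] || [ "$1" -gt "$3" ]; then
    echo "replace"
  else
    echo "keep"
  fi
}

needs_replacement 200 25 101   # above upperBound: replace
needs_replacement 50 25 101    # inside the range: keep
needs_replacement 10 25 101    # below lowerBound: replace
```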

Troubleshooting

1. An error occurs when running the vpa-up.sh script.

Errors

```shell
ERROR: Failed to create CA certificate for self-signing. If the error is "unknown option -addext", update your openssl version or deploy VPA from the vpa-release-0.8 branch.
```

Solutions

- If you have not run the command through the CVM in the cluster, we recommend that you download the Autoscaler project in the CVM and deploy VPA. If you need to connect the cluster to your CVM, see Connecting to a Cluster.

- If the errors still exist, please check whether the following problems exist:

  - Check whether the `openssl` version of the cluster CVM is v1.1.1 or later.

  - Check whether the `vpa-release-0.8` branch of the Autoscaler project is used.
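The openssl version check can be scripted. The following is a minimal sketch, assuming version strings of the form `1.1.1k` or `3.0.2` as printed in the second field of `openssl version`. The function name `openssl_supports_addext` is hypothetical, and the threshold of 1.1.1 is the release that introduced the `-addext` option.

```shell
# openssl_supports_addext VERSION
# Returns 0 if VERSION is 1.1.1 or later, 1 otherwise.
openssl_supports_addext() {
  ver=${1%%[!0-9.]*}                      # drop a letter suffix: 1.1.1k -> 1.1.1
  major=$(echo "$ver" | cut -d. -f1)
  minor=$(echo "$ver" | cut -d. -f2)
  patch=$(echo "$ver" | cut -d. -f3)
  [ "${major:-0}" -gt 1 ] && return 0
  [ "${major:-0}" -eq 1 ] && [ "${minor:-0}" -gt 1 ] && return 0
  [ "${major:-0}" -eq 1 ] && [ "${minor:-0}" -eq 1 ] && [ "${patch:-0}" -ge 1 ] && return 0
  return 1
}

# Usage on the CVM (not executed here):
#   openssl_supports_addext "$(openssl version | awk '{print $2}')" \
#     && echo "openssl supports -addext" \
#     || echo "upgrade openssl or use the vpa-release-0.8 branch"
```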

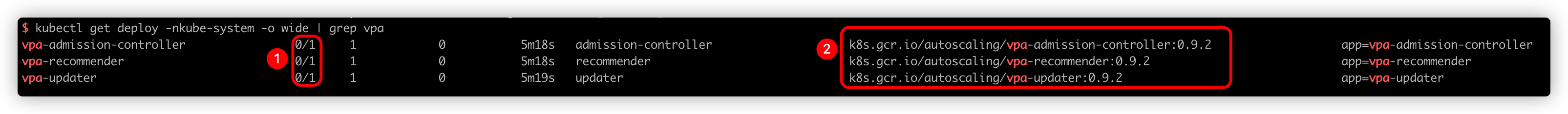

2. The VPA-related load could not be started up.

Errors

If the VPA-related load fails to start up, and the following message is generated:

Message 1: indicates that the Pods in the load fail to run.

Message 2: indicates the address of the image.

Solutions

The VPA-related workload cannot start because the images hosted in GCR cannot be downloaded. You can try the following steps to solve the problem:

- Download the images.

  Visit the "k8s.gcr.io/" image repository and download the images of vpa-admission-controller, vpa-recommender, and vpa-updater.

- Replace the image tags and push the images.

  Retag the images of vpa-admission-controller, vpa-recommender, and vpa-updater and push them to your own image repository. For how to push and upload an image, see TCR Personal Edition.

- Change the image addresses in the YAML.

  In the YAML file, update the image addresses of vpa-admission-controller, vpa-recommender, and vpa-updater to the new addresses you set.
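The download, retag, and push steps can be wrapped in one helper. The following is a minimal sketch, assuming Docker is installed on a machine that can reach the source registry and that you are already logged in to your own repository. The function name `mirror_image` is hypothetical, and both image addresses in the usage comment are placeholders.

```shell
# mirror_image SOURCE_IMAGE TARGET_IMAGE
# Pulls SOURCE_IMAGE, retags it as TARGET_IMAGE, and pushes it.
# Stops at the first step that fails.
mirror_image() {
  docker pull "$1" &&
    docker tag "$1" "$2" &&
    docker push "$2"
}

# Usage (not executed here). Take the source address from the image address
# in the error message, and use your own repository as the target:
#   mirror_image <source-registry>/vpa-recommender:<tag> <your-registry>/<namespace>/vpa-recommender:<tag>
```

Run the helper once for each of the three images, then update the image addresses in the YAML as described in the last step.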
