Deploying with Tencent Cloud EKS

Last updated: 2024-03-25 16:04:01

Elastic Kubernetes Service (EKS) is a TKE service mode that allows you to deploy workloads without purchasing any nodes. EKS is fully compatible with native Kubernetes, allowing you to purchase and manage resources natively. This service is billed based on the actual amount of resources used by containers. In addition, EKS provides extended support for Tencent Cloud products, such as storage and network products, and can ensure the secure isolation of containers. EKS is ready to use out-of-the-box.

Deploying GooseFS on Tencent Cloud EKS makes full use of the elastic computing resources of EKS to build an on-demand, pay-as-you-go COS access acceleration service that is billed by the second.

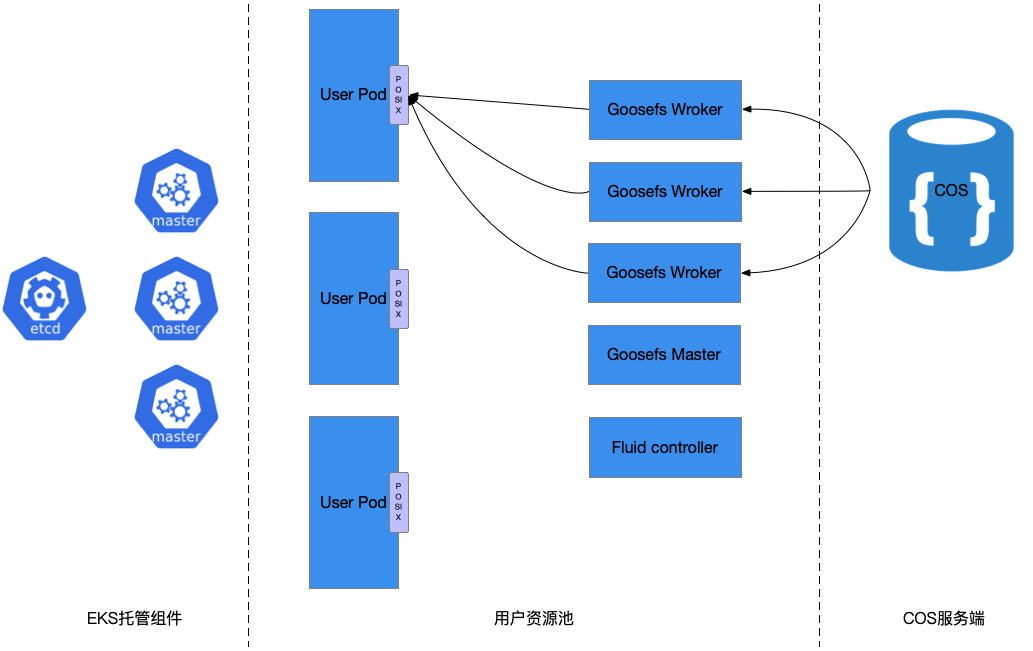

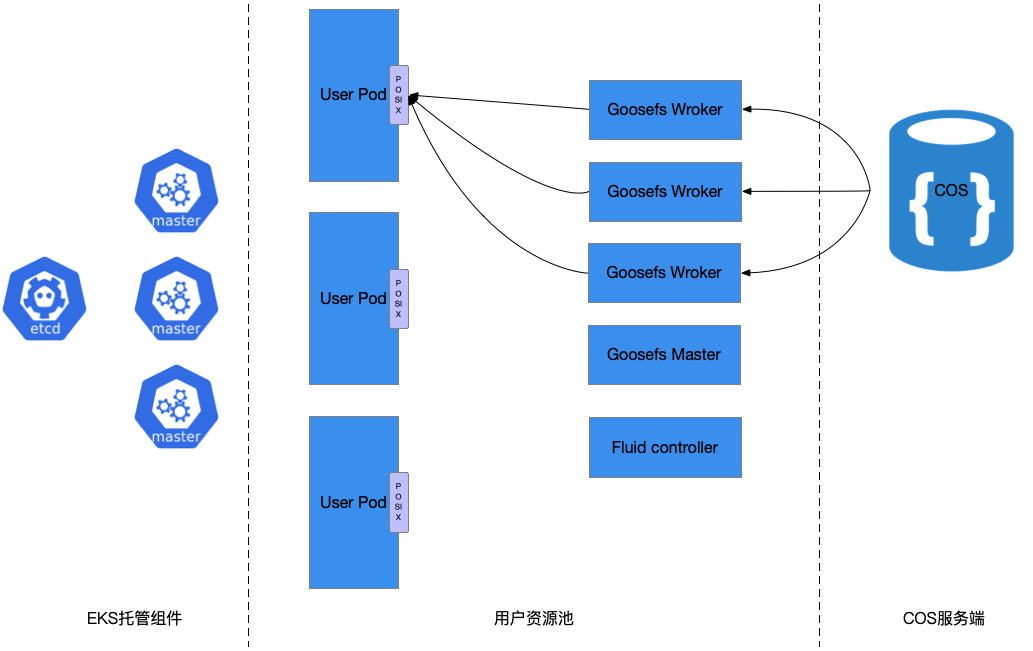

Architecture

The figure below shows the general architecture of deploying GooseFS with Tencent EKS.

As shown in the figure, the entire architecture consists of three parts: EKS Managed Components, User Resource Pool, and COS Server. User Resource Pool is mainly used to deploy GooseFS clusters, and COS Server is used as a remote storage system and can be replaced by CHDFS, a public cloud storage service. During the construction process:

- Both GooseFS Master and Worker are deployed as Kubernetes StatefulSets.

- Fluid is used to start a GooseFS cluster.

- Fuse Client is integrated into the sandbox of the User Pod.

- The cluster is used in the same way as standard Kubernetes.

Directions

Preparing the environment

1. Create an EKS cluster. For directions, see Creating a Cluster.

2. Enable cluster access and select internet access or private network access as appropriate. Refer to Connecting to a Cluster for directions.

3. Run the kubectl get ns command to make sure the cluster is available:

       -> goosefs kubectl get ns
       NAME              STATUS   AGE
       default           Active   7h31m
       kube-node-lease   Active   7h31m
       kube-public       Active   7h31m
       kube-system       Active   7h31m

4. Obtain helm. Refer to Helm docs for directions.

Installing GooseFS

1. Enter the helm install command to install a chart package and Fluid:

       -> goosefs helm install fluid ./charts/fluid-on-tke
       NAME: fluid
       LAST DEPLOYED: Tue Jul 6 17:41:20 2021
       NAMESPACE: default
       STATUS: deployed
       REVISION: 1
       TEST SUITE: None

2. View the status of pods related to fluid:

       -> goosefs kubectl -n fluid-system get pod
       NAME                                         READY   STATUS    RESTARTS   AGE
       alluxioruntime-controller-78877d9d47-p2pv6   1/1     Running   0          59s
       dataset-controller-5f565988cc-wnp7l          1/1     Running   0          59s
       goosefsruntime-controller-6c55b57cd6-hr78j   1/1     Running   0          59s

3. Create a dataset, modify the relevant variables as appropriate, and run the kubectl apply -f dataset.yaml command to apply the dataset:

       apiVersion: data.fluid.io/v1alpha1
       kind: Dataset
       metadata:
         name: ${dataset-name}
       spec:
         mounts:
         - mountPoint: cosn://${bucket-name}
           name: ${dataset-name}
           options:
             fs.cosn.userinfo.secretKey: XXXXXXX
             fs.cosn.userinfo.secretId: XXXXXXX
             fs.cosn.bucket.region: ap-${region}
             fs.cosn.impl: org.apache.hadoop.fs.CosFileSystem
             fs.AbstractFileSystem.cosn.impl: org.apache.hadoop.fs.CosN
             fs.cos.app.id: ${user-app-id}

4. Create a GooseFS cluster with the YAML below, and run kubectl apply -f runtime.yaml:

       apiVersion: data.fluid.io/v1alpha1
       kind: GooseFSRuntime
       metadata:
         name: slice1
         annotations:
           master.goosefs.eks.tencent.com/model: c6
           worker.goosefs.eks.tencent.com/model: c6
       spec:
         replicas: 6 # Number of workers. Although the controller can be expanded, GooseFS currently does not support automatic data re-balance.
         data:
           replicas: 1 # Number of GooseFS data replicas
         goosefsVersion:
           imagePullPolicy: Always
           image: ccr.ccs.tencentyun.com/cosdev/goosefs # Image and version of a GooseFS cluster
           imageTag: v1.0.1
         tieredstore:
           levels:
           - mediumtype: MEM # Supports MEM, HDD, and SSD, which represent memory, premium cloud storage, and SSD cloud storage respectively.
             path: /data
             quota: 5G # Both memory and cloud storage will take effect. The minimum capacity of cloud storage is 10 GB.
             high: "0.95"
             low: "0.7"
         properties:
           goosefs.user.streaming.data.timeout: 5s
           goosefs.job.worker.threadpool.size: "22"
           goosefs.master.journal.type: UFS # UFS or EMBEDDED. UFS for the case of only one master.
           # goosefs.worker.network.reader.buffer.size: 128MB
           goosefs.user.block.size.bytes.default: 128MB
           # goosefs.user.streaming.reader.chunk.size.bytes: 32MB
           # goosefs.user.local.reader.chunk.size.bytes: 32MB
           goosefs.user.metrics.collection.enabled: "false"
           goosefs.user.metadata.cache.enabled: "true"
           goosefs.user.metadata.cache.expiration.time: "2day"
         master:
           # A required parameter, which sets the VM specification of pods. Default value: 1c1g
           resources:
             requests:
               cpu: 8
               memory: "16Gi"
             limits:
               cpu: 8
               memory: "16Gi"
           replicas: 1
           # journal:
           #   volumeType: pvc
           #   storageClass: goosefs-hdd
           jvmOptions:
           - "-Xmx12G"
           - "-XX:+UnlockExperimentalVMOptions"
           - "-XX:ActiveProcessorCount=8"
           - "-Xms10G"
         worker:
           jvmOptions:
           - "-Xmx28G"
           - "-Xms28G"
           - "-XX:+UnlockExperimentalVMOptions"
           - "-XX:MaxDirectMemorySize=28g"
           - "-XX:ActiveProcessorCount=8"
           resources:
             requests:
               cpu: 16
               memory: "32Gi"
             limits:
               cpu: 16
               memory: "32Gi"
         fuse:
           jvmOptions:
           - "-Xmx4G"
           - "-Xms4G"
           - "-XX:+UseG1GC"
           - "-XX:MaxDirectMemorySize=4g"
           - "-XX:+UnlockExperimentalVMOptions"
           - "-XX:ActiveProcessorCount=24"

5. Check the statuses of the cluster and PVC:

       -> goosefs kubectl get pod
       NAME              READY   STATUS    RESTARTS   AGE
       slice1-master-0   2/2     Running   0          8m8s
       slice1-worker-0   2/2     Running   0          8m8s
       slice1-worker-1   2/2     Running   0          8m8s
       slice1-worker-2   2/2     Running   0          8m8s
       slice1-worker-3   2/2     Running   0          8m8s
       slice1-worker-4   2/2     Running   0          8m8s
       slice1-worker-5   2/2     Running   0          8m8s
       -> goosefs kubectl get pvc
       slice1   Bound   default-slice1   100Gi   ROX   fluid   7m37s # PVC and dataset share the same name. 100Gi is a dummy value used as a placeholder.

Loading data

To preload data, you only need to create a DataLoad resource from the YAML below (for example, by running kubectl apply -f dataload.yaml):

    apiVersion: data.fluid.io/v1alpha1
    kind: DataLoad
    metadata:
      name: slice1-dataload
    spec:
      # Configuring the dataset that needs to load data
      dataset:
        name: slice1
        namespace: default
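The DataLoad resource in this section preloads the whole dataset. To warm up only part of it, a variant along the following lines may work. This is a sketch, not a confirmed configuration: `loadMetadata` and `target` are fields of the upstream Fluid DataLoad resource, and it is an assumption that the fluid-on-tke chart used here supports them. The resource name and the path `/hot-data` are hypothetical.

```yaml
apiVersion: data.fluid.io/v1alpha1
kind: DataLoad
metadata:
  name: slice1-dataload-partial   # hypothetical name
spec:
  dataset:
    name: slice1
    namespace: default
  # Upstream Fluid field; assumed to be supported by this chart.
  loadMetadata: true
  # Upstream Fluid field; restricts preloading to the listed paths.
  target:
  - path: /hot-data               # hypothetical path inside the dataset
    replicas: 1
```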

After creating, you can check the status via kubectl get dataload slice1-dataload.

Mounting PVC to a service pod

The service container mounts the PVC in the same way as any other Kubernetes PVC. Refer to the Kubernetes documentation for details.
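As a minimal sketch of such a mount, the Pod below attaches the PVC named slice1 (the PVC shares its name with the dataset created earlier) as a read-only volume, matching the ROX access mode shown in the kubectl get pvc output. The pod name, container image, and mount path are hypothetical placeholders; substitute your own service container.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: goosefs-demo-app        # hypothetical pod name
spec:
  containers:
  - name: app
    image: nginx                # hypothetical image; use your service image
    volumeMounts:
    - name: goosefs-data
      mountPath: /data          # hypothetical path where the dataset appears
      readOnly: true
  volumes:
  - name: goosefs-data
    persistentVolumeClaim:
      claimName: slice1         # PVC created for the slice1 dataset
      readOnly: true
```

Once the pod is running, files in the COS bucket should be readable under the mount path through GooseFS.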

Terminating a GooseFS cluster

To terminate a GooseFS cluster, you can specify the master and worker nodes to be deleted and run the delete command. This is a high-risk operation. Make sure that there is no I/O operation on GooseFS in the service pod.

    -> goosefs kubectl get sts
    NAME            READY   AGE
    slice1-master   1/1     14m
    slice1-worker   6/6     14m
    -> goosefs kubectl delete sts slice1-master slice1-worker
    statefulset.apps "slice1-master" deleted
    statefulset.apps "slice1-worker" deleted
